Hi, @hlacik,

```diff
diff --git a/ultralytics/nn/modules/head.py b/ultralytics/nn/modules/head.py
index f7105bf..4516390 100644
--- a/ultralytics/nn/modules/head.py
+++ b/ultralytics/nn/modules/head.py
@@ -57,9 +57,11 @@ class Detect(nn.Module):
             cls = x_cat[:, self.reg_max * 4:]
         else:
             box, cls = x_cat.split((self.reg_max * 4, self.nc), 1)
-        dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides
-        y = torch.cat((dbox, cls.sigmoid()), 1)
-        return y if self.export else (y, x)
+        lt, rb = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1)
+        # dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides
+        # y = torch.cat((dbox, cls.sigmoid()), 1)
+        # return y if self.export else (y, x)
+        return lt, rb, cls.sigmoid()  # if self.export else (y, x)
```
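Because the patched head no longer applies `self.anchors` or `self.strides`, the host needs those values for post-processing. Below is a minimal NumPy sketch that regenerates them. It follows the idea of `make_anchors` in `tal.py` (cell centres offset by 0.5, one stride value per anchor) but is not the library code. `make_anchors_np` is a hypothetical name, and the sketch assumes the default strides (8, 16, 32) and an input whose height and width are multiples of 32, so each feature map is exactly `img_size // stride` cells wide.

```python
import numpy as np


def make_anchors_np(img_hw=(640, 640), strides=(8, 16, 32), grid_cell_offset=0.5):
    """Rebuild Detect.anchors / Detect.strides on the host (hypothetical sketch).

    Assumes each feature map is exactly img_hw // stride in size, i.e. the input
    height and width are multiples of the largest stride.
    Returns anchors of shape (2, N) and strides of shape (1, N).
    """
    img_h, img_w = img_hw
    anchor_points, stride_cols = [], []
    for s in strides:
        h, w = img_h // s, img_w // s                            # feature-map size at this level
        sx = np.arange(w, dtype=np.float32) + grid_cell_offset   # cell-centre x, grid units
        sy = np.arange(h, dtype=np.float32) + grid_cell_offset   # cell-centre y, grid units
        yy, xx = np.meshgrid(sy, sx, indexing="ij")              # row-major: x varies fastest
        anchor_points.append(np.stack((xx, yy), axis=-1).reshape(-1, 2))
        stride_cols.append(np.full((h * w, 1), s, dtype=np.float32))
    anchors = np.concatenate(anchor_points).T                    # (2, N): rows are x, y
    stride_arr = np.concatenate(stride_cols).T                   # (1, N)
    return anchors, stride_arr
```

For a 640x640 input this yields 80·80 + 40·40 + 20·20 = 8400 anchors, in the same per-level order as the head's flattened outputs.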

And in /ultralytics/yolo/utils/tal.py:

```diff
diff --git a/ultralytics/yolo/utils/tal.py b/ultralytics/yolo/utils/tal.py
index aea8918..b0aef95 100644
--- a/ultralytics/yolo/utils/tal.py
+++ b/ultralytics/yolo/utils/tal.py
@@ -263,11 +263,13 @@ def dist2bbox(distance, anchor_points, xywh=True, dim=-1):
     lt, rb = distance.chunk(2, dim)
     x1y1 = anchor_points - lt
     x2y2 = anchor_points + rb
-    if xywh:
-        c_xy = (x1y1 + x2y2) / 2
-        wh = x2y2 - x1y1
-        return torch.cat((c_xy, wh), dim)  # xywh bbox
-    return torch.cat((x1y1, x2y2), dim)  # xyxy bbox
+    # if xywh:
+    #     c_xy = (x1y1 + x2y2) / 2
+    #     wh = x2y2 - x1y1
+    #     return torch.cat((c_xy, wh), dim)  # xywh bbox
+    # return torch.cat((x1y1, x2y2), dim)  # xyxy bbox
+    return lt, rb  # raw ltrb distances; anchor offsets are no longer applied here
+    # return torch.cat((lt, rb), dim)  # ltrb distances
```

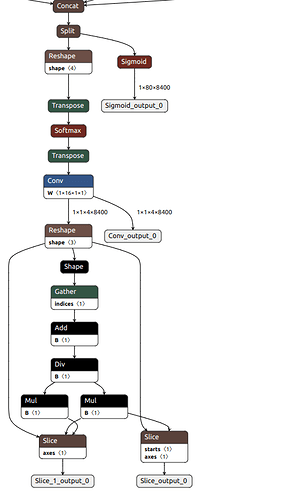

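With these two modifications, the model exports to ONNX without those Add/Sub nodes. The anchor offsets, the xywh conversion and the stride scaling then have to be done in post-processing instead.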
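A minimal NumPy sketch of that host-side step, redoing the arithmetic the patch comments out. It assumes the three ONNX outputs arrive in the order `lt, rb, cls` with the shapes in the docstring, and that `anchors` / `strides` are the (2, N) and (1, N) arrays `Detect` would otherwise keep in `self.anchors` / `self.strides`. `decode_outputs` is a hypothetical name, not an ultralytics function.

```python
import numpy as np


def decode_outputs(lt, rb, cls_scores, anchors, strides):
    """Host-side replacement for the removed dist2bbox(..., xywh=True) * strides step.

    lt, rb:     (B, 2, N) left-top / right-bottom distances from the patched head
    cls_scores: (B, nc, N) class scores, already passed through sigmoid in the graph
    anchors:    (2, N) anchor centres in grid units (what Detect.anchors holds)
    strides:    (1, N) per-anchor stride (what Detect.strides holds)
    Returns (B, 4 + nc, N) laid out as (cx, cy, w, h, scores...), like the original y.
    """
    x1y1 = anchors[None] - lt                                # top-left corner, grid units
    x2y2 = anchors[None] + rb                                # bottom-right corner, grid units
    c_xy = (x1y1 + x2y2) / 2                                 # box centre
    wh = x2y2 - x1y1                                         # box width / height
    dbox = np.concatenate((c_xy, wh), axis=1) * strides      # scale to input-image pixels
    return np.concatenate((dbox, cls_scores), axis=1)
```

Since the result has the same layout as the tensor the unpatched head returned, existing NMS code should be able to consume it unchanged, though I have not verified that end to end.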

I haven't had a chance to test it on the NPU yet.