Use YoloV8 in RK3588 NPU

I had no luck with yolov8s.rknn: it failed to submit.

My first attempt with yolo8m.rknn did not fail to submit, but I need to adjust something to make it work with COCO.

I'm trying to recover from my eMMC disaster, so let’s see what I can get…

Same error for 8s: failed to submit…

yolo8m.rknn returns model input num: 1, output num: 1, while yolo5s.rknn returns model input num: 1, output num: 3. I am lost in the ocean…

@gilankpam

@Mikhael_Danilov

QUANTIZE_ON = True

It works, BUT:

You must use a smaller resolution.

I tested with:

yolo export model=yolov8s-pose.pt imgsz=224,224 format=onnx opset=12

and

yolo export model=yolov8s-pose.pt imgsz=256,256 format=onnx opset=12

RKNN v1.5.0 is required:

https://github.com/rockchip-linux/rknn-toolkit2

Code for post-processing in C++

This version is for OpenCV, but with small changes it can be used for RKNN.

YoloPoseV8

#include "YoloPoseV8.h"

#include <chrono> // needed for the std::chrono using-directive below

using namespace std::chrono;

YoloPoseV8 *YoloPoseV8::instance = nullptr;

YoloPoseV8::Keypoint::Keypoint(float x, float y, float score) {

this->position = cv::Point2d(x, y);

this->conf = score;

}

YoloPoseV8::Person::Person(long _stamp, cv::Rect2i _box, float _score, std::vector<Keypoint> &_kp) {

this->stamp = _stamp;

this->box = _box;

this->score = _score;

this->kp = _kp;

}

inline static float clamp(float val, float min, float max) {

return val > min ? (val < max ? val : max) : min;

}

void YoloPoseV8::initInternal(const char *modelPath) {

nnapiClient.init(modelPath, true);

}

static const int PTR_BBOX_X = 0;

static const int PTR_BBOX_Y = 1;

static const int PTR_BBOX_W = 2;

static const int PTR_BBOX_H = 3;

static const int PTR_SCORE = 4;

static const int PTR_KP = 5;

std::vector<YoloPoseV8::Person> YoloPoseV8::detectInternal(const unsigned char *imageData) {

auto tensor = nnapiClient.invoke(imageData);

auto dimen = nnapiClient.dimensions(0);

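// Despite the name, this is the number of candidate detections: the output is laid out as [1, 5 + 17 * 3, N]

int channels = (int) dimen[2];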

auto ptrx = (float *) tensor[0].buf;

std::vector<cv::Rect> bboxList;

std::vector<float> scoreList;

std::vector<int> indicesList;

std::vector<std::vector<Keypoint>> kpList;

for (int ch = 0; ch < channels; ++ch) {

float score = ptrx[(channels * PTR_SCORE) + ch];

if (score > modelScoreThreshold) {

float x = ptrx[(channels * PTR_BBOX_X) + ch];

float y = ptrx[(channels * PTR_BBOX_Y) + ch];

float w = ptrx[(channels * PTR_BBOX_W) + ch];

float h = ptrx[(channels * PTR_BBOX_H) + ch];

float x0 = clamp((x - 0.5f * w) * 1.0F, 0.f, float(MODEL_SIZE_W));

float y0 = clamp((y - 0.5f * h) * 1.0F, 0.f, float(MODEL_SIZE_H));

float x1 = clamp((x + 0.5f * w) * 1.0F, 0.f, float(MODEL_SIZE_W));

float y1 = clamp((y + 0.5f * h) * 1.0F, 0.f, float(MODEL_SIZE_H));

cv::Rect_<float> bbox;

bbox.x = x0;

bbox.y = y0;

bbox.width = x1 - x0;

bbox.height = y1 - y0;

std::vector<Keypoint> kps;

for (int k = 0; k < 17; k++) {

float kpX = ptrx[(channels * (PTR_KP + (k * 3) + 0)) + ch];

float kpY = ptrx[(channels * (PTR_KP + (k * 3) + 1)) + ch];

float kpS = ptrx[(channels * (PTR_KP + (k * 3) + 2)) + ch];

kpX = clamp(kpX, 0.f, float(MODEL_SIZE_W));

kpY = clamp(kpY, 0.f, float(MODEL_SIZE_H));

kps.emplace_back(kpX, kpY, kpS);

}

bboxList.push_back(bbox);

scoreList.push_back(score);

kpList.push_back(kps);

}

}

cv::dnn::NMSBoxes(

bboxList,

scoreList,

modelScoreThreshold,

modelNMSThreshold,

indicesList

);

std::vector<YoloPoseV8::Person> result{};

result.reserve(indicesList.size());

long now = currentTimeMillis();

for (auto &i: indicesList) {

result.emplace_back(now, bboxList[i], scoreList[i], kpList[i]);

}

nnapiClient.releaseTensorOutput();

return result;

}
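
For readers working from the Python API instead, the same decode can be sketched with NumPy. This is a minimal sketch, not the author's code: it assumes the pose output has the layout the C++ above indexes into, (1, 5 + 17 * 3, N), already converted to float. The name `decode_pose` and its `score_thr` parameter are hypothetical. Unlike the C++ version it does not clamp coordinates to the model size, and NMS still has to be applied afterwards.

```python
import numpy as np

NUM_KEYPOINTS = 17  # COCO pose layout, matching the loop bound in the C++ code


def decode_pose(output, score_thr=0.5):
    """Decode a YOLOv8-pose tensor of shape (1, 5 + 17 * 3, N).

    Returns (boxes, scores, keypoints) for candidates above score_thr.
    boxes are [left, top, width, height]; keypoints are (x, y, confidence).
    """
    pred = np.squeeze(output, axis=0).T  # (N, 56): one row per candidate
    scores = pred[:, 4]
    keep = scores > score_thr
    pred, scores = pred[keep], scores[keep]

    # The first four channels are the box centre and size in model pixels
    cx, cy, w, h = pred[:, 0], pred[:, 1], pred[:, 2], pred[:, 3]
    boxes = np.stack([cx - w / 2, cy - h / 2, w, h], axis=1)

    # The remaining 51 channels are 17 keypoints of (x, y, confidence)
    keypoints = pred[:, 5:5 + NUM_KEYPOINTS * 3].reshape(-1, NUM_KEYPOINTS, 3)
    return boxes, scores, keypoints
```

The boxes come back in the [x, y, w, h] form that OpenCV's NMS functions take, so they can be passed on without conversion.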

NnapiClient

#include "NnapiClient.h"

void NnapiClient::init(const char *_modelPath, bool outAsFloat) {

if (initState >= 0) return;

int model_data_size;

unsigned char *model_data = file_io::load_model(_modelPath, &model_data_size);

initState = rknn_init(&ctx, model_data, model_data_size, 0, nullptr);

if (initState < 0) throwErr("rknn_init error ret=%d", initState);

initState = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));

if (initState < 0) throwErr("rknn_query RKNN_QUERY_IN_OUT_NUM error ret=%d", initState);

rknn_tensor_attr input_attrs[io_num.n_input];

memset(input_attrs, 0, sizeof(input_attrs));

for (int i = 0; i < io_num.n_input; i++) {

input_attrs[i].index = i;

initState = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]), sizeof(rknn_tensor_attr));

if (initState < 0) {

throwErr("rknn_init input error ret=%d", initState);

}

}

attrOut = static_cast<rknn_tensor_attr *>(calloc(io_num.n_output, sizeof(rknn_tensor_attr)));

for (int i = 0; i < io_num.n_output; i++) {

attrOut[i].index = i;

initState = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(attrOut[i]), sizeof(rknn_tensor_attr));

if (initState < 0) { throwErr("rknn_init output error ret=%d", initState); }

}

if (input_attrs[0].fmt == RKNN_TENSOR_NCHW) {

printf("model is NCHW input fmt\n");

channel = (int) input_attrs[0].dims[1];

height = (int) input_attrs[0].dims[2]; // NCHW: dims are [N, C, H, W]

width = (int) input_attrs[0].dims[3];

} else {

printf("model is NHWC input fmt\n");

height = (int) input_attrs[0].dims[1]; // NHWC: dims are [N, H, W, C]

width = (int) input_attrs[0].dims[2];

channel = (int) input_attrs[0].dims[3];

}

memset(inputs, 0, sizeof(inputs));

inputs[0].index = 0;

inputs[0].type = RKNN_TENSOR_UINT8;

inputs[0].size = width * height * channel;

inputs[0].fmt = input_attrs[0].fmt;

inputs[0].pass_through = 0;

tensorOut = static_cast<rknn_output *>(calloc(io_num.n_output, sizeof(rknn_output)));

for (int i = 0; i < io_num.n_output; i++) {

if (outAsFloat) {

tensorOut[i].want_float = 1;

} else {

tensorOut[i].want_float = 0;

}

}

}

rknn_output *NnapiClient::invoke(const unsigned char *image) {

if (initState < 0) { throwErr("rknn decode model not initialized %d", initState); }

inputs[0].buf = (void *) image;

rknn_inputs_set(ctx, io_num.n_input, inputs);

int runtimeResp = rknn_run(ctx, nullptr);

if (runtimeResp < 0) { throwErr("rknn decode run error ret=%d", runtimeResp); }

runtimeResp = rknn_outputs_get(ctx, io_num.n_output, tensorOut, nullptr);

if (runtimeResp < 0) { throwErr("rknn decode outputs_get error ret=%d", runtimeResp); }

return tensorOut;

}

void NnapiClient::releaseTensorOutput() {

rknn_outputs_release(ctx, io_num.n_output, tensorOut);

}

#pragma clang diagnostic push

#pragma ide diagnostic ignored "ConstantParameter"

inline static int32_t clip(float val, float min, float max) {

return (int32_t) (val <= min ? min : (val >= max ? max : val));

}

#pragma clang diagnostic pop

int8_t NnapiClient::f32_uInt8(int tensorIndex, float f32) {

float dst_val = (f32 / attrOut[tensorIndex].scale) + (float) attrOut[tensorIndex].zp;

auto res = (int8_t) clip((float) dst_val, -128.0f, 127.0f);

return res;

}

float NnapiClient::uInt8_f32(int tensorIndex, int8_t uInt8) {

return ((float) uInt8 - (float) attrOut[tensorIndex].zp) * attrOut[tensorIndex].scale;

}

uint32_t *NnapiClient::dimensions(int tensorIndex) {

static uint32_t dimensions[4];

for (int i = 0; i < 4; ++i) {

dimensions[i] = attrOut[tensorIndex].dims[i];

}

return dimensions;

}

Hi @mallumoSK

With quantization on, I always got incorrect scores. I think the score was not calculated, because it was the same for all objects.

Can you share your quantization method and, if possible, the images used?

I think:

The YoloV8 model on RKNN had a problem because the score is in the range 0-1, while the other values are in the range of the model size.

When the model size fits within the uint8 maximum, meaning 256 and lower, it should work.

Person pose estimation started working under these conditions.

… tomorrow I will send sources + model …

Nothing extra is used: one default image plus just the core of the decode, as in yolov5 …
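
One way to picture the effect described in this post is a small quantization round trip. This is only a sketch of a plausible explanation, not something confirmed in the thread: it assumes the single output tensor is quantized per tensor to int8, with a range that covers the full coordinate span from 0 to the model size. The helper names are hypothetical, and the formulas mirror the scale and zero-point arithmetic in the NnapiClient code above.

```python
import numpy as np


def int8_params(lo, hi):
    """Per-tensor asymmetric int8 parameters covering the range [lo, hi] (assumed scheme)."""
    scale = (hi - lo) / 255.0
    zero_point = int(round(-128 - lo / scale))
    return scale, zero_point


def quantize(x, scale, zero_point):
    # Same arithmetic as f32_uInt8 in the C++ code: value / scale + zero point, then clip
    q = np.round(np.asarray(x, dtype=np.float32) / scale + zero_point)
    return np.clip(q, -128, 127).astype(np.int8)


def dequantize(q, scale, zero_point):
    # Same arithmetic as uInt8_f32 in the C++ code
    return (q.astype(np.float32) - zero_point) * scale


scores = np.array([0.2, 0.6, 0.9], dtype=np.float32)
for size in (640, 256):
    scale, zp = int8_params(0.0, float(size))
    restored = dequantize(quantize(scores, scale, zp), scale, zp)
    print(size, restored)
```

Under these assumptions one int8 step at 640 is about 2.5 units, so every score in 0-1 rounds to the same value. At 256 a step is about 1 unit, which is coarse but enough to separate high scores from low ones.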

@nickliu973, sorry to take up your time. I have read all the posts.

Environment: a Docker container with Ubuntu 22.04 + Python 3.10.

source model: official yolov8s.pt

convert to onnx with your command:

yolo export model=yolov8s.pt imgsz=640,640 format=onnx opset=12

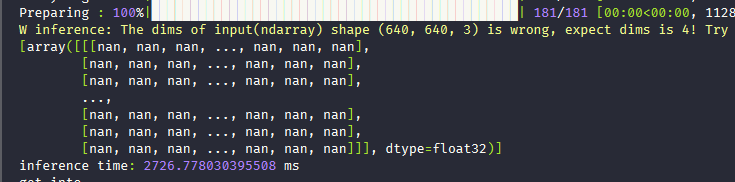

I copied test.py from yolov5/test.py and had to set QUANTIZE_ON=False to make the conversion code work. Otherwise I get an error:

E build: ValueError: cannot convert float NaN to integer

And with QUANTIZE_ON=True, the ONNX model was converted to an RKNN model.

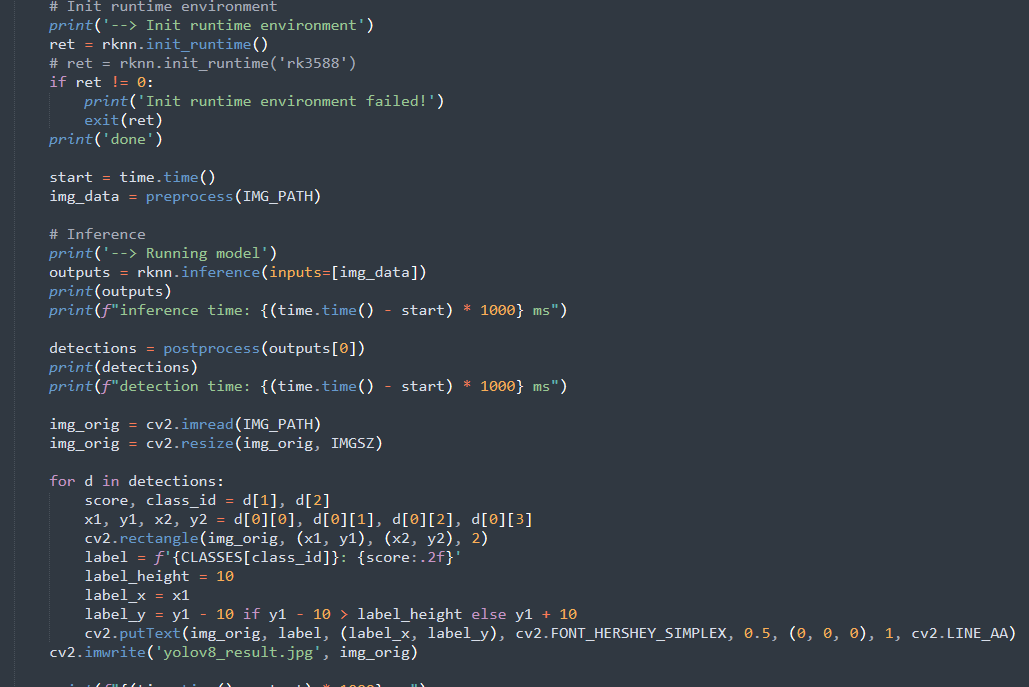

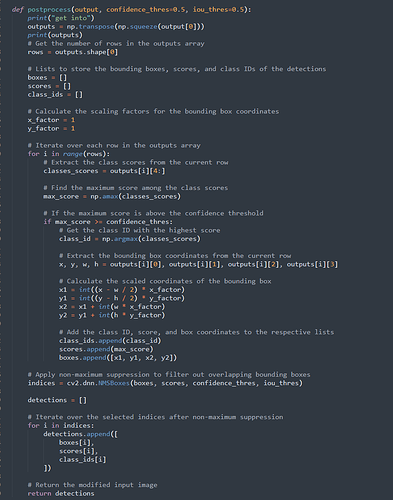

Then I changed the post_process code:

But the inference output is all NaN.

I guess the error was caused by the conversion of yolov8s.pt to ONNX.

Can I get some hints to solve the problem?

Regards.

Hi, @Dean_Du

I have no issue with quantization on/off using Ubuntu 20.04 + Python 3.8 + rknn-toolkit2 1.5.0 + the official yolov8s.pt.

Maybe it’s the environment setup?

The Rockchip GitHub or forum may have a better answer.

Hi, @hlacik,

Thanks for the confirmation and sorry for the late reply (I’m fully occupied with my startup).

This link comes with the python script to run the inference for YoloV5.

For YoloV8, the solution is pretty straightforward:

- Create the anchors according to the make_anchors function in the YoloV8 tal.py.

- Implement the dist2box function according to the dist2bbox function in YoloV8 tal.py.

- Multiply the dist2box output with the self.strides generated from make_anchors.

- Get the post_processing code from here.
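
The first three steps can be sketched in NumPy roughly as follows. This is a hedged port, not the Ultralytics source: it assumes strides of 8, 16 and 32, derives the grid sizes from the input size instead of from the feature maps, and expects distances that are already decoded into (left, top, right, bottom) offsets in grid units. The DFL step that produces those distances is not shown.

```python
import numpy as np


def make_anchors(input_hw=(640, 640), strides=(8, 16, 32), offset=0.5):
    """Return grid-cell centres (A, 2) and the stride of each cell (A, 1)."""
    anchor_points, stride_tensor = [], []
    for s in strides:
        h, w = input_hw[0] // s, input_hw[1] // s
        sx = np.arange(w, dtype=np.float32) + offset
        sy = np.arange(h, dtype=np.float32) + offset
        gy, gx = np.meshgrid(sy, sx, indexing="ij")
        anchor_points.append(np.stack([gx, gy], axis=-1).reshape(-1, 2))
        stride_tensor.append(np.full((h * w, 1), s, dtype=np.float32))
    return np.concatenate(anchor_points), np.concatenate(stride_tensor)


def dist2bbox(distance, anchor_points):
    """Turn (left, top, right, bottom) distances into (cx, cy, w, h) in grid units."""
    lt, rb = distance[:, :2], distance[:, 2:]
    x1y1 = anchor_points - lt
    x2y2 = anchor_points + rb
    return np.concatenate([(x1y1 + x2y2) / 2, x2y2 - x1y1], axis=1)


anchors, strides = make_anchors()
distance = np.ones((anchors.shape[0], 4), dtype=np.float32)  # dummy distances
boxes = dist2bbox(distance, anchors) * strides  # scale grid units to pixels
```

For a 640x640 input this produces 80 * 80 + 40 * 40 + 20 * 20 = 8400 anchors, which matches the last dimension of the model output.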

Good luck.

@gilankpam, @mallumoSK

Nice finding!

It looks like RKNN 1.5 does come with some improvements.

I remember that changing the image resolution would not avoid the failed-to-submit issue in RKNN 1.4.

I tried RKNN 1.5 + 640x640 resolution + quantization on/off.

The classification scores are not fixed to the same value.

Good luck.

@nickliu973 rknn-toolkit-lite2 1.5.0 inference is not usable on the hardware itself. It has a nasty bug where inference throws these errors: https://github.com/rockchip-linux/rknn-toolkit2/issues/168, and inference is much slower as a result. The error is present for inference with models other than YOLO as well…

It is therefore unusable, and one still needs to use rknn-toolkit 1.4 for model conversion.

Models exported with RKNN 1.5 are not compatible with RKNN 1.4, though.

On the positive side, the combination of rknn.so v1.4 and rknn-lite2 1.5 (which supports Python 3.10) works for inference of RKNN 1.4 models on hardware, which is better than the rknn-lite2 1.4 Python library (as it only supports Python 3.7 and 3.9).

Thanks for the information, @hlacik !

I also noticed the warning size_with_stride larger than model origin size in RKNN 1.5.

I never observed this warning in RKNN 1.4, and setting up RKNN 1.5 was not a pleasant journey…

However, inference (with the model converted by RKNN 1.5) seems good on hardware.

I used a web camera as input. It appears able to infer what the model knows, and the classification scores seem consistent with the benchmark.

With the Python RKNN API, the inference throughput is ~20 fps with quantization and ~10 fps without.

Best regards.

Thanks, I will try the environment in a new docker, thanks for your confirmation.

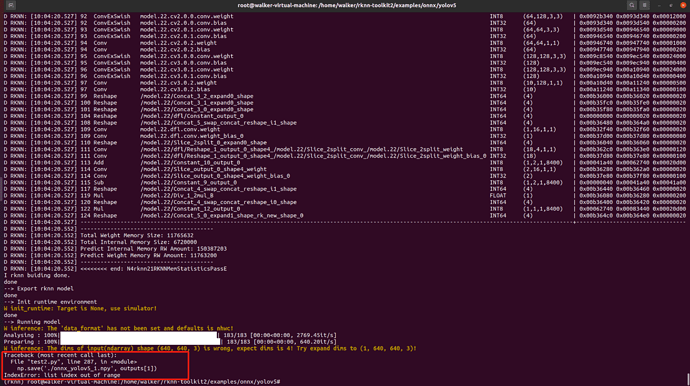

Hello everyone! I have followed these instructions exactly, as my intent is to get my custom-trained YoloV8 model working on the RK3588 too. Everything has gone fine so far: the test.py code works as far as loading and building my model, and I do get the .rknn file out, which is good.

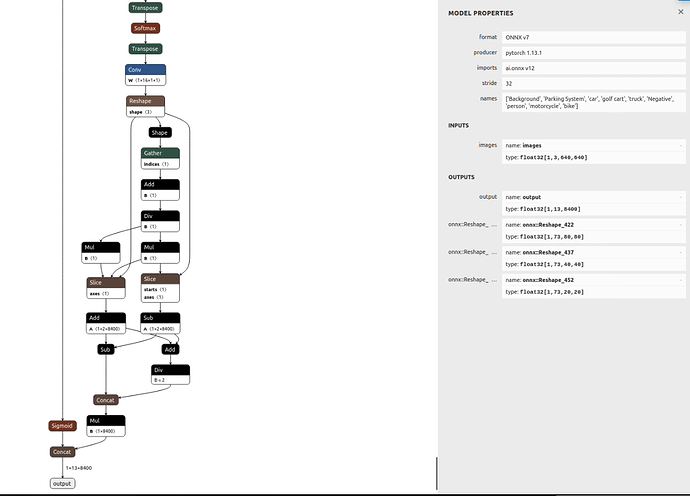

However, I get errors on the post processing, which is somewhat expected. I see in the comment by @nickliu973 that I need to:

The problem is I can’t seem to find the right code to copy and paste into test.py to process the output of my model. When I look at my model in Netron, the output layer is very different from the original yolov5 one that came with the examples. Here is what my output section looks like. The main one is the “output” of “(1, 13, 8400)”

Can someone tell me where I can find the right code to postprocess this model output and get my scores, boxes, etc…?

Thanks!
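
As a quick reading aid for that shape, here is a small sketch. It assumes a standard YoloV8 detection head, where the first four channels are the box (cx, cy, w, h) and the rest are per-class scores with no separate objectness channel, plus strides of 8, 16 and 32. The function name is hypothetical.

```python
def describe_yolov8_output(shape, input_size=640, strides=(8, 16, 32)):
    """Interpret a YoloV8 detection output shape of the form (1, 4 + classes, candidates)."""
    _, channels, candidates = shape
    # Number of grid cells summed over all strides for a square input
    expected = sum((input_size // s) ** 2 for s in strides)
    return {
        "num_classes": channels - 4,
        "candidates": candidates,
        "matches_input_size": candidates == expected,
    }


info = describe_yolov8_output((1, 13, 8400))
print(info)
```

Under these assumptions, (1, 13, 8400) reads as 9 classes over 8400 candidate boxes for a 640x640 input.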

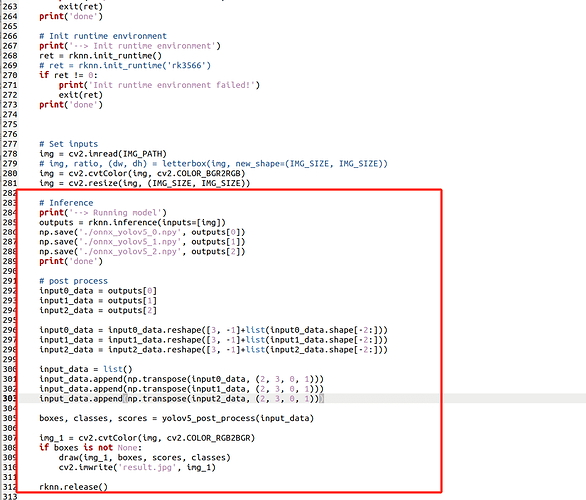

There is a link above from @gilankpam that provides most of the useful info. I’m using hide_warnings to hide the annoying warnings from the inference.

import cv2
import numpy as np
from hide_warnings import hide_warnings  # assumed import path of the hide_warnings package

# rknn_lite, img, CLASSES, OBJ_THRESH and NMS_THRESH are defined earlier in test.py

@hide_warnings
def inference(image):
    return rknn_lite.inference(inputs=[image])

outputs = inference(img)
outputs = np.transpose(np.squeeze(outputs[0]))

# Get the number of rows in the outputs array
rows = outputs.shape[0]

# Lists to store the bounding boxes, scores, and class IDs of the detections
boxes = []
scores = []
class_ids = []

# Calculate the scaling factors for the bounding box coordinates
x_factor = 1
y_factor = 1

# Iterate over each row in the outputs array
for i in range(rows):
    # Extract the class scores from the current row
    classes_scores = outputs[i][4:]

    # Find the maximum score among the class scores
    max_score = np.amax(classes_scores)

    # If the maximum score is above the confidence threshold
    if max_score >= OBJ_THRESH:
        # Get the class ID with the highest score
        class_id = np.argmax(classes_scores)

        # Extract the bounding box coordinates from the current row
        x, y, w, h = outputs[i][0], outputs[i][1], outputs[i][2], outputs[i][3]

        # Calculate the scaled top-left corner and size of the bounding box
        x1 = int((x - w / 2) * x_factor)
        y1 = int((y - h / 2) * y_factor)
        box_w = int(w * x_factor)
        box_h = int(h * y_factor)

        # Add the class ID, score, and box to the respective lists.
        # cv2.dnn.NMSBoxes expects boxes as [x, y, width, height].
        class_ids.append(class_id)
        scores.append(float(max_score))
        boxes.append([x1, y1, box_w, box_h])

# Apply non-maximum suppression to filter out overlapping bounding boxes
indices = cv2.dnn.NMSBoxes(boxes, scores, OBJ_THRESH, NMS_THRESH)

detections = []

# Iterate over the selected indices after non-maximum suppression
# (flatten() copes with OpenCV versions that return an Nx1 array)
for i in np.array(indices).flatten():
    i = int(i)
    x1, y1, box_w, box_h = boxes[i]
    detections.append([
        boxes[i],
        scores[i],
        class_ids[i]
    ])

    # Draw any bounding boxes on the image for display
    cv2.rectangle(img, (x1, y1), (x1 + box_w, y1 + box_h), (0, 255, 0), 2)
    class_name = CLASSES[class_ids[i]]
    label = f"Class ID: {class_name}, Confidence: {scores[i]:.2f}"
    cv2.putText(img, label, (x1, y1 - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

As noted above, it only seems to give confidence scores with FP16 models, which is also my experience. I can only squeeze about 11 fps out of that. I'm not entirely certain whether I have the NMS thresholds in the right order.
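
On the threshold order: cv2.dnn.NMSBoxes takes the score threshold first and the NMS (IoU) threshold second, so OBJ_THRESH followed by NMS_THRESH is the expected order. The pure-NumPy sketch below illustrates what each threshold does. It is a simplified stand-in with a hypothetical name, not OpenCV's implementation, and it assumes boxes given as [x, y, width, height].

```python
import numpy as np


def nms_xywh(boxes, scores, score_threshold, nms_threshold):
    """Greedy NMS over [x, y, w, h] boxes; returns kept indices, best score first."""
    boxes = np.asarray(boxes, dtype=np.float32).reshape(-1, 4)
    scores = np.asarray(scores, dtype=np.float32)
    x1, y1 = boxes[:, 0], boxes[:, 1]
    x2, y2 = x1 + boxes[:, 2], y1 + boxes[:, 3]
    areas = boxes[:, 2] * boxes[:, 3]

    # First threshold: drop low-confidence boxes before any overlap test
    order = [int(i) for i in np.argsort(-scores) if scores[i] > score_threshold]
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        remaining = []
        for i in order:
            iw = max(0.0, min(x2[best], x2[i]) - max(x1[best], x1[i]))
            ih = max(0.0, min(y2[best], y2[i]) - max(y1[best], y1[i]))
            inter = iw * ih
            iou = inter / (areas[best] + areas[i] - inter + 1e-9)
            # Second threshold: suppress boxes that overlap the kept box too much
            if iou <= nms_threshold:
                remaining.append(i)
        order = remaining
    return keep


demo_boxes = [[0, 0, 10, 10], [1, 1, 10, 10], [50, 50, 10, 10]]
demo_scores = [0.9, 0.8, 0.7]
kept = nms_xywh(demo_boxes, demo_scores, score_threshold=0.5, nms_threshold=0.45)
```

In the demo, the second box overlaps the first with an IoU of about 0.68 and is suppressed, while the third box is far away and is kept.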

I have skipped the rest of this post. Tip: Rockchip provides rknn-toolkit2 on GitHub (you can just search for it), and there you can find all the wheel packages needed to convert your ONNX model file to RKNN.

Good luck with your conversion; hopefully you can accomplish your goal soon.