I found the issues with my model export, and I have it matching yours now:

rknn_api/rknnrt version: 1.4.0 (a10f100eb@2022-09-09T09:07:14), driver version: 0.8.2

total weight size: 23487936, total internal size: 22118400

total dma used size: 63889408

model input num: 1, output num: 1

input tensors:

index=0, name=images, n_dims=4, dims=[1, 640, 640, 3], n_elems=1228800, size=2457600, w_stride = 640, size_with_stride=2457600, fmt=NHWC,type=FP16, qnt_type=AFFINE, zp=0, scale=1.000000

output tensors:

index=0, name=output0, n_dims=4, dims=[1, 84, 8400, 1], n_elems=705600, size=1411200, w_stride = 0, size_with_stride=1411200, fmt=NCHW, type=FP16, qnt_type=AFFINE, zp=0, scale=1.000000
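As a quick sanity check on the export, the reported `n_elems` and `size` values should follow from the dims at 2 bytes per FP16 element. A minimal sketch (the helper name `tensor_size` is just for illustration, not part of the RKNN API):

```python
from math import prod

BYTES_PER_FP16 = 2  # FP16 uses 2 bytes per element


def tensor_size(dims):
    """Return (n_elems, size_in_bytes) for an FP16 tensor with the given dims."""
    n_elems = prod(dims)
    return n_elems, n_elems * BYTES_PER_FP16


# Dims copied from the log above.
print(tensor_size([1, 640, 640, 3]))  # input "images"
print(tensor_size([1, 84, 8400, 1]))  # output "output0"
```

Both results agree with the `n_elems` and `size` fields printed by the runtime, so the tensor layout itself looks consistent.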

Now this is the error:

custom string:

Warmup ...

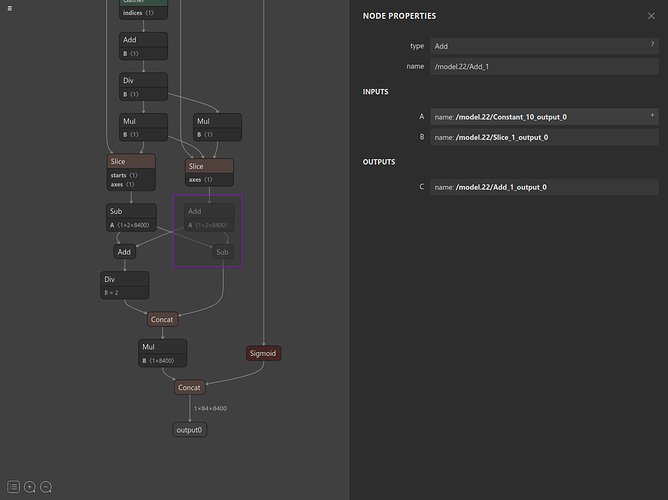

E RKNN: [14:40:02.997] failed to submit!, op id: 171, op name: Add:/model.22/Add_1, flags: 0x5, task start: 9781, task number: 3, run task counter: 0, int status: 0

rknn run error -1

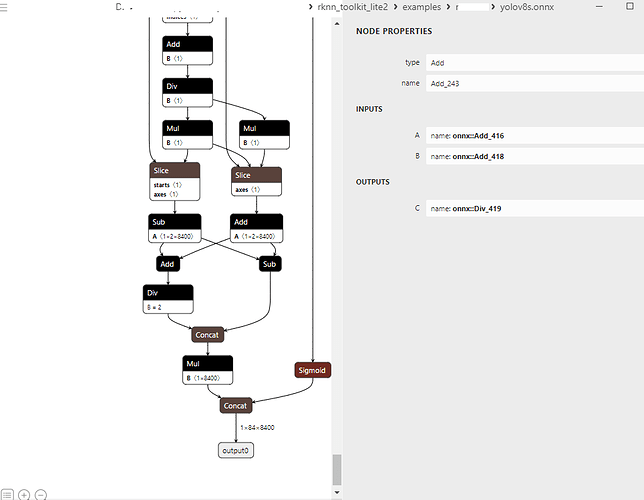

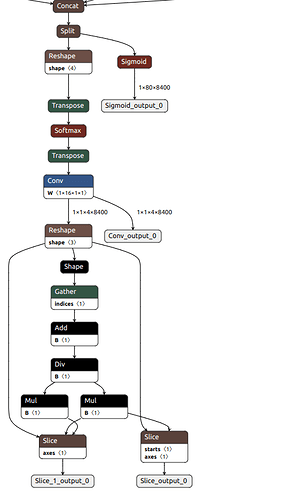

Which I suppose is this:

Is there an easy way to remove that node automatically?