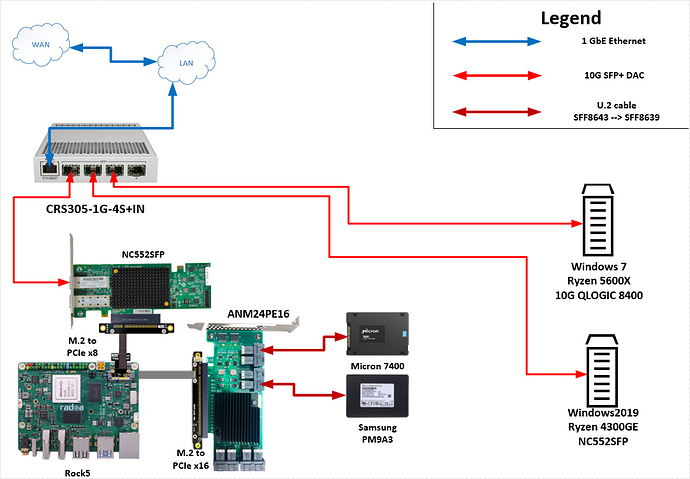

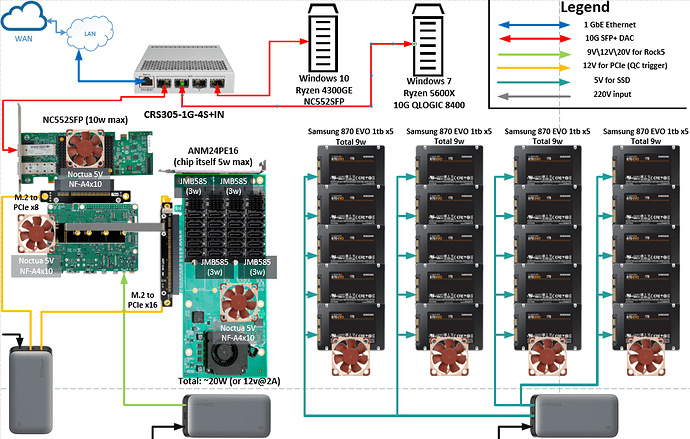

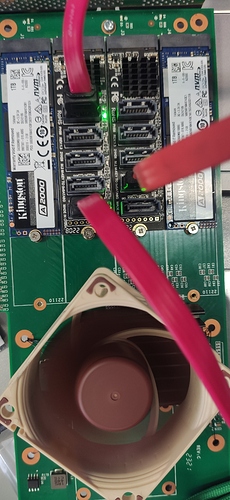

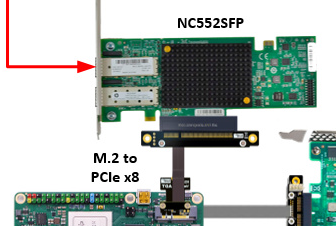

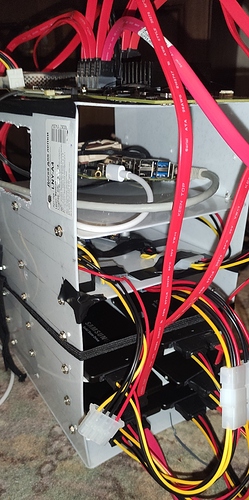

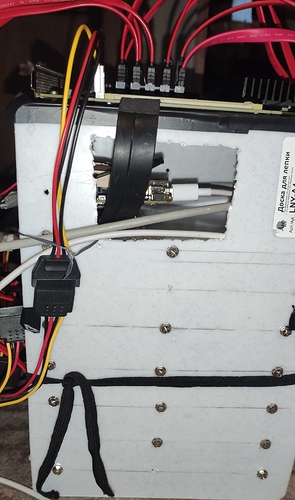

Now that we finally have PCIe 3.0 x4 (plus a PCIe 2.1 x1 lane), I have decided to expand my RockPi4 storage to the Rock5. I'm still waiting for a few parts, namely:

- A few M.2 to 5x SATA adapters

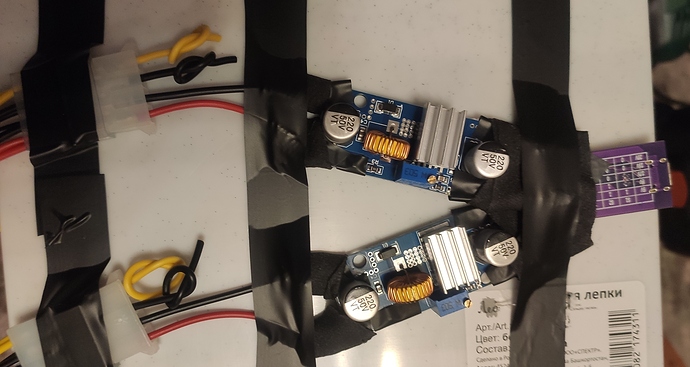

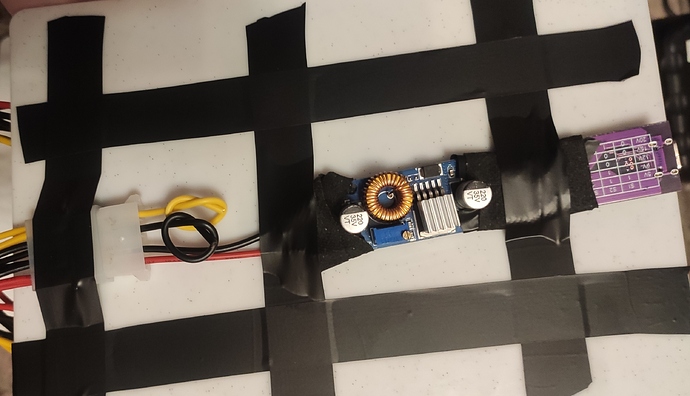

- QC trigger to power up disks

- My beautiful UPS

- A lot of SSDs

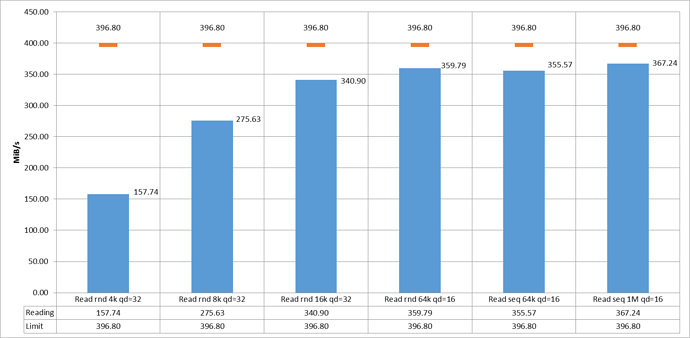

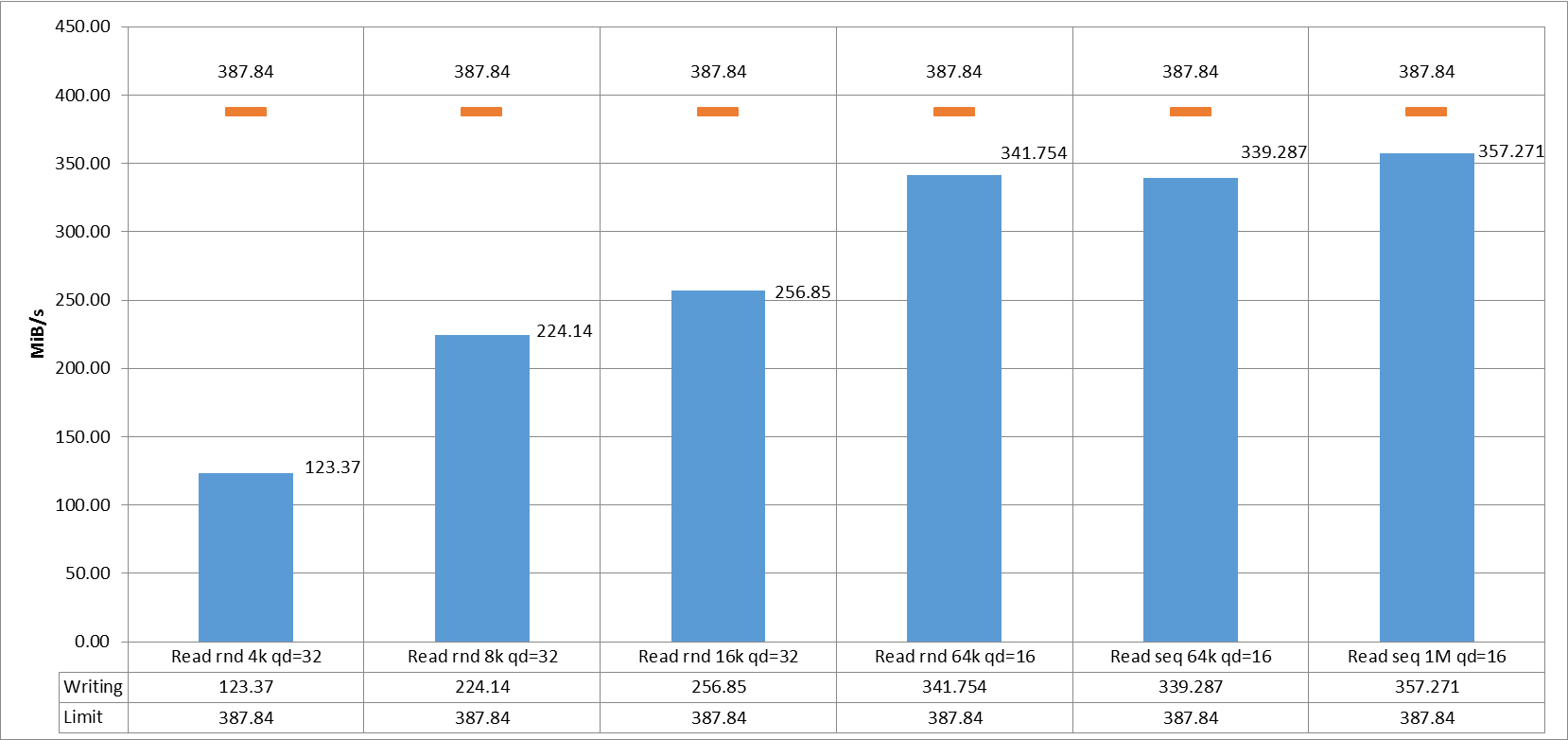

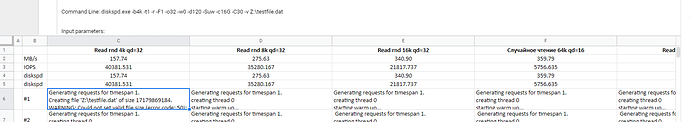

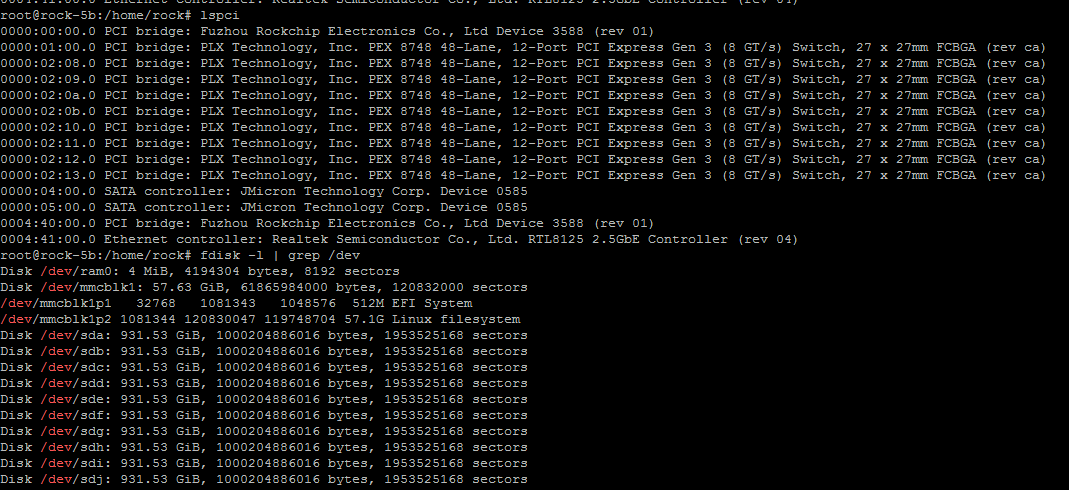

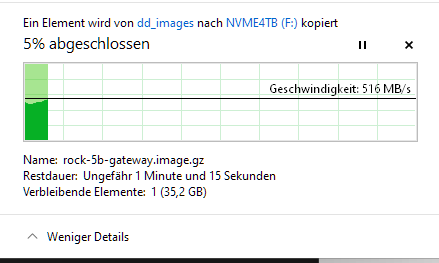

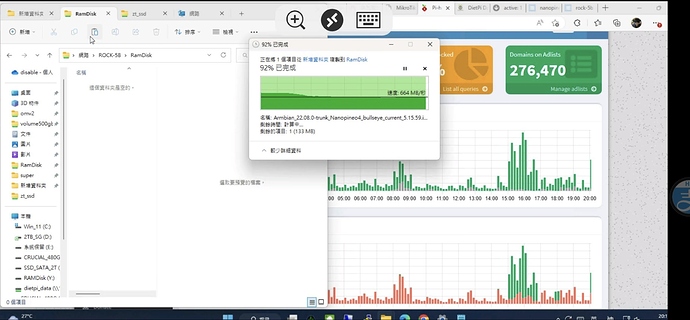
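For context on the PCIe lanes mentioned above, here is a rough sketch of the theoretical per-direction bandwidth of each link. It only accounts for the line encoding (8b/10b for PCIe 2.x, 128b/130b for PCIe 3.0) and ignores packet and protocol overhead, so real throughput will be lower. The function and table names are made up for this example.

```python
# Theoretical per-direction PCIe bandwidth from transfer rate and line encoding.
# Protocol overhead (TLP headers, flow control) is ignored, so real-world
# throughput is lower than these numbers.

# generation -> (transfer rate in GT/s per lane, encoding efficiency)
PCIE_GENERATIONS = {
    "2.x": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
}


def pcie_bandwidth_gbits(generation: str, lanes: int) -> float:
    """Return the theoretical link bandwidth in Gbit/s for one direction."""
    rate_gt, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt * efficiency * lanes


if __name__ == "__main__":
    for gen, lanes in (("3.0", 4), ("2.x", 1)):
        gbits = pcie_bandwidth_gbits(gen, lanes)
        print(f"PCIe {gen} x{lanes}: {gbits:.2f} Gbit/s (~{gbits / 8:.2f} GB/s)")
```

So the x4 link has roughly eight times the raw bandwidth of the x1 link, which matters when deciding what to hang off each one.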

Here are the first tests of network and storage performance. At first I was testing speed with fio, but its results were always lower than those from diskspd or real-world performance, so I chose to use diskspd. Both U.2 NVMe drives are in an mdadm RAID0 array.

The graphs:

Reading:

Writing:

The raw data:

smb tests.zip (47.2 KB)

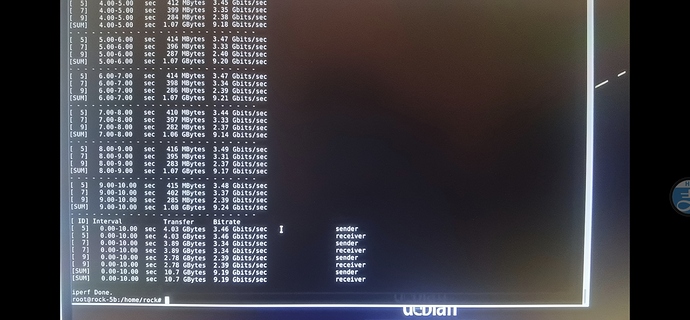

iperf3 between Windows & Rock5

    root@rock-5b:/home/rock# iperf3 -s
    Server listening on 5201
    Accepted connection from 192.168.1.43, port 50063
    [  5] local 192.168.1.45 port 5201 connected to 192.168.1.43 port 50064
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-1.00   sec   376 MBytes  3.16 Gbits/sec    0   4.01 MBytes
    [  5]   1.00-2.00   sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]   2.00-3.00   sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]   3.00-4.00   sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]   4.00-5.00   sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]   5.00-6.00   sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]   6.00-7.00   sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]   7.00-8.00   sec   366 MBytes  3.07 Gbits/sec    0   4.01 MBytes
    [  5]   8.00-9.00   sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]   9.00-10.00  sec   368 MBytes  3.08 Gbits/sec    0   4.01 MBytes
    [  5]  10.00-10.02  sec  6.25 MBytes  3.02 Gbits/sec    0   4.01 MBytes
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.02  sec  3.60 GBytes  3.09 Gbits/sec    0             sender

    root@rock-5b:/home/rock# iperf3 -c 192.168.1.43
    Connecting to host 192.168.1.43, port 5201
    [  5] local 192.168.1.45 port 40424 connected to 192.168.1.43 port 5201
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-1.00   sec   357 MBytes  2.99 Gbits/sec  816   1.06 MBytes
    [  5]   1.00-2.00   sec   358 MBytes  3.00 Gbits/sec    0   1.45 MBytes
    [  5]   2.00-3.00   sec   362 MBytes  3.04 Gbits/sec   15   1.23 MBytes
    [  5]   3.00-4.00   sec   360 MBytes  3.02 Gbits/sec    1    970 KBytes
    [  5]   4.00-5.00   sec   362 MBytes  3.04 Gbits/sec    0   1.37 MBytes
    [  5]   5.00-6.00   sec   361 MBytes  3.03 Gbits/sec    1   1.13 MBytes
    [  5]   6.00-7.00   sec   361 MBytes  3.03 Gbits/sec   10    851 KBytes
    [  5]   7.00-8.00   sec   362 MBytes  3.04 Gbits/sec    0   1.30 MBytes
    [  5]   8.00-9.00   sec   361 MBytes  3.03 Gbits/sec    3   1.05 MBytes
    [  5]   9.00-10.00  sec   362 MBytes  3.04 Gbits/sec    0   1.45 MBytes
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.00  sec  3.52 GBytes  3.03 Gbits/sec  846             sender
    [  5]   0.00-10.00  sec  3.51 GBytes  3.01 Gbits/sec                  receiver

    iperf Done.
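As a sanity check on the numbers above, here is a small sketch that converts the per-second transfer sizes from the client run into a bitrate. It assumes iperf3's usual units, where "MBytes" means 2^20 bytes and "Gbits" means 10^9 bits. The helper name is made up for this example, and the inputs are the rounded values from the output, so the result only matches the reported figures to within rounding.

```python
# Convert iperf3 "Transfer" values (MBytes per interval) into Gbits/sec.
# Assumption: iperf3 reports bytes in binary units (1 MByte = 2**20 bytes)
# and bitrates in decimal units (1 Gbit = 10**9 bits).

MIB = 1024 * 1024


def mbytes_to_gbits_per_sec(mbytes: float, seconds: float = 1.0) -> float:
    """Return the bitrate in Gbit/s for `mbytes` transferred in `seconds`."""
    return mbytes * MIB * 8 / seconds / 1e9


# Rounded per-second transfers from the `iperf3 -c 192.168.1.43` run above.
CLIENT_INTERVALS_MBYTES = [357, 358, 362, 360, 362, 361, 361, 362, 361, 362]

if __name__ == "__main__":
    total = sum(CLIENT_INTERVALS_MBYTES)
    average = mbytes_to_gbits_per_sec(total, seconds=len(CLIENT_INTERVALS_MBYTES))
    print(f"total: {total} MBytes ({total / 1024:.2f} GBytes)")
    print(f"average: {average:.2f} Gbits/sec")
```

The total comes out at the same 3.52 GBytes that iperf3 reports for the sender, and the average bitrate lands within rounding of the reported 3.03 Gbits/sec.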

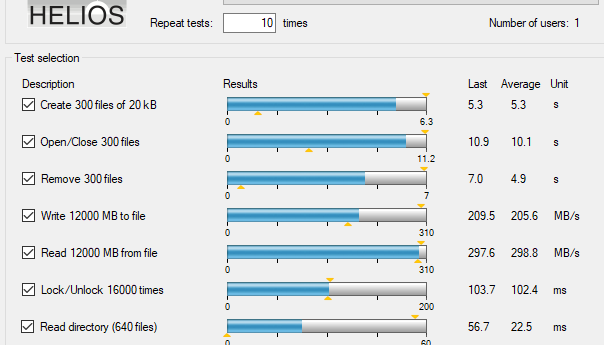

Also, thanks to @tkaiser for the advice regarding Helios LanTest. Here are the results from it as well:

The M.2 to SATA adapter is working with a switch. The power consumption is much lower than I expected.