Some consumption data…

I’m measuring with a NetIO PowerBox 4KF ‘at the wall’, i.e. with the charger’s own losses included. It’s a rather time-consuming process since every idle value is averaged over 4 minutes. I tested with 2 chargers so far:

- RPi 15W USB-C power brick

- Apple 94W USB-C charger
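For the averaging step, a minimal sketch: it assumes the readings have already been logged as one mW value per line (how they get out of the NetIO device is not covered here). The `average_mw` helper and the four sample values are made up for illustration and are not measurements.

```shell
#!/bin/sh
# average_mw: read one power reading (mW) per line from stdin,
# print the mean rounded to the nearest integer. Blank lines are skipped.
average_mw() {
    awk 'NF { sum += $1; n++ } END { if (n) printf "%d\n", sum / n + 0.5 }'
}

# Placeholder samples, NOT real measurements:
printf '%s\n' 2380 2400 2390 2390 | average_mw
```

With real data the input would simply be a log file of samples collected over the 4 minute window.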

Checking with sensors, the tcpm_source_psy_4_0022-i2c-4-22 entry looks like this with the RPi part:

in0: 5.00 V (min = +5.00 V, max = +5.00 V)

curr1: 3.00 A (max = +3.00 A)

and like this with the Apple part:

in0: 9.00 V (min = +9.00 V, max = +9.00 V)

curr1: 3.00 A (max = +3.00 A)

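So the negotiated contract is 15W (5V × 3A) with the RPi brick and 27W (9V × 3A) with the Apple charger. 27W could become a problem with lots of peripherals, but @hipboi said the whole USB PD negotiation thing should be configurable via DT or maybe even at runtime.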
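The power ceiling follows directly from the voltage and current that sensors reports for each contract. A quick sketch of that arithmetic (`pd_watts` is just a helper name made up here):

```shell
#!/bin/sh
# pd_watts VOLTS AMPS: upper power limit of a negotiated USB PD contract in watts.
pd_watts() {
    awk -v v="$1" -v a="$2" 'BEGIN { printf "%g\n", v * a }'
}

pd_watts 5.00 3.00   # RPi power brick   -> 15
pd_watts 9.00 3.00   # Apple charger     -> 27
```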

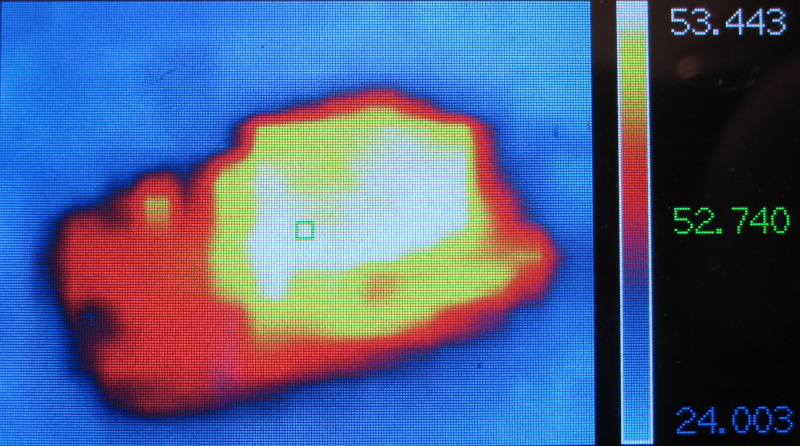

Measured with RPi power brick and 2.5GbE network connection:

- No fan: idle consumption: 2390mW

- With fan: idle consumption: 3100mW

That means the little fan on my dev sample consumes ~700mW, which can be subtracted from the numbers (assuming Radxa will provide a quality metal case that dissipates heat out of the enclosure so no fan is needed).

Now with the Apple Charger:

- fan / 2.5GbE: idle consumption: 3270mW

- fan and only GbE: idle consumption: 2960mW

The Apple charger obviously needs a little more juice for itself (+ ~170mW compared to the RPi power brick: 3270mW vs. 3100mW, both with fan and 2.5GbE), and powering the 2.5GbE PHY instead of running at Gigabit Ethernet speeds costs another ~300mW.
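For anyone who wants to recheck the deltas, they are plain subtractions of the idle numbers listed above (`delta_mw` is a throwaway helper):

```shell
#!/bin/sh
# delta_mw A B: difference between two idle readings in mW.
delta_mw() {
    echo $(( $1 - $2 ))
}

delta_mw 3100 2390   # fan vs. no fan (RPi brick)          -> 710
delta_mw 3270 3100   # Apple charger vs. RPi brick         -> 170
delta_mw 3270 2960   # 2.5GbE vs. GbE (Apple charger, fan) -> 310
```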

- echo default >/sys/module/pcie_aspm/parameters/policy --> idle consumption: 3380mW

Switching the PCIe power management (ASPM) policy from powersave to default (needed to get advertised network speeds) adds ~100mW to idle consumption.
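To see which ASPM policy is currently active before and after switching: the policy file lists all available policies and puts the active one in square brackets. A small sketch (`aspm_active_policy` is a helper name invented here; the optional file argument only exists to make it testable):

```shell
#!/bin/sh
ASPM_POLICY=/sys/module/pcie_aspm/parameters/policy

# aspm_active_policy [FILE]: print the policy shown in [brackets],
# e.g. "powersave" for "default performance [powersave] powersupersave".
aspm_active_policy() {
    sed -n 's/.*\[\([a-z]*\)\].*/\1/p' "${1:-$ASPM_POLICY}"
}

# Usage (writing needs root):
#   aspm_active_policy
#   echo default > "$ASPM_POLICY"
#   aspm_active_policy
```

The setting does not survive a reboot, so it has to be reapplied from a boot script or service.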

As such I propose the following changes in all of Radxa’s OS images (preferably ASAP but at least before review samples are sent out to those YT clowns like ExplainingComputers, ETA Prime and so on):

- setting /sys/module/pcie_aspm/parameters/policy to default (w/o network RX performance is ruined)

- appending coherent_pool=2M to extlinux.conf (w/o most probably ‘UAS hassles’)

- configuring ondemand cpufreq governor with io_is_busy and friends (w/o storage performance sucks)
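A rough sketch of how the last two items could be scripted, not necessarily how Radxa would do it. Assumptions: the kernel command line lives on a lowercase `append` line in extlinux.conf (commonly /boot/extlinux/extlinux.conf), GNU sed is available, and the ondemand tunables directory sits either per policy or globally depending on the kernel. Only io_is_busy is set; the ‘friends’ are left out since they aren’t named above. Both function names are invented for this example.

```shell
#!/bin/sh
# add_kernel_arg ARG FILE: append ARG to every 'append' line of an
# extlinux.conf, unless the file already contains ARG.
add_kernel_arg() {
    grep -qF -- "$1" "$2" || \
        sed -i "/^[[:space:]]*append[[:space:]]/ s/\$/ $1/" "$2"
}

# set_ondemand [CPUFREQ_DIR]: select the ondemand governor for every
# cpufreq policy and enable io_is_busy wherever that knob exists.
set_ondemand() {
    dir="${1:-/sys/devices/system/cpu/cpufreq}"
    for policy in "$dir"/policy*; do
        [ -d "$policy" ] || continue
        echo ondemand > "$policy/scaling_governor"
        # Tunables may be per policy or global, so try both locations.
        for knob in "$policy/ondemand/io_is_busy" "$dir/ondemand/io_is_busy"; do
            if [ -e "$knob" ]; then
                echo 1 > "$knob"
            fi
        done
    done
}

# Usage (as root):
#   add_kernel_arg coherent_pool=2M /boot/extlinux/extlinux.conf
#   set_ondemand
```

The kernel argument only takes effect after a reboot, while the governor settings apply immediately but need to be reapplied on every boot.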