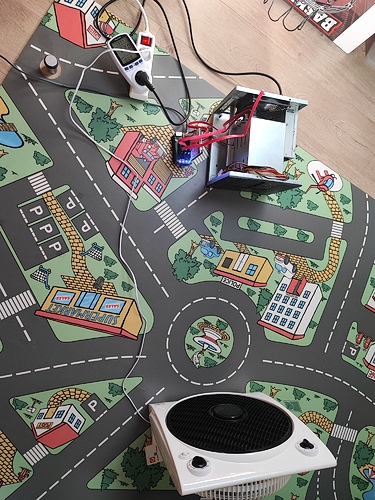

In this article, I share my experience with M.2 SATA controllers on three different Radxa boards. Since last year, my data has lived on a new homemade x86 NAS, with one or two spare disks for regular backups. This year, I would like to add a secondary NAS to replicate the data.

Radxa Rock models are interesting SBCs for this purpose, at least on paper, as some of them offer enough PCIe bandwidth for hard drives in RAID configurations. Incidentally, some low-end to mid-range NAS products from Synology and QNAP also rely on ARM for their 2-bay and even 4-bay offerings. In late September 2022, I only owned a Rock Pi 4B+ board and the SATA hats were unavailable. Four months later, the Penta SATA hat was back in stock, and I decided to first evaluate a secondary NAS based on the Rock 3A and this Penta SATA hat. The goals were low power usage and software RAID 5 on three regular hard drives, for reliability and cost.

Today, six months after my first tests, three Radxa boards and two M.2 SATA extension boards have been roughly benchmarked, and I have also gathered some hardware to finalize the NAS case.

Here is the SBC hardware (excluding power supplies, case, cables, additional heatsinks…):

- Rock 3A 8 GB with 32 GB eMMC (RK3568, 4x A55 @ 2.0 GHz, M.2 M key with PCIe 3.0 x2)

- Rock 4B+ 4 GB with 32 GB eMMC (RK3399 OP1, 2x A72 @ 2.0 GHz and 4x A53 @ 1.5 GHz, M.2 M key with PCIe 2.1 x4)

- Rock 5B 16 GB with 64 GB eMMC (RK3588, 4x A76 @ 2.4 GHz and 4x A55 @ 1.8 GHz, M.2 M key with PCIe 3.0 x4)

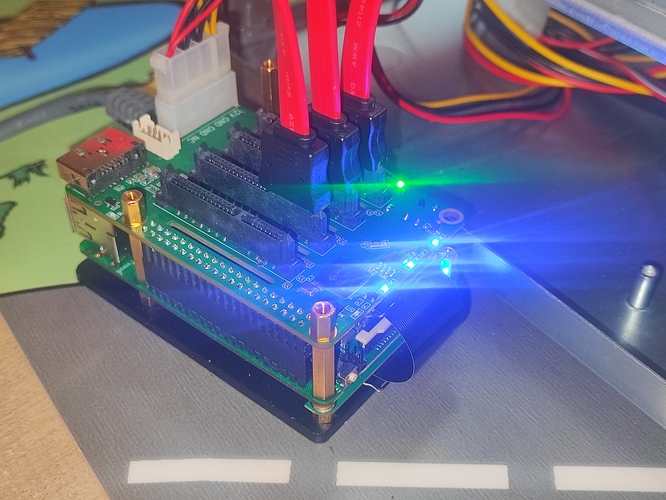

- Radxa Penta SATA hat with ribbon cable, compatible with Rock 3A and Rock 4 (JMB585 chip - SATA 3.0 x 4 + eSATA, optional Molex ATX power and 12V DC)

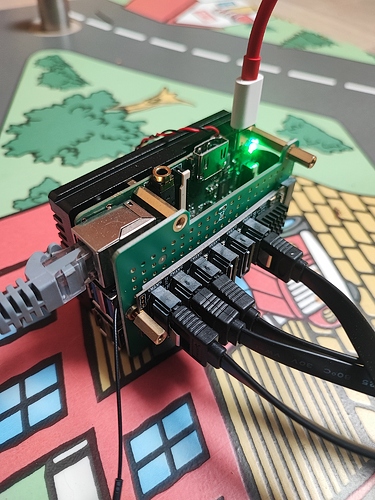

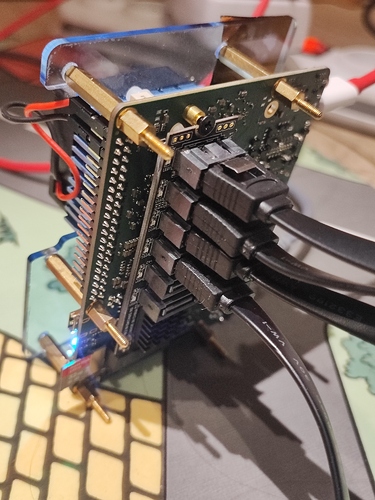

- QNINE M.2 NVME SATAx6 2280 module (ASM1166 chip – SATA 3.0 x 6), directly compatible with Rock 5B and usable on Rock 3A / 4 if using the M.2 extension board

- Radxa M.2 extension board/hat (to use the NVMe module on Rock 3A and Rock 4B)
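
To put the PCIe figures listed above in perspective, here is a minimal sketch of the theoretical link bandwidth of each board's M.2 slot. It only uses the standard per-lane rates and line encodings (5 GT/s with 8b/10b for PCIe 2.x, 8 GT/s with 128b/130b for PCIe 3.0). The function and dictionary names are my own, and the result is an upper bound: the SATA controller's own PCIe link and protocol overhead will lower what is actually achievable.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GEN = {
    2: (5.0, 8 / 10),     # PCIe 2.x: 5 GT/s, 8b/10b encoding
    3: (8.0, 128 / 130),  # PCIe 3.0: 8 GT/s, 128b/130b encoding
}

# (generation, lanes) of the M.2 M key slot, taken from the hardware list above.
BOARDS = {
    "Rock 3A": (3, 2),
    "Rock 4B+": (2, 4),
    "Rock 5B": (3, 4),
}


def pcie_bandwidth_mb_s(gen, lanes):
    """Theoretical one-direction link bandwidth in MB/s (1 MB = 10**6 bytes)."""
    gt_s, efficiency = PCIE_GEN[gen]
    bytes_per_s_per_lane = gt_s * 1e9 * efficiency / 8
    return bytes_per_s_per_lane * lanes / 1e6


for name, (gen, lanes) in BOARDS.items():
    print(f"{name}: PCIe gen {gen} x{lanes} -> {pcie_bandwidth_mb_s(gen, lanes):.0f} MB/s")
```

This gives roughly 1,969 MB/s for the Rock 3A, 2,000 MB/s for the Rock 4B+ and 3,938 MB/s for the Rock 5B. For comparison, a single SATA 3.0 port tops out at 600 MB/s, so on paper each slot has headroom for three hard drives.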

The drives for performance measurements are:

- Seagate 4TB HDD (ST4000VN006) x 3

- Samsung SSD 870 EVO 2TB
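
As a quick reference for the three 4 TB hard drives, the usable capacity of the two RAID levels used in the tests (RAID 5 and RAID 0) follows from their definitions: RAID 0 stripes across all drives, while RAID 5 gives up the equivalent of one drive to parity. The helper below is a hypothetical illustration, not part of any RAID tool, and it ignores filesystem and metadata overhead.

```python
def usable_capacity_tb(level, n_drives, drive_tb):
    """Usable capacity in TB for identical drives, ignoring metadata overhead."""
    if level == 0:
        # Striping only: every drive contributes its full capacity.
        return n_drives * drive_tb
    if level == 5:
        if n_drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        # One drive's worth of space is used for distributed parity.
        return (n_drives - 1) * drive_tb
    raise ValueError(f"unsupported RAID level: {level}")


for level in (0, 5):
    print(f"RAID {level}: {usable_capacity_tb(level, 3, 4)} TB usable")
```

With three 4 TB drives, that is 12 TB in RAID 0 (no redundancy) and 8 TB in RAID 5 (survives one drive failure).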

Tests are run in “open” conditions and aim to measure RAID 5 and RAID 0 performance on three HDDs. ext4 is used for all volumes. Read/write tests copy a 40 GB set of large files between the RAID array and an SSD. This size exceeds the RAM of every board tested, which limits memory buffer effects.
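
The copy test described above can be sketched in a few lines of Python. This is only an illustration of the method, not the exact procedure used for the measurements in this article: the function name is hypothetical, and the `fsync` call is my own choice so that the timing includes the time needed to flush data to the target device.

```python
import os
import shutil
import time
from pathlib import Path


def copy_with_throughput(src_dir, dst_dir):
    """Copy every file under src_dir into dst_dir.

    Returns (bytes_copied, elapsed_seconds, throughput_in_MB_per_s).
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    files = sorted(p for p in src_dir.rglob("*") if p.is_file())
    total_bytes = 0
    start = time.perf_counter()
    for src in files:
        dst = dst_dir / src.relative_to(src_dir)
        dst.parent.mkdir(parents=True, exist_ok=True)
        with open(src, "rb") as fin, open(dst, "wb") as fout:
            # Large chunks suit sequential transfers of big files.
            shutil.copyfileobj(fin, fout, length=16 * 1024 * 1024)
            fout.flush()
            os.fsync(fout.fileno())  # wait until the data reaches the device
        total_bytes += src.stat().st_size
    elapsed = time.perf_counter() - start
    mb_per_s = total_bytes / 1e6 / elapsed if elapsed > 0 else float("inf")
    return total_bytes, elapsed, mb_per_s
```

A write test would then look like `copy_with_throughput("/mnt/ssd/testset", "/mnt/raid/testset")`, and a read test would swap the two directories. Both mount points are placeholders. Even with a 40 GB set, dropping the page cache between runs helps keep read results honest.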