I could finally run some network tests with a 4x10G NIC on my M.2-to-PCIe adapter. The NIC is the common dual-82599 design behind a PCIe bridge: the bridge takes PCIe 3.0 x8 upstream and converts it to two PCIe 2.0 x8 links for the network controllers. That’s perfect, because it can convert the 8 GT/s x4 link of the M.2 slot to 5 GT/s x8 with limited losses. We have 32 Gbps of theoretical PCIe bandwidth (about 27 Gbps effective with the standard 128-byte payload).
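The bandwidth figures above can be reproduced with a little arithmetic. The following is a rough Python sketch, not a precise PCIe model. The 24-byte per-TLP overhead is an assumption covering framing, header, and LCRC; the exact value depends on the header format. The sketch also ignores DLLP traffic such as ACKs and flow-control updates, so it only lands in the same ballpark as the quoted ~27 Gbps.

```python
# Rough PCIe throughput estimate. Overhead figures are assumptions, not measurements.

def pcie_raw_gbps(gt_per_s: float, lanes: int, encoding: tuple[int, int]) -> float:
    """Line rate in Gbit/s after physical-layer encoding (e.g. 128b/130b)."""
    payload_bits, total_bits = encoding
    return gt_per_s * lanes * payload_bits / total_bits

def pcie_effective_gbps(raw_gbps: float, max_payload: int, tlp_overhead: int) -> float:
    """Scale the line rate by the fraction of each TLP that carries payload."""
    return raw_gbps * max_payload / (max_payload + tlp_overhead)

# Upstream link from the M.2 slot: PCIe 3.0 x4 (8 GT/s, 128b/130b encoding)
upstream = pcie_raw_gbps(8.0, 4, (128, 130))
# Each downstream link to an 82599: PCIe 2.0 x8 (5 GT/s, 8b/10b encoding)
downstream = pcie_raw_gbps(5.0, 8, (8, 10))
# Assumed ~24 bytes of overhead per TLP with the standard 128-byte max payload
effective = pcie_effective_gbps(upstream, 128, 24)

print(f"upstream raw:          {upstream:.1f} Gbps")
print(f"downstream raw (each): {downstream:.1f} Gbps")
print(f"upstream effective:    {effective:.1f} Gbps")
```

Each downstream PCIe 2.0 x8 link offers slightly more than the upstream PCIe 3.0 x4 link, so the M.2 side is the bottleneck. That is why converting x4 to x8 costs little.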

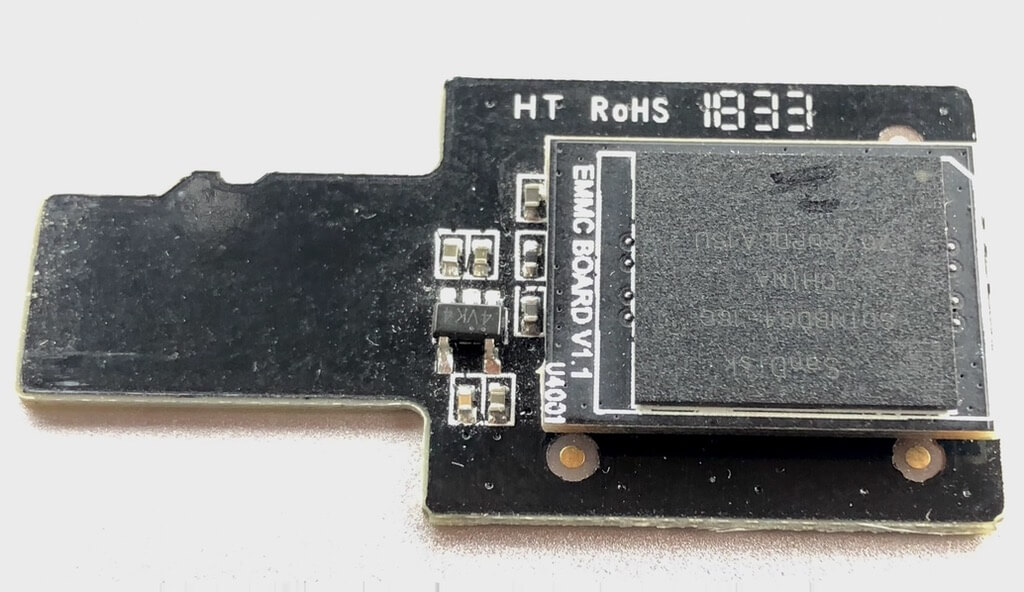

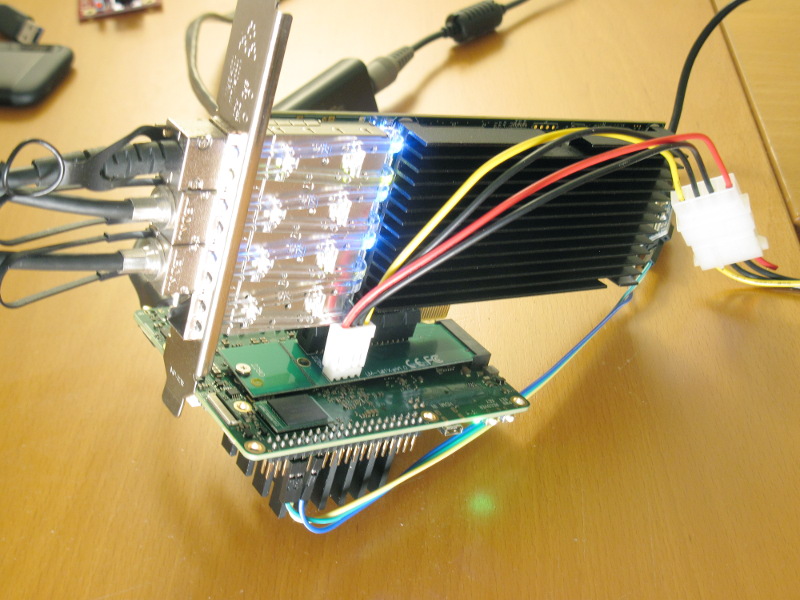

[edit: I forgot the mandatory setup photo]

Well, to make a long story short, that board is a serious bit mover! Please see the graphs below. I exchanged HTTP traffic between the board and 3 other devices (I needed that many to make it give up): my mcbin (10G) and two HoneyComb LX2 (one 10G port each). There was not much point going further, due to the bus width.

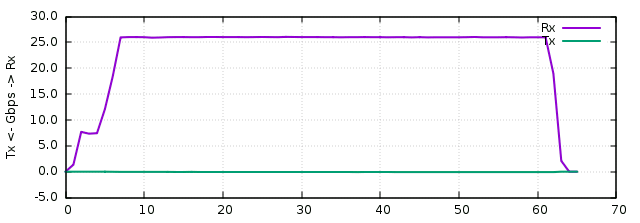

I started a server (httpterm) on each board. The first tests consisted of using the Rock5B as a client only (download test). It reached a stable 26.1 Gbps over the 3 NICs (measured at the Ethernet layer, as reported by the interfaces) with 24% of the CPU still idle, indicating that I was saturating the communication channel rather than the CPU. It’s very close to the limit anyway:
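Here is a minimal Python sketch of measuring throughput the way the interfaces report it. It reads the Linux kernel's per-interface byte counters under `/sys/class/net/<iface>/statistics/`, so the result reflects Ethernet frames rather than HTTP payload. The interface names are hypothetical placeholders, and this is not necessarily the tool that produced the graphs.

```python
import os
import time

def read_counter(iface: str, name: str) -> int:
    """Read one of the kernel's per-interface statistics counters from sysfs."""
    with open(f"/sys/class/net/{iface}/statistics/{name}") as f:
        return int(f.read())

def rate_gbps(before: int, after: int, seconds: float) -> float:
    """Convert a byte-counter delta over an interval into Gbit/s."""
    return (after - before) * 8 / seconds / 1e9

def total_rx_gbps(ifaces: list[str], seconds: float = 1.0) -> float:
    """Sample rx_bytes on every interface and sum the per-interface rates."""
    before = {i: read_counter(i, "rx_bytes") for i in ifaces}
    time.sleep(seconds)
    after = {i: read_counter(i, "rx_bytes") for i in ifaces}
    return sum(rate_gbps(before[i], after[i], seconds) for i in ifaces)

if __name__ == "__main__":
    # Hypothetical interface names: replace them with the ones your NIC exposes.
    ifaces = ["eth1", "eth2", "eth3"]
    if all(os.path.exists(f"/sys/class/net/{i}") for i in ifaces):
        print(f"{total_rx_gbps(ifaces):.1f} Gbps received")
```

Summing per-interface rates over the same interval gives the aggregate figure across the NICs. Swapping `rx_bytes` for `tx_bytes` gives the transmit side.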

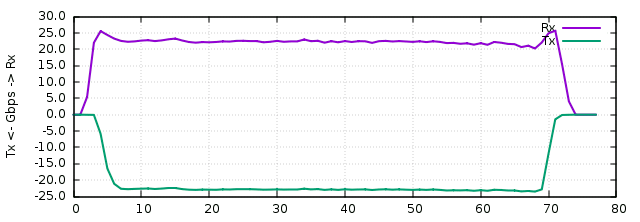

Then I tested the traffic in bidirectional mode. The Rock5B ran 3 clients (one per interface), and each of the other machines also ran one client targeting the Rock5B. Result: 23.0 Gbps in each direction and 0% idle! So the CPU can almost saturate the PCIe in both directions at once while processing network traffic (it probably could with larger objects; they were only 1 MB, which is almost 3000 requests/s when you think about it). There were 2.1 million packets/s in each direction. The graph shows that the Rx traffic dropped a little when the Tx traffic started:
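The back-of-the-envelope numbers in that paragraph can be checked with a few lines of Python. This sketch assumes "1 MB" means 10^6 bytes (1 MiB would give about 2,740 objects/s). It also ignores protocol headers, so the real request rate would be slightly lower. Dividing the byte rate by the packet rate gives an average packet size. That average presumably mixes full-size data frames with the smaller ACKs sent for the opposite direction.

```python
def objects_per_second(gbps: float, object_bytes: int) -> float:
    """How many objects of a given size fit in a link rate (headers ignored)."""
    return gbps * 1e9 / 8 / object_bytes

def avg_packet_bytes(gbps: float, pps: float) -> float:
    """Average packet size implied by a bit rate and a packet rate."""
    return gbps * 1e9 / 8 / pps

rate = 23.0  # Gbps in each direction, from the bidirectional test
print(f"1 MB objects/s:  {objects_per_second(rate, 1_000_000):.0f}")
print(f"1 MiB objects/s: {objects_per_second(rate, 1 << 20):.0f}")
print(f"avg packet size: {avg_packet_bytes(rate, 2.1e6):.0f} bytes")
```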

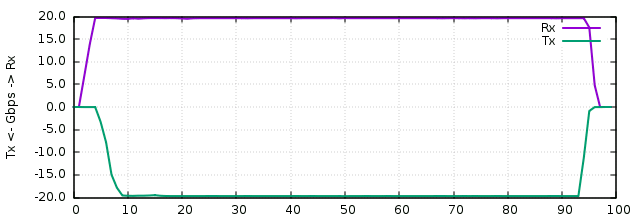

Next, I wanted to see what happens when the network is definitely the limit. I started the 20G bidirectional test (only two ports). It saturated both links in both directions, leaving 12% idle.

A few other points: I used all 8 CPUs during these tests. The cores seem to complement each other well, as the 4 little cores combined are able to download 14.5 Gbps, which is pretty good!

A final test (not graphed) consisted of attacking the board with multiple HTTP clients sending small requests. It saturated at 540,000 requests/s, which is also excellent for such a device.

Finally, nothing showed up in dmesg throughout the tests, which is pretty reassuring and indicates that the instabilities I faced with the older Myricom NIC were related to the card itself.

Thus I’m pretty sure we’ll start to see network gear and NAS devices built around this chip for its PCIe capabilities and its memory bandwidth, which play in the same league as entry-level Xeons. That’s pretty cool.
