Yeah, I am not really sure what causes that. I gave up on my 90 W PD charger as it was doing the same, and used a 12 V supply with a USB-C adapter instead.

I tried many times to get it to boot without rebooting. Then, to cause more confusion: after getting it to boot on 12 V and going back to PD to do some more tests, it booted with the 90 W PD charger?

ROCK 5B Debug Party Invitation

I wonder if the problem is mostly with voltages above 12V. My board bootloops when using a 65W power supply, but with another adapter supporting a maximum of 12V 1.5A via USB-PD it’s been perfect. (That’s just a normal USB-C adapter, none of the barrel plug shenanigans.)

@jack for your wiki article about successfully tested chargers I added those I personally confirmed to work with Radxa’s Ubuntu 20.04 image to my review.

Important note: the two Apple USB-C chargers (96W and 140W) do not work with Armbian. Here the board stalls in a reboot loop. Initially (see many posts above) I thought it’s related to adjusting the PDO_FIXED device-tree nodes but it’s related to Armbian. At least the image I tested with (Armbian_20220707f-rpardini_Rock-5b_jammy_legacy_5.10.66.img.xz) shows this behaviour with 2 out of 5 chargers.

Now that I learned that I’m participating in the most stupid ‘debug party’ ever (not just some babbling in closed/hidden Discord channels but also in ‘radxa’s official QQ group’ whatever that is) it’s really time to stop.

Edit: debugfs info queried that way: cat /sys/kernel/debug/usb/fusb302-4-0022 /sys/kernel/debug/usb/tcpm-4-0022 | sort | curl -s -F 'f:1=<-' ix.io
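For anyone who wants the same merged log locally without uploading it, here is a minimal Python sketch of the `cat ... | sort` part of that command. The two debugfs paths are the ones quoted above. The function name `merge_logs` is made up for illustration. It assumes each log line starts with a fixed-width kernel timestamp, so a plain lexical sort interleaves both logs chronologically. Reading debugfs normally requires root.

```python
from pathlib import Path

# Paths taken from the command in the post; the bus/address suffix
# (4-0022) may differ on other boards or kernels.
DEBUGFS_LOGS = [
    "/sys/kernel/debug/usb/fusb302-4-0022",
    "/sys/kernel/debug/usb/tcpm-4-0022",
]


def merge_logs(paths):
    """Read every readable log file and return all lines, sorted.

    Hypothetical helper: files that are missing or unreadable
    (for example when not running as root) are silently skipped.
    """
    lines = []
    for name in paths:
        try:
            text = Path(name).read_text(errors="replace")
        except OSError:
            continue  # missing file or no permission
        lines.extend(text.splitlines())
    # Lexical sort; relies on the fixed-width timestamp prefix.
    return sorted(lines)


if __name__ == "__main__":
    for line in merge_logs(DEBUGFS_LOGS):
        print(line)
```

Run as root on the board, this should print the same merged log that the shell pipeline sends to ix.io.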

@tkaiser That QQ group is just for Chinese users, since most people in China don’t use Discord. And actually amazingfate is the only one who got a board and lives in China.

Maybe you can get a PD protocol analyzer (such as power-z’s) to investigate what actually happens during reboot.

Me? Seriously?

Maybe @amazingfate can try out another image with those problematic USB-C chargers and report back here? If it works with Radxa’s image then someone willing to help could start to bisect?

BTW: I put the Ubuntu image I tested with online since it disappeared from the releases page.

He often reports in radxa’s public discord group, have you checked yet?

Nope, I can’t check since I’m not using this Discord crap for obvious reasons. If participating in such a ‘debug party’ requires me handing my mobile number over to some shitty US tech company that is specialised in user tracking then I guess I shouldn’t be part of this ‘party’.

Aside from that, I have no idea why Radxa printed this when they actively force fragmentation of user discussions:

Well, it seems that most board owners are discussing there. If you don’t want to expose your phone number to Discord, you can try Google Voice, which creates a virtual phone number.

Discord is there for more informal chat, and from what I have seen a more formal summary is often posted here, as many people use both.

If you don’t want to sign up to Discord then don’t, but it’s not as if you are missing all that much.

Generally there are enough people on both who would likely post here anything that is missing and needs to be here.

See if Radxa will make FPC adapter cables. The RPi “Standard” is based on the limitations of an arm11 SoC from forever ago. I don’t disagree that compatible ports are nice, but trying to merge 2 connectors to one device is way harder than just selecting the desired signals with a flex cable.

Actually I mean the RPi CM4IO’s CSI/DSI ports, which have a full 4-lane bus. The BeagleBone AI-64 is also using this port. I think an adapter cable is also a good choice, but if it’s done with a dedicated PCB, it would be a mess to use those ports. @Tonymac32

So an even less common connector than the normal RPi one, then. 22-pin, 2 or 4 lane (1 of each on CM4IO). An adapter ribbon cable should be simple, I would think.

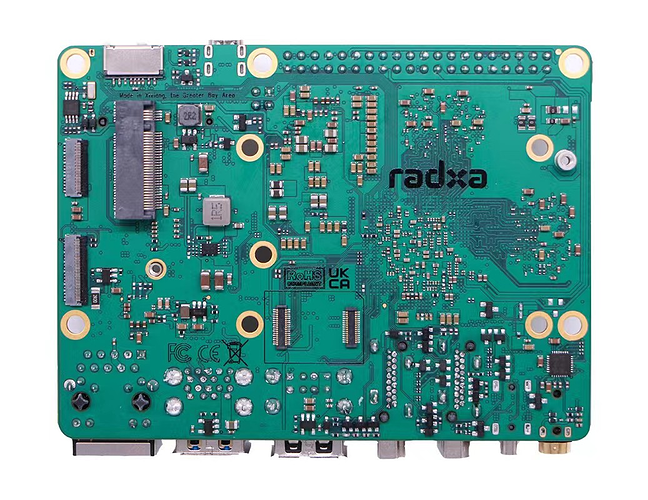

Just a tangential question on PCB art: the crazy track paths you often see around memory, are they there to make all the track lengths equal, and is the timing really that sensitive?

Yep. Ideally you match impedance, too.

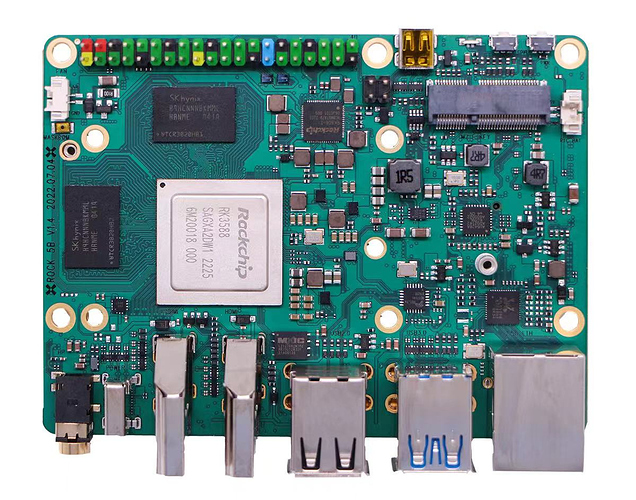

Great job! Looks like the heatsink will hold firmly in place now, that was worth it!

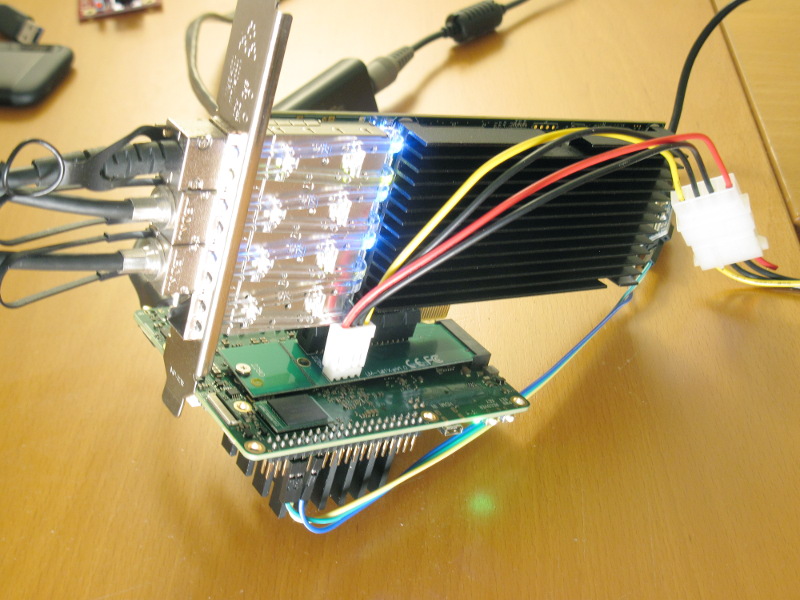

I could finally run some network tests with a 4x10G NIC on my M.2->PCIe adapter. The NIC is the common dual-82599 behind a PCIe bridge. The PCIe bridge is PCIe 3.0 x8 converting to two PCIe 2.0 x8 for the network controllers. That’s perfect because it can convert the 8GT x4 to 5GT x8 with limited losses. We have 32Gbps theoretical PCIe bandwidth (27G effective with the standard 128-byte payload).
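The bandwidth figures above can be roughly reproduced with a few lines of Python. This is only a sketch. The line encodings (128b/130b for PCIe 3.0, 8b/10b for PCIe 2.0) are standard, but the per-packet overhead of 20 to 24 bytes (TLP header, sequence number, LCRC, framing) is my assumption, and flow-control and ACK traffic on the link are ignored. The function names are made up for illustration.

```python
def link_gbps(gt_per_s, lanes, data_bits, coded_bits):
    """Usable link rate in Gbps after line encoding."""
    return gt_per_s * lanes * data_bits / coded_bits


def effective_gbps(rate_gbps, payload_bytes, overhead_bytes):
    """Payload throughput once per-packet overhead is accounted for."""
    return rate_gbps * payload_bytes / (payload_bytes + overhead_bytes)


# Upstream side: PCIe 3.0 x4 (8 GT/s per lane, 128b/130b encoding).
gen3_x4 = link_gbps(8.0, 4, 128, 130)
# Downstream side: PCIe 2.0 x8 (5 GT/s per lane, 8b/10b encoding).
gen2_x8 = link_gbps(5.0, 8, 8, 10)

# The slower of the two sides is the bottleneck.
bottleneck = min(gen3_x4, gen2_x8)

# ASSUMPTION: 20 to 24 bytes of overhead per 128-byte payload.
best_case = effective_gbps(bottleneck, 128, 20)
worst_case = effective_gbps(bottleneck, 128, 24)

if __name__ == "__main__":
    print(f"PCIe 3.0 x4: {gen3_x4:.1f} Gbps, PCIe 2.0 x8: {gen2_x8:.1f} Gbps")
    print(f"effective with 128-byte payload: "
          f"{worst_case:.1f} to {best_case:.1f} Gbps")
```

Under these assumptions both sides of the bridge land at about 32 Gbps and the effective rate comes out around 27 Gbps, in line with the numbers quoted.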

[edit: I forgot the mandatory setup photo]

Well, to make a story short, that board is a serious bit mover! Please see the graphs below. I’ve exchanged HTTP traffic between the board and 3 other devices (I needed that to make it give up). One was my mcbin (10G) and the two other ones were HoneyComb LX2 (one 10G port each). There was not much point going further, due to the bus width.

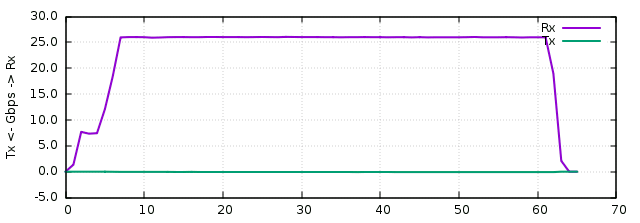

I started a server (httpterm) on each board. First tests consisted in using the Rock5B as a client only (download test). It reached 26.1 Gbps stable over the 3 NICs (measured at the ethernet layer, as reported by the interface), and there was still 24% idle CPU, indicating I was saturating the communication channel. It’s very close to the limit anyway:

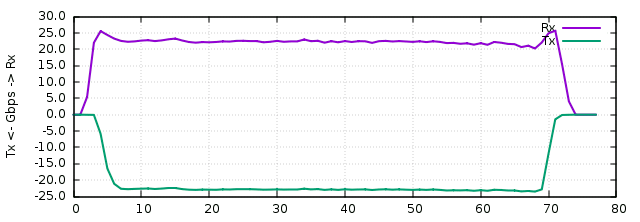

Then I tested the traffic in bidirectional mode. Rock5B had 3 clients (one per interface) and each other machine also had one client attacking Rock5B. Result: 23.0 Gbps in each direction and 0% idle! So the CPU can almost saturate the PCIe in both directions at once while processing network traffic (it probably could with larger objects; they were only 1MB, which is almost 3000 requests/s when you think about it). There were 2.1 million packets/s in each direction. It’s visible on the graph that the Rx traffic dropped a little bit when the Tx traffic arrived:

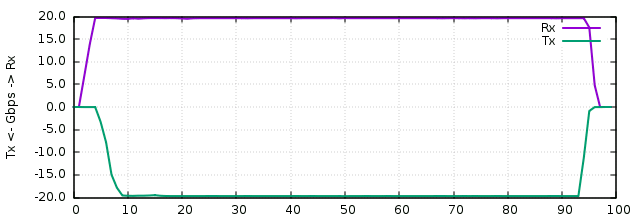
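As a sanity check on the request and packet rates from the bidirectional test, here is a small Python sketch of the arithmetic. The 23.0 Gbps and 1MB figures come from the post. The 1514-byte full-size Ethernet frame is my assumption, as is reading 1MB as 10^6 bytes. The function names are hypothetical.

```python
def requests_per_second(throughput_gbps, object_bytes):
    """Objects transferred per second at a given throughput."""
    return throughput_gbps * 1e9 / 8 / object_bytes


def packets_per_second(throughput_gbps, frame_bytes):
    """Frames per second if every frame had the given size."""
    return throughput_gbps * 1e9 / 8 / frame_bytes


# Figures from the post: 23.0 Gbps per direction, 1MB objects.
reqs = requests_per_second(23.0, 1_000_000)

# ASSUMPTION: full-size 1514-byte Ethernet frames for the data packets.
data_pkts = packets_per_second(23.0, 1514)

if __name__ == "__main__":
    print(f"{reqs:.0f} requests/s")
    print(f"{data_pkts / 1e6:.2f} million full-size packets/s")
```

This gives a bit under 3000 requests/s and about 1.9 million full-size packets/s per direction. The gap to the measured 2.1 million is presumably small packets such as TCP ACKs for the opposite direction's traffic, but that is a guess, not something measured here.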

Finally I wanted to see what happens when the network definitely is the limit. I started the 20G bidirectional test (only two ports). It saturated both links in both directions, leaving 12% idle:

A few other points: I used all 8 CPUs during these tests. They seem to complement each other well, as the 4 little cores are able to download 14.5 Gbps together, which is pretty good!

A final test (not graphed) consisted in attacking the board with multiple HTTP clients sending small requests. It saturated at 540000 requests/s, which is also excellent for such a device.

Finally, there was nothing in dmesg throughout all the tests, so that’s pretty reassuring, and indicates that the instabilities I faced with the older Myricom NIC were related to the card itself.

Thus I’m pretty sure we’ll start to see network gear and NAS devices made around this chip for its PCIe capabilities and its memory bandwidth, which play in the yard of entry-level Xeons. That’s pretty cool.

There is no such thing as a “standard” CSI or DSI port; they’re deliberately left undefined by MIPI. It’s a major problem with the standard, IMO.

Neither the QQ group nor Discord is a good place for technical discussion, since they are not indexed by public search engines.

I think the official QQ group and Discord exist for social purposes.