Not just me

No idea, I’m not a hardware guy; I only recently started reading about the latency stuff…

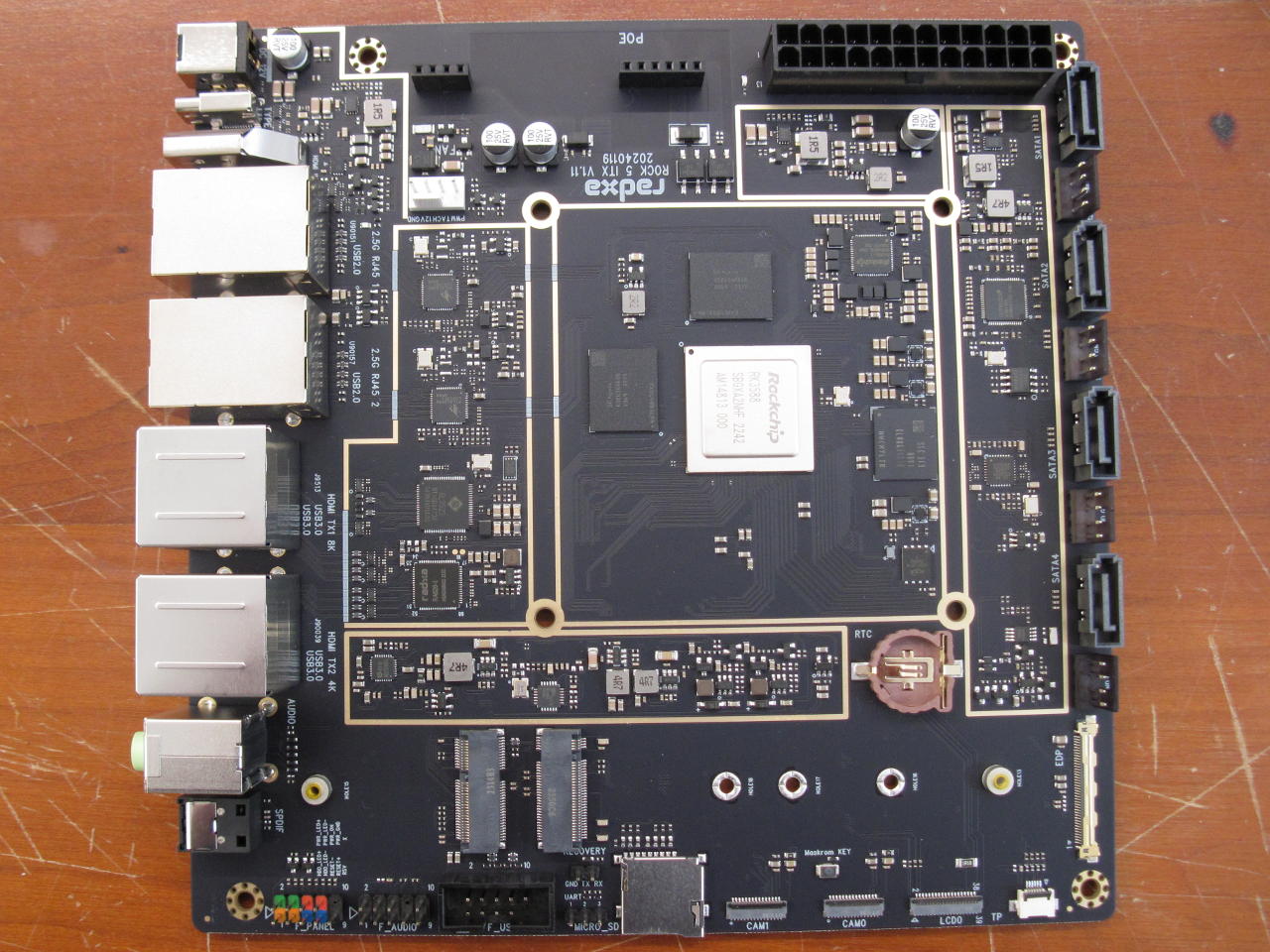

That doesn’t seem to translate into ‘software in general’ getting faster. Synthetic benchmarks are always a problem: unless we know which use case they relate to, they just generate random numbers. For example, 7-zip (sbc-bench’s main metric, for whatever reason) depends more on low latency than on high bandwidth, so it generates slightly lower scores on RK3588 with LPDDR5 at just 5472 MT/s. My naive assumption, though, would be that most other software benefits more from higher memory bandwidth.
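To put the bandwidth side into numbers: theoretical peak bandwidth is just transfer rate times bus width. A minimal Python sketch, assuming a 64-bit total memory bus on RK3588 and reading the 2112 MHz LPDDR4X clock mentioned further down as 4224 MT/s (two transfers per clock). Both are my assumptions, not measured values:

```python
# Theoretical peak DRAM bandwidth = transfers per second x bytes per transfer.
# Assumption (not measured): RK3588 uses a 64-bit (8-byte) memory bus in total.

def peak_bandwidth_gbs(mt_per_s: float, bus_width_bits: int = 64) -> float:
    """Return theoretical peak bandwidth in GB/s (10^9 bytes/s)."""
    bytes_per_transfer = bus_width_bits / 8
    return mt_per_s * 1e6 * bytes_per_transfer / 1e9

lpddr4x = peak_bandwidth_gbs(4224)  # assumes 2112 MHz x 2 transfers per clock
lpddr5 = peak_bandwidth_gbs(5472)

print(f"LPDDR4X-4224: {lpddr4x:.1f} GB/s")  # 33.8 GB/s
print(f"LPDDR5-5472:  {lpddr5:.1f} GB/s")   # 43.8 GB/s
print(f"ratio: {lpddr5 / lpddr4x:.2f}x")    # 1.30x
```

This is only a ceiling. It says nothing about latency, which is what 7-zip seems to care about.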

But if we look at Geekbench 6, LPDDR4X and LPDDR5 produce more or less the same scores (don’t look at the total scores but at the individual ones):

- A55 cluster only: https://browser.geekbench.com/v6/cpu/compare/5424883?baseline=5768888

- A76 cluster only: https://browser.geekbench.com/v6/cpu/compare/5424972?baseline=5768992

But Geekbench itself is a problem: it uses memory access patterns that are not that typical (at least according to the RPi guys who try to explain the low multi-core GB6 scores their RPi 5 achieves), and we don’t know which tests are sensitive to memory latency and which to bandwidth. Weeks ago I ran a test on a Rock 5B comparing LPDDR4X clocked at 528 MHz and 2112 MHz, so we at least know which individual tests are not affected by memory clock at all (on RK3588; on other CPUs with different cache sizes this may differ): https://github.com/raspberrypi/firmware/issues/1876#issuecomment-2021505017
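The idea behind the 528 vs 2112 MHz comparison can be written down as a tiny filter: a sub-test whose score barely changes when the memory clock is quadrupled is probably not memory-bound on this SoC. A sketch in Python; the function name, the 3% threshold and the placeholder scores are all made up for illustration:

```python
# Hypothetical helper: flag sub-tests whose score barely moves between a
# low-clock and a high-clock run. The 3% threshold is an arbitrary assumption.

def clock_insensitive(low: dict[str, float], high: dict[str, float],
                      threshold: float = 0.03) -> list[str]:
    """Return sorted names of sub-tests whose relative score change is below threshold."""
    insensitive = []
    for name in sorted(low.keys() & high.keys()):  # only tests present in both runs
        change = (high[name] - low[name]) / low[name]
        if abs(change) < threshold:
            insensitive.append(name)
    return insensitive

# Placeholder numbers, purely to exercise the function (not real results):
low_clock = {"test_a": 1000, "test_b": 500}
high_clock = {"test_a": 1010, "test_b": 800}
print(clock_insensitive(low_clock, high_clock))  # ['test_a']
```

Note that this only tells you which tests don’t care about memory clock; it can’t separate latency from bandwidth sensitivity for the rest.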

But this is still not sufficient to understand GB6 scores. That would need a system where CAS latency and memory clock can be adjusted over a wide range, and even then the question remains how the generated scores translate to real-world workloads.
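One reason a higher memory clock doesn’t automatically mean lower latency: CAS latency is specified in clock cycles, so the absolute delay is CL × clock period. A quick sketch, assuming CL is counted in cycles of the given clock; the CL values below are hypothetical and not taken from any LPDDR4X/LPDDR5 datasheet:

```python
# Absolute CAS latency in ns = CL (in clock cycles) x clock period (1000 / MHz ns).
# Assumption: CL is counted in cycles of the clock passed in; values are hypothetical.

def cas_latency_ns(cl_cycles: int, clock_mhz: float) -> float:
    """Convert a CAS latency given in clock cycles to nanoseconds."""
    return cl_cycles * 1000.0 / clock_mhz

# Doubling the clock while CL also doubles leaves the absolute latency unchanged:
print(cas_latency_ns(20, 2000))  # 10.0
print(cas_latency_ns(40, 4000))  # 10.0
```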

I think for now we can conclude that the faster LPDDR5 clock does not result in a significantly faster system, while in some areas where memory bandwidth is everything (video stuff, for example) measurable improvements are possible.