I apologize for not replying right away.

Read speed measured with ‘hdparm’:

# sudo hdparm -Tt /dev/sda1

/dev/sda1:

Timing cached reads: 1416 MB in 2.00 seconds = 707.67 MB/sec

Timing buffered disk reads: 972 MB in 3.01 seconds = 323.44 MB/sec

# sudo hdparm -Tt /dev/sda1

/dev/sda1:

Timing cached reads: 1732 MB in 2.00 seconds = 866.20 MB/sec

Timing buffered disk reads: 994 MB in 3.00 seconds = 330.79 MB/sec

# sudo hdparm -Tt /dev/sda1

/dev/sda1:

Timing cached reads: 1296 MB in 2.00 seconds = 647.73 MB/sec

Timing buffered disk reads: 934 MB in 3.00 seconds = 311.22 MB/sec
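Since the three runs differ by a few percent, it may be easier to compare a single averaged figure. Below is a minimal sketch that averages the buffered disk read rates from the runs above. `mean_rate` is a helper name made up for this example, not part of hdparm, and the numbers are simply copied from the output.

```shell
# mean_rate is a hypothetical helper: prints the arithmetic mean of its arguments.
# LC_ALL=C keeps the decimal point a period regardless of the system locale.
mean_rate() {
    printf '%s\n' "$@" | LC_ALL=C awk '{ sum += $1 } END { printf "%.2f\n", sum / NR }'
}

# Buffered disk read rates (MB/sec) from the three hdparm runs.
mean_rate 323.44 330.79 311.22   # prints 321.82

# Cached read rates (MB/sec) from the same runs.
mean_rate 707.67 866.20 647.73   # prints 740.53
```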

=========

Read speed measured with ‘dd’:

# sudo sh -c "sync && echo 3 > /proc/sys/vm/drop_caches"

# dd if=./tempfile.1 of=/dev/null bs=4k

262144+0 records in

262144+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 4.92929 s, 218 MB/s

# sudo sh -c "sync && echo 3 > /proc/sys/vm/drop_caches"

# dd if=./tempfile.1 of=/dev/null bs=8k

131072+0 records in

131072+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 4.98249 s, 216 MB/s

# sudo sh -c "sync && echo 3 > /proc/sys/vm/drop_caches"

# dd if=./tempfile.1 of=/dev/null bs=1M

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 3.18422 s, 337 MB/s

# sudo sh -c "sync && echo 3 > /proc/sys/vm/drop_caches"

# dd if=./tempfile.1 of=/dev/null bs=512K

2048+0 records in

2048+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 3.35237 s, 320 MB/s
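For anyone who wants to double-check the rates dd reports: the MB/s figure is the byte count divided by the elapsed time, with 1 MB taken as 10^6 bytes. A small sketch of that arithmetic follows. `throughput_mb` is a hypothetical helper written for this post, and the inputs are taken from the bs=4k and bs=1M runs above.

```shell
# throughput_mb is a hypothetical helper: bytes and seconds in, MB/s out
# (decimal megabytes, 1 MB = 1000000 bytes, rounded to a whole number).
throughput_mb() {
    LC_ALL=C awk -v bytes="$1" -v secs="$2" \
        'BEGIN { printf "%.0f\n", bytes / secs / 1000000 }'
}

throughput_mb 1073741824 4.92929   # bs=4k run, prints 218
throughput_mb 1073741824 3.18422   # bs=1M run, prints 337
```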

==========

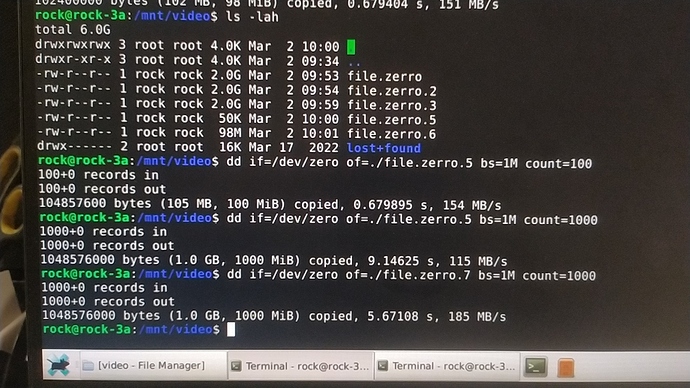

Write speed via ‘dd’:

# sync; dd if=/dev/zero of=tempfile.1 bs=1M count=1024; sync

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 6.39575 s, 168 MB/s

# sync; dd if=/dev/zero of=tempfile.2 bs=1M count=1024; sync

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 6.20996 s, 173 MB/s

# sync; dd if=/dev/zero of=tempfile.2 bs=1M count=1024; sync

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB, 1.0 GiB) copied, 6.20996 s, 173 MB/s
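One caveat about the write numbers: dd prints its timing before the trailing `sync` runs, so part of the data may still be in the page cache when the rate is reported. A possible variant, assuming GNU dd, is to pass `conv=fdatasync` so that the flush to disk is included in the time dd measures. `write_test` and `tempfile.3` are names invented for this sketch, and I have not run it on this machine.

```shell
# write_test is a hypothetical wrapper around GNU dd.
# $1 = output file, $2 = size in MiB.
# conv=fdatasync makes dd flush the file data to disk before it exits,
# so the reported time covers the flush as well.
write_test() {
    dd if=/dev/zero of="$1" bs=1M count="$2" conv=fdatasync
}

# Example matching the 1 GiB runs above (uncomment to run):
# write_test tempfile.3 1024
```

The figures from this variant would likely come out somewhat lower than the ones above if the cache was absorbing part of the write.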

The filesystem is ext4.