
Orion O6 Debug Party Invitation

The more I read, the more I postpone ordering the board.

I hope someone outside of Radxa will do a review, with tests, using official Debian/Fedora/etc. images (instead of Radxa-provided ones), etc.

There’s a reason it’s currently open to devs and called the “debug party”. Just like for the rock5b, it’s normal that the beginning is a bit chaotic at this stage (and it’s amazingly good for a first week). Hopefully most distros will soon boot on it out of the box and most things will work optimally. Don’t get worried by what you read; just watch until you feel confident that the remaining issues are not a problem for you.

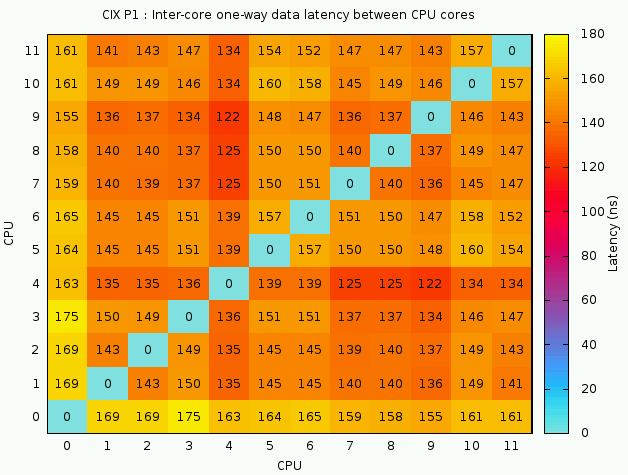

@tkaiser, the CPU core organization is definitely weird. Here are the cache-to-cache latencies between cores, for reads:

See that yellow border? Core 0 has ~10% higher latency to every other core than any other pair has. It definitely explains your previous observations.

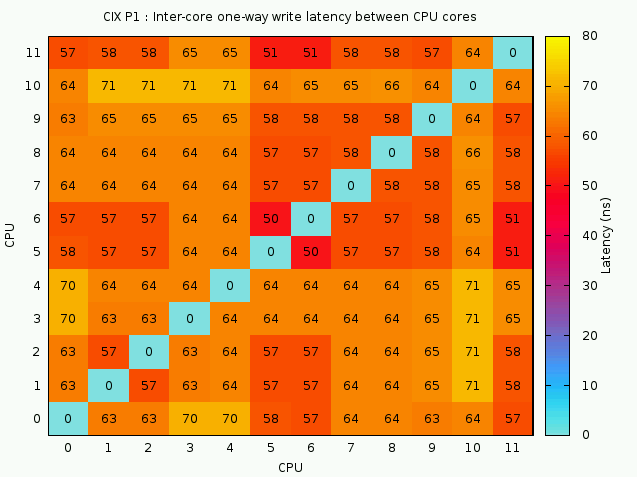

For writes, the latencies are excellent, but core 0 still seems to be an outlier (though less pronounced). It’s also visible that the A720 block is faster than the A520 one; at such small latencies, the large frequency ratio between them starts to count quite a bit:

CPUs 5 and 6 show the lowest latency to all the others and are even best paired together. CPU 11 comes immediately next. Then you see core 10 in the same range as core 0. I suspect the L3 connectivity is a bit funny. Maybe it’s some sort of incomplete torus, so that the cores at certain ends are not as well served.

Overall these results are good; they show that the topology is not perfectly uniform, but it’s hard to infer more for now.

Ah, something else: the A520s seem not to have an L2 (or maybe a tiny one?). Their memory latency jumps directly from L1 latency to L3 latency (4-32 kB, then 64 kB-8 MB).

Core 0 (A720):

```
$ taskset -c 0 ./ramlat -s -n 100 524288
size: 1x32 2x32 1x64 2x64 1xPTR 2xPTR 4xPTR 8xPTR
4k: 1.539 1.539 1.539 1.541 1.540 1.540 1.539 2.841
8k: 1.540 1.540 1.539 1.539 1.540 1.539 1.540 2.903
16k: 1.539 1.539 1.539 1.540 1.539 1.539 1.540 2.907
32k: 1.540 1.540 1.539 1.539 1.539 1.540 1.540 2.910
64k: 1.541 1.540 1.541 1.541 1.541 1.541 1.541 2.910 <-- 1.5ns
128k: 3.724 3.746 3.727 3.739 3.728 4.544 6.403 16.93
256k: 3.525 3.535 3.527 3.549 3.525 4.565 8.659 17.29
512k: 3.490 3.483 3.488 3.481 3.492 4.571 8.689 17.32 <-- 3.5ns
1024k: 12.12 13.91 12.82 13.90 12.82 16.61 23.78 35.86
2048k: 12.73 14.80 12.91 14.72 12.58 20.00 21.44 38.43 <-- 12ns
4096k: 20.91 17.81 21.04 17.75 21.26 23.09 22.78 35.79
8192k: 30.52 23.05 29.42 22.97 30.05 26.44 27.76 37.50 <-- 30ns
16384k: 54.21 27.02 36.52 26.65 37.43 35.77 33.39 46.09
32768k: 42.76 47.18 42.62 46.02 43.53 56.52 72.77 116.8
65536k: 51.22 66.22 50.97 62.08 51.27 63.01 90.07 137.1
131072k: 49.01 70.56 51.58 72.87 48.97 67.44 95.10 140.9
262144k: 43.89 64.96 44.74 63.73 45.83 66.52 91.89 138.6
524288k: 58.22 53.42 51.21 53.93 51.21 59.57 89.17 134.8
```

Now the A520:

```
$ taskset -c 1 ./ramlat -s -n 100 524288
size: 1x32 2x32 1x64 2x64 1xPTR 2xPTR 4xPTR 8xPTR
4k: 2.787 2.785 2.785 2.786 2.230 2.228 2.228 2.339
8k: 2.786 2.789 2.785 2.786 2.227 2.228 2.492 2.629
16k: 2.789 2.786 2.788 2.794 2.232 2.232 2.885 4.132
32k: 2.814 3.689 2.809 3.682 2.250 2.251 3.735 14.75 <-- 2.5ns
64k: 33.29 39.12 33.41 39.22 31.44 37.26 44.64 63.74
128k: 38.37 46.86 38.36 46.65 35.12 41.63 47.47 70.18
256k: 38.95 46.54 38.92 46.63 37.23 44.11 49.17 72.18
512k: 39.16 39.06 38.93 39.31 38.26 38.93 47.29 72.68
1024k: 39.52 39.11 38.88 39.06 38.51 39.57 48.23 73.14
2048k: 40.07 39.32 38.91 39.14 38.64 38.93 46.88 73.77
4096k: 42.42 40.80 40.31 40.63 39.93 39.98 48.04 72.04
8192k: 44.77 41.02 40.28 40.81 39.89 40.36 48.67 71.18 <-- ~40ns
16384k: 65.27 94.39 112.2 73.19 64.73 129.7 78.72 101.9
32768k: 195.8 216.2 214.4 221.0 219.2 220.9 222.2 302.6
65536k: 221.4 228.5 230.8 228.8 230.7 223.6 233.9 319.7
131072k: 227.6 226.0 227.8 226.2 227.1 223.9 235.8 325.8
262144k: 226.9 228.3 228.6 225.9 228.4 224.4 236.6 328.1
524288k: 229.8 228.4 229.0 227.8 229.7 224.0 237.7 329.1
```
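To make the A520’s jump from L1 straight to L3-like latency easier to spot, here is a minimal Python sketch that parses ramlat output in the format captured above (a "size:" header line, then one "<n>k: ..." row per size, with optional "<--" annotations) and flags consecutive sizes where one column grows by at least a given ratio. The choice of the 1xPTR column and the 1.5 threshold are my own arbitrary assumptions, not something ramlat defines.

```python
def parse_ramlat(text, column="1xPTR"):
    """Return [(size_kib, latency_ns), ...] for one column of ramlat output."""
    header = None
    rows = []
    for raw in text.splitlines():
        line = raw.split("<--")[0].strip()  # drop hand-written annotations
        if line.startswith("size:"):
            header = line.split()[1:]
            continue
        if header is None or ":" not in line:
            continue  # skips the command line and anything before the header
        size, values = line.split(":", 1)
        if not size.endswith("k"):
            continue
        fields = values.split()
        rows.append((int(size[:-1]), float(fields[header.index(column)])))
    return rows


def find_jumps(rows, ratio=1.5):
    """Flag consecutive sizes whose latency grows by at least `ratio`."""
    return [
        (s0, s1, l0, l1)
        for (s0, l0), (s1, l1) in zip(rows, rows[1:])
        if l1 / l0 >= ratio
    ]


# Excerpt of the A520 table above
a520 = """size: 1x32 2x32 1x64 2x64 1xPTR 2xPTR 4xPTR 8xPTR
16k: 2.789 2.786 2.788 2.794 2.232 2.232 2.885 4.132
32k: 2.814 3.689 2.809 3.682 2.250 2.251 3.735 14.75 <-- 2.5ns
64k: 33.29 39.12 33.41 39.22 31.44 37.26 44.64 63.74
128k: 38.37 46.86 38.36 46.65 35.12 41.63 47.47 70.18
"""

for s0, s1, l0, l1 in find_jumps(parse_ramlat(a520)):
    print(f"{s0}k -> {s1}k: {l0} ns -> {l1} ns")
```

Fed with the full A720 table, the same code also reports a 64k -> 128k step and a 512k -> 1024k step, i.e. the intermediate L2 plateau that the A520 seems to lack.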

That’s all for tonight.

Edit: I forgot to mention that I killed cix-audio.sh and GNOME to avoid measurement noise, since they were quite active, as already reported.

So it’s already at the cache layer and not just accessing DRAM?

Yes, that’s exactly it. But it could still be caused by some software init code. Some chips, for example, support configuring per-core priorities; maybe there’s something like this here. Or maybe it’s related to the way the CPUs are reordered.

I also checked OpenSSL RSA performance, which is both relevant to my use cases and a good indication of how advanced the CPU design is. First, here’s what I got on rock5b:

A76:

```
$ taskset -c 4 openssl speed rsa2048
                  sign    verify    sign/s verify/s
rsa 2048 bits 0.003765s 0.000103s    265.6   9713.8
```

A55:

```
$ taskset -c 0 openssl speed rsa2048
                  sign    verify    sign/s verify/s
rsa 2048 bits 0.005938s 0.000158s    168.4   6320.9
```

Now on Orion O6, default openssl (3.0.1):

A720 big (core 0 or 11):

```
$ taskset -c 0 openssl speed rsa2048
                  sign    verify    sign/s verify/s
rsa 2048 bits 0.003951s 0.000074s    253.1  13424.6
```

A520 (core 1):

```
$ taskset -c 1 openssl speed rsa2048
                  sign    verify    sign/s verify/s
rsa 2048 bits 0.012525s 0.000323s     79.8   3098.4
```

All of this is quite shocking: by default it’s slower than rock5b. That might be a bug in this version, as it’s totally outdated (3.0.1), and openssl-3.0 is known to suffer from major performance issues. So I rebuilt openssl-3.0.15, which is the up-to-date, must-use version of the 3.0 branch, and now it’s way better:

A720 (big):

```
$ LD_LIBRARY_PATH=$PWD taskset -c 11 ./apps/openssl speed rsa2048
                  sign    verify    sign/s verify/s
rsa 2048 bits 0.001136s 0.000029s    880.0  34618.0   ## 3.3x faster than rock5's A76
```

A520:

```
$ LD_LIBRARY_PATH=$PWD taskset -c 1 ./apps/openssl speed rsa2048
                  sign    verify    sign/s verify/s
rsa 2048 bits 0.005395s 0.000144s    185.3   6935.7   ## 10% faster than rock5's A55
```

OK, now it’s much better: it went from quite poor to excellent!
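The speedups in the annotations can be rechecked from the sign/s column alone (sign/s is just the reciprocal of the time per signature, e.g. 1 / 0.001136 s ≈ 880). A trivial Python sketch; the dictionary labels are my own, the numbers are copied from the captures above:

```python
# sign/s figures copied from the openssl speed captures above
SIGN_PER_S = {
    "rock5b A76": 265.6,
    "rock5b A55": 168.4,
    "O6 A720, 3.0.1": 253.1,
    "O6 A520, 3.0.1": 79.8,
    "O6 A720, 3.0.15": 880.0,
    "O6 A520, 3.0.15": 185.3,
}


def speedup(new, old):
    """How many times more signatures per second `new` does than `old`."""
    return SIGN_PER_S[new] / SIGN_PER_S[old]


for new, old in [
    ("O6 A720, 3.0.15", "rock5b A76"),
    ("O6 A520, 3.0.15", "rock5b A55"),
    ("O6 A720, 3.0.15", "O6 A720, 3.0.1"),
    ("O6 A520, 3.0.15", "O6 A520, 3.0.1"),
]:
    print(f"{new} vs {old}: {speedup(new, old):.2f}x")
```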

One important difference I’m seeing is this:

stock openssl [EDIT: as reported by @tkaiser, that version is not in /usr/bin but in an alternate dir that is in the PATH; the one in /usr/bin is up to date and works fine]:

```
$ openssl version -b -v -p -c
OpenSSL 3.0.1 14 Dec 2021 (Library: OpenSSL 3.0.1 14 Dec 2021)
built on: Thu Jan 9 10:44:37 2025 UTC
platform: linux-aarch64
CPUINFO: N/A
```

rebuilt openssl:

```
$ LD_LIBRARY_PATH=$PWD ./apps/openssl version -b -v -p -c
OpenSSL 3.0.15 3 Sep 2024 (Library: OpenSSL 3.0.15 3 Sep 2024)
built on: Fri Jan 31 04:43:22 2025 UTC
platform: linux-aarch64
CPUINFO: OPENSSL_armcap=0xfd
```

The build options seem to be the same, so 3.0.1 likely had a bug that made it fail to detect the CPU correctly, causing all operations to fall back to generic, unoptimized code. I think it’s important to fix this in the final product, because openssl speed is definitely one of the metrics used to compare boards, and it would be too bad if this board compared unfavorably to others.
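To see which accelerated paths the library believes it can use, the OPENSSL_armcap value from the CPUINFO line can be decoded bit by bit. A minimal Python sketch; the bit positions below are as I recall them from OpenSSL 3.0’s crypto/arm_arch.h, so treat them as an assumption and check the header of the exact version you built:

```python
# Assumed bit layout of OPENSSL_armcap (from memory of OpenSSL 3.0's arm_arch.h;
# verify against your version's header before relying on it).
ARMCAP_BITS = {
    0: "NEON",
    1: "TICK",
    2: "AES",
    3: "SHA1",
    4: "SHA256",
    5: "PMULL",
    6: "SHA512",
    7: "CPUID",
}


def decode_armcap(value):
    """Return the feature names set in an OPENSSL_armcap value."""
    names = [name for bit, name in sorted(ARMCAP_BITS.items()) if value & (1 << bit)]
    unknown = value & ~sum(1 << bit for bit in ARMCAP_BITS)
    if unknown:
        names.append(f"unknown:0x{unknown:x}")  # bits not covered by the table
    return names


print(decode_armcap(0xfd))
```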

880 sign/s is very good at this frequency. My Skylake at 4.4 GHz gives me 2160, i.e. 491 sign/s per GHz. Here we’re at 338/GHz, which is impressive for an Arm platform.
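The per-GHz figures are a simple normalization by clock. A minimal Python sketch; it assumes the A720 runs at about 2.6 GHz (the top performance state visible in the energy model output later in this thread) and that throughput scales linearly with frequency, which is only roughly true:

```python
def per_ghz(ops_per_s, ghz):
    """Normalize a throughput figure by clock frequency."""
    return ops_per_s / ghz


skylake = per_ghz(2160, 4.4)
a720 = per_ghz(880.0, 2.6)  # assumption: A720 running at ~2.6 GHz
print(f"Skylake {skylake:.0f}/GHz, A720 {a720:.0f}/GHz "
      f"({a720 / skylake:.0%} of Skylake per clock)")
```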

I’m using the Radxa-supplied FrankenDebian 12 image, and here it looks quite different:

```
sh-5.2# /usr/bin/openssl version -b -v -p -c
OpenSSL 3.0.15 3 Sep 2024 (Library: OpenSSL 3.0.15 3 Sep 2024)
built on: Sun Oct 27 14:16:28 2024 UTC
platform: debian-arm64
CPUINFO: OPENSSL_armcap=0xfd
```

But the openssl binary lying around below /usr/share/cix/bin/ is the outdated 3.0.1 version, though it uses the 3.0.15 libs:

```
sh-5.2# /usr/share/cix/bin/openssl version -b -v -p -c
OpenSSL 3.0.1 14 Dec 2021 (Library: OpenSSL 3.0.15 3 Sep 2024)
built on: Sun Oct 27 14:16:28 2024 UTC
platform: debian-arm64
CPUINFO: OPENSSL_armcap=0xfd
```

Interesting, because I also used their Debian 12 image. I picked the USB image from https://dl.radxa.com/orion/o6/images/debian/.

Oh, now I’m starting to understand. Indeed, the correct version is in /usr/bin/, but both the PATH and the LD_LIBRARY_PATH are wrong:

```
willy@orion-o6:~$ /usr/bin/openssl version
/usr/bin/openssl: /usr/share/cix/lib/libcrypto.so.3: version `OPENSSL_3.0.9' not found (required by /usr/bin/openssl)
/usr/bin/openssl: /usr/share/cix/lib/libcrypto.so.3: version `OPENSSL_3.0.3' not found (required by /usr/bin/openssl)
willy@orion-o6:~$ echo $LD_LIBRARY_PATH
/usr/share/cix/lib
```
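A quick way to spot this kind of shadowing is to list every match along PATH (for the binary) and LD_LIBRARY_PATH (for libcrypto.so.3) in lookup order, similar to what which -a does for executables. A minimal Python sketch; find_all is a hypothetical helper name, and it only looks at the directories listed in those variables, not at the dynamic loader’s default search paths or ld.so.cache:

```python
import os


def find_all(name, search_path=None, executable=True):
    """List every `name` found along a colon-separated search path, in lookup order."""
    if search_path is None:
        search_path = os.environ.get("PATH", "")
    hits = []
    for directory in search_path.split(os.pathsep):
        if not directory:
            continue  # empty entries (e.g. "a::b") are skipped here for simplicity
        candidate = os.path.join(directory, name)
        if not os.path.isfile(candidate):
            continue
        if executable and not os.access(candidate, os.X_OK):
            continue
        hits.append(candidate)
    return hits


if __name__ == "__main__":
    print("openssl:", find_all("openssl"))
    print("libcrypto.so.3:", find_all("libcrypto.so.3",
                                      os.environ.get("LD_LIBRARY_PATH", ""),
                                      executable=False))
```

If the first openssl hit is not /usr/bin/openssl, or LD_LIBRARY_PATH yields a libcrypto.so.3 outside the distro’s library directories, that’s the mismatch shown above.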

Now quickly fixed this way:

```
$ sudo mv /usr/share/cix/bin/{,cix.}openssl
$ sudo mv /usr/share/cix/lib/{,cix.}libssl.so.3
$ sudo mv /usr/share/cix/lib/{,cix.}libcrypto.so.3
$ openssl version -b -p -v -c
OpenSSL 3.0.15 3 Sep 2024 (Library: OpenSSL 3.0.15 3 Sep 2024)
built on: Sun Oct 27 14:16:28 2024 UTC
platform: debian-arm64
CPUINFO: OPENSSL_armcap=0xfd
```

Now fixed, thank you!

If you’re looking for a seamless out-of-the-box experience, or want to use the Orion as your daily driver, then it isn’t ready for that. The CD8180 is new, so there is a lot to discover about its capabilities/limitations. If you commit to purchasing one at this point, you’ll be on that journey and will have to live with the consequences. Unfortunately, even the debug party may not reveal all the limitations. As an example, for the RK3588, only once the TRM was released could we determine the limitations of some of the IP blocks; for me it was PCIe and the NPU. The CD8180 TRM is planned for release in Q2 2025. I guess it’s easy to get caught up in the fever of wanting something new to play with.

While @hrw, being an experienced developer, may even be willing to join this journey, I wonder how many people ordered the O6, are eagerly waiting for it to ship in February and think it’s a finished product. Based on the state of the software side of things, I suspect it’s a loooong journey until the O6 is ready for consumers, while I see the board being advertised as ‘immediately ready’.

I guess we’ll see a lot of angry people and lots of complaints within the next months here…

Regarding memory speed, it’s quite good (basically twice that of Rock5, which is not surprising since the memory interface is twice as wide):

- 10 GB/s per A520 core, with a limit of 40 GB/s total for the 4.

- 25-28 GB/s per A720 core (depending on frequency); 40 GB/s for 2, 45 GB/s for 3, 43 GB/s for 4, and it decreases a bit beyond that, converging to 40 GB/s.

Raw results:

A520 alone:

```
$ for c in 1 1,2 1,2,3 1,2,3,4; do echo $c: $(taskset -c $c ./rambw 200 3);done
1: 10871 10868 10891
1,2: 21050 21489 21491
1,2,3: 31179 31612 31655
1,2,3,4: 40232 40445 40415
```

A720 alone:

```
$ for c in 0 0,11 0,10,11 0,9,10,11; do echo $c: $(taskset -c $c ./rambw 200 3);done
0: 26912 27550 27523
0,11: 40291 40605 40698
0,10,11: 45128 45385 45307
0,9,10,11: 43622 43587 43592
```
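To put numbers on how well bandwidth scales with core count, here is a small Python sketch computing the mean of the three runs and the efficiency relative to perfect linear scaling of the single-core figure. I’m assuming rambw reports MB/s, which matches the GB/s figures quoted above.

```python
from statistics import mean

# rambw results copied from the captures above (three runs per core count)
RUNS = {
    "A520": {
        1: [10871, 10868, 10891],
        2: [21050, 21489, 21491],
        3: [31179, 31612, 31655],
        4: [40232, 40445, 40415],
    },
    "A720": {
        1: [26912, 27550, 27523],
        2: [40291, 40605, 40698],
        3: [45128, 45385, 45307],
        4: [43622, 43587, 43592],
    },
}


def scaling(series):
    """Map core count -> (mean bandwidth, fraction of n x single-core bandwidth)."""
    single = mean(series[1])
    return {n: (mean(v), mean(v) / (n * single)) for n, v in sorted(series.items())}


for cluster, series in RUNS.items():
    for n, (bw, eff) in scaling(series).items():
        print(f"{cluster} x{n}: {bw / 1000:.1f} GB/s, {eff:.0%} of linear")
```

The A520 cluster stays above 90% of linear up to 4 cores, while the A720s gain little beyond two.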

I couldn’t have put it better myself… Hopefully I’ll get a chance to snag one on eBay.

While digging a bit below /sys/kernel/debug, I found that I was completely wrong, since the CPU cores do have those properties set (and sbc-bench needs an adjustment, since it’s reporting nonsense).

Even the ‘weird’ setup with cpu0 and cpu11 being members of the same cluster is reflected in the energy-aware scheduling data:

```
sh-5.2# cat /sys/kernel/debug/energy_model/cpu?/cpus
0,11
1-4
5-6
7-8
9-10
```
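These cpus files use the kernel’s usual CPU list format (comma-separated CPUs and ranges). A small Python sketch that parses them and checks that the perf domains shown above cover all 12 cores without overlap; it does not handle the rarer stride syntax (e.g. 0-7:2) that some kernel inputs accept.

```python
def parse_cpulist(text):
    """Parse a kernel CPU list such as "0,11" or "1-4" into a set of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = (int(x) for x in part.split("-"))
            cpus.update(range(first, last + 1))
        else:
            cpus.add(int(part))
    return cpus


# Values copied from the energy_model output above
domains = [parse_cpulist(s) for s in ["0,11", "1-4", "5-6", "7-8", "9-10"]]
all_cpus = set().union(*domains)
assert sum(len(d) for d in domains) == len(all_cpus), "perf domains overlap"
print(sorted(all_cpus) == list(range(12)))
```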

```
sh-5.2# grep . /sys/kernel/debug/energy_model/cpu0/ps\:799858/*
/sys/kernel/debug/energy_model/cpu0/ps:799858/cost:1690412
/sys/kernel/debug/energy_model/cpu0/ps:799858/frequency:799858
/sys/kernel/debug/energy_model/cpu0/ps:799858/inefficient:0
/sys/kernel/debug/energy_model/cpu0/ps:799858/power:520000
sh-5.2# grep . /sys/kernel/debug/energy_model/cpu0/ps\:2600173/*
/sys/kernel/debug/energy_model/cpu0/ps:2600173/cost:7640000
/sys/kernel/debug/energy_model/cpu0/ps:2600173/frequency:2600173
/sys/kernel/debug/energy_model/cpu0/ps:2600173/inefficient:0
/sys/kernel/debug/energy_model/cpu0/ps:2600173/power:7640000
```

According to these properties, the fastest A720 cores consume almost 15 times more power at 2.6 GHz than at 800 MHz (7640000 vs. 520000).
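The cost values also fit the energy model formula as I understand it from the mainline kernel (kernel/power/energy_model.c, em_compute_costs): cost = power × fmax / f, with integer division. The BSP kernel could differ, but both performance states above match exactly. Since cost is proportional to power divided by frequency (energy per cycle), it also shows that 2.6 GHz costs roughly 4.5 times more energy per unit of work than 800 MHz, while drawing about 14.7 times the power. A minimal Python sketch:

```python
# Performance states of cpu0 copied from the debugfs output above: frequency -> power
OPPS = {799858: 520000, 2600173: 7640000}
FMAX = max(OPPS)


def em_cost(power, freq, fmax=FMAX):
    """Energy-model cost as assumed here: power * fmax / freq (integer division)."""
    return power * fmax // freq


for freq, power in sorted(OPPS.items()):
    print(freq, em_cost(power, freq))

power_ratio = OPPS[FMAX] / OPPS[799858]
energy_ratio = em_cost(OPPS[FMAX], FMAX) / em_cost(OPPS[799858], 799858)
print(f"power x{power_ratio:.1f}, energy per cycle x{energy_ratio:.1f}")
```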

Edit 1: Looking at what we get regarding USB-C and USB power delivery:

grep . /sys/class/typec/port*/* 2>/dev/null --> https://0x0.st/88Yj.txt

Even with the BSP kernel that’s not much: just host vs. device and sink vs. source (my O6 is powered via port1 from a USB PD-capable power brick, and a USB SSD, from which the board booted, is connected to port0). /sys/class/usb_power_delivery is empty and /sys/class/usb_role/ only shows host vs. device.

There’s only one udc entry, for port 1 (cdnsp-gadget, confirming that not only the PCIe but also the USB IP is licensed from Cadence):

grep -r . /sys/class/udc/90f0000.usb-controller/* 2>/dev/null --> https://0x0.st/88Y2.txt

Oh, great catch! Now at least we’re certain they’re in the same cluster, and that a rotation is very likely applied to the core numbers as enumerated.

No, I haven’t seen any such settings.

There’s setpci, though, to renegotiate the PCIe speed. I use this script in production to reduce the power consumption of a bunch of DC SSDs when performance isn’t needed (which is the case for approx. 16 hours a day).

Edit 1: Since my O6 is equipped with a Samsung SSD, this works flawlessly on the O6 after a slight modification adjusting the bus address (no 0000: prefix):

```
root@orion-o6:~# lspci -vv -s '0000:91:00.0' | grep LnkSta:
LnkSta: Speed 16GT/s, Width x4
root@orion-o6:~# set-samsung-speed.sh 3
root@orion-o6:~# lspci -vv -s '0000:91:00.0' | grep LnkSta:
LnkSta: Speed 8GT/s (downgraded), Width x4
root@orion-o6:~# set-samsung-speed.sh 1
root@orion-o6:~# lspci -vv -s '0000:91:00.0' | grep LnkSta:
LnkSta: Speed 2.5GT/s (downgraded), Width x4
```

Script is here: https://gist.github.com/ThomasKaiser/2c2bd04539a64a906f5520432a651d1d and of course the awk search pattern needs to be adapted for a graphics card or whatever else.
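When scripting around this, the LnkSta line can be turned back into a PCIe generation number. A minimal Python sketch, assuming the line format shown in the captures above (some pciutils versions append extra text after the width, which the regular expression simply ignores); parse_lnksta is a hypothetical helper:

```python
import re

# Transfer rate as printed by lspci -> PCIe generation
GTS_TO_GEN = {"2.5": 1, "5": 2, "8": 3, "16": 4, "32": 5, "64": 6}

LNKSTA_RE = re.compile(r"Speed\s+([\d.]+)GT/s(\s+\(downgraded\))?,\s+Width\s+x(\d+)")


def parse_lnksta(line):
    """Extract generation, width and downgrade flag from an lspci LnkSta line."""
    m = LNKSTA_RE.search(line)
    if m is None:
        raise ValueError(f"not a LnkSta line: {line!r}")
    speed, downgraded, width = m.groups()
    return {"gen": GTS_TO_GEN[speed], "width": int(width),
            "downgraded": downgraded is not None}


print(parse_lnksta("LnkSta: Speed 8GT/s (downgraded), Width x4"))
```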

Edit 2: For anyone interested in adjusting PCIe speeds on the O6, just use the generic set-pcie-speed script. It takes the bus address and the PCIe generation as its two parameters.

For example, pcie_set_speed.sh 0001:c1:00.0 1 sets the PCIe device in the x16 slot to Gen1.
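I haven’t checked that script’s exact implementation, but the usual setpci approach follows the PCIe spec: write the desired generation into Target Link Speed (bits 3:0 of Link Control 2, offset 0x30 in the PCI Express capability), then set Retrain Link (bit 5 of Link Control, offset 0x10) on the port above the device. A minimal Python sketch of just the register arithmetic (it doesn’t touch hardware; the helper names are hypothetical):

```python
LNKCTL2_TARGET_SPEED = 0x000F  # Link Control 2, bits 3:0 (1 = 2.5 GT/s ... 4 = 16 GT/s)
LNKCTL_RETRAIN_LINK = 0x0020   # Link Control, bit 5


def with_target_speed(lnkctl2, gen):
    """Return a Link Control 2 value with Target Link Speed set to `gen`."""
    if not 1 <= gen <= 6:
        raise ValueError("PCIe generation must be 1..6")
    return (lnkctl2 & ~LNKCTL2_TARGET_SPEED & 0xFFFF) | gen


def with_retrain(lnkctl):
    """Return a Link Control value with the Retrain Link bit set."""
    return (lnkctl | LNKCTL_RETRAIN_LINK) & 0xFFFF


print(hex(with_target_speed(0x0044, 1)), hex(with_retrain(0x0040)))
```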

To test, I added another RTL8126 NIC via an M.2-to-PCIe adapter:

lspci shows the bus address (bus enumeration changes dynamically; I know the new NIC is now on 0003 because I compared with the output from before, which showed 0001):

```
radxa@orion-o6:~$ lspci
0000:90:00.0 PCI bridge: Device 1f6c:0001
0000:91:00.0 Non-Volatile memory controller: Samsung Electronics Co Ltd NVMe SSD Controller S4LV008[Pascal]
0001:c0:00.0 PCI bridge: Device 1f6c:0001
0001:c1:00.0 Ethernet controller: Realtek Semiconductor Co., Ltd. Device 8126 (rev 01)
0002:00:00.0 PCI bridge: Device 1f6c:0001
0002:01:00.0 Ethernet controller: Realtek Semiconductor Co., Ltd. Device 8126 (rev 01)
0003:30:00.0 PCI bridge: Device 1f6c:0001
0003:31:00.0 Ethernet controller: Realtek Semiconductor Co., Ltd. Device 8126 (rev 01)
```

Next, we check the current speed (at most Gen3, since the RTL8126 is not Gen4-capable), then set it to Gen1 and re-check (this requires superuser privileges):

```
radxa@orion-o6:~$ sudo lspci -vv -s '0001:c1:00.0' 2>/dev/null | grep LnkSta:
LnkSta: Speed 8GT/s, Width x1
radxa@orion-o6:~$ sudo pcie_set_speed.sh 0001:c1:00.0 1
radxa@orion-o6:~$ sudo lspci -vv -s '0001:c1:00.0' 2>/dev/null | grep LnkSta:
LnkSta: Speed 2.5GT/s (downgraded), Width x1
```

Nice, thanks for sharing!

I’m realizing from your captures (and a local lspci -nnvv) that the LnkCap of the root bridge advertises x4, not x8, while the PCIe connector is supposed to be x8 (that’s even what the schematic indicates). I don’t know whether it’s a device tree limitation or something else, but I suspect the network tests I planned will be seriously limited if only 4 lanes are active for now. I’ll see this weekend. Maybe there’s some form of bifurcation and the BIOS changes the configuration when it detects a connected device.
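To get an idea of how much a x4 link would limit those tests, here is the theoretical per-direction line rate after encoding overhead (8b/10b for Gen1/2, 128b/130b for Gen3 and later). A minimal Python sketch; it assumes the slot runs at Gen4 and ignores TLP/DLLP protocol overhead, so real throughput will be noticeably lower:

```python
# PCIe generation -> (transfer rate in GT/s per lane, line-encoding efficiency)
PCIE_GENS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def link_gbps(gen, lanes):
    """Theoretical per-direction bandwidth in Gbit/s, before protocol overhead."""
    rate, efficiency = PCIE_GENS[gen]
    return rate * efficiency * lanes


for lanes in (4, 8):
    print(f"Gen4 x{lanes}: {link_gbps(4, lanes):.1f} Gbit/s")
```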

There could also be situations where more lanes are used only when needed.

I had a system where the graphics card started at low speed with few lanes and went up to the maximum when heavily used.