I'm not sure I would advise buying the tested NVMe drives. 2 of 3 failed.

KingSpec 128GB M.2 NVMe not found Debian+Ubuntu

What brands and models have you tried? Could you list them here? It might help others.

Sorry I was not clear. I was referring to the ones you guys tested. 2 of your 3 failed to perform correctly.

I am interested in using NVMe on the RockPi4B.

- Can we boot from it?

- If we can't boot from it, can you provide instructions to create a boot microSD or eMMC and keep the file system on the NVMe?

- Is there some type of HAT or adapter board to secure it in place?

I meant the ones that are tested and found ok. But the KingSpec is still in the list.

- Known working -

- Samsung EVO series (M key, NVMe), works well on ROCK Pi 4, fast speed

- KingSpec NVMe M.2 2280 (M key, NVMe), works well

- MaxMemory NVMe M.2 128G (B&M key, NVMe), works well

- Known not working -

- HP EX900 (B&M key, NVMe), detection failed on ROCK Pi 4, works with PC.

I tried the KingSpec 128GB in Armbian and also had no luck.
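When a drive is "not found", it can help to confirm first whether the kernel created any NVMe block device at all. The following is only a rough sketch of such a check: the function name is made up for illustration, and the directory argument exists only so the function can be tried against a test tree instead of the real /dev.

```shell
#!/bin/sh
# List NVMe namespaces (block devices such as nvme0n1) under a device directory.
# The directory is a parameter for testing; on a real board it is simply /dev.
list_nvme_namespaces() {
    dev_dir="${1:-/dev}"
    for node in "$dev_dir"/nvme[0-9]*n[0-9]*; do
        # Skip the unexpanded glob when nothing matches.
        [ -e "$node" ] || continue
        name="$(basename "$node")"
        # Skip partition nodes such as nvme0n1p1.
        case "$name" in *p[0-9]*) continue ;; esac
        echo "$name"
    done
}

if [ -z "$(list_nvme_namespaces)" ]; then
    echo "no NVMe namespace found; try: dmesg | grep -i -e nvme -e pcie"
else
    list_nvme_namespaces
fi
```

If nothing is listed, the kernel log is usually the next place to look, since a drive that fails PCIe link training never reaches the NVMe driver.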

@TheDude Q3 :

“We have made a M.2 extended board to put the M.2 SSD on top of ROCK Pi4. It looks like this. See picture here and here.”

https://wiki.radxa.com/Rockpi4/FAQs

Thanks. I knew I saw something like that somewhere.

Allnet China is out of stock, and the Austrian and German sites won't let me register to purchase.

I was also looking at that big heatsink, but it's also out of stock. Where else can I purchase it?

The big heatsink will start shipping after next Wednesday.

I bought a GoodRAM 120GB M.2 2280 and it is not working on the Armbian image. Is M.2 support included in Armbian's kernel?

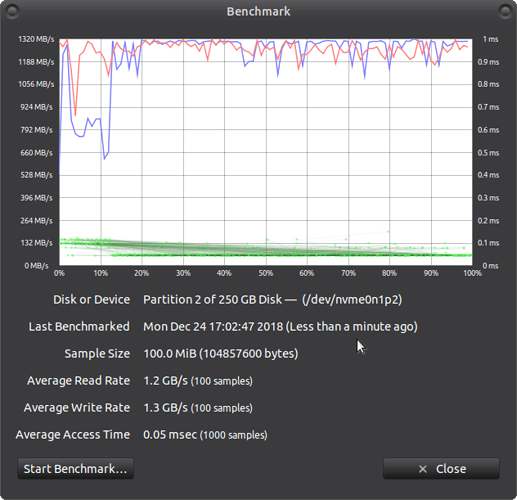

Samsung EVO 970 NVMe M.2 250GB installed and seems to be working well. $77 delivered.

Booting from microSD, with the filesystem on NVMe.
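The fio commands behind the results that follow were not posted. As a hedged reconstruction, the options below are read off the job header lines in the output (rw, bs, ioengine, iodepth, four jobs, roughly 30 s runs, a 10 GiB file). The target path, `--direct=1`, and the `--size` used for the random passes are assumptions, so treat this as a starting point rather than the original invocation.

```shell
#!/bin/sh
# Hypothetical reconstruction of the benchmark commands from the fio job headers.
# TARGET is a placeholder path on the NVMe filesystem; adjust before running.
TARGET="${TARGET:-/mnt/nvme/fio-test.bin}"

fio_commands() {
    # Sequential write pass: one job, 4 MiB blocks, queue depth 200.
    echo "fio --name=writefile --filename=$TARGET --size=10G --rw=write --bs=4M --ioengine=libaio --iodepth=200 --direct=1"
    # Random read and write passes: four jobs, 4 KiB blocks, queue depth 128, 30 s each.
    for mode in randread randwrite; do
        echo "fio --name=benchmark --filename=$TARGET --size=10G --rw=$mode --bs=4k --ioengine=libaio --iodepth=128 --numjobs=4 --runtime=30 --time_based --group_reporting --direct=1"
    done
}

# Print the commands for review; run them by hand once TARGET is correct.
fio_commands
```

Printing instead of executing is deliberate, because the write passes overwrite whatever TARGET points at.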

------------------------------------------------------------------------------------------------------------------------------------

FULL WRITE PASS

------------------------------------------------------------------------------------------------------------------------------------

writefile: (g=0): rw=write, bs=(R) 4096KiB-4096KiB, (W) 4096KiB-4096KiB, (T) 4096KiB-4096KiB, ioengine=libaio, iodepth=200

fio-3.1

Starting 1 process

Jobs: 1 (f=0): [f(1)][100.0%][r=0KiB/s,w=1468MiB/s][r=0,w=367 IOPS][eta 00m:00s]

writefile: (groupid=0, jobs=1): err= 0: pid=2343: Sat Dec 22 16:03:24 2018

write: IOPS=198, BW=792MiB/s (831MB/s)(10.0GiB/12923msec)

slat (usec): min=719, max=8530, avg=2995.68, stdev=847.41

clat (msec): min=78, max=1076, avg=970.65, stdev=140.19

lat (msec): min=80, max=1079, avg=973.65, stdev=140.15

clat percentiles (msec):

| 1.00th=[ 203], 5.00th=[ 718], 10.00th=[ 995], 20.00th=[ 1003],

| 30.00th=[ 1003], 40.00th=[ 1003], 50.00th=[ 1003], 60.00th=[ 1003],

| 70.00th=[ 1003], 80.00th=[ 1003], 90.00th=[ 1011], 95.00th=[ 1011],

| 99.00th=[ 1020], 99.50th=[ 1028], 99.90th=[ 1070], 99.95th=[ 1070],

| 99.99th=[ 1083]

bw ( KiB/s): min=114688, max=825675, per=96.36%, avg=781855.67, stdev=142262.46, samples=24

iops : min= 28, max= 201, avg=190.83, stdev=34.72, samples=24

lat (msec) : 100=0.20%, 250=1.17%, 500=1.91%, 750=1.95%, 1000=10.82%

lat (msec) : 2000=83.95%

cpu : usr=39.53%, sys=13.87%, ctx=3292, majf=0, minf=22

IO depths : 1=0.1%, 2=0.1%, 4=0.2%, 8=0.3%, 16=0.6%, 32=1.2%, >=64=97.5%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwt: total=0,2560,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=200

Run status group 0 (all jobs):

WRITE: bw=792MiB/s (831MB/s), 792MiB/s-792MiB/s (831MB/s-831MB/s), io=10.0GiB (10.7GB), run=12923-12923msec

Disk stats (read/write):

nvme0n1: ios=24/10340, merge=0/0, ticks=80/813176, in_queue=813520, util=90.69%

------------------------------------------------------------------------------------------------------------------------------------

RAND READ PASS

------------------------------------------------------------------------------------------------------------------------------------

benchmark: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

...

fio-3.1

Starting 4 processes

Jobs: 4 (f=4): [r(4)][100.0%][r=664MiB/s,w=0KiB/s][r=170k,w=0 IOPS][eta 00m:00s]

benchmark: (groupid=0, jobs=4): err= 0: pid=2388: Sat Dec 22 16:03:55 2018

read: IOPS=167k, BW=653MiB/s (685MB/s)(19.1GiB/30001msec)

slat (usec): min=6, max=28457, avg=13.17, stdev=41.48

clat (usec): min=67, max=44178, avg=3041.52, stdev=1214.98

lat (usec): min=82, max=44203, avg=3055.53, stdev=1216.38

clat percentiles (usec):

| 1.00th=[ 1975], 5.00th=[ 2073], 10.00th=[ 2147], 20.00th=[ 2278],

| 30.00th=[ 2507], 40.00th=[ 2769], 50.00th=[ 2900], 60.00th=[ 3097],

| 70.00th=[ 3195], 80.00th=[ 3261], 90.00th=[ 3458], 95.00th=[ 4817],

| 99.00th=[ 8717], 99.50th=[10159], 99.90th=[12780], 99.95th=[15533],

| 99.99th=[28181]

bw ( KiB/s): min=97653, max=205186, per=25.15%, avg=168204.46, stdev=13527.72, samples=239

iops : min=24413, max=51296, avg=42050.92, stdev=3381.90, samples=239

lat (usec) : 100=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=1.91%, 4=91.08%, 10=6.44%, 20=0.52%, 50=0.02%

cpu : usr=28.59%, sys=58.64%, ctx=238159, majf=0, minf=580

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwt: total=5017168,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):

READ: bw=653MiB/s (685MB/s), 653MiB/s-653MiB/s (685MB/s-685MB/s), io=19.1GiB (20.6GB), run=30001-30001msec

Disk stats (read/write):

nvme0n1: ios=4993296/151, merge=0/0, ticks=7113608/20, in_queue=7271444, util=100.00%

------------------------------------------------------------------------------------------------------------------------------------

RAND WRITE PASS

------------------------------------------------------------------------------------------------------------------------------------

benchmark: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=128

...

fio-3.1

Starting 4 processes

Jobs: 4 (f=4): [w(4)][100.0%][r=0KiB/s,w=309MiB/s][r=0,w=78.0k IOPS][eta 00m:00s]

benchmark: (groupid=0, jobs=4): err= 0: pid=2397: Sat Dec 22 16:04:26 2018

write: IOPS=141k, BW=550MiB/s (576MB/s)(16.1GiB/30007msec)

slat (usec): min=7, max=29844, avg=15.78, stdev=39.77

clat (usec): min=155, max=58346, avg=3615.78, stdev=1959.79

lat (usec): min=200, max=58356, avg=3632.55, stdev=1963.67

clat percentiles (usec):

| 1.00th=[ 2212], 5.00th=[ 2212], 10.00th=[ 2245], 20.00th=[ 2245],

| 30.00th=[ 2245], 40.00th=[ 2278], 50.00th=[ 3359], 60.00th=[ 3425],

| 70.00th=[ 3458], 80.00th=[ 5669], 90.00th=[ 6521], 95.00th=[ 6915],

| 99.00th=[ 7767], 99.50th=[ 8717], 99.90th=[18744], 99.95th=[32637],

| 99.99th=[45351]

bw ( KiB/s): min=72848, max=228256, per=25.06%, avg=141069.82, stdev=58131.44, samples=239

iops : min=18212, max=57064, avg=35267.34, stdev=14532.85, samples=239

lat (usec) : 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.04%, 4=76.87%, 10=22.70%, 20=0.30%, 50=0.07%

lat (msec) : 100=0.01%

cpu : usr=29.55%, sys=56.29%, ctx=50270, majf=0, minf=73

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwt: total=0,4222617,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):

WRITE: bw=550MiB/s (576MB/s), 550MiB/s-550MiB/s (576MB/s-576MB/s), io=16.1GiB (17.3GB), run=30007-30007msec

Disk stats (read/write):

nvme0n1: ios=49/4212455, merge=0/0, ticks=164/5898416, in_queue=6182236, util=100.00%

By default, the NVMe runs in gen1 mode for compatibility, so the speed is limited. You can enable gen2 mode by decompiling, editing, and recompiling the device tree:

fdtdump rockpi-4b-linux.dtb > /tmp/rockpi4.dts

Find the pcie@f8000000 section.

Change from

max-link-speed = <0x00000001>;

to

max-link-speed = <0x00000002>;

Now recompile the dtb:

dtc -I dts -O dtb /tmp/rockpi4.dts -o /tmp/rockpi4.dtb

Replace the original rockpi-4b-linux.dtb with the new file (back it up first), then reboot.
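For context on why the link speed setting matters, the per-lane arithmetic can be sketched as follows. PCIe gen1 signals at 2.5 GT/s and gen2 at 5 GT/s, both with 8b/10b encoding, which gives 250 MB/s and 500 MB/s per lane before protocol overhead. The lane count of 4 in the example is an assumption about the ROCK Pi 4 M.2 slot, not something stated in this thread.

```shell
#!/bin/sh
# Theoretical PCIe ceiling in MB/s for a gen1 or gen2 link.
# Raw rate in MT/s, times 8/10 for 8b/10b encoding, divided by 8 bits per byte.
pcie_mb_per_s() {
    gen="$1"
    lanes="$2"
    case "$gen" in
        1) mtps=2500 ;;
        2) mtps=5000 ;;
        *) echo "unsupported generation: $gen" >&2; return 1 ;;
    esac
    echo $(( mtps * 8 / 10 / 8 * lanes ))
}

# Lane count 4 is an assumption (see the note above).
echo "gen1 x4: $(pcie_mb_per_s 1 4) MB/s"
echo "gen2 x4: $(pcie_mb_per_s 2 4) MB/s"
```

Under that assumption, the 831 MB/s sequential write reported earlier sits just below the gen1 ceiling, which is consistent with the link rather than the drive being the limit.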

Thanks Jack

That's a pretty big increase.

------------------------------------------------------------------------------------------------------------------------------------

FULL WRITE PASS

------------------------------------------------------------------------------------------------------------------------------------

writefile: (g=0): rw=write, bs=(R) 4096KiB-4096KiB, (W) 4096KiB-4096KiB, (T) 4096KiB-4096KiB, ioengine=libaio, iodepth=200

fio-3.1

Starting 1 process

writefile: Laying out IO file (1 file / 10240MiB)

Jobs: 1 (f=1): [W(1)][88.9%][r=0KiB/s,w=1390MiB/s][r=0,w=347 IOPS][eta 00m:01s]

writefile: (groupid=0, jobs=1): err= 0: pid=1777: Mon Dec 24 16:43:26 2018

write: IOPS=346, BW=1384MiB/s (1452MB/s)(10.0GiB/7397msec)

slat (usec): min=955, max=36941, avg=1049.63, stdev=733.97

clat (msec): min=6, max=613, avg=551.03, stdev=89.82

lat (msec): min=7, max=614, avg=552.08, stdev=89.83

clat percentiles (msec):

| 1.00th=[ 79], 5.00th=[ 368], 10.00th=[ 558], 20.00th=[ 567],

| 30.00th=[ 567], 40.00th=[ 567], 50.00th=[ 567], 60.00th=[ 575],

| 70.00th=[ 575], 80.00th=[ 575], 90.00th=[ 584], 95.00th=[ 609],

| 99.00th=[ 617], 99.50th=[ 617], 99.90th=[ 617], 99.95th=[ 617],

| 99.99th=[ 617]

bw ( MiB/s): min= 1194, max= 1426, per=99.10%, avg=1371.84, stdev=64.89, samples=13

iops : min= 298, max= 356, avg=342.69, stdev=16.30, samples=13

lat (msec) : 10=0.08%, 20=0.12%, 50=0.43%, 100=0.66%, 250=2.07%

lat (msec) : 500=3.44%, 750=93.20%

cpu : usr=56.41%, sys=32.29%, ctx=2551, majf=0, minf=21

IO depths : 1=0.1%, 2=0.1%, 4=0.2%, 8=0.3%, 16=0.6%, 32=1.2%, >=64=97.5%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwt: total=0,2560,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=200

Run status group 0 (all jobs):

WRITE: bw=1384MiB/s (1452MB/s), 1384MiB/s-1384MiB/s (1452MB/s-1452MB/s), io=10.0GiB (10.7GB), run=7397-7397msec

Disk stats (read/write):

nvme0n1: ios=0/10367, merge=0/0, ticks=0/84952, in_queue=84948, util=85.55%

------------------------------------------------------------------------------------------------------------------------------------

RAND READ PASS

------------------------------------------------------------------------------------------------------------------------------------

benchmark: (g=0): rw=randread, bs=(R) 32.0KiB-32.0KiB, (W) 32.0KiB-32.0KiB, (T) 32.0KiB-32.0KiB, ioengine=libaio, iodepth=128

...

fio-3.1

Starting 4 processes

Jobs: 4 (f=4): [r(4)][100.0%][r=967MiB/s,w=0KiB/s][r=30.9k,w=0 IOPS][eta 00m:00s]

benchmark: (groupid=0, jobs=4): err= 0: pid=1792: Mon Dec 24 16:43:57 2018

read: IOPS=30.2k, BW=944MiB/s (990MB/s)(27.7GiB/30002msec)

slat (usec): min=15, max=9778, avg=125.19, stdev=100.40

clat (usec): min=301, max=37557, avg=16798.40, stdev=2704.00

lat (usec): min=355, max=37832, avg=16924.36, stdev=2722.99

clat percentiles (usec):

| 1.00th=[11338], 5.00th=[12387], 10.00th=[13435], 20.00th=[14353],

| 30.00th=[15008], 40.00th=[15795], 50.00th=[16909], 60.00th=[17957],

| 70.00th=[18482], 80.00th=[19006], 90.00th=[20055], 95.00th=[20841],

| 99.00th=[23725], 99.50th=[25035], 99.90th=[26870], 99.95th=[27395],

| 99.99th=[28967]

bw ( KiB/s): min=172544, max=304578, per=25.02%, avg=241929.63, stdev=25099.97, samples=240

iops : min= 5392, max= 9518, avg=7560.12, stdev=784.36, samples=240

lat (usec) : 500=0.01%, 750=0.01%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.07%, 20=90.32%, 50=9.60%

cpu : usr=4.86%, sys=30.53%, ctx=771047, majf=0, minf=4169

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwt: total=906608,0,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):

READ: bw=944MiB/s (990MB/s), 944MiB/s-944MiB/s (990MB/s-990MB/s), io=27.7GiB (29.7GB), run=30002-30002msec

Disk stats (read/write):

nvme0n1: ios=903426/235, merge=0/0, ticks=980104/188, in_queue=990116, util=100.00%

------------------------------------------------------------------------------------------------------------------------------------

RAND WRITE PASS

------------------------------------------------------------------------------------------------------------------------------------

benchmark: (g=0): rw=randwrite, bs=(R) 32.0KiB-32.0KiB, (W) 32.0KiB-32.0KiB, (T) 32.0KiB-32.0KiB, ioengine=libaio, iodepth=128

...

fio-3.1

Starting 4 processes

Jobs: 4 (f=4): [w(4)][100.0%][r=0KiB/s,w=317MiB/s][r=0,w=10.2k IOPS][eta 00m:00s]

benchmark: (groupid=0, jobs=4): err= 0: pid=1800: Mon Dec 24 16:44:28 2018

write: IOPS=20.6k, BW=643MiB/s (674MB/s)(18.8GiB/30005msec)

slat (usec): min=19, max=45426, avg=186.90, stdev=394.09

clat (msec): min=2, max=105, avg=24.69, stdev=19.49

lat (msec): min=3, max=106, avg=24.88, stdev=19.64

clat percentiles (usec):

| 1.00th=[ 8291], 5.00th=[ 8848], 10.00th=[ 9110], 20.00th=[ 9503],

| 30.00th=[10159], 40.00th=[12125], 50.00th=[12911], 60.00th=[13960],

| 70.00th=[41681], 80.00th=[49021], 90.00th=[55837], 95.00th=[58459],

| 99.00th=[63701], 99.50th=[70779], 99.90th=[85459], 99.95th=[89654],

| 99.99th=[96994]

bw ( KiB/s): min=68369, max=447353, per=25.06%, avg=164956.83, stdev=132069.05, samples=240

iops : min= 2136, max=13979, avg=5154.59, stdev=4127.13, samples=240

lat (msec) : 4=0.01%, 10=28.40%, 20=37.59%, 50=15.06%, 100=18.96%

lat (msec) : 250=0.01%

cpu : usr=6.27%, sys=19.83%, ctx=479319, majf=0, minf=82

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwt: total=0,617292,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):

WRITE: bw=643MiB/s (674MB/s), 643MiB/s-643MiB/s (674MB/s-674MB/s), io=18.8GiB (20.2GB), run=30005-30005msec

Disk stats (read/write):

nvme0n1: ios=0/615562, merge=0/0, ticks=0/985784, in_queue=987744, util=100.00%

------------------------------------------------------------------------------------------------------------------------------------
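To put a number on the increase, only the sequential write passes are directly comparable: both runs use 4096KiB blocks at iodepth 200. The random passes switched from 4096B blocks in the first run to 32.0KiB blocks in the second, so their IOPS figures should not be compared one to one. A small sketch of the ratio, with a helper name chosen just for illustration:

```shell
#!/bin/sh
# Ratio of two throughput figures, rounded to two decimals.
speedup() {
    awk -v before="$1" -v after="$2" 'BEGIN { printf "%.2f\n", after / before }'
}

# Sequential write bandwidth in MiB/s, copied from the two FULL WRITE PASS summaries.
echo "sequential write: $(speedup 792 1384)x"
```

That works out to roughly 1.75 times the gen1 figure for sequential writes.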

Hi @jack

Which one do I decompile? I found 4 files.

/usr/lib/linux-image-4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb

/media/rock/ubt-bionic/usr/lib/linux-image-4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb

/boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb

/boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb.dpkg-tmp

Thanks

Pierre

I guess this is the one:

This should be a backup from a previous version that was created before an update:
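Of the four paths listed above, the one under /boot/dtbs/ is the file the script later in this thread edits. A hedged way to build that path for whatever kernel is running is sketched below. It assumes the directory under /boot/dtbs is named after the output of `uname -r`, which matches the paths shown here but is not guaranteed on other images.

```shell
#!/bin/sh
# Build the DTB path for a kernel release, following the layout of the paths
# listed in this thread. Directory name == `uname -r` is an assumption.
dtb_path() {
    release="${1:-$(uname -r)}"
    echo "/boot/dtbs/$release/rockchip/rockpi-4b-linux.dtb"
}

candidate="$(dtb_path)"
if [ -f "$candidate" ]; then
    echo "DTB for the running kernel: $candidate"
else
    echo "not found: $candidate (check which DTB the bootloader config references)"
fi
```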

I have created a script to do this. Maybe we can put together a series of them and create a RockPiConfig (a wannabe raspi-config). Please feel free to improve the script. It needs some love.

#!/bin/bash

#By default, the NVMe runs in gen1 mode for compatibility, so the speed is limited.

#You can enable gen2 mode by decompiling and recompiling the device tree:

sudo cp -p /boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb /boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb.bak

sudo fdtdump /boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb > /tmp/rockpi4.dts

sudo sed -i 's/max-link-speed = <0x00000001>;/max-link-speed = <0x00000002>;/g' /tmp/rockpi4.dts

#Find pcie@f8000000 section

#change from

#max-link-speed = <0x00000001>;

#to

#max-link-speed = <0x00000002>;

#Now recompile the dtb:

sudo dtc -I dts -O dtb /tmp/rockpi4.dts -o /tmp/rockpi4.dtb

sudo mv /boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb /boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb.tmp

sudo cp /tmp/rockpi4.dtb /boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb

ls -l /boot/dtbs/4.4.154-59-rockchip-g5e70f14/rockchip/rockpi-4b-linux.dtb

#The original rockpi-4b-linux.dtb has now been replaced (a backup was saved as .bak). Reboot to apply.
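One possible improvement, offered as a sketch rather than a tested replacement: a plain sed substitution reports success even when nothing matched, for example if the value was already changed or the decompiler printed it in a different format. The hypothetical helper below checks for the gen1 line first and fails loudly if it is missing. It uses `sed -i` as in the script above, which assumes GNU sed.

```shell
#!/bin/sh
# Switch max-link-speed from gen1 to gen2 in a decompiled device tree source.
# Returns non-zero if the expected gen1 line is not present.
set_gen2_in_dts() {
    dts="$1"
    if ! grep -q 'max-link-speed = <0x00000001>;' "$dts"; then
        echo "no gen1 max-link-speed line found in $dts" >&2
        return 1
    fi
    sed -i 's/max-link-speed = <0x00000001>;/max-link-speed = <0x00000002>;/' "$dts"
    # Show the resulting line(s) so the change can be eyeballed.
    grep -n 'max-link-speed' "$dts"
}

# Demo on a throwaway fragment shaped like the section described above.
demo="$(mktemp)"
printf 'pcie@f8000000 {\n    max-link-speed = <0x00000001>;\n};\n' > "$demo"
set_gen2_in_dts "$demo"
rm -f "$demo"
```

In the script, the call would go where the sed line is, with the recompile step running only if the helper succeeds.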

What NVMe SSDs are recommended?

I think I’m going to order the large heatsink and the SSD extender (I should have ordered them when I got my rock pi4b but I wanted to test the SBC out a bit first)

Is there a list where I can see which ones are compatible, I don’t want to order one that isn’t compatible.

I’m using a Samsung MZ-V7S250BW SSD 970 EVO Plus 250 GB M.2 Internal NVMe SSD, for about 85,- Euro and I’m satisfied with it.

Thanks I’ll keep that in mind when i shop for one :)

Update:

Now we have a hw_config option (in hw_intfc.config) to set PCIe gen2 mode. Update the rockpi4-dtbo package to 0.7 or later, then uncomment the following line to enable gen2 mode:

# PCIE running on GEN2 mode

intfc:dtoverlay=pcie-gen2
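For anyone who prefers not to edit the file by hand, the uncommenting step could be sketched like this. It assumes the line ships commented out as `#intfc:dtoverlay=pcie-gen2`, and the config path is passed as an argument because its exact location is not given here. Both are assumptions to check against your image, and the function name is made up for illustration.

```shell
#!/bin/sh
# Uncomment the pcie-gen2 overlay line in a hardware config file.
# Succeeds only if the active line is present afterwards.
enable_pcie_gen2() {
    conf="$1"
    sed -i 's/^[[:space:]]*#[[:space:]]*\(intfc:dtoverlay=pcie-gen2\)[[:space:]]*$/\1/' "$conf"
    grep -q '^intfc:dtoverlay=pcie-gen2$' "$conf"
}

# Demo on a temporary copy of the two lines quoted above (second one commented out).
demo="$(mktemp)"
printf '# PCIE running on GEN2 mode\n#intfc:dtoverlay=pcie-gen2\n' > "$demo"
enable_pcie_gen2 "$demo" && cat "$demo"
rm -f "$demo"
```

The descriptive comment line is left untouched because the pattern only matches the overlay entry itself.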

Here is my modified version of Pierre's script, working on Armbian:

#!/bin/bash

#By default, the NVMe runs in gen1 mode for compatibility, so the speed is limited.

#You can enable gen2 mode by decompiling and recompiling the device tree:

sudo cp -p /boot/dtb/rockchip/rockpi-4b-linux.dtb /boot/dtb/rockchip/rockpi-4b-linux.dtb.bak

sudo fdtdump /boot/dtb/rockchip/rockpi-4b-linux.dtb > /tmp/rockpi4.dts

sudo sed -i 's/max-link-speed = <0x00000001>;/max-link-speed = <0x00000002>;/g' /tmp/rockpi4.dts

#Find pcie@f8000000 section

#change from

#max-link-speed = <0x00000001>;

#to

#max-link-speed = <0x00000002>;

#Now recompile the dtb:

sudo dtc -I dts -O dtb /tmp/rockpi4.dts -o /tmp/rockpi4.dtb

sudo mv /boot/dtb/rockchip/rockpi-4b-linux.dtb /boot/dtb/rockchip/rockpi-4b-linux.dtb.tmp

sudo cp /tmp/rockpi4.dtb /boot/dtb/rockchip/rockpi-4b-linux.dtb

ls -l /boot/dtb/rockchip/rockpi-4b-linux.dtb

#The original rockpi-4b-linux.dtb has now been replaced (a backup was saved as .bak). Reboot to apply.

Hi,

How can I use this? On my system there is no hw_intfc.config. I followed the tutorials for updating u-boot etc., but it did not help (https://wiki.radxa.com/Rockpi4/radxa-apt).

Performance of the NVMe (970 EVO) is unchanged and bad:

sudo hdparm -Tt --direct /dev/nvme0n1

/dev/nvme0n1:

Timing O_DIRECT cached reads: 534 MB in 2.01 seconds = 266.22 MB/sec

Timing O_DIRECT disk reads: 800 MB in 3.00 seconds = 266.34 MB/sec

Any ideas?

Carsten
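One way to narrow this down would be to check the negotiated link speed directly rather than inferring it from throughput, since hdparm reads at a low queue depth and may not reflect what the fio runs earlier in the thread measured. The sketch below maps the `LnkSta` line printed by `lspci -vv` (from pciutils) to a generation. The helper name is made up, and the sample line is illustrative rather than captured from this board.

```shell
#!/bin/sh
# Map an lspci "LnkSta" line to a PCIe generation.
link_gen() {
    case "$1" in
        *"Speed 2.5GT/s"*) echo gen1 ;;
        *"Speed 5GT/s"*)   echo gen2 ;;
        *)                 echo unknown ;;
    esac
}

# On the board (root is usually needed to read the link status):
#   sudo lspci -vv | grep 'LnkSta:'
# Illustrative input only:
link_gen "LnkSta: Speed 2.5GT/s, Width x4"
```

If the link still reports 2.5GT/s after the change, the modified device tree or overlay is probably not the one being loaded at boot.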

Pretty sure that if you had followed the tutorial you would have hw_intfc.config.

Either use the script above to edit the DTS, or go through https://wiki.radxa.com/Rockpi4/radxa-apt more methodically.

But which system (Linux) are you using?