I bought the Radxa Camera 4K and a Rock5b as a beginner. I just want to write a Python file that calls the camera to take a photo or record a video. I would appreciate it if anyone could help me, since the introduction to the camera at https://wiki.radxa.com/Rock5/accessories/radxa-camera-4k is not detailed and doesn't mention the camera's API. A detailed process and a simple example would be much appreciated.

How to use Radxa Camera 4K

You asked for it, so here is the shortest and most intense course for beginners.

- Camera

- Environment

- Working with the camera, examples

- References

1. Camera

The camera has a Sony IMX415 sensor: 4K (8 MP), low-light sensitivity, fixed focus, and a MIPI interface. The current driver can deliver a picture (frame) of 3840x2160 pixels (high resolution) in NV12 format.
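Since every frame below is handled as NV12, it helps to know how such a frame is laid out: a full-resolution Y (brightness) plane followed by one interleaved U/V plane at half resolution in each direction, so a frame takes width × height × 1.5 bytes (see the NV12 reference at the end). A minimal sketch of that arithmetic, assuming a tightly packed buffer whose row stride equals the width (real driver buffers may be padded); the function names are illustrative only:

```python
def nv12_layout(width: int, height: int) -> dict:
    """Byte offsets and sizes of one NV12 frame, assuming stride == width."""
    if width % 2 or height % 2:
        raise ValueError("NV12 needs an even width and height")
    y_size = width * height   # one luma (Y) byte per pixel
    uv_size = y_size // 2     # one U,V byte pair per 2x2 pixel block
    return {"y_offset": 0, "y_size": y_size,
            "uv_offset": y_size, "uv_size": uv_size,
            "total": y_size + uv_size}


def luma_at(buf: bytes, width: int, x: int, y: int) -> int:
    """Y (brightness) value of pixel (x, y)."""
    return buf[y * width + x]


def chroma_at(buf: bytes, width: int, height: int, x: int, y: int) -> tuple:
    """(U, V) pair shared by the 2x2 block that contains pixel (x, y)."""
    base = width * height + (y // 2) * width + (x // 2) * 2
    return buf[base], buf[base + 1]


if __name__ == "__main__":
    frame = nv12_layout(3840, 2160)
    print(frame["total"])  # 12441600 bytes per 4K frame
```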

2. Environment

Debian 11 is used here, with camera_engine_rkaiq installed and running. There should be a file like this one (or a similar one) in your setup:

/etc/iqfiles/imx415_CMK-OT2022-PX1_IR0147-50IRC-8M-F20.json

Note: You might have a newer file from Radxa:

/etc/iqfiles/imx415_RADXA-CAMERA-4K_DEFAULT.json

Basically, when you take a picture, the camera engine does some pre-processing using the appropriate file, helping the camera adjust color, light, and exposure. It runs in the background in auto mode. Without the camera engine, you get a dark, green image.

GStreamer is the software that works with the camera. GStreamer is built around plugins, and you need a special plugin called rockchipmpp that can encode the frames into H.264/H.265 with hardware acceleration; if you want to save a video in an MP4 container, you will use this plugin. Other software that usually works with USB cameras, like FFmpeg or OpenCV, will not work out of the box and needs some modifications to work properly.

Before we work with the camera, we check if we have the correct setup.

Camera nodes (11 will be our device):

When Linux detects a camera, it creates a device node for it. Our camera will be /dev/video11.

rock@rock5b:~/rockchip/camera/python$ ls /dev/video*

/dev/video0 /dev/video13 /dev/video18 /dev/video22 /dev/video5

/dev/video1 /dev/video14 /dev/video19 /dev/video23 /dev/video6

/dev/video10 /dev/video15 /dev/video2 /dev/video24 /dev/video7

/dev/video11 /dev/video16 /dev/video20 /dev/video3 /dev/video8

/dev/video12 /dev/video17 /dev/video21 /dev/video4 /dev/video9
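ls sorts the names alphabetically, so /dev/video10 is listed before /dev/video2. A minimal sketch (standard library only; the function names are illustrative) that lists the same nodes in numeric order:

```python
import glob
import re

NUMBERED = re.compile(r"(\d+)$")


def video_index(path: str) -> int:
    """Trailing number of a node name, e.g. 11 for /dev/video11."""
    match = NUMBERED.search(path)
    if match is None:
        raise ValueError(f"not a numbered video node: {path}")
    return int(match.group(1))


def list_video_nodes(pattern: str = "/dev/video*") -> list:
    """Existing numbered nodes, sorted numerically (video2 before video10)."""
    nodes = [p for p in glob.glob(pattern) if NUMBERED.search(p)]
    return sorted(nodes, key=video_index)


if __name__ == "__main__":
    print(" ".join(list_video_nodes()))
```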

Gstreamer plugin:

rock@rock5b:~/rockchip/camera/python$ gst-inspect-1.0 | grep mpp

rockchipmpp: mppjpegdec: Rockchip’s MPP JPEG image decoder

rockchipmpp: mppvideodec: Rockchip’s MPP video decoder

rockchipmpp: mppjpegenc: Rockchip Mpp JPEG Encoder

rockchipmpp: mpph265enc: Rockchip Mpp H265 Encoder

rockchipmpp: mpph264enc: Rockchip Mpp H264 Encoder
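The same check can be scripted. A sketch that runs gst-inspect-1.0 (if it is on the PATH) and looks for the encoders used later in this guide; the parsing assumes the "plugin: element: description" line format shown above, and the function names are hypothetical:

```python
import shutil
import subprocess

# Encoders used by the examples in this guide
NEEDED = {"mppjpegenc", "mpph264enc", "mpph265enc"}


def parse_plugin_elements(text: str, plugin: str = "rockchipmpp") -> set:
    """Element names from lines like 'rockchipmpp: mpph264enc: Rockchip Mpp H264 Encoder'."""
    elements = set()
    for line in text.splitlines():
        parts = [part.strip() for part in line.split(":")]
        if len(parts) >= 3 and parts[0] == plugin:
            elements.add(parts[1])
    return elements


def installed_mpp_elements() -> set:
    """Run gst-inspect-1.0 and parse its output; empty set if the tool is missing."""
    if shutil.which("gst-inspect-1.0") is None:
        return set()
    result = subprocess.run(["gst-inspect-1.0"], capture_output=True,
                            text=True, errors="replace")
    return parse_plugin_elements(result.stdout)


if __name__ == "__main__":
    missing = NEEDED - installed_mpp_elements()
    print("missing:", ", ".join(sorted(missing)) if missing else "none")
```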

Camera engine:

rock@rock5b:~/rockchip/camera/python$ systemctl status rkaiq_3A

● rkaiq_3A.service - rkisp 3A engine

Loaded: loaded (/lib/systemd/system/rkaiq_3A.service; enabled; vendor pres>

Active: active (running) since Sat 2022-11-26 11:52:57 UTC; 8h ago

Process: 359 ExecStart=/etc/init.d/rkaiq_3A.sh start (code=exited, status=0>

Tasks: 7 (limit: 18511)

Memory: 22.7M

CPU: 1min 6.898s

CGroup: /system.slice/rkaiq_3A.service

├─376 /usr/bin/rkaiq_3A_server

└─377 logger -t rkaiq
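The camera-engine checks can be scripted as well. A sketch that asks systemd whether rkaiq_3A is active (systemctl is-active prints "active" for a running unit) and lists the imx415 tuning files in /etc/iqfiles; the service name and directory come from this guide, the function names are hypothetical:

```python
import glob
import shutil
import subprocess


def service_active(name: str = "rkaiq_3A") -> bool:
    """True if `systemctl is-active <name>` answers 'active'."""
    if shutil.which("systemctl") is None:
        return False  # no systemd tools on this system
    try:
        result = subprocess.run(["systemctl", "is-active", name],
                                capture_output=True, text=True, timeout=10)
    except subprocess.TimeoutExpired:
        return False
    return result.stdout.strip() == "active"


def imx415_iq_files(iq_dir: str = "/etc/iqfiles") -> list:
    """Tuning files available for the IMX415 sensor."""
    return sorted(glob.glob(f"{iq_dir}/imx415_*.json"))


if __name__ == "__main__":
    print("rkaiq_3A active:", service_active())
    print("IQ files:", imx415_iq_files() or "none found")
```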

Python:

rock@rock5b:~/rockchip/camera/python$ python3 --version

Python 3.9.2

If you don’t have the correct environment, this guide will not work.

3. Working with the camera, examples

Listing the video formats supported by our device node (/dev/video11):

rock@rock5b:~/rockchip/camera/python$ v4l2-ctl --device /dev/video11 --list-formats-ext

ioctl: VIDIOC_ENUM_FMT

Type: Video Capture Multiplanar

[0]: 'UYVY' (UYVY 4:2:2)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[1]: 'NV16' (Y/CbCr 4:2:2)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[2]: 'NV61' (Y/CrCb 4:2:2)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[3]: 'NV21' (Y/CrCb 4:2:0)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[4]: 'NV12' (Y/CbCr 4:2:0)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[5]: 'NM21' (Y/CrCb 4:2:0 (N-C))

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[6]: 'NM12' (Y/CbCr 4:2:0 (N-C))

Size: Stepwise 32x16 - 3840x2160 with step 8/8
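If you want a script to pick a valid size by itself, this listing can be parsed. A sketch assuming the "Stepwise min - max with step" lines shown here (USB cameras often report "Discrete" sizes instead, for which this parser records no size, so size_ok only applies to stepwise entries). In the stepwise form, a width is valid when it equals the minimum plus a multiple of the step:

```python
import re

FORMAT_LINE = re.compile(r"\[\d+\]:\s+'([^']{4})'\s+\((.*)\)$")
STEPWISE_LINE = re.compile(
    r"Size: Stepwise (\d+)x(\d+) - (\d+)x(\d+) with step (\d+)/(\d+)")


def parse_formats(text: str) -> dict:
    """Map FourCC -> description and stepwise size limits."""
    formats, current = {}, None
    for line in text.splitlines():
        line = line.strip()
        fmt = FORMAT_LINE.match(line)
        if fmt:
            current = fmt.group(1)
            formats[current] = {"description": fmt.group(2)}
            continue
        size = STEPWISE_LINE.match(line)
        if size and current:
            min_w, min_h, max_w, max_h, step_w, step_h = map(int, size.groups())
            formats[current].update(min=(min_w, min_h), max=(max_w, max_h),
                                    step=(step_w, step_h))
    return formats


def size_ok(entry: dict, width: int, height: int) -> bool:
    """True if width x height fits the stepwise range of one format."""
    (min_w, min_h), (max_w, max_h) = entry["min"], entry["max"]
    step_w, step_h = entry["step"]
    return (min_w <= width <= max_w and min_h <= height <= max_h
            and (width - min_w) % step_w == 0 and (height - min_h) % step_h == 0)
```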

For this example we use Python 3, but I think Python 2 will also work.

rock@rock5b:~/rockchip/camera/python$ python3 --version

Python 3.9.2

Our Python examples call GStreamer to grab a single frame or a stream of frames from the camera and save them as JPG or MP4.

Taking a picture, Python command-line example (640x480):

- command line argument (pass a file name)

take_photo.py [file name.jpg]

- running

rock@rock5b:~/rockchip/camera/python$ python3 take_photo.py photo.jpg

rga_api version 1.8.1_[4]

-rw-r--r-- 1 rock rock 234576 Nov 26 19:51 photo.jpg

Python file:

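import gi

import sys

gi.require_version('Gst', '1.0')

from gi.repository import Gst

# initialize GStreamer

Gst.init(sys.argv)

# num-buffers=1: v4l2src sends EOS after one frame; the quotes allow spaces in the file name

pipeline = Gst.parse_launch('v4l2src device=/dev/video11 io-mode=dmabuf num-buffers=1 ! video/x-raw,format=NV12,width=640,height=480 ! mppjpegenc ! filesink location="' + sys.argv[1] + '"')

pipeline.set_state(Gst.State.PLAYING)

# wait (up to 5 s) until the JPEG is written (EOS) or an error is reported, instead of a fixed sleep

pipeline.get_bus().timed_pop_filtered(5 * Gst.SECOND, Gst.MessageType.EOS | Gst.MessageType.ERROR)

pipeline.set_state(Gst.State.NULL)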
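Only the caps in the pipeline string change if you want another photo size, for example the full 3840x2160. A sketch of a hypothetical helper that builds the same pipeline and rejects sizes outside the range /dev/video11 reported above (Stepwise 32x16 - 3840x2160 with step 8/8); the limits are copied from that listing, not queried from the driver:

```python
# Limits copied from `v4l2-ctl --device /dev/video11 --list-formats-ext`
MIN_W, MIN_H, MAX_W, MAX_H, STEP = 32, 16, 3840, 2160, 8


def photo_pipeline(location: str, width: int = 640, height: int = 480,
                   device: str = "/dev/video11") -> str:
    """gst-launch style description: one NV12 frame encoded to JPEG."""
    if not (MIN_W <= width <= MAX_W and MIN_H <= height <= MAX_H):
        raise ValueError(f"{width}x{height} is outside {MIN_W}x{MIN_H} - {MAX_W}x{MAX_H}")
    if (width - MIN_W) % STEP or (height - MIN_H) % STEP:
        raise ValueError(f"{width}x{height} is not on the {STEP}-pixel grid")
    return (f"v4l2src device={device} io-mode=dmabuf num-buffers=1 ! "
            f"video/x-raw,format=NV12,width={width},height={height} ! "
            f'mppjpegenc ! filesink location="{location}"')


if __name__ == "__main__":
    print(photo_pipeline("photo_4k.jpg", 3840, 2160))
```

In take_photo.py, the returned string would be passed to Gst.parse_launch() in place of the hand-written one.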

Recording a video in MP4 (H.264, 640x480):

- command line example (640x480 in size):

take_video.py [name.mp4] [time in seconds approximate]

- running

rock@rock5b:~/rockchip/camera/python$ python3 take_video.py video_30s_30fps_640x480.mp4 30

rga_api version 1.8.1_[4]

-rw-r--r-- 1 rock rock 4311511 Nov 26 19:49 video_30s_30fps_640x480.mp4

Python file:

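import gi

import sys

import time

gi.require_version('Gst', '1.0')

from gi.repository import Gst

# initialize GStreamer

Gst.init(sys.argv)

# h264parse + mp4mux wrap the encoded stream in a real MP4 container (a bare filesink would write a raw H.264 stream)

pipeline = Gst.parse_launch('v4l2src device=/dev/video11 io-mode=dmabuf ! video/x-raw,format=NV12,width=640,height=480,framerate=30/1 ! mpph264enc ! h264parse ! mp4mux ! filesink location="' + sys.argv[1] + '"')

pipeline.set_state(Gst.State.PLAYING)

time.sleep(int(sys.argv[2]))

# send EOS and wait for it, so mp4mux can finalize the file before the pipeline stops

pipeline.send_event(Gst.Event.new_eos())

pipeline.get_bus().timed_pop_filtered(5 * Gst.SECOND, Gst.MessageType.EOS | Gst.MessageType.ERROR)

pipeline.set_state(Gst.State.NULL)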
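A quick way to sanity-check a recording is to compare its average bitrate with the raw NV12 data rate. A sketch using the numbers from the run above (4311511 bytes for roughly 30 s of 640x480 at 30 fps); since the duration is approximate, so is the result:

```python
def average_bitrate_bps(size_bytes: int, seconds: float) -> float:
    """Average bits per second of a file of size_bytes covering `seconds`."""
    return size_bytes * 8 / seconds


def raw_nv12_bps(width: int, height: int, fps: int) -> int:
    """Uncompressed NV12 data rate: 1.5 bytes per pixel per frame."""
    return width * height * 3 // 2 * 8 * fps


if __name__ == "__main__":
    encoded = average_bitrate_bps(4311511, 30)  # size from the ls output above
    raw = raw_nv12_bps(640, 480, 30)
    print(f"encoded {encoded / 1e6:.2f} Mbit/s, raw {raw / 1e6:.1f} Mbit/s, "
          f"about {raw / encoded:.0f}x smaller")
    # prints: encoded 1.15 Mbit/s, raw 110.6 Mbit/s, about 96x smaller
```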

4. References

http://paulbourke.net/dataformats/nv12/

https://www.electronicdesign.com/technologies/communications/article/21799475/understanding-mipi-alliance-interface-specifications

https://gstreamer.freedesktop.org/documentation/tutorials/index.html?gi-language=c

Now that you can get pictures from the camera, please move the thread to the ROCK 5 series category instead of ROCK 4 series.

Thanks a lot!!! By the way, are there any differences on Ubuntu?

And if I plug in a USB camera, which device (video0-video20) will it be on the ROCK 5B? Does it matter which port I use for the camera (since there are four USB ports on the ROCK 5B)?

The Linux kernel assigns a virtual device node (or more than one, for different purposes) to every camera you attach to the board, whether USB, MIPI, or any other type.

It does not matter which port you use.

You can find the camera and the device node assigned with this command:

rock@rock5b:~$ v4l2-ctl --list-devices

rk_hdmirx (fdee0000.hdmirx-controller):

/dev/video20

rkisp-statistics (platform: rkisp):

/dev/video18

/dev/video19

rkcif-mipi-lvds2 (platform:rkcif):

/dev/media0

rkcif (platform:rkcif-mipi-lvds2):

/dev/video0

/dev/video1

/dev/video2

/dev/video3

/dev/video4

/dev/video5

/dev/video6

/dev/video7

/dev/video8

/dev/video9

/dev/video10

rkisp_mainpath (platform:rkisp0-vir0):

/dev/video11

/dev/video12

/dev/video13

/dev/video14

/dev/video15

/dev/video16

/dev/video17

/dev/media1

USB 2.0 Camera: USB Camera (usb-fc880000.usb-1):

/dev/video21

/dev/video22

/dev/video23

/dev/video24

/dev/media2

USB 2.0 Camera: HD 720P Webcam (usb-xhci-hcd.8.auto-1):

/dev/video25

/dev/video26

/dev/media3
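Because node numbers can change when cameras are added or removed, a script can look its node up by name instead of hard-coding /dev/video11. A sketch that parses the v4l2-ctl --list-devices output above (a header line per device, then its nodes); taking the first /dev/video node of rkisp_mainpath matches this board, but treat that as an assumption for other setups:

```python
import shutil
import subprocess


def parse_list_devices(text: str) -> dict:
    """Map each device header (without the trailing ':') to its /dev nodes."""
    devices, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("/dev/"):
            if current is not None:
                devices[current].append(line)
        else:
            current = line.rstrip(":")
            devices[current] = []
    return devices


def first_video_node(devices: dict, name_prefix: str):
    """First /dev/video* node of the first device whose header starts with name_prefix."""
    for name, nodes in devices.items():
        if name.startswith(name_prefix):
            for node in nodes:
                if node.startswith("/dev/video"):
                    return node
    return None


def current_devices() -> dict:
    """Run v4l2-ctl --list-devices if available (empty dict otherwise)."""
    if shutil.which("v4l2-ctl") is None:
        return {}
    out = subprocess.run(["v4l2-ctl", "--list-devices"],
                         capture_output=True, text=True).stdout
    return parse_list_devices(out)


if __name__ == "__main__":
    print("Radxa Camera 4K:", first_video_node(current_devices(), "rkisp_mainpath"))
```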

In the case of the Radxa Camera, you will find several nodes, for example a high-resolution one and a lower-resolution one, with different video formats that can be convenient for some users.

rock@rock5b:~$ v4l2-ctl --device /dev/video11 --list-formats-ext

ioctl: VIDIOC_ENUM_FMT

Type: Video Capture Multiplanar

[0]: 'UYVY' (UYVY 4:2:2)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[1]: 'NV16' (Y/CbCr 4:2:2)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[2]: 'NV61' (Y/CrCb 4:2:2)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[3]: 'NV21' (Y/CrCb 4:2:0)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[4]: 'NV12' (Y/CbCr 4:2:0)

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[5]: 'NM21' (Y/CrCb 4:2:0 (N-C))

Size: Stepwise 32x16 - 3840x2160 with step 8/8

[6]: 'NM12' (Y/CbCr 4:2:0 (N-C))

Size: Stepwise 32x16 - 3840x2160 with step 8/8

rock@rock5b:~$ v4l2-ctl --device /dev/video12 --list-formats-ext

ioctl: VIDIOC_ENUM_FMT

Type: Video Capture Multiplanar

[0]: 'UYVY' (UYVY 4:2:2)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[1]: 'NV16' (Y/CbCr 4:2:2)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[2]: 'NV61' (Y/CrCb 4:2:2)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[3]: 'NV21' (Y/CrCb 4:2:0)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[4]: 'NV12' (Y/CbCr 4:2:0)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[5]: 'NM21' (Y/CrCb 4:2:0 (N-C))

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[6]: 'NM12' (Y/CbCr 4:2:0 (N-C))

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[7]: 'GREY' (8-bit Greyscale)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[8]: 'XR24' (32-bit BGRX 8-8-8-8)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

[9]: 'RGBP' (16-bit RGB 5-6-5)

Size: Stepwise 32x16 - 1920x2160 with step 8/8

Regarding Ubuntu vs. Debian vs. any other distro: it does not matter which one you use.

Here is a Python program that records H.265 and displays the video at the same time.

Python program:

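import gi

import sys

import time

gi.require_version('Gst', '1.0')

from gi.repository import Gst

# initialize GStreamer

Gst.init(sys.argv)

# one tee branch shows the video in an X window, the other encodes H.265 and muxes it into an MKV file

pipeline = Gst.parse_launch('v4l2src device=/dev/video11 ! video/x-raw,format=NV12,width=1920,height=1080,framerate=30/1 ! timeoverlay ! tee name=liveTee ! queue leaky=downstream ! videoconvert ! ximagesink async=false liveTee. ! queue leaky=downstream ! videoconvert ! queue ! mpph265enc ! h265parse ! matroskamux ! filesink location="' + sys.argv[1] + '"')

pipeline.set_state(Gst.State.PLAYING)

time.sleep(int(sys.argv[2]))

# send EOS and wait for it, so matroskamux can finish the file properly

pipeline.send_event(Gst.Event.new_eos())

pipeline.get_bus().timed_pop_filtered(5 * Gst.SECOND, Gst.MessageType.EOS | Gst.MessageType.ERROR)

pipeline.set_state(Gst.State.NULL)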

Command-line arguments:

take_video2.py [ file_name.mkv ] [ time in secs ]

Save it as take_video2.py and run the program like so:

python3 take_video2.py test.mkv 30
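ximagesink needs an X display, so the viewer branch will typically fail when the script is started from a plain SSH session. A sketch of a hypothetical helper that builds the same pipeline with the preview branch optional and picks the container from the file extension (matroskamux for .mkv, mp4mux for .mp4, both standard GStreamer muxers); it only returns the string for Gst.parse_launch():

```python
import os

# Container element per file extension
MUXERS = {".mkv": "matroskamux", ".mp4": "mp4mux"}


def record_pipeline(location: str, preview: bool = True, width: int = 1920,
                    height: int = 1080, fps: int = 30,
                    device: str = "/dev/video11") -> str:
    """H.265 recording pipeline, optionally with a live X11 preview branch."""
    ext = os.path.splitext(location)[1].lower()
    if ext not in MUXERS:
        raise ValueError(f"unsupported extension {ext!r}; use one of {sorted(MUXERS)}")
    source = (f"v4l2src device={device} ! "
              f"video/x-raw,format=NV12,width={width},height={height},framerate={fps}/1 ! "
              "timeoverlay ! tee name=liveTee ")
    view = "liveTee. ! queue leaky=downstream ! videoconvert ! ximagesink async=false "
    record = (f"liveTee. ! queue ! mpph265enc ! h265parse ! {MUXERS[ext]} ! "
              f'filesink location="{location}"')
    return source + (view if preview else "") + record
```

Over SSH, record_pipeline(sys.argv[1], preview=False) would record without trying to open a window.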

Thank you for this guide! I was already worried that my cameras were broken.

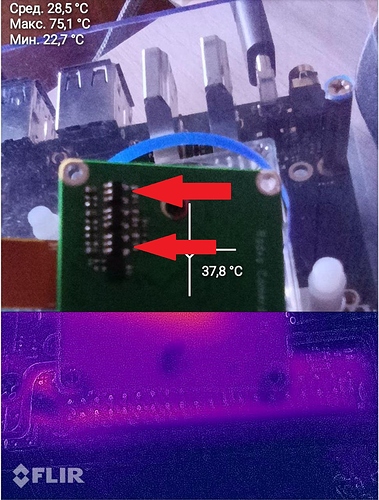

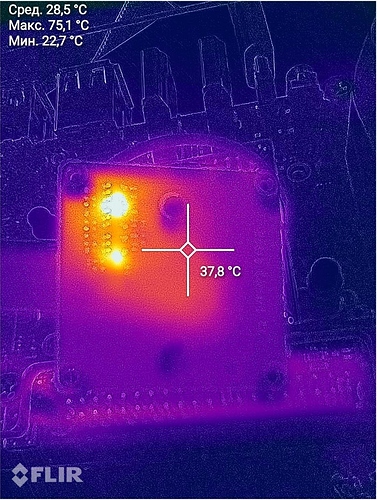

One question: Some components on the back of the camera are getting really hot when displaying video. Is that normal? Does a cooling solution have any advantages?

Quite normal. I think the cooler, the better in the long run; the specification says it can run at 60 °C.

Thank you! It feels like it's hotter than 60 °C. I think I will put some passive coolers with thermal tape on there. Again, thank you. It is nice to see a picture that is not green.

I launched the camera over SSH using the command

gst-launch-1.0 v4l2src device=/dev/video11 ! video/x-raw,format=NV12,width=800,height=600,framerate=30/1 ! autovideosink

Some components heated up to ~75 degrees in 10 seconds. I didn't watch any longer, as I was afraid of burning the electronics.

I touched the area around the sensor and, indeed, these components get really hot, so I didn't worry.

I left it running for a week, and it didn’t burn.

I see a green picture when I use the Camera 4K with the ROCK 5B on Ubuntu (https://github.com/Joshua-Riek/ubuntu-rockchip).

How do I fix that?

- Look in your DTS for the parameters “rockchip,camera-module-name” and “rockchip,camera-module-lens-name” for your camera (imx415?). Their values are referred to below as MODULENAME and LENSNAME.

- Look at the directory /etc/iqfiles. It must contain the file “imx415_MODULENAME_LENSNAME.json”. If it doesn't, you can try using another JSON file for imx415 (for example, one from the beginning of this topic), possibly renamed.

- Check whether rkaiq_3A_server can be started, either automatically as a service or manually (just run rkaiq_3A_server). If it crashes, the JSON file is probably not compatible with this version of the server. You could try using rkaiq_3A_server and librkaiq.so from another distro.
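The first two steps can be partly automated on a running system, because Linux exposes the live device tree under /proc/device-tree, one file per property, with string values stored NUL-terminated. A sketch that searches it for the two properties named above and prints the IQ file name built from them; deriving the sensor part from the DT node name (for example imx415@1a becomes imx415) is an assumption about how your DTS names the node, and the function names are hypothetical:

```python
import os

MODULE_PROP = "rockchip,camera-module-name"
LENS_PROP = "rockchip,camera-module-lens-name"


def read_dt_string(path: str) -> str:
    """Read a device-tree string property (stored NUL-terminated)."""
    with open(path, "rb") as handle:
        return handle.read().rstrip(b"\x00").decode("ascii", errors="replace")


def camera_modules(dt_root: str = "/proc/device-tree") -> list:
    """(sensor, module, lens) for every DT node that has both properties."""
    found = []
    for dirpath, _dirs, files in os.walk(dt_root):
        if MODULE_PROP in files and LENS_PROP in files:
            sensor = os.path.basename(dirpath).split("@")[0]  # assumed: imx415@1a -> imx415
            found.append((sensor,
                          read_dt_string(os.path.join(dirpath, MODULE_PROP)),
                          read_dt_string(os.path.join(dirpath, LENS_PROP))))
    return found


def expected_iq_file(sensor: str, module: str, lens: str,
                     iq_dir: str = "/etc/iqfiles") -> str:
    """Path of the form <iq_dir>/<sensor>_<MODULENAME>_<LENSNAME>.json."""
    return os.path.join(iq_dir, f"{sensor}_{module}_{lens}.json")


if __name__ == "__main__":
    for sensor, module, lens in camera_modules():
        path = expected_iq_file(sensor, module, lens)
        print(path, "OK" if os.path.isfile(path) else "MISSING")
```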

I found out that rkaiq_3A_server does all the work.

I'm on Debian 11 now, and the camera works fine if the rkaiq_3A_server service is running.

But when the rkaiq_3A_server service is stopped, the camera gives a dark green image, as it did on Ubuntu.

I tried to start the rkaiq_3A_server service on Ubuntu, but it fails.

Should I take:

/usr/lib/librkaiq.so

/usr/bin/rkaiq_3A_server

from Debian and replace the same files on Ubuntu?

In /etc/iqfiles I see:

imx415_CMK-OT2022-PX1_IR0147-50IRC-8M-F20.json

imx415_RADXA-CAMERA-4K_DEFAULT.json

…

Should these be copied to Ubuntu?

The order of actions:

- First, just try copying the JSON files to Ubuntu's /etc/iqfiles directory and running rkaiq_3A_server.

- If rkaiq_3A_server crashes, check your DTS for the module/lens names (see my post above) and try renaming one of the JSON files as described there.

- If rkaiq_3A_server still crashes then copy both rkaiq_3A_server and librkaiq.so from working distro (Debian?) to some temporary directory in Ubuntu and run server manually:

sudo LD_LIBRARY_PATH=. ./rkaiq_3A_server

If it works then you can replace corresponding files in your Ubuntu.
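If you test several candidate builds, that manual step can be wrapped in a small script. A sketch, assuming the copied rkaiq_3A_server and librkaiq.so sit together in one directory and the script itself is run with sudo; it treats "still running after a few seconds" as a rough sign that the server did not crash on start-up, which is a heuristic, not proof that 3A works. You may also want to stop the rkaiq_3A service first so that two servers do not compete. The function name is hypothetical:

```python
import os
import subprocess
import sys


def server_survives(workdir: str, seconds: float = 5.0) -> bool:
    """Start <workdir>/rkaiq_3A_server with LD_LIBRARY_PATH=<workdir> and
    report whether it is still running after `seconds`."""
    binary = os.path.join(workdir, "rkaiq_3A_server")
    if not (os.path.isfile(binary) and os.access(binary, os.X_OK)):
        return False  # missing, or not executable yet (chmod +x)
    env = dict(os.environ, LD_LIBRARY_PATH=workdir)  # like LD_LIBRARY_PATH=. ./rkaiq_3A_server
    proc = subprocess.Popen([binary], cwd=workdir, env=env)
    try:
        proc.wait(timeout=seconds)
        return False  # exited before the deadline: most likely a crash
    except subprocess.TimeoutExpired:
        proc.terminate()  # stop this test instance again
        proc.wait()
        return True


if __name__ == "__main__":
    folder = os.path.abspath(sys.argv[1] if len(sys.argv) > 1 else ".")
    print("rkaiq_3A_server still running after 5 s:", server_survives(folder))
```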

I tried copying rkaiq_3A_server and librkaiq.so from Debian to Ubuntu, but rkaiq_3A_server doesn't start.

There was no librkaiq.so on Ubuntu, so I just copied it and ran chmod on it.

But I did get some result.

The camera started working after I installed https://github.com/radxa/debos-radxa/raw/main/rootfs/packages/arm64/camera/camera-engine-rkaiq_3.0.2_arm64.deb

but after a reboot I see green again. :(

Please have a look at the results here:

The official answer I got from Radxa was “do not use Ubuntu, it doesn’t work”.

Unfortunately, there is not enough information to understand what is happening.

Did you check your DTS for the module/lens names? If you have two or more JSON files for your camera (imx415), you could try renaming them to match the DTS values. Maybe the settings in one of these files are more correct.

It works on Ubuntu and Debian. Most likely they are referring to some GUI apps or their updated engine.

See: IMX415 + NPU demo on ROCK 5B

The issue here looks like the 3A engine crashed on reboot. If I am not mistaken, they reverted some of the new changes (which were causing a crash?).

Unfortunately, the engine couldn’t be natively compiled, so I couldn’t do much with it, as I don’t cross-compile.

I compiled rkaiq_3A_server from the Firefly repo. It works on my NanoPC-T6 board. You can see my exercises. The server and library are in the second post there.
