The Optane Memory H10 is really a QLC SSD and an Optane Memory module placed on a single board, each using its own PCIe 3.0 x2 link. There is no PCIe switch, mux, or cache controller on the board; the two components are simply glued together.

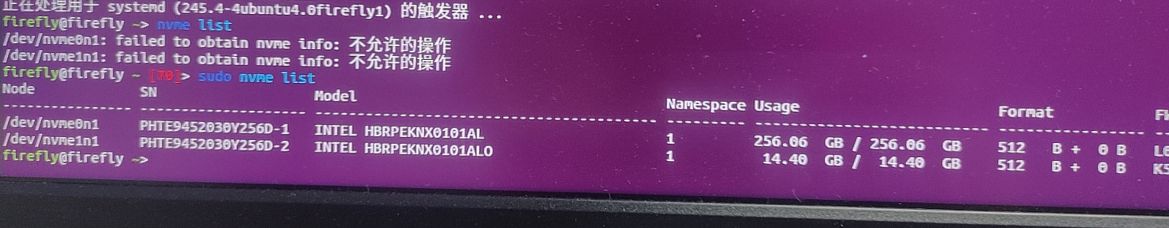

By default, the M.2 M-key slot is configured in PCIe 3.0 x4 mode, so only one component is visible. In my case it is the Optane memory.

To switch the slot to PCIe 3.0 x2 + x2 mode, refer to Chapter 2.3.2 of Rockchip_Developer_Guide_PCIe and change the DTS accordingly. Note that the reset_gpio property of the pcie3x2 node should be set to GPIO1 B7 to match the board design.
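
For orientation, here is a rough sketch of what the change might look like. It is not copied from my DTB. It assumes the Rockchip vendor (BSP) kernel, where, as far as I know, the PHY mode is selected with `rockchip,pcie30-phymode`, the x2 + x2 mode constant is `PHY_MODE_PCIE_NANBNB`, and the reset property is spelled `reset-gpios`. Check every name against the guide and your own kernel tree before using it.

```dts
/* Sketch only: property and macro names are assumed from the Rockchip BSP kernel. */
#include <dt-bindings/gpio/gpio.h>
#include <dt-bindings/pinctrl/rockchip.h>
#include <dt-bindings/phy/phy-snps-pcie3.h>

/* Split the PCIe 3.0 PHY into two independent x2 ports instead of one x4 port. */
&pcie30phy {
	rockchip,pcie30-phymode = <PHY_MODE_PCIE_NANBNB>;
	status = "okay";
};

/* The first controller now drives only two lanes. */
&pcie3x4 {
	num-lanes = <2>;
	status = "okay";
};

/* The second controller takes the other two lanes; reset is GPIO1 B7. */
&pcie3x2 {
	num-lanes = <2>;
	reset-gpios = <&gpio1 RK_PB7 GPIO_ACTIVE_HIGH>;
	status = "okay";
};
```

The existing reset and supply properties of the `pcie3x4` node are left as the board DTS already defines them. If the `pcie3x2` node needs a supply regulator on your board, add it following the guide.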

Alternatively, you can use this DTB that I modified:

rk3588-rock-5b.dtb.zip (44.8 KB)

NOTE: The Optane H10 is power-hungry. If you encounter problems such as power loss, use a DC power supply instead of a PD supply!