Hello,

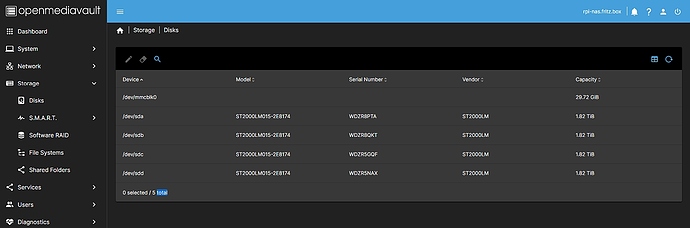

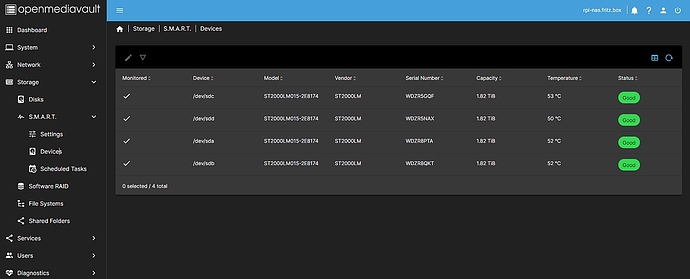

I have been using the Quad SATA HAT with a RAID10 array for quite some time. Four identical hard drives are in use, and until now everything has worked without problems.

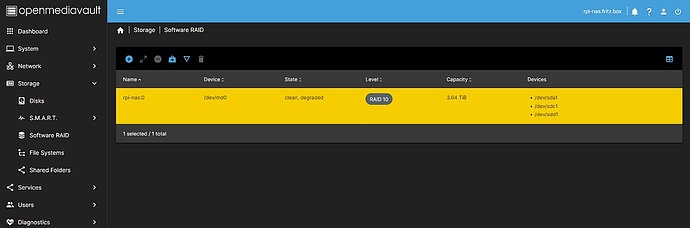

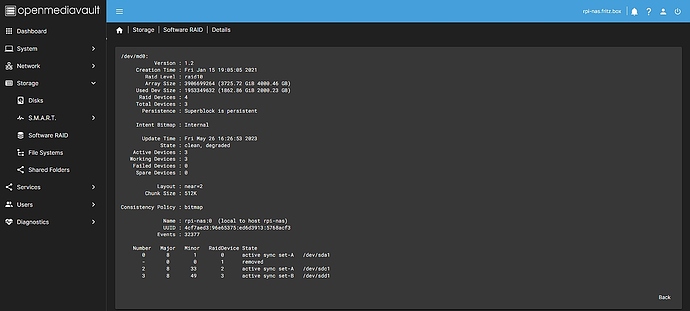

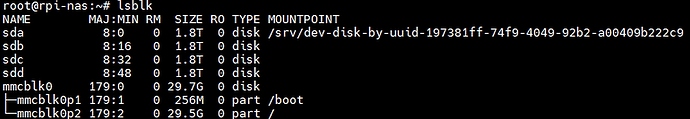

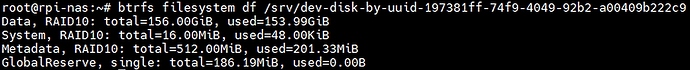

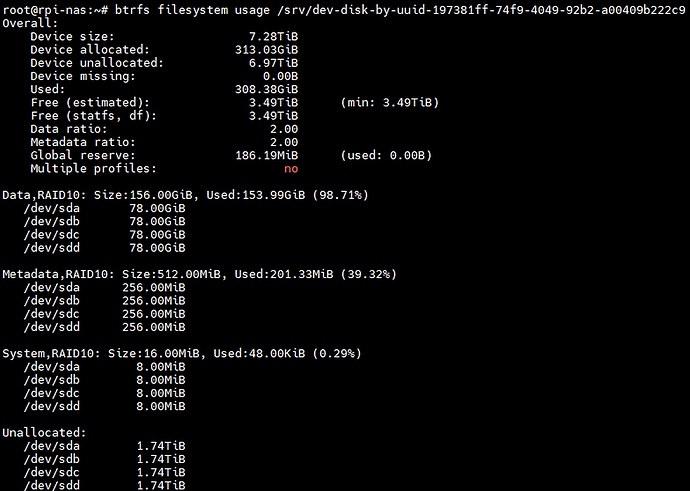

I have now noticed that one hard disk is no longer part of the RAID10 array. I have not noticed any other problems so far.

Since I am not a Linux guru, I would appreciate some help fixing the problem.

The hard disk sdb, which is no longer part of the RAID10 array, is not defective, so I cannot explain why it is suddenly no longer integrated.
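A read-only way to see whether sdb1 still carries its md superblock, and how far behind it is, is to compare `mdadm --examine` output for sdb1 with that of an active member such as sda1. The sketch below assumes those device names (taken from the lsblk output in this post) and v1.x metadata; `examine_summary` is a hypothetical helper that only pulls the `Events` and `Array State` fields out of that output.

```shell
#!/bin/sh
# Read-only sketch: compare the md superblock of the dropped partition with
# that of an active member. Device names are assumptions taken from lsblk.

# Hypothetical helper: print the Events counter and Array State from
# `mdadm --examine` output read on stdin (v1.x metadata format).
examine_summary() {
    awk -F' : ' '
        /^ *Events :/      { gsub(/ /, "", $2); print "events=" $2 }
        /^ *Array State :/ { split($2, a, " "); print "state=" a[1] }
    '
}

# Only touch the real devices when called with --run (needs root).
if [ "${1:-}" = "--run" ]; then
    for part in /dev/sdb1 /dev/sda1; do
        echo "== $part"
        mdadm --examine "$part" | examine_summary
    done
fi
```

Saved under any file name and run as root with `--run`, it prints both summaries. A lower `Events` count on sdb1 than on sda1 means sdb1's superblock stopped being updated when it dropped out; on the active members, a `.` in `Array State` marks the missing slot.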

I'm not sure how I set up the RAID10 back then: with mdadm, with the JMS56X RAID controller console app, or with both.
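Whether the array is Linux software RAID can be read from /proc/mdstat. The lsblk output in this post shows md0 (type raid10) built on the partitions sda1, sdc1 and sdd1, which points to the kernel md driver managed by mdadm. The sketch below summarizes /proc/mdstat; `mdstat_summary` is a hypothetical helper and assumes the usual `mdX : active <level> <members>` line format.

```shell
#!/bin/sh
# Read-only sketch: summarize the Linux software RAID (md) arrays listed in
# /proc/mdstat. If md0 appears here, the RAID10 is handled by the kernel md
# driver (managed with mdadm).

# Hypothetical helper: for each "mdX : active <level> <members>" line, print
# "<array> <level> <[expected/active]> <[status]> members: <members>".
mdstat_summary() {
    awk '
        /^md[0-9]+ :/ {
            name = $1; level = $4; members = ""
            for (i = 5; i <= NF; i++) members = members " " $i
            next
        }
        # The following line ends with the counts and status, e.g. [4/4] [UUUU].
        name != "" && /blocks/ {
            print name, level, $(NF - 1), $NF, "members:" members
            name = ""
        }
    '
}

if [ "${1:-}" = "--run" ] && [ -r /proc/mdstat ]; then
    mdstat_summary < /proc/mdstat
fi
```

In the output, `[4/3]` means four members are expected and three are active, and each `_` in a status such as `[U_UU]` marks a missing member.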

Here is some output from the JMS56X RAID controller console app:

JMS56X>GC

--> Total available JMS56X controllers = 2 <--

JMS56X>DC C0

Controller[0]

-- ChipID = 10

-- SerialNumber = 427491329

-- SuperUserPwd = ▒▒▒▒▒▒▒▒

-- Sata[0]

------ ModelName = ST2000LM015-2E8174

------ SerialNumber = WDZR8PTA

------ FirmwareVer = 0001

------ Capacity = 1863 GB

------ PortType = Hard Disk

------ PortSpeed = Gen 3

------ Page0State = Hooked to PM

------ Page0RaidIdx = 0

------ Page0MbrIdx = 0

-- Sata[1]

------ ModelName = ST2000LM015-2E8174

------ SerialNumber = WDZR8QKT

------ FirmwareVer = 0001

------ Capacity = 1863 GB

------ PortType = Hard Disk

------ PortSpeed = Gen 3

------ Page0State = Hooked to PM

------ Page0RaidIdx = 0

------ Page0MbrIdx = 0

JMS56X>DC C1

Controller[1]

-- ChipID = 11

-- SerialNumber = 427491329

-- SuperUserPwd = ▒▒▒▒▒▒▒▒

-- Sata[0]

------ ModelName = ST2000LM015-2E8174

------ SerialNumber = WDZR5GQF

------ FirmwareVer = 0001

------ Capacity = 1863 GB

------ PortType = Hard Disk

------ PortSpeed = Gen 3

------ Page0State = Hooked to PM

------ Page0RaidIdx = 0

------ Page0MbrIdx = 0

-- Sata[1]

------ ModelName = ST2000LM015-2E8174

------ SerialNumber = WDZR5NAX

------ FirmwareVer = 0001

------ Capacity = 1863 GB

------ PortType = Hard Disk

------ PortSpeed = Gen 3

------ Page0State = Hooked to PM

------ Page0RaidIdx = 0

------ Page0MbrIdx = 0

root@rpi-nas:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 1.8T 0 disk

└─sda1 8:1 0 1.8T 0 part

└─md0 9:0 0 3.6T 0 raid10 /srv/dev-disk-by-uuid-ea0838f8-5efe-44a3-8cf7-40fd60a73de0

sdb 8:16 0 1.8T 0 disk

└─sdb1 8:17 0 1.8T 0 part

sdc 8:32 0 1.8T 0 disk

└─sdc1 8:33 0 1.8T 0 part

└─md0 9:0 0 3.6T 0 raid10 /srv/dev-disk-by-uuid-ea0838f8-5efe-44a3-8cf7-40fd60a73de0

sdd 8:48 0 1.8T 0 disk

└─sdd1 8:49 0 1.8T 0 part

└─md0 9:0 0 3.6T 0 raid10 /srv/dev-disk-by-uuid-ea0838f8-5efe-44a3-8cf7-40fd60a73de0

mmcblk0 179:0 0 29.7G 0 disk

├─mmcblk0p1 179:1 0 256M 0 part /boot

└─mmcblk0p2 179:2 0 29.5G 0 part /
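The lsblk tree above shows no md0 entry under sdb1. The same check can be made against sysfs, where each member of an md array appears as an entry under /sys/block/<array>/slaves. In this minimal sketch, `md_missing_members` and the `SYSFS_ROOT` override are hypothetical, added only so the function can be tried against a fake directory tree.

```shell
#!/bin/sh
# Read-only sketch: print the partitions (from the list given) that are NOT
# members of an md array, according to sysfs. Each member of md0 shows up as
# an entry in /sys/block/md0/slaves. SYSFS_ROOT is a hypothetical override
# for testing against a fake tree; it defaults to /sys.

# Usage: md_missing_members <array> <partition>...
md_missing_members() {
    md_name=$1
    shift
    slaves_dir="${SYSFS_ROOT:-/sys}/block/$md_name/slaves"
    for part in "$@"; do
        # Entries are symlinks on a real system, plain files in a fake tree.
        if [ ! -e "$slaves_dir/$part" ] && [ ! -L "$slaves_dir/$part" ]; then
            echo "$part"
        fi
    done
}
```

For the disks in this post the call would be `md_missing_members md0 sda1 sdb1 sdc1 sdd1`; given the lsblk output above, sdb1 is the partition one would expect it to print. A member that md has marked faulty but not yet removed still appears under slaves, so this only catches partitions that are fully out of the array.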

Of course, it would be great if I could integrate the hard drive back into the array without losing any data.
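One common way to bring a dropped partition back into an mdadm array is `mdadm --manage <array> --re-add <partition>`, falling back to `--add`. The sketch below assumes the array is /dev/md0 and the dropped partition is /dev/sdb1, that the disk really is healthy, and that a current backup exists; `readd_member`, `run` and the `DRY_RUN` switch are hypothetical, and by default the commands are only printed.

```shell
#!/bin/sh
# Sketch: put a dropped partition back into an mdadm array.
# ASSUMPTIONS: array /dev/md0, dropped partition /dev/sdb1 (from lsblk),
# the disk is healthy and a current backup exists. readd_member, run and
# DRY_RUN are hypothetical; DRY_RUN defaults to 1, which only prints commands.

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Usage: readd_member <array> <partition>
readd_member() {
    array=$1
    part=$2
    # --re-add returns the partition to its old slot if its superblock still
    # matches; with a write-intent bitmap only changed regions are resynced.
    # If that is refused, --add inserts it as a new member and md rebuilds it
    # completely from the remaining copy.
    # (If md0 still lists the partition as faulty, remove it first with:
    #  mdadm --manage /dev/md0 --remove /dev/sdb1)
    run mdadm --manage "$array" --re-add "$part" ||
        run mdadm --manage "$array" --add "$part"
    # Resync/rebuild progress is shown in /proc/mdstat.
    run cat /proc/mdstat
}
```

Calling `DRY_RUN=0 readd_member /dev/md0 /dev/sdb1` as root would run the commands for real. A full rebuild after `--add` copies the whole 2 TB partition and can take several hours; until it finishes, the data that sdb1 mirrors still exists in only one copy.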

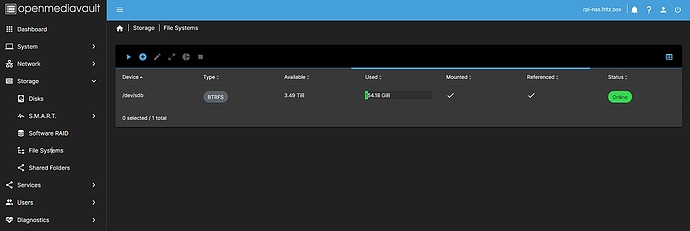

Here are a few more screenshots from Openmediavault:

Best regards, Carsten