There is a problem with the clock configuration of the RK3588 in every known implementation (mainline, BSP, etc.): the GPU/NPU clocks are not set to their nominal maximum values when the CRU is used.

To understand why, it helps to look at how clocks are assigned to the individual SoC cores.

The base source frequency is 24 MHz. It feeds everything and it is fixed.

There are several PLLs that take this frequency and synthesize another frequency from it. The PLLs are generally not changed once they are configured: they are set up when the device boots, and that's it.

Individual cores (e.g. the GPU) get their source clock from a PLL output. The cores, however, dynamically change the frequency they run at by dividing that PLL output. So they can bring the PLL output down to their own desired clock by dividing it (decrease only, they can't multiply).

Put simply, it looks like this:

24 MHz -> PLL (multipliers and dividers [p, m, s, k]) -> GPU/NPU (divider) = dynamic frequency.

There are a bunch of PLLs, but for the GPU only CPLL, GPLL, V0PLL, AUPLL, SPLL and NPLL are relevant. The GPU can select one of those and divide it by a value in the range [1-32].

The default configuration for those PLLs is:

AUPLL: 786.432 MHz

CPLL : 1500 MHz

V0PLL: 1188 MHz

NPLL : 850 MHz

GPLL : 1188 MHz

You can verify this with:

sudo cat /sys/kernel/debug/clk/clk_summary | grep pll_

The problem with a frequency divider is that the steps are fine-grained in the lower frequency range, but in the higher frequency range they are colossal.

E.g.:

for CPLL: 1500/1 = 1500 MHz, 1500/2 = 750 MHz, 1500/3 = 500 MHz, 1500/4 = 375 MHz ...

So you can jump from 1500 to 750, and from there to 500. The frequencies in between cannot be set.
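To make the step sizes concrete, here is a minimal Python sketch of the integer divider applied to CPLL. It assumes the divider range [1-32] and the 1500 MHz CPLL default stated above; the function name `divider_steps` is only illustrative and is not part of any kernel or tool.

```python
def divider_steps(pll_mhz, max_div=32):
    """Frequencies reachable from one PLL with an integer divider 1..max_div."""
    return [pll_mhz / div for div in range(1, max_div + 1)]


# CPLL default taken from the list above (assumption: it stays at 1500 MHz).
steps = divider_steps(1500.0)

# Gap between each step and the next lower one.
gaps = [hi - lo for hi, lo in zip(steps, steps[1:])]

for div in range(1, 6):
    print(
        f"/{div} -> /{div + 1}: "
        f"{steps[div - 1]:7.1f} -> {steps[div]:7.1f} MHz "
        f"(gap {gaps[div - 1]:.1f} MHz)"
    )
```

The first gap is 750 MHz and the fifth is already down to 50 MHz, which is the uneven spacing described above.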

If you take the frequencies of all the PLLs listed above and divide them, you get the steps below:

... 500, 594, 702, 750, 786, 850, 1188, 1500 MHz. And this is exactly the problem.

When you look at the opp_table of the GPU/NPU, you can see that it is configured to request ... 500, 600, 700, 800, 900, 1000 MHz, but the PLLs and the core dividers cannot produce such frequencies.

Instead, when you request e.g. 1000 MHz, the clock driver picks the highest achievable frequency from the table above that is lower than the requested one. In this case that is 850 MHz. This is the top frequency you can get, and that's the problem.
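The selection described above can be sketched in a few lines of Python. This is a simplified model of the behaviour, not the kernel's actual clock code: it tries every listed PLL with every divider in [1-32] and keeps the highest result that does not exceed the request. SPLL is left out because its default rate is not given in the list above, and `best_rate` is a hypothetical helper name.

```python
# Default PLL rates in MHz as listed above.
# SPLL is omitted on purpose: its rate is not stated in the text.
DEFAULT_PLLS_MHZ = {
    "AUPLL": 786.432,
    "CPLL": 1500.0,
    "V0PLL": 1188.0,
    "NPLL": 850.0,
    "GPLL": 1188.0,
}


def best_rate(requested_mhz, plls=DEFAULT_PLLS_MHZ, max_div=32):
    """Return (rate, pll_name, divider) for the highest rate <= requested_mhz.

    Returns None if no PLL/divider combination is low enough.
    """
    best = None
    for name, pll_mhz in plls.items():
        for div in range(1, max_div + 1):
            rate = pll_mhz / div
            if rate <= requested_mhz and (best is None or rate > best[0]):
                best = (rate, name, div)
    return best


# OPP points named in the text.
for opp in (500, 600, 700, 800, 900, 1000):
    rate, name, div = best_rate(opp)
    print(f"request {opp:4d} MHz -> {rate:8.3f} MHz ({name} / {div})")
```

Under these assumptions a 1000 MHz request resolves to NPLL / 1 = 850 MHz, which matches the ceiling observed on the real hardware.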

You can also verify this as follows.

Set the GPU governor to performance:

sudo bash -c "echo performance > /sys/class/devfreq/fb000000.gpu/governor" (Note: your fb000000.gpu node might have a different name, check it first)

Check the clock that was actually assigned:

sudo cat /sys/kernel/debug/clk/clk_summary | grep gpu

You will see that it tops out at 850 MHz no matter what.

The Solution:

The actual solution is to tune the PLL clocks so that they can provide the frequencies requested in the opp_tables. This is not as easy as it sounds, because the PLLs above are also used by a bunch of other cores besides the GPU, and if your new clock does not meet the frequency tolerance of those cores, you will break them.

But there is an easy approach. NPLL is mainly used for the NPU, which has the same clock opp_table as the GPU. So if we modify NPLL, we have the least impact on other components.

So I just bumped the NPLL clock from 850 MHz to 4 GHz so that we get a bunch more divided frequencies. With that change, the available frequencies are:

500, 571, 594, 666, 702, 750, 786, 800, 1000, 1188, 1333, 1500 ...

Now we can get a proper 1 GHz or 800 MHz.
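As a rough cross-check, the Python sketch below tests which of the OPP points named earlier (500 to 1000 MHz) can be hit exactly by some PLL and an integer divider in [1-32], first with NPLL at 850 MHz and then at 4 GHz. Rates are kept in kHz so the arithmetic stays in integers. SPLL is left out because its rate is not given in the text, and `exact_hits` is a hypothetical helper name.

```python
OPP_MHZ = (500, 600, 700, 800, 900, 1000)  # OPP points named in the text

# PLL rates in kHz; SPLL is omitted because its rate is not stated above.
PLLS_BEFORE_KHZ = {
    "AUPLL": 786_432,
    "CPLL": 1_500_000,
    "V0PLL": 1_188_000,
    "NPLL": 850_000,
    "GPLL": 1_188_000,
}
# Same table with only NPLL changed to 4 GHz.
PLLS_AFTER_KHZ = dict(PLLS_BEFORE_KHZ, NPLL=4_000_000)


def exact_hits(plls_khz, opps_mhz=OPP_MHZ, max_div=32):
    """Map each exactly reachable OPP (MHz) to the first (pll_name, divider)."""
    hits = {}
    for opp in opps_mhz:
        target_khz = opp * 1000
        for name, rate_khz in plls_khz.items():
            div = rate_khz // target_khz
            if rate_khz % target_khz == 0 and 1 <= div <= max_div:
                hits[opp] = (name, div)
                break
    return hits


before = exact_hits(PLLS_BEFORE_KHZ)
after = exact_hits(PLLS_AFTER_KHZ)
print("exact OPPs before:", sorted(before))
print("exact OPPs after: ", sorted(after))
```

In this model only 500 MHz is an exact hit with the default PLLs, while 800 and 1000 MHz become exact once NPLL runs at 4 GHz (NPLL / 5 and NPLL / 4). 600, 700 and 900 MHz still have no exact match.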

Here is the fix: https://github.com/hbiyik/linux/commit/e4fd428dd34fe13cbd5fa6ed79e2f787bc7655b0

Now, when the governor is set to performance, you actually get 1 GHz, and you can verify this with:

sudo cat /sys/kernel/debug/clk/clk_summary | grep gpu

I have also benchmarked this with glmark2-wayland -b terrain on Weston.

Before, the score was 112 fps. With the fix it is 130 fps! So this is our way of getting back the lost 16% of performance.

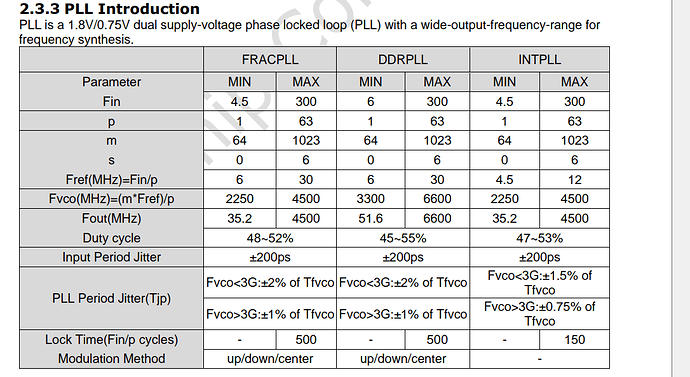

It is worth noting that setting NPLL to 4 GHz is just a workaround, because step frequencies like 900 MHz are still not available. Also, 4 GHz is way above the 1.5 GHz maximum defined for this SoC in the BSP, a limit that itself contradicts the TRM's statement of 4.5 GHz (the FracPLL Fout part).

Future Plans

It is also possible to bypass the kernel and set the individual CRU registers with the mmm tool that I created. I have also set the GPU frequency to 1.5 GHz this way, so the tool works. However, a second tool is needed to change the regulator voltages over the RK806 so that such frequencies are fed to the GPU properly. Otherwise it will just crash when the voltage is not high enough.

A few general tips to get started with mmm:

//to get the actual CRU registers status

sudo python mmm.py get -c rk3588 -d CRU

//to get clocks in the CRU

sudo python mmm.py get -c rk3588 -d CRU -p clock

//to set the PLL source of the GPU to GPLL

sudo python mmm.py set -c rk3588 -d CRU -r GPU_CLKSEL -p sel GPLL

//to set the PLL source of the GPU to SPLL

sudo python mmm.py set -c rk3588 -d CRU -r GPU_CLKSEL -p sel SPLL

//to set the GPU clock divider to 1 (caution: the value minus 1 must be entered)

sudo python mmm.py set -c rk3588 -d CRU -r GPU_CLKSEL -p div 0

//to set the GPU clock divider to 2 (caution: the value minus 1 must be entered)

sudo python mmm.py set -c rk3588 -d CRU -r GPU_CLKSEL -p div 1
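Since the off-by-one in the divider field is easy to get wrong, here is a small Python sketch that converts the divider you want into the value to type, and prints the matching command line. It is not part of mmm: `div_field` and `mmm_div_command` are hypothetical helpers, the [1-32] range is taken from the text above, and the command string only reuses the options already shown.

```python
def div_field(divider, max_div=32):
    """Convert a desired divider (1..max_div) to the register field value."""
    if not 1 <= divider <= max_div:
        raise ValueError(f"divider must be in 1..{max_div}, got {divider}")
    return divider - 1  # the field holds "divider minus 1"


def mmm_div_command(divider):
    """Build the mmm command line for a GPU divider (string only, not executed)."""
    return (
        "sudo python mmm.py set -c rk3588 -d CRU "
        f"-r GPU_CLKSEL -p div {div_field(divider)}"
    )


# Print the command for dividing the selected PLL by 2.
print(mmm_div_command(2))
```

The helper only builds a string, so nothing is written to the hardware until you run the printed command yourself.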

So the tool is quite capable, but be careful: don't burn your device or break it. You have been warned…

Interest in the tool from any developer or tinkerer is appreciated, but again, be careful: it is a scalpel for a surgeon…

But it makes an interesting project I should consider. I have no idea where to start to run code on the GPU there, I’m totally ignorant of these things.
