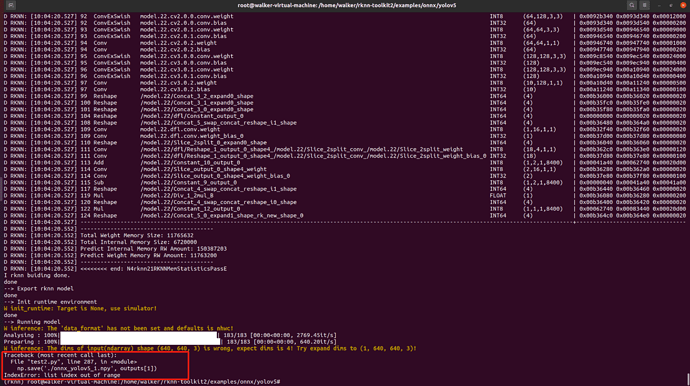

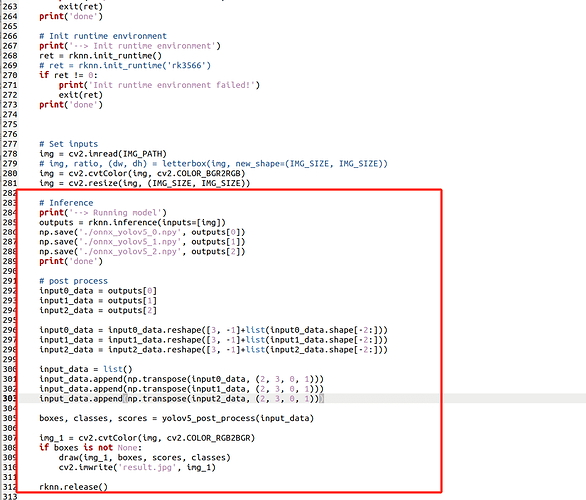

What's going on? How can I fix it?

Using YOLOv8 on the RK3588 NPU

try img = np.reshape(img, (1, 640,640,3)) before inference?
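A minimal sketch of what that reshape does, assuming the image is already a 640x640 RGB array in height-width-channel order. The zero array is only a stand-in for the real frame, and `np.expand_dims` is shown as an equivalent way to add the batch axis.

```python
import numpy as np

# Stand-in for a decoded frame: 640x640 pixels, 3 channels (HWC), uint8.
img = np.zeros((640, 640, 3), dtype=np.uint8)

# Add the leading batch dimension so the shape becomes (1, 640, 640, 3).
batched = np.reshape(img, (1, 640, 640, 3))

# Equivalent, without hard-coding the image size.
batched_alt = np.expand_dims(img, axis=0)

print(batched.shape, batched_alt.shape)
```

The reshape only succeeds if the image already holds 640*640*3 values, so a frame of any other size has to be resized first.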

PS: using the CPU with YOLOv8:

(venv) ubuntu@ubuntu:~/ultralytics$ yolo predict model=./yolov8n_saved_model/yolov8n_int8.tflite imgsz=320

WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify', or 'pose'.

WARNING ⚠️ 'source' is missing. Using default 'source=/home/ubuntu/ultralytics/ultralytics/assets'.

Ultralytics YOLOv8.0.215 🚀 Python-3.10.12 torch-2.1.1 CPU (Cortex-A55)

/usr/lib/python3/dist-packages/scipy/__init__.py:146: UserWarning: A NumPy version >=1.17.3 and <1.25.0 is required for this version of SciPy (detected version 1.26.2

warnings.warn(f"A NumPy version >={np_minversion} and <{np_maxversion}"

Loading yolov8n_saved_model/yolov8n_int8.tflite for TensorFlow Lite inference...

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

image 1/2 /home/ubuntu/ultralytics/ultralytics/assets/bus.jpg: 320x320 4 persons, 1 bus, 78.5ms

image 2/2 /home/ubuntu/ultralytics/ultralytics/assets/zidane.jpg: 320x320 2 persons, 67.7ms

Speed: 5.9ms preprocess, 73.1ms inference, 3.5ms postprocess per image at shape (1, 3, 320, 320)

Results saved to /home/ubuntu/ultralytics/runs/detect/predict5

💡 Learn more at https://docs.ultralytics.com/modes/predict

That is a single big core and likely more optimisation could be done.

The 3-core NPU is such a strange inclusion; if it were 4-core and you could partition models across the CPU cores and the NPU, then wow…

Can I run this with your tool?

https://developer.nvidia.com/embedded/community/jetson-projects/spaghetti_detective#:~:text=Obico%20is%20an%20open-source,alerts%20when%20one%20is%20detected.

Probably could, but it looks very x86-oriented; the NVIDIA option is likely just CUDA and any NVIDIA GPU.

Unravelling the x86 spaghetti could be simple or turn into a marathon. What they use the GPU for I don't know, and it's just an option.

I think I got the wrong tflite last time, but I have also compiled tflite_runtime natively for the RK3588:

(venv) (base) stuart@stuart-desktop:~/ultralytics$ yolo predict model=./yolov8n_saved_model/yolov8n_full_integer_quant.tflite imgsz=320 conf=0.25

WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify', or 'pose'.

WARNING ⚠️ 'source' is missing. Using default 'source=/home/stuart/ultralytics/ultralytics/assets'.

Ultralytics YOLOv8.0.216 🚀 Python-3.11.5 torch-2.1.1 CPU (Cortex-A55)

Loading yolov8n_saved_model/yolov8n_full_integer_quant.tflite for TensorFlow Lite inference...

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

image 1/2 /home/stuart/ultralytics/ultralytics/assets/bus.jpg: 320x320 7 persons, 1 bus, 39.0ms

image 2/2 /home/stuart/ultralytics/ultralytics/assets/zidane.jpg: 320x320 4 persons, 33.2ms

Speed: 4.6ms preprocess, 36.1ms inference, 3.6ms postprocess per image at shape (1, 3, 320, 320)

Results saved to /home/stuart/ultralytics/runs/detect/predict10

💡 Learn more at https://docs.ultralytics.com/modes/predict

That seems to be running on a single core (the YOLOv8 framework is just so great), but after hacking 4 threads into tflite:

(venv) (base) stuart@stuart-desktop:~/ultralytics$ yolo predict model=./yolov8n_saved_model/yolov8n_full_integer_quant.tflite imgsz=320 conf=0.25

WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify', or 'pose'.

WARNING ⚠️ 'source' is missing. Using default 'source=/home/stuart/ultralytics/ultralytics/assets'.

Ultralytics YOLOv8.0.216 🚀 Python-3.11.5 torch-2.1.1 CPU (Cortex-A55)

Loading yolov8n_saved_model/yolov8n_full_integer_quant.tflite for TensorFlow Lite inference...

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

image 1/2 /home/stuart/ultralytics/ultralytics/assets/bus.jpg: 320x320 7 persons, 1 bus, 26.6ms

image 2/2 /home/stuart/ultralytics/ultralytics/assets/zidane.jpg: 320x320 4 persons, 19.2ms

Speed: 3.4ms preprocess, 22.9ms inference, 3.0ms postprocess per image at shape (1, 3, 320, 320)

Results saved to /home/stuart/ultralytics/runs/detect/predict23

💡 Learn more at https://docs.ultralytics.com/modes/predict

I seem to remember that threading with tflite gives diminishing returns, so it is probably better to keep each model on one core: load 4 models and feed the input round-robin across the 4 large cores, which could probably push it to 100 FPS, maybe higher.
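The round-robin idea could be sketched roughly like this. Everything here is illustrative: `fake_infer` is a hypothetical stand-in for one model's inference call, and threads are used only to keep the sketch short. A real setup would more likely use separate processes, each with its own interpreter, pinned to the big cores.

```python
import itertools
import queue
import threading


def fake_infer(worker_id, frame):
    # Hypothetical stand-in for one model instance running inference.
    return f"worker{worker_id}:{frame}"


def worker(worker_id, inbox, results):
    # Each worker owns one model and drains only its own queue.
    while True:
        item = inbox.get()
        if item is None:  # sentinel: no more frames for this worker
            break
        index, frame = item
        results[index] = fake_infer(worker_id, frame)


def run_round_robin(frames, num_workers=4):
    inboxes = [queue.Queue() for _ in range(num_workers)]
    results = [None] * len(frames)
    threads = [
        threading.Thread(target=worker, args=(i, inboxes[i], results))
        for i in range(num_workers)
    ]
    for t in threads:
        t.start()
    # Frame 0 goes to worker 0, frame 1 to worker 1, and so on, wrapping around.
    for (index, frame), inbox in zip(enumerate(frames), itertools.cycle(inboxes)):
        inbox.put((index, frame))
    for inbox in inboxes:
        inbox.put(None)
    for t in threads:
        t.join()
    return results


results = run_round_robin([f"frame{i}" for i in range(8)])
print(results)
```

Results are stored by frame index, so the output order matches the input order even when workers finish at different times.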

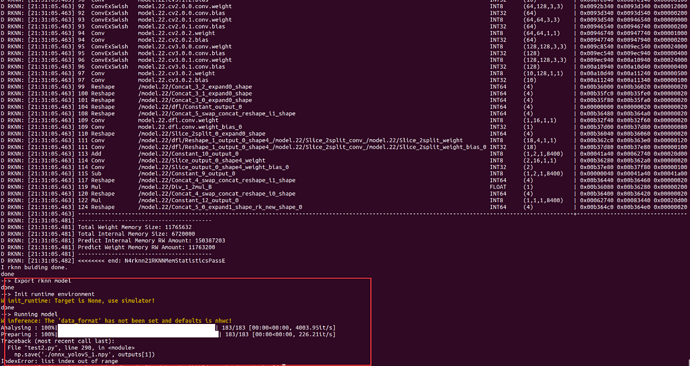

I had a go with rknn and got it to run, but it is almost 2x slower than what I get on the CPU, and it complains about stride length…?

The output is also a crock, so I gave up.

Running as 4 separate CLI processes:

(venv) stuart@stuart-desktop:~/ultralytics$ ./test

WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify', or 'pose'.

WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify', or 'pose'.

WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify', or 'pose'.

WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify', or 'pose'.

Ultralytics YOLOv8.0.217 🚀 Python-3.10.12 torch-2.1.1 CPU (Cortex-A55)

Ultralytics YOLOv8.0.217 🚀 Python-3.10.12 torch-2.1.1 CPU (Cortex-A55)

Ultralytics YOLOv8.0.217 🚀 Python-3.10.12 torch-2.1.1 CPU (Cortex-A55)

Ultralytics YOLOv8.0.217 🚀 Python-3.10.12 torch-2.1.1 CPU (Cortex-A55)

Loading yolov8n_saved_model/yolov8n_full_integer_quant.tflite for TensorFlow Lite inference...

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Loading yolov8n_saved_model/yolov8n_full_integer_quant.tflite for TensorFlow Lite inference...

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Loading yolov8n_saved_model/yolov8n_full_integer_quant.tflite for TensorFlow Lite inference...

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

Loading yolov8n_saved_model/yolov8n_full_integer_quant.tflite for TensorFlow Lite inference...

INFO: Created TensorFlow Lite XNNPACK delegate for CPU.

image 1/28 /tmp/images/0x0.webp: 320x320 1 car, 38.5ms

image 1/28 /tmp/images/0x0.webp: 320x320 1 car, 37.9ms

image 1/28 /tmp/images/0x0.webp: 320x320 1 car, 42.3ms

image 2/28 /tmp/images/1_JS286428040.webp: 320x320 3 persons, 30.9ms

image 2/28 /tmp/images/1_JS286428040.webp: 320x320 3 persons, 30.2ms

image 2/28 /tmp/images/1_JS286428040.webp: 320x320 3 persons, 95.0ms

image 1/28 /tmp/images/0x0.webp: 320x320 1 car, 44.6ms

image 3/28 /tmp/images/1d6830cc-9382-11ed-ad8c-0210609a3fe2.jpg: 320x320 5 persons, 36.6ms

image 2/28 /tmp/images/1_JS286428040.webp: 320x320 3 persons, 32.7ms

image 3/28 /tmp/images/1d6830cc-9382-11ed-ad8c-0210609a3fe2.jpg: 320x320 5 persons, 45.8ms

image 3/28 /tmp/images/1d6830cc-9382-11ed-ad8c-0210609a3fe2.jpg: 320x320 5 persons, 33.4ms

image 4/28 /tmp/images/220805-border-collie-play-mn-1100-82d2f1.webp: 320x320 1 dog, 31.2ms

image 5/28 /tmp/images/90-vauxhall-corsa-electric-best-small-cars.jpg: 320x320 1 car, 29.4ms

image 4/28 /tmp/images/220805-border-collie-play-mn-1100-82d2f1.webp: 320x320 1 dog, 52.2ms

image 3/28 /tmp/images/1d6830cc-9382-11ed-ad8c-0210609a3fe2.jpg: 320x320 5 persons, 42.7ms

image 4/28 /tmp/images/220805-border-collie-play-mn-1100-82d2f1.webp: 320x320 1 dog, 30.6ms

image 6/28 /tmp/images/Can-a-single-person-own-a-firm-in-India.jpg: 320x320 1 person, 30.4ms

image 5/28 /tmp/images/90-vauxhall-corsa-electric-best-small-cars.jpg: 320x320 1 car, 32.9ms

image 4/28 /tmp/images/220805-border-collie-play-mn-1100-82d2f1.webp: 320x320 1 dog, 33.0ms

image 5/28 /tmp/images/90-vauxhall-corsa-electric-best-small-cars.jpg: 320x320 1 car, 30.3ms

image 7/28 /tmp/images/DCTM_Penguin_UK_DK_AL697473_RGB_PNG_namnse.webp: 320x320 1 cat, 29.7ms

image 6/28 /tmp/images/Can-a-single-person-own-a-firm-in-India.jpg: 320x320 1 person, 31.5ms

image 5/28 /tmp/images/90-vauxhall-corsa-electric-best-small-cars.jpg: 320x320 1 car, 34.9ms

image 6/28 /tmp/images/Can-a-single-person-own-a-firm-in-India.jpg: 320x320 1 person, 44.4ms

image 8/28 /tmp/images/Planning-a-teen-party-narrow.jpg: 320x320 9 persons, 30.1ms

image 6/28 /tmp/images/Can-a-single-person-own-a-firm-in-India.jpg: 320x320 1 person, 31.8ms

image 7/28 /tmp/images/DCTM_Penguin_UK_DK_AL697473_RGB_PNG_namnse.webp: 320x320 1 cat, 42.6ms

image 7/28 /tmp/images/DCTM_Penguin_UK_DK_AL697473_RGB_PNG_namnse.webp: 320x320 1 cat, 29.7ms

image 9/28 /tmp/images/RollingStoneAwardsAaronParsonsPhotography23.11_highres_30-1024x683.jpg: 320x320 4 persons, 30.6ms

image 7/28 /tmp/images/DCTM_Penguin_UK_DK_AL697473_RGB_PNG_namnse.webp: 320x320 1 cat, 30.3ms

image 8/28 /tmp/images/Planning-a-teen-party-narrow.jpg: 320x320 9 persons, 29.7ms

image 8/28 /tmp/images/Planning-a-teen-party-narrow.jpg: 320x320 9 persons, 38.5ms

image 8/28 /tmp/images/Planning-a-teen-party-narrow.jpg: 320x320 9 persons, 29.5ms

image 10/28 /tmp/images/VIER PFOTEN_2016-07-08_011-5184x2712-1200x628.jpg: 320x320 1 cat, 33.2ms

image 9/28 /tmp/images/RollingStoneAwardsAaronParsonsPhotography23.11_highres_30-1024x683.jpg: 320x320 4 persons, 31.0ms

image 9/28 /tmp/images/RollingStoneAwardsAaronParsonsPhotography23.11_highres_30-1024x683.jpg: 320x320 4 persons, 33.0ms

image 9/28 /tmp/images/RollingStoneAwardsAaronParsonsPhotography23.11_highres_30-1024x683.jpg: 320x320 4 persons, 29.8ms

image 11/28 /tmp/images/XLB_4.png: 320x320 1 dog, 30.1ms

image 10/28 /tmp/images/VIER PFOTEN_2016-07-08_011-5184x2712-1200x628.jpg: 320x320 1 cat, 30.8ms

image 10/28 /tmp/images/VIER PFOTEN_2016-07-08_011-5184x2712-1200x628.jpg: 320x320 1 cat, 31.0ms

image 10/28 /tmp/images/VIER PFOTEN_2016-07-08_011-5184x2712-1200x628.jpg: 320x320 1 cat, 29.5ms

image 12/28 /tmp/images/_119254021_lotusemira.jpg: 320x320 1 car, 30.7ms

image 11/28 /tmp/images/XLB_4.png: 320x320 1 dog, 30.1ms

image 11/28 /tmp/images/XLB_4.png: 320x320 1 dog, 31.1ms

image 13/28 /tmp/images/athletic-women-walking-together-on-remote-trail-royalty-free-image-1626378592.jpg: 320x320 5 persons, 30.1ms

image 11/28 /tmp/images/XLB_4.png: 320x320 1 dog, 32.8ms

image 12/28 /tmp/images/_119254021_lotusemira.jpg: 320x320 1 car, 29.8ms

image 12/28 /tmp/images/_119254021_lotusemira.jpg: 320x320 1 car, 30.5ms

image 14/28 /tmp/images/birthdayparty.jpg: 320x320 5 persons, 30.3ms

image 12/28 /tmp/images/_119254021_lotusemira.jpg: 320x320 1 car, 29.7ms

image 13/28 /tmp/images/athletic-women-walking-together-on-remote-trail-royalty-free-image-1626378592.jpg: 320x320 5 persons, 31.1ms

image 13/28 /tmp/images/athletic-women-walking-together-on-remote-trail-royalty-free-image-1626378592.jpg: 320x320 5 persons, 31.4ms

image 15/28 /tmp/images/bloat_md.jpg: 320x320 3 persons, 3 bicycles, 1 bench, 30.1ms

image 13/28 /tmp/images/athletic-women-walking-together-on-remote-trail-royalty-free-image-1626378592.jpg: 320x320 5 persons, 31.9ms

image 14/28 /tmp/images/birthdayparty.jpg: 320x320 5 persons, 40.1ms

image 14/28 /tmp/images/birthdayparty.jpg: 320x320 5 persons, 32.6ms

image 16/28 /tmp/images/bus.jpg: 320x320 5 persons, 1 bus, 29.5ms

image 14/28 /tmp/images/birthdayparty.jpg: 320x320 5 persons, 39.0ms

image 15/28 /tmp/images/bloat_md.jpg: 320x320 3 persons, 3 bicycles, 1 bench, 30.4ms

image 15/28 /tmp/images/bloat_md.jpg: 320x320 3 persons, 3 bicycles, 1 bench, 31.8ms

image 15/28 /tmp/images/bloat_md.jpg: 320x320 3 persons, 3 bicycles, 1 bench, 30.5ms

image 17/28 /tmp/images/gettyimages-1094874726.png: 320x320 1 dog, 1 sheep, 30.3ms

image 16/28 /tmp/images/bus.jpg: 320x320 5 persons, 1 bus, 29.7ms

image 16/28 /tmp/images/bus.jpg: 320x320 5 persons, 1 bus, 32.5ms

image 16/28 /tmp/images/bus.jpg: 320x320 5 persons, 1 bus, 29.5ms

image 18/28 /tmp/images/image (1).jpg: 320x320 5 persons, 38.7ms

image 17/28 /tmp/images/gettyimages-1094874726.png: 320x320 1 dog, 1 sheep, 30.6ms

image 17/28 /tmp/images/gettyimages-1094874726.png: 320x320 1 dog, 1 sheep, 30.7ms

image 19/28 /tmp/images/image.jpg: 320x320 1 car, 5 trucks, 30.0ms

image 17/28 /tmp/images/gettyimages-1094874726.png: 320x320 1 dog, 1 sheep, 29.7ms

image 18/28 /tmp/images/image (1).jpg: 320x320 5 persons, 35.0ms

image 18/28 /tmp/images/image (1).jpg: 320x320 5 persons, 31.9ms

image 20/28 /tmp/images/images.jpg: 320x320 2 persons, 30.2ms

image 18/28 /tmp/images/image (1).jpg: 320x320 5 persons, 30.2ms

image 19/28 /tmp/images/image.jpg: 320x320 1 car, 5 trucks, 29.7ms

image 21/28 /tmp/images/kiss-haunted-house-party-watch-back-performances.jpg: 320x320 4 persons, 29.6ms

image 19/28 /tmp/images/image.jpg: 320x320 1 car, 5 trucks, 32.4ms

image 19/28 /tmp/images/image.jpg: 320x320 1 car, 5 trucks, 31.7ms

image 20/28 /tmp/images/images.jpg: 320x320 2 persons, 32.7ms

image 22/28 /tmp/images/man-walking-1024x651.jpg: 320x320 2 persons, 29.6ms

image 20/28 /tmp/images/images.jpg: 320x320 2 persons, 30.5ms

image 20/28 /tmp/images/images.jpg: 320x320 2 persons, 30.8ms

image 21/28 /tmp/images/kiss-haunted-house-party-watch-back-performances.jpg: 320x320 4 persons, 31.3ms

image 23/28 /tmp/images/party-games.png: 320x320 6 persons, 29.7ms

image 21/28 /tmp/images/kiss-haunted-house-party-watch-back-performances.jpg: 320x320 4 persons, 29.0ms

image 21/28 /tmp/images/kiss-haunted-house-party-watch-back-performances.jpg: 320x320 4 persons, 31.4ms

image 22/28 /tmp/images/man-walking-1024x651.jpg: 320x320 2 persons, 30.1ms

image 24/28 /tmp/images/truck.webp: 320x320 1 truck, 29.6ms

image 22/28 /tmp/images/man-walking-1024x651.jpg: 320x320 2 persons, 30.9ms

image 22/28 /tmp/images/man-walking-1024x651.jpg: 320x320 2 persons, 30.0ms

image 23/28 /tmp/images/party-games.png: 320x320 6 persons, 30.2ms

image 25/28 /tmp/images/walking.jpg: 320x320 1 person, 29.6ms

image 23/28 /tmp/images/party-games.png: 320x320 6 persons, 29.9ms

image 23/28 /tmp/images/party-games.png: 320x320 6 persons, 30.4ms

image 24/28 /tmp/images/truck.webp: 320x320 1 truck, 30.0ms

image 24/28 /tmp/images/truck.webp: 320x320 1 truck, 36.3ms

image 24/28 /tmp/images/truck.webp: 320x320 1 truck, 39.0ms

image 26/28 /tmp/images/why-do-cats-have-whiskers-1.jpg: 320x320 (no detections), 31.1ms

image 25/28 /tmp/images/walking.jpg: 320x320 1 person, 32.9ms

image 25/28 /tmp/images/walking.jpg: 320x320 1 person, 29.7ms

image 25/28 /tmp/images/walking.jpg: 320x320 1 person, 29.8ms

image 27/28 /tmp/images/why-is-it-called-a-semi-truck.jpg: 320x320 1 truck, 31.9ms

image 26/28 /tmp/images/why-do-cats-have-whiskers-1.jpg: 320x320 (no detections), 31.1ms

image 26/28 /tmp/images/why-do-cats-have-whiskers-1.jpg: 320x320 (no detections), 31.5ms

image 26/28 /tmp/images/why-do-cats-have-whiskers-1.jpg: 320x320 (no detections), 29.8ms

image 28/28 /tmp/images/wild-dog.jpg: 320x320 1 sheep, 30.2ms

Speed: 7.2ms preprocess, 31.1ms inference, 6.3ms postprocess per image at shape (1, 3, 320, 320)

💡 Learn more at https://docs.ultralytics.com/modes/predict

image 27/28 /tmp/images/why-is-it-called-a-semi-truck.jpg: 320x320 1 truck, 30.5ms

image 27/28 /tmp/images/why-is-it-called-a-semi-truck.jpg: 320x320 1 truck, 29.6ms

image 27/28 /tmp/images/why-is-it-called-a-semi-truck.jpg: 320x320 1 truck, 29.8ms

image 28/28 /tmp/images/wild-dog.jpg: 320x320 1 sheep, 30.0ms

Speed: 6.7ms preprocess, 33.4ms inference, 6.7ms postprocess per image at shape (1, 3, 320, 320)

💡 Learn more at https://docs.ultralytics.com/modes/predict

image 28/28 /tmp/images/wild-dog.jpg: 320x320 1 sheep, 46.2ms

Speed: 6.7ms preprocess, 32.8ms inference, 6.4ms postprocess per image at shape (1, 3, 320, 320)

💡 Learn more at https://docs.ultralytics.com/modes/predict

image 28/28 /tmp/images/wild-dog.jpg: 320x320 1 sheep, 30.1ms

Speed: 6.9ms preprocess, 34.6ms inference, 7.4ms postprocess per image at shape (1, 3, 320, 320)

💡 Learn more at https://docs.ultralytics.com/modes/predict

It does for you, but as you can clearly see it works for me; the FPS is just not that great.

I’m not entirely sure what you’re trying to achieve here, but the rknn model conversion completed successfully (‘done’). The code appears to fail on the inference side, where it attempts to use YOLOv5 post-processing, and it also appears to be running on your PC (‘Target is None, use simulator!’) rather than on your RK3588 device. Have you tried your model on the device itself, using the toolkit lite, to see whether it works as expected?
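As a rough sketch of what running on the device with the toolkit lite could look like, assuming RKNN Toolkit Lite2 (`rknnlite`) is installed on the RK3588 itself. The function name `run_on_device` and its arguments are placeholders, and the raw outputs would still need YOLOv8-specific post-processing, which is not shown.

```python
def run_on_device(model_path, image):
    """Run one inference on the RK3588 NPU via RKNN Toolkit Lite2."""
    # Imported lazily so this file still loads on a PC without rknnlite.
    from rknnlite.api import RKNNLite

    rknn = RKNNLite()
    if rknn.load_rknn(model_path) != 0:
        raise RuntimeError("load_rknn failed")
    # core_mask selects the NPU cores; NPU_CORE_0_1_2 requests all three.
    if rknn.init_runtime(core_mask=RKNNLite.NPU_CORE_0_1_2) != 0:
        raise RuntimeError("init_runtime failed")
    try:
        # Returns the raw output tensors; decoding boxes is left to the caller.
        return rknn.inference(inputs=[image])
    finally:
        rknn.release()
```

Because this path does not go through the PC-side toolkit, it should not fall back to the simulator.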

Forget about this thread here.

You can have YOLOv5, YOLOv6, YOLOv7, YOLOv8, and a lot more, converted to and running with rknn using this: https://github.com/airockchip/rknn_model_zoo

It looks like a subset of the official Rockchip one, I think…

@avaf That's interesting. Also, the YOLO models there use 640x640, whereas Frigate uses 320x320, but mAP actually takes a nosedive at 320x320, likely because training was at 640x640.

I guess also, when posting, mention whether you have altered the DTB to enable https://github.com/rockchip-linux/rknpu2/blob/master/doc/RK3588_NPU_SRAM_usage.md, as I think you will only ever hit the rated TOPS if it is enabled, but I guess we will see.

Yes, I have that enabled.

SRAM bitmap: "*" - used, "." - free (1bit = 4KB)

[000] [................................]

[001] [................................]

[002] [................................]

[003] [................................]

[004] [................................]

[005] [................................]

[006] [................................]

[007] [...............]

SRAM total size: 978944, used: 0, free: 978944

[ 7.722333] RKNPU fdab0000.npu: Adding to iommu group 0

[ 7.722504] RKNPU fdab0000.npu: RKNPU: rknpu iommu is enabled, using iommu mode

[ 7.722606] RKNPU fdab0000.npu: Looking up rknpu-supply from device tree

[ 7.723196] RKNPU fdab0000.npu: Looking up mem-supply from device tree

[ 7.723701] RKNPU fdab0000.npu: can't request region for resource [mem 0xfdab0000-0xfdabffff]

[ 7.723723] RKNPU fdab0000.npu: can't request region for resource [mem 0xfdac0000-0xfdacffff]

[ 7.723738] RKNPU fdab0000.npu: can't request region for resource [mem 0xfdad0000-0xfdadffff]

[ 7.724345] [drm] Initialized rknpu 0.8.2 20220829 for fdab0000.npu on minor 1

[ 7.726687] RKNPU fdab0000.npu: Looking up rknpu-supply from device tree

[ 7.727233] RKNPU fdab0000.npu: Looking up mem-supply from device tree

[ 7.727888] RKNPU fdab0000.npu: Looking up rknpu-supply from device tree

[ 7.730056] RKNPU fdab0000.npu: bin=0

[ 7.730232] RKNPU fdab0000.npu: leakage=8

[ 7.730257] RKNPU fdab0000.npu: Looking up rknpu-supply from device tree

[ 7.730273] debugfs: Directory 'fdab0000.npu-rknpu' with parent 'vdd_npu_s0' already present!

[ 7.737330] RKNPU fdab0000.npu: pvtm=850

[ 7.743361] RKNPU fdab0000.npu: pvtm-volt-sel=2

[ 7.743962] RKNPU fdab0000.npu: avs=0

[ 7.744080] RKNPU fdab0000.npu: l=10000 h=85000 hyst=5000 l_limit=0 h_limit=800000000 h_table=0

[ 7.758605] RKNPU fdab0000.npu: failed to find power_model node

[ 7.758625] RKNPU fdab0000.npu: RKNPU: failed to initialize power model

[ 7.758632] RKNPU fdab0000.npu: RKNPU: failed to get dynamic-coefficient

[ 7.758904] RKNPU fdab0000.npu: RKNPU: sram region: [0x00000000ff001000, 0x00000000ff0f0000), sram size: 0xef000
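As a quick cross-check of the numbers in that dump, here is a small sketch that counts the bitmap bits (one bit per 4 KB) and compares the result with the size reported in the kernel log. The rows are retyped from the idle dump above.

```python
PAGE_BYTES = 4 * 1024  # "1bit = 4KB" in the dump header

# Rows [000]..[006] have 32 bits each, row [007] has 15, all free (".").
bitmap_rows = ["." * 32] * 7 + ["." * 15]

total_bits = sum(len(row) for row in bitmap_rows)
used_bits = sum(row.count("*") for row in bitmap_rows)

total_bytes = total_bits * PAGE_BYTES
used_bytes = used_bits * PAGE_BYTES

# The kernel log reports the region [0xff001000, 0xff0f0000), size 0xef000.
region_bytes = 0xFF0F0000 - 0xFF001000

print(total_bits, total_bytes, used_bytes, hex(region_bytes))
```

The 239 bits give 978944 bytes, which agrees with both "SRAM total size" and the 0xef000 region size.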

With the previous librknnrt.so runtime the results were something weird; I have not tested with the new one.

I was focused only on converting and running all the examples on the RK3588. There was only one problem, which asked me to upgrade to API 1.6.1 to solve it (a Python thing), but that required an account on some Chinese repository, so I could not download it.

I think that is because the ONNX models there have likely had some love to make them convert well to the RKNPU; some models just don’t convert that well and leave so much outside to run on the CPU that the result is far from good.

In the airockchip repo there is a yolov8 and I would be interested in benchmarks.

I did have my fingers crossed that with kernel 6.7 we could have the Panthor drivers and Vulkan, as it would also be interesting to run on ArmNN and NCNN, which are CPU/GPU based. I always thought it a shame that the Android NNAPI didn’t have a Linux equivalent, rather than each NPU having a different framework from its vendor.

I am open to running any bench you wish, I just don’t have any good ones.

Running yolov8n (NPU not stressed with SRAM - 3. Mixed designation):

Lot of info there, but did it give the total inference speed anywhere, or did I just miss it? Probably me, but I cannot see it.

It was just debug info…

I will add some total speed…

If you can get total inference speed over a few images or a FPS it would be great.

Looking at the debug, there is still much that fails to quantise, so YOLOv8 might not be all that great on a TPU; I noticed Frigate has stuck with (is it?) YOLOv5, probably because it quantises better than the others?

There is also https://github.com/Deci-AI/super-gradients/blob/master/YOLONAS.md, whose boast is that it quantises well.

I think this is a meaningful test, inference with single image (bus.jpg):

Performance without SRAM

========================

color=0x72

rga_api version 1.10.0_[2]

rknn_run

inference run 25.691000 ms

person @ (211 241 282 506) 0.864

bus @ (96 136 549 449) 0.864

person @ (109 235 225 535) 0.860

person @ (477 226 560 522) 0.848

person @ (79 327 116 513) 0.306

Performance with SRAM

======================

color=0x72

rga_api version 1.10.0_[2]

rknn_run

inference run 21.678000 ms

person @ (211 241 282 506) 0.864

bus @ (96 136 549 449) 0.864

person @ (109 235 225 535) 0.860

person @ (477 226 560 522) 0.848

person @ (79 327 116 513) 0.306

SRAM total size: 978944, used: 786432, free: 192512

SRAM bitmap: "*" - used, "." - free (1bit = 4KB)

[000] [********************************]

[001] [********************************]

[002] [********************************]

[003] [********************************]

[004] [********************************]

[005] [********************************]

[006] [................................]

PS: what's the CPU load like, and did you try using all three NPU cores?
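For reference, the two "inference run" timings posted above work out as follows. This is just arithmetic on the single bus.jpg run, so it says nothing about variance between runs or about pre- and post-processing time.

```python
no_sram_ms = 25.691    # "inference run" without SRAM
with_sram_ms = 21.678  # "inference run" with SRAM

saved_ms = no_sram_ms - with_sram_ms
saved_pct = 100 * saved_ms / no_sram_ms

# Inference-only frame rates implied by each timing.
fps_no_sram = 1000 / no_sram_ms
fps_with_sram = 1000 / with_sram_ms

print(f"{saved_ms:.3f} ms saved ({saved_pct:.1f}%)")
print(f"{fps_no_sram:.1f} -> {fps_with_sram:.1f} FPS (inference only)")
```

That is roughly a 16% reduction in inference time from enabling SRAM, on this one image.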

Oh yeah, that will be at 640x640 I guess, which is much better.

The export I did with tflite was 320x320, so that is actually much faster than before.

Speed: 7.2ms preprocess, 31.1ms inference, 6.3ms postprocess per image at shape (1, 3, 320, 320)

Speed: 6.7ms preprocess, 33.4ms inference, 6.7ms postprocess per image at shape (1, 3, 320, 320)

Speed: 6.7ms preprocess, 32.8ms inference, 6.4ms postprocess per image at shape (1, 3, 320, 320)

Speed: 6.9ms preprocess, 34.6ms inference, 7.4ms postprocess per image at shape (1, 3, 320, 320)

That was running through 28 images in 4 concurrent processes across the cores, to test YOLOv8 on the CPU.
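To turn those four Speed lines into a rough aggregate figure, here is a small sketch. It assumes the four processes overlapped fully and that the per-image times are close to wall time; image loading and result saving are not counted, so treat the result as an upper estimate.

```python
import re

speed_lines = [
    "Speed: 7.2ms preprocess, 31.1ms inference, 6.3ms postprocess per image at shape (1, 3, 320, 320)",
    "Speed: 6.7ms preprocess, 33.4ms inference, 6.7ms postprocess per image at shape (1, 3, 320, 320)",
    "Speed: 6.7ms preprocess, 32.8ms inference, 6.4ms postprocess per image at shape (1, 3, 320, 320)",
    "Speed: 6.9ms preprocess, 34.6ms inference, 7.4ms postprocess per image at shape (1, 3, 320, 320)",
]

pattern = re.compile(
    r"([\d.]+)ms preprocess, ([\d.]+)ms inference, ([\d.]+)ms postprocess"
)

per_process_fps = []
for line in speed_lines:
    pre, inf, post = map(float, pattern.search(line).groups())
    # Frames per second for one process, counting all three stages.
    per_process_fps.append(1000 / (pre + inf + post))

total_fps = sum(per_process_fps)
print([round(f, 1) for f in per_process_fps], round(total_fps, 1))
```

Under those assumptions the four processes together come to roughly 86 FPS at 320x320.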