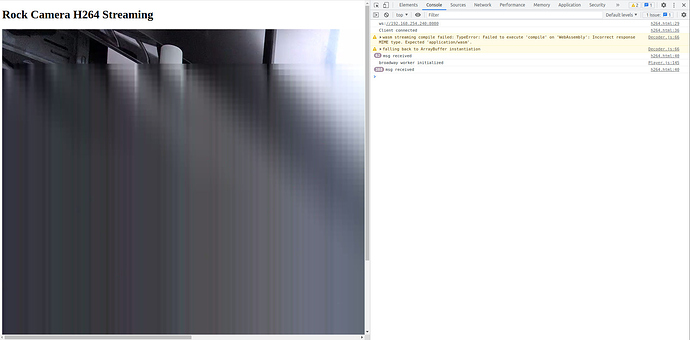

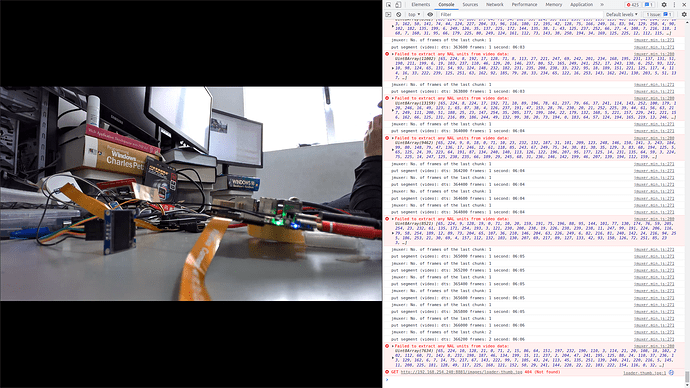

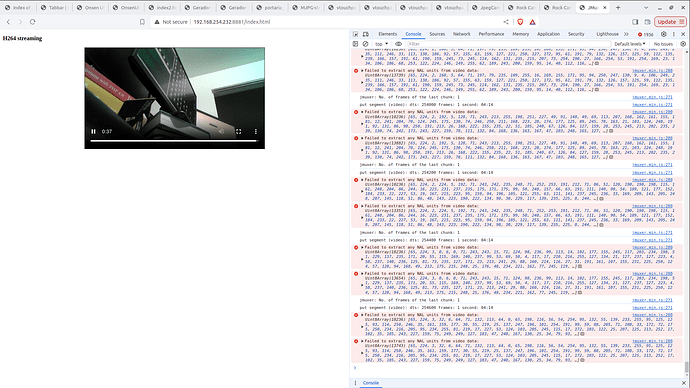

Just for information: until I find out how to disable those advanced encoding features, I tried a new player. It gives better results and good latency, but still shows lots of artifacts.

BTW, these flags no longer seem to be available:

- `-wpredp 0`
- `-tune zerolatency`

I want to try video.js (https://videojs.com/), but I haven't found a way to send the WebSocket message that tells my ws server to start pushing the stream, or to receive that stream in the player.

If anyone has experience with video.js and can point out how to use it with a WebSocket, I would appreciate it.
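The WebSocket handshake itself does not depend on the player: both pages in this post build the URL from the page location and send `*play` once the socket is open. Here is a minimal sketch of that part on its own, assuming the same port (8080) and start command. `buildWsUri` and `openStream` are hypothetical helper names, not part of video.js or WSAvcPlayer.

```javascript
// Hypothetical helpers; port 8080 and the '*play' command come from the pages in this post.

/**
 * Build the WebSocket URL for the page's host:
 * "http:" becomes "ws:" and "https:" becomes "wss:".
 */
function buildWsUri(location, port) {
  return location.protocol.replace(/^http/, 'ws') + '//' + location.hostname + ':' + port;
}

/**
 * Open the socket, ask the server to start streaming, and hand every
 * binary chunk to onChunk as a Uint8Array. Returns the WebSocket so the
 * caller can close it later.
 */
function openStream(uri, onChunk) {
  const ws = new WebSocket(uri);
  ws.binaryType = 'arraybuffer';            // receive ArrayBuffer instead of Blob
  ws.onopen = () => ws.send('*play');       // start command the server already expects
  ws.onmessage = (msg) => {
    if (msg.data instanceof ArrayBuffer) {  // ignore text messages
      onChunk(new Uint8Array(msg.data));
    }
  };
  return ws;
}

// Usage in the browser:
// const ws = openStream(buildWsUri(window.location, 8080), (bytes) => console.log(bytes.length));
```

Keeping the socket code separate like this means the chunk handler is the only piece that changes when swapping WSAvcPlayer for another player.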

Here is the page I use with WSAvcPlayer, which works:

```html
<!DOCTYPE html>
<html>
<head>
  <title>h264-live-player web client demo</title>
</head>
<body>
  <p id='frame_buffer'></p>
  <br />
  <canvas id='cam' style="width:300px; height:200px;"></canvas>

  <!-- provide WSAvcPlayer -->
  <script type="text/javascript" src="WSAvcPlayer.js"></script>
  <script type="text/javascript">
    var canvas = document.getElementById('cam');
    var fb = document.getElementById('frame_buffer');

    // Create h264 player
    var wsavc = new WSAvcPlayer(canvas, "webgl", 1, 35);

    // expose instance for button callbacks
    window.wsavc = wsavc;

    // ws:// for http pages, wss:// for https pages
    var uri = window.location.protocol.replace(/http/, 'ws') + '//' + window.location.hostname + ':8080';
    console.log('uri', uri);

    var result = wsavc.connect(uri);

    wsavc.on('disconnected', () => console.log('WS Disconnected'));

    // ask the server to start pushing the stream once the socket is open
    wsavc.on('connected', () => wsavc.send('*play'));

    wsavc.on('frame_shift', (fbl) => {
      fb.innerText = 'fl: ' + fbl;
    });

    wsavc.on('initalized', (payload) => {
      console.log('Initialized', payload);
    });

    wsavc.on('stream_active', active => console.log('Stream is ', active ? 'active' : 'offline'));

    wsavc.on('custom_event_from_server', event => console.log('got event from server', event));
  </script>
</body>
</html>
```
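Since WSAvcPlayer decodes this stream, it may help to look at what the server actually pushes before wiring up another player. Here is a minimal debugging sketch, assuming each WebSocket message carries H.264 in Annex-B form (NAL units separated by `00 00 01` or `00 00 00 01` start codes). `splitNalUnits`, `nalType` and `describeChunk` are hypothetical helpers.

```javascript
// Hypothetical debugging helpers, assuming Annex-B H.264 (start-code delimited).

const NAL_TYPE_NAMES = { 1: 'non-IDR slice', 5: 'IDR slice', 6: 'SEI', 7: 'SPS', 8: 'PPS', 9: 'AUD' };

/**
 * Split an Annex-B byte stream into NAL unit payloads (start codes removed).
 * Handles both 3-byte (00 00 01) and 4-byte (00 00 00 01) start codes.
 */
function splitNalUnits(bytes) {
  const units = [];
  let start = -1; // index of the first byte of the current NAL unit

  // A NAL unit never ends in 0x00, so trailing zeros belong to the next start code.
  const pushUnit = (end) => {
    while (end > start && bytes[end - 1] === 0) end--;
    if (end > start) units.push(bytes.subarray(start, end)); // view, no copy
  };

  let i = 0;
  while (i + 2 < bytes.length) {
    if (bytes[i] === 0 && bytes[i + 1] === 0 && bytes[i + 2] === 1) {
      if (start >= 0) pushUnit(i);
      i += 3;
      start = i;
    } else {
      i++;
    }
  }
  if (start >= 0 && start < bytes.length) pushUnit(bytes.length);
  return units;
}

/** nal_unit_type is the low 5 bits of the first NAL header byte. */
function nalType(unit) {
  return unit[0] & 0x1f;
}

/** Human-readable list of the NAL unit types found in one chunk. */
function describeChunk(bytes) {
  return splitNalUnits(bytes).map((u) => NAL_TYPE_NAMES[nalType(u)] || 'type ' + nalType(u));
}
```

Logging `describeChunk(new Uint8Array(msg.data))` inside `onmessage` shows whether SPS, PPS and IDR slices arrive, and how NAL units are split across messages.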

Here is the video.js page that still needs to be fixed so it can decode and display the stream:

```html
<!DOCTYPE html>
<html>
<head>
  <meta charset='utf-8'>
  <link href="video-js.min.css" rel="stylesheet">
  <script src="video.min.js"></script>
  <!-- sockjs is loaded but not used below; the native WebSocket API is used instead -->
  <script src="sockjs.min.js"></script>
  <style>
    /* change player background color */
    #myVideo {
      background-color: #1a535c;
    }
  </style>
</head>
<body>
  <video id="myVideo" playsinline class="video-js vjs-default-skin"></video>
  <script>
    /* eslint-disable */
    var options = {
      controls: true,
      width: 320,
      height: 240,
      fluid: false,
      bigPlayButton: false,
      controlBar: {
        volumePanel: false
      }
    };

    // apply some workarounds for opera browser
    // applyVideoWorkaround();

    var player = videojs('myVideo', options, function() {
      // print version information at startup
      videojs.log('Using video.js ' + videojs.VERSION);

      // connect to websocket server (ws:// for http pages, wss:// for https pages)
      var wsUri = window.location.protocol.replace(/http/, 'ws') + '//' + window.location.hostname + ':8080';
      console.log('connecting to websocket: ' + wsUri);

      var ws = new WebSocket(wsUri);
      ws.binaryType = 'arraybuffer';

      ws.onopen = function() {
        console.log('Client connected');
        // ask the server to start pushing the stream (same command as the WSAvcPlayer page)
        ws.send('*play');
      };

      ws.onmessage = function(msg) {
        // msg.data is an ArrayBuffer holding one chunk of the stream
        console.log('msg received');
        // HOWTO: decode and display this chunk.
        // The video.js player has no decode() method, so
        // window.player.decode(new Uint8Array(msg.data)) does not work as-is.
      };

      ws.onclose = function() {
        console.log('Client disconnected');
      };
    });

    // error handling
    // deviceError, startRecord and finishRecord are events of the videojs-record
    // plugin, which this page does not load, so those handlers never fire as written.
    player.on('deviceError', function() {
      console.warn('device error:', player.deviceErrorCode);
    });

    player.on('error', function() {
      // player.error() returns the current MediaError (or null)
      console.error(player.error());
    });

    // user clicked the record button and started recording
    player.on('startRecord', function() {
      console.log('started recording!');
    });

    // user completed recording and stream is available
    player.on('finishRecord', function() {
      // the blob object contains the recorded data that
      // can be downloaded by the user, stored on server etc.
      console.log('finished recording: ', player.recordedData);
    });
  </script>
</body>
</html>
```
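The video.js player plays through the browser's `<video>` element and has no call for pushing raw H.264 frames, so the `decode()` line in the HOWTO comment has no direct equivalent. One possible route, not tested against this server, is to have the server send fragmented MP4 instead of raw H.264 and append it through Media Source Extensions. MSE needs a codec string such as `avc1.42E01E`, which can be derived from the SPS. A `SourceBuffer` also rejects `appendBuffer()` while a previous append is still running, so incoming chunks have to be queued. `codecFromSps`, `AppendQueue` and `attachMediaSource` are hypothetical helpers, and the fragmented-MP4 server output is an assumption.

```javascript
// Hypothetical helpers for a Media Source Extensions route; assumes the
// server is changed to send fragmented MP4 (init segment first), not raw H.264.

/**
 * Build an RFC 6381 codec string ("avc1.PPCCLL") from an SPS NAL unit
 * (start code removed): byte 1 = profile_idc, byte 2 = constraint flags,
 * byte 3 = level_idc.
 */
function codecFromSps(sps) {
  const hex = (b) => b.toString(16).padStart(2, '0').toUpperCase();
  return 'avc1.' + hex(sps[1]) + hex(sps[2]) + hex(sps[3]);
}

/**
 * Serialize appends to a SourceBuffer: appendBuffer() may only be called
 * while sourceBuffer.updating is false, so extra chunks wait for 'updateend'.
 */
class AppendQueue {
  constructor(sourceBuffer) {
    this.sourceBuffer = sourceBuffer;
    this.pending = [];
    sourceBuffer.addEventListener('updateend', () => this.flush());
  }

  push(chunk) {
    this.pending.push(chunk);
    this.flush();
  }

  flush() {
    if (this.sourceBuffer.updating || this.pending.length === 0) return;
    this.sourceBuffer.appendBuffer(this.pending.shift());
  }
}

/** Browser wiring (sketch): attach a MediaSource to a <video> element. */
function attachMediaSource(videoElement, mimeCodec, onReady) {
  const mediaSource = new MediaSource();
  videoElement.src = URL.createObjectURL(mediaSource);
  mediaSource.addEventListener('sourceopen', () => {
    const sourceBuffer = mediaSource.addSourceBuffer(mimeCodec);
    onReady(new AppendQueue(sourceBuffer));
  });
}

// Usage inside the video.js ready callback (hypothetical; `sps` would come from the stream):
// attachMediaSource(player.el().querySelector('video'),
//   'video/mp4; codecs="' + codecFromSps(sps) + '"',
//   (queue) => { ws.onmessage = (msg) => queue.push(new Uint8Array(msg.data)); });
```

The queue only keeps appends in order. It does not handle quota errors or trimming old buffered data, which a long-running live stream would also need.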