Testing LVGL on Rock 5B.

Dependencies:

- SDL2 with hardware acceleration (build and install my version, or try the latest SDL2, which might work better)

- An up-to-date CMake

Build the demo with this recipe:

```sh
mkdir -p lvgl
cd lvgl
git clone --recursive https://github.com/littlevgl/pc_simulator.git
cd pc_simulator
mkdir -p build
cd build
cmake ..
make -j8
```

Before running `cmake` and `make`, change the following settings in `lv_conf.h` and `lv_drv_conf.h` in the `pc_simulator` directory:

```diff
diff --git a/lv_conf.h b/lv_conf.h
index 0b9a6dc..7cf0612 100644
--- a/lv_conf.h
+++ b/lv_conf.h
@@ -49,7 +49,7 @@
 #define LV_MEM_CUSTOM 0
 #if LV_MEM_CUSTOM == 0
 /*Size of the memory available for `lv_mem_alloc()` in bytes (>= 2kB)*/
- #define LV_MEM_SIZE (128 * 1024U) /*[bytes]*/
+ #define LV_MEM_SIZE (896 * 1024U) /*[bytes]*/
 /*Set an address for the memory pool instead of allocating it as a normal array. Can be in external SRAM too.*/
 #define LV_MEM_ADR 0 /*0: unused*/
@@ -151,7 +151,7 @@
 /*Maximum buffer size to allocate for rotation.
 *Only used if software rotation is enabled in the display driver.*/
-#define LV_DISP_ROT_MAX_BUF (32*1024)
+#define LV_DISP_ROT_MAX_BUF (64*1024)
 /*-------------
 * GPU
@@ -184,7 +184,7 @@
 #if LV_USE_GPU_SDL
 #define LV_GPU_SDL_INCLUDE_PATH <SDL2/SDL.h>
 /*Texture cache size, 8MB by default*/
- #define LV_GPU_SDL_LRU_SIZE (1024 * 1024 * 8)
+ #define LV_GPU_SDL_LRU_SIZE (1024 * 1024 * 64)
 /*Custom blend mode for mask drawing, disable if you need to link with older SDL2 lib*/
 #define LV_GPU_SDL_CUSTOM_BLEND_MODE (SDL_VERSION_ATLEAST(2, 0, 6))
 #endif
diff --git a/lv_drivers b/lv_drivers
--- a/lv_drivers
+++ b/lv_drivers
@@ -1 +1 @@
-Subproject commit 1bd4368e71df5cafd68d1ad0a37ce0f92b8f6b88
+Subproject commit 1bd4368e71df5cafd68d1ad0a37ce0f92b8f6b88-dirty
diff --git a/lv_drv_conf.h b/lv_drv_conf.h
index 4f6a4e2..b40db57 100644
--- a/lv_drv_conf.h
+++ b/lv_drv_conf.h
@@ -95,8 +95,8 @@
 #endif
 #if USE_SDL || USE_SDL_GPU
-# define SDL_HOR_RES 480
-# define SDL_VER_RES 320
+# define SDL_HOR_RES 1920
+# define SDL_VER_RES 1080
 /* Scale window by this factor (useful when simulating small screens) */
 # define SDL_ZOOM 1
```

Tested over HDMI at 1920x1080 with debug info. There is also a Wayland driver that may be considerably faster, but since I don't have Wayland I haven't tested it.

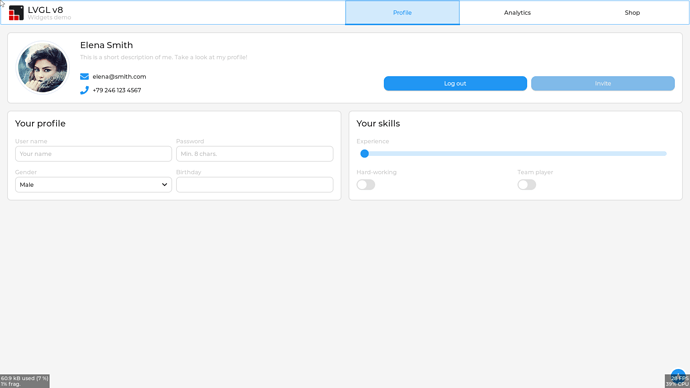

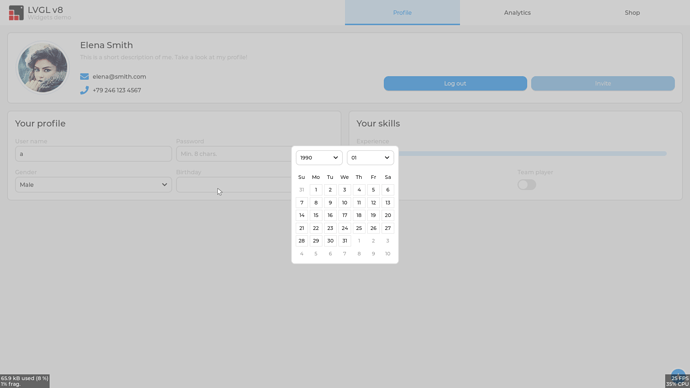

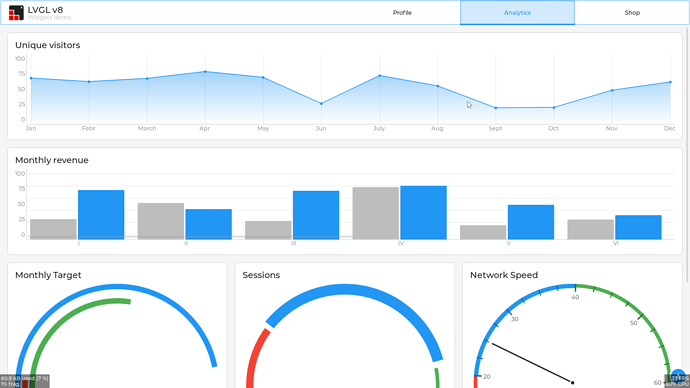

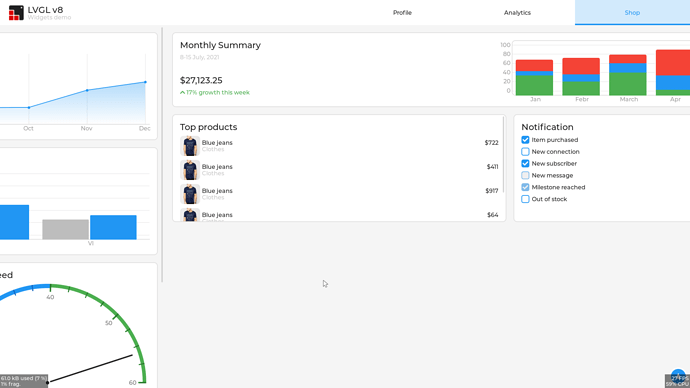

Screenshots: