In my little sbc-bench results collection only Apple M1 Pro, Jetson AGX Orin, NXP LX2xx0A (Honeycomb LX2) and Qualcomm Snapdragon 865 (QRB5165) perform similarly or better.

ROCK 5B Debug Party Invitation

Yes, I’m seeing 113 ns on the LX2. This board has two 64-bit DDR4 channels, which must help a bit.

As demonstrated 3 weeks ago, after Radxa enabled dmc and made dmc_ondemand the default governor, Rock 5B’s idle consumption dropped by 500-600 mW, but performance suffers as well. See the Geekbench comparison above or compare sbc-bench scores: with dmc disabled or with the performance dmc governor, performance is significantly higher than with dmc_ondemand.

Can this be changed? Of course.

There are a few tunables below /sys/devices/platform/dmc/devfreq/dmc/, and upthreshold, for example, defaults to 40. This whole dmc thing (Dynamic Memory Controller) is all about clocking the RAM between 528 MHz and 2112 MHz based on ‘need’. But as we’ve seen, the defaults result in RAM clocks not ramping up fast enough with benchmarks (and real-world tasks as well).
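To see why a lower upthreshold helps, here is a minimal sketch of the decision logic of the mainline Linux simple_ondemand devfreq governor. It assumes that Rockchip’s dmc_ondemand follows the same idea, which is not verified here. The downdifferential value used in the tests, the rounding up to the next available frequency, and the function names are illustrative assumptions. The four frequencies are the ones dmc’s available_frequencies reports on this board.

```c
#include <stddef.h>
#include <stdint.h>

/* DMC frequencies in MHz, as listed in dmc's available_frequencies */
static const uint64_t dmc_mhz[] = {528, 1068, 1560, 2112};
static const size_t dmc_count = sizeof(dmc_mhz) / sizeof(dmc_mhz[0]);

/* Round a target up to the lowest available frequency that satisfies it. */
static uint64_t round_up_to_opp(uint64_t target_mhz)
{
    for (size_t i = 0; i < dmc_count; i++)
        if (dmc_mhz[i] >= target_mhz)
            return dmc_mhz[i];
    return dmc_mhz[dmc_count - 1];
}

/*
 * Sketch of a simple_ondemand-style decision (assumption: dmc_ondemand
 * behaves similarly). busy/total describe the last sampling window;
 * upthreshold and downdifferential are percentages.
 */
uint64_t next_dmc_mhz(uint64_t busy, uint64_t total, uint64_t cur_mhz,
                      unsigned upthreshold, unsigned downdifferential)
{
    if (total == 0 || upthreshold <= downdifferential)
        return dmc_mhz[dmc_count - 1];   /* no usable data: play safe */

    /* Load above upthreshold: jump straight to the highest frequency */
    if (busy * 100 > total * upthreshold)
        return dmc_mhz[dmc_count - 1];

    /* Load inside the hysteresis band: keep the current frequency */
    if (busy * 100 > total * (upthreshold - downdifferential))
        return cur_mhz;

    /* Otherwise scale the current frequency with the measured load */
    uint64_t target = busy * cur_mhz * 100 /
                      (total * (upthreshold - downdifferential / 2));
    return round_up_to_opp(target);
}
```

In this model, with upthreshold at 40 (and downdifferential 5), a window with 30% load at 1068 MHz stays at 1068 MHz. With upthreshold at 20, the same load jumps straight to 2112 MHz. That fits the higher scores and higher idle consumption at lower thresholds in the table below.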

A quick loop running 7z b with upthreshold set to values from 10 to 40, listing the 7-ZIP MIPS for compression and decompression separately. I also measured idle consumption for 3 minutes with each setting and report the averaged value:

| upthreshold | compression MIPS | decompression MIPS | idle consumption |
|---|---|---|---|
| 40 | 12685 | 15492 | 1280 mW |
| 30 | 15032 | 15111 | 1280 mW |
| 20 | 14672 | 16297 | 1280 mW |
| 10 | 15503 | 17252 | 1910 mW |
| (performance) | 15370 | 17786 | 1920 mW |

Looks like smart people (or smart distro maintainers) would add this to some start script or service: echo 20 > /sys/devices/platform/dmc/devfreq/dmc/upthreshold
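Writing the same setting from a compiled helper instead of a shell start script is just a file write. Here is a minimal C sketch. The function names are hypothetical, the sysfs path is the one from the echo command above, and writing to it requires root.

```c
#include <stdio.h>

/*
 * Write an integer to a sysfs attribute, like `echo 20 > <path>` would.
 * Returns 0 on success, -1 on failure (missing path, no permission, ...).
 */
int write_sysfs_int(const char *path, int value)
{
    FILE *f = fopen(path, "w");
    if (f == NULL)
        return -1;
    int written = fprintf(f, "%d\n", value);
    /* fclose() flushes the buffer; sysfs may report the write error here */
    if (fclose(f) != 0 || written < 0)
        return -1;
    return 0;
}

/* Read an integer attribute back; returns 0 on success, -1 on failure. */
int read_sysfs_int(const char *path, int *value)
{
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    int ok = fscanf(f, "%d", value) == 1;
    fclose(f);
    return ok ? 0 : -1;
}
```

Usage on the board would be write_sysfs_int("/sys/devices/platform/dmc/devfreq/dmc/upthreshold", 20), followed by a read-back to confirm that the kernel accepted the value.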

Full 7-ZIP MIPS list for all upthreshold values between 10 and 40:

| upthreshold | compression MIPS | decompression MIPS |
|---|---|---|
| 40 | 12685 | 15492 |
| 39 | 12327 | 15559 |
| 38 | 12349 | 15638 |
| 37 | 12356 | 15525 |
| 36 | 12362 | 15560 |
| 35 | 12183 | 15472 |
| 34 | 13809 | 15216 |
| 33 | 14373 | 15055 |
| 32 | 14978 | 14962 |
| 31 | 14658 | 15307 |
| 30 | 15032 | 15111 |
| 29 | 15127 | 14986 |
| 28 | 14960 | 14953 |
| 27 | 14888 | 15244 |
| 26 | 15190 | 15281 |
| 25 | 14727 | 15320 |
| 24 | 14819 | 15799 |
| 23 | 14710 | 14618 |
| 22 | 14847 | 16332 |
| 21 | 14964 | 16200 |
| 20 | 14672 | 16297 |
| 19 | 15125 | 16244 |
| 18 | 14972 | 16406 |
| 17 | 15373 | 16587 |
| 16 | 14985 | 16962 |
| 15 | 15372 | 17076 |
| 14 | 15403 | 17121 |
| 13 | 15282 | 17144 |
| 12 | 15139 | 17165 |
| 11 | 15185 | 17204 |
| 10 | 15503 | 17252 |

As can be seen, each run needs to be repeated at least 5 times and then averaged to get rid of result variation caused by side effects. But I’ll leave this as an exercise for someone else…
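For whoever takes on that exercise, here is a minimal C sketch that summarizes repeated runs of one setting. It reports the mean and the spread (max minus min) as a percentage of the mean. Using the relative range as the variation measure, and the struct and function names, are choices made for illustration only.

```c
#include <stddef.h>

/* Summary of repeated runs of the same benchmark setting. */
struct run_summary {
    double mean;        /* arithmetic mean of all runs */
    double spread_pct;  /* (max - min) relative to the mean, in percent */
};

/* Summarize n results, e.g. 7-ZIP MIPS from five `7z b` runs. */
struct run_summary summarize_runs(const double *mips, size_t n)
{
    struct run_summary s = {0.0, 0.0};
    if (n == 0)
        return s;

    double sum = 0.0, lo = mips[0], hi = mips[0];
    for (size_t i = 0; i < n; i++) {
        sum += mips[i];
        if (mips[i] < lo)
            lo = mips[i];
        if (mips[i] > hi)
            hi = mips[i];
    }
    s.mean = sum / (double)n;
    if (s.mean != 0.0)
        s.spread_pct = (hi - lo) / s.mean * 100.0;
    return s;
}
```

If the spread between runs of one setting is larger than the difference between neighbouring upthreshold values, those two values cannot be told apart from the data.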

@hipboi: to address the remaining performance problems (I/O performance with the ondemand cpufreq governor and overall performance with the dmc_ondemand memory governor), all that’s needed is adding a few variables with values to a file below /etc/sysfs.d/. See the very bottom here.

@willy @tkaiser On my board the CPU cluster1 pvtm sel is 4 and the cluster2 pvtm sel is 5. I added a patch to print some log messages from the kernel:

```diff
--- a/drivers/opp/of.c
+++ b/drivers/opp/of.c
@@ -459,7 +459,7 @@ static bool _opp_is_supported(struct device *dev, struct opp_table *opp_table,
 	}
 
 	versions = count / levels;
-
+	dev_dbg(dev, "%s: count is %d, levels is %d, versions is %d\n", __func__, count, levels, versions);
 	/* All levels in at least one of the versions should match */
 	for (i = 0; i < versions; i++) {
 		bool supported = true;
@@ -475,6 +475,7 @@ static bool _opp_is_supported(struct device *dev, struct opp_table *opp_table,
 
 			/* Check if the level is supported */
 			if (!(val & opp_table->supported_hw[j])) {
+				dev_warn(dev, "%s: val is %u, opp_table->supported_hw[j] is %u", __func__, val, opp_table->supported_hw[j]);
 				supported = false;
 				break;
 			}
@@ -767,7 +768,7 @@ static struct dev_pm_opp *_opp_add_static_v2(struct opp_table *opp_table,
 
 	/* Check if the OPP supports hardware's hierarchy of versions or not */
 	if (!_opp_is_supported(dev, opp_table, np)) {
-		dev_dbg(dev, "OPP not supported by hardware: %lu\n",
+		dev_warn(dev, "OPP not supported by hardware: %lu\n",
 			new_opp->rate);
 		goto free_opp;
 	}
```

Here is the log I got:

```
[ 3.958433] cpu cpu4: _opp_is_supported: val is 36, opp_table->supported_hw[j] is 16
[ 3.958444] cpu cpu4: _opp_is_supported: val is 72, opp_table->supported_hw[j] is 16
[ 3.958455] cpu cpu4: _opp_is_supported: val is 128, opp_table->supported_hw[j] is 16
[ 3.959181] cpu cpu6: _opp_is_supported: val is 19, opp_table->supported_hw[j] is 32
[ 3.960056] cpu cpu6: _opp_is_supported: val is 72, opp_table->supported_hw[j] is 32
[ 3.960830] cpu cpu6: _opp_is_supported: val is 128, opp_table->supported_hw[j] is 32
```

The val value is defined by the device tree property opp-supported-hw: 19 is 0x13 from opp-2256000000, 36 is 0x24 from opp-2304000000, 72 is 0x48 from opp-2352000000, and 128 is 0x80 from opp-2400000000.

The kernel driver calculates val & opp_table->supported_hw[j] to decide whether the OPP rate is supported. It seems that 16 = 2^4 and 32 = 2^5, where 4 and 5 are the pvtm sel values of the two clusters.
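To make the check explicit, here is a user-space re-implementation of the loop in _opp_is_supported() as it appears in the patch above. It is a sketch, not the kernel code, and the function name is hypothetical. The log only shows the second level, so the value used for supported_hw[0] in the usage below is an arbitrary stand-in.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/*
 * dt_cells: the opp-supported-hw cells of one OPP node (count entries).
 * supported_hw: the per-level values registered by the platform driver,
 * here assumed to be 1 << pvtm_sel for the second level.
 */
bool opp_supported(const uint32_t *dt_cells, size_t count,
                   const uint32_t *supported_hw, size_t levels)
{
    /* The kernel rejects a cell count that is not a multiple of levels */
    if (levels == 0 || count % levels != 0)
        return false;

    size_t versions = count / levels;

    /* All levels in at least one of the versions must match */
    for (size_t i = 0; i < versions; i++) {
        bool supported = true;
        for (size_t j = 0; j < levels; j++) {
            uint32_t val = dt_cells[i * levels + j];
            if (!(val & supported_hw[j])) {
                supported = false;
                break;
            }
        }
        if (supported)
            return true;
    }
    return false;
}
```

With supported_hw = {0x01, 1 << 4} (cluster1 at sel 4), the 2256 MHz cells <0xff 0x13> pass, because 0x13 & 0x10 is non-zero. The 2400 MHz cells <0xff 0x80> fail, which matches the "val is 128 ... is 16" log line.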

So if we change the opp-supported-hw of opp-2400000000, we may be able to enable the 2.4 GHz OPP on boards with low pvtm values. For example, with this patch:

```diff
diff --git a/arch/arm64/boot/dts/rockchip/rk3588s.dtsi b/arch/arm64/boot/dts/rockchip/rk3588s.dtsi
index bd88bc554..0471e960e 100644
--- a/arch/arm64/boot/dts/rockchip/rk3588s.dtsi
+++ b/arch/arm64/boot/dts/rockchip/rk3588s.dtsi
@@ -934,7 +934,7 @@
 			clock-latency-ns = <40000>;
 		};
 		opp-2400000000 {
-			opp-supported-hw = <0xff 0x80>;
+			opp-supported-hw = <0xff 0x10>;
 			opp-hz = /bits/ 64 <2400000000>;
 			opp-microvolt = <1000000 1000000 1000000>,
 					<1000000 1000000 1000000>;
@@ -1143,7 +1143,7 @@
 			clock-latency-ns = <40000>;
 		};
 		opp-2400000000 {
-			opp-supported-hw = <0xff 0x80>;
+			opp-supported-hw = <0xff 0x10>;
 			opp-hz = /bits/ 64 <2400000000>;
 			opp-microvolt = <1000000 1000000 1000000>,
 					<1000000 1000000 1000000>;
```

Nice findings! Three things come to my mind:

I’m not qualified for testing, since my board already allows the 2400 MHz cpufreq OPP by default:

```
[ 6.662048] cpu cpu4: pvtm=1785
[ 6.665982] cpu cpu4: pvtm-volt-sel=7
[ 6.673723] cpu cpu6: pvtm=1782
[ 6.677675] cpu cpu6: pvtm-volt-sel=7
```

BTW: at least based on the sbc-bench results collection, my board is just one out of 21 that gets 2400 MHz; the other 20 are limited to lower cpufreqs [1]. But as we’ve seen, a lower cpufreq OPP can be compensated by the CPU cores actually clocking higher. Your board, for example: cpu4-cpu5 get 2256 MHz as the highest OPP, but the measured clock is ~2300 MHz.

Then I wonder whether these adjustments would be possible via a .dtbo as well.

And of course, whether allowing higher cpufreqs without increasing the supply voltage will cause stability issues.

Back when I was part of the linux-sunxi community, we used a special Linpack version for such testing, since this benchmark is very sensitive to undervoltage. This is how the DVFS OPPs for Allwinner A64 and H5 in the mainline kernel were defined, and I prepared some QA testing for the Pinebook, but to no avail (Pinebook owners didn’t participate). At least the tools are here.

As a quick check, all that should be needed is to install libmpich-dev, clone the repo, chdir into it and run ./xhpl64.

[1] Filtered by SoC serial number, these are results from 21 different RK3588/RK3588S boards:

| Number of boards | Highest A76 cpufreq OPP (cpu4-5 / cpu6-7) |
|---|---|
| 4 | 2256/2256 MHz |
| 5 | 2256/2304 MHz |
| 3 | 2304/2304 MHz |
| 1 | 2304/2352 MHz |
| 7 | 2352/2352 MHz |
| 1 | 2400/2400 MHz |
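Combining the decoded masks, the highest A76 OPP a cluster gets follows directly from its pvtm sel. This is a sketch built on the masks decoded earlier in the thread (0x13, 0x24, 0x48 and 0x80 for 2256 to 2400 MHz). The value 0xff for 2208 MHz, meaning all PVTM levels are allowed, is an assumption, and the struct and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* One A76 OPP together with its second opp-supported-hw cell. */
struct a76_opp {
    unsigned mhz;
    uint32_t pvtm_mask;
};

/* Masks as decoded from the kernel log; 0xff for 2208 MHz is assumed. */
static const struct a76_opp top_opps[] = {
    {2208, 0xff}, {2256, 0x13}, {2304, 0x24}, {2352, 0x48}, {2400, 0x80},
};

/* Highest OPP whose mask contains bit pvtm_sel; 0 if none matches. */
unsigned highest_opp_mhz(unsigned pvtm_sel)
{
    unsigned best = 0;
    if (pvtm_sel > 31)
        return 0;
    uint32_t bit = UINT32_C(1) << pvtm_sel;
    for (size_t i = 0; i < sizeof(top_opps) / sizeof(top_opps[0]); i++)
        if ((top_opps[i].pvtm_mask & bit) && top_opps[i].mhz > best)
            best = top_opps[i].mhz;
    return best;
}
```

Under these assumptions, sel 4 gives 2256 MHz and sel 5 gives 2304 MHz (amazingfate’s two clusters). Sel 6 gives 2352 MHz, and sel 7 gives 2400 MHz, as on the board reporting pvtm-volt-sel=7. These are exactly the values that appear in the table.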

My board at home just failed to reboot, so I will test further once I get home. After verifying this patch I will provide a dts overlay.

In an early version of the kernel code, the only clock OPP after 2.208 GHz is 2.4 GHz: https://github.com/Fruit-Pi/kernel/blob/develop-5.10/arch/arm64/boot/dts/rockchip/rk3588s.dtsi#L850. So there may be some reason that caused Rockchip to add more limits to the clock. It would be good if @jack or @hipboi could ask Rockchip why the CPU clocks are set up the way they are now.

It’s interesting to compare this with the most recent rk3588s.dtsi and its DVFS definitions. Between 1416 and 2016 MHz the supply voltage is 25 mV higher these days, and 2208 MHz gets an additional 12.5 mV. But everything above 2208 MHz is fed with 1000 mV, just like the 2400 MHz OPP back then (again +12.5 mV above the 2208 MHz OPP).

Usually the highest DVFS OPPs get a massive boost in supply voltage compared to the lower-clocked ones. Strange!

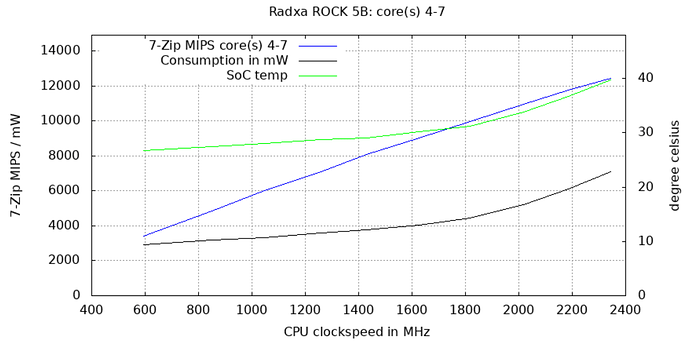

And it’s the same with RK3588 as well. Walking through all DVFS OPPs between 408 and 2400 MHz with all four A76 cores shows the usual picture: 7-ZIP scores scale linearly, while consumption and temperature rise increasingly steeply as the clockspeed goes up:

| OPP / measured (MHz) | 7-ZIP MIPS | Temperature | Consumption |
|---|---|---|---|
| 408 / 400 | 2320 | 25.9°C | 2673 mW |
| 600 / 600 | 3423 | 26.8°C | 2913 mW |
| 816 / 860 | 4843 | 27.5°C | 3200 mW |
| 1008 / 1050 | 5995 | 28.1°C | 3336 mW |
| 1200 / 1260 | 7083 | 28.7°C | 3586 mW |
| 1416 / 1430 | 8062 | 29.0°C | 3780 mW |
| 1608 / 1630 | 9030 | 30.2°C | 4036 mW |
| 1800 / 1820 | 9960 | 31.2°C | 4450 mW |
| 2016 / 2020 | 10943 | 33.9°C | 5183 mW |
| 2208 / 2190 | 11733 | 36.7°C | 6106 mW |
| 2400 / 2350 | 12412 | 39.8°C | 7096 mW |
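Two simple ratios make the table easier to read: 7-ZIP MIPS per measured MHz, and the extra board consumption per extra MIPS between two rows. This is a small C sketch with hypothetical names. Note that the consumption values are whole-board figures that include the idle baseline.

```c
/* One row of the OPP walk: measured MHz, 7-ZIP MIPS, board consumption in mW. */
struct opp_sample {
    double mhz;
    double mips;
    double mw;
};

/* 7-ZIP MIPS per measured MHz: roughly constant if scores scale linearly. */
double mips_per_mhz(struct opp_sample s)
{
    return s.mips / s.mhz;
}

/* Extra board consumption (mW) per extra 7-ZIP MIPS between two OPPs. */
double marginal_mw_per_mips(struct opp_sample lo, struct opp_sample hi)
{
    return (hi.mw - lo.mw) / (hi.mips - lo.mips);
}
```

From the table, going from 408 to 600 MHz costs 240 mW for 1103 extra MIPS (about 0.22 mW per MIPS). The last step from 2208 to 2400 MHz costs 990 mW for 679 extra MIPS (about 1.46 mW per MIPS). MIPS per measured MHz only drops from 5.8 to about 5.3 over the same range.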

I tested the new dts: the 2.4 GHz OPP is unlocked, but the real frequency is still lower: http://ix.io/4bBY. Maybe a higher voltage is needed.

Here is the dts overlay:

```dts
/dts-v1/;
/plugin/;

/ {
	fragment@0 {
		target = <&cluster1_opp_table>;
		__overlay__ {
			opp-2400000000 {
				opp-supported-hw = <0xff 0xf0>;
			};
		};
	};

	fragment@1 {
		target = <&cluster2_opp_table>;
		__overlay__ {
			opp-2400000000 {
				opp-supported-hw = <0xff 0xf0>;
			};
		};
	};
};
```
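Which PVTM levels a given mask admits can be listed with a few lines of C. This sketch assumes, as decoded above, that sel N is matched by bit N of the second opp-supported-hw cell. The function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Store the pvtm sel values whose bit is set in mask into out (at most
 * max_out entries) and return the total number of set bits.
 */
size_t allowed_pvtm_sels(uint32_t mask, unsigned *out, size_t max_out)
{
    size_t n = 0;
    for (unsigned sel = 0; sel < 32; sel++) {
        if (mask & (UINT32_C(1) << sel)) {
            if (n < max_out)
                out[n] = sel;
            n++;
        }
    }
    return n;
}
```

0xf0 covers sel 4 to 7, so this overlay reaches both clusters of this board (sel 4 and 5). The value 0x10 from the earlier dtsi patch only matches sel 4, which leaves the sel 5 cluster unchanged.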

Yes, let’s compare with your sbc-bench results from July (http://ix.io/443u), first looking at cpu4-cpu5:

Now:

```
Cpufreq OPP: 2400 Measured: 2280 (2280.722/2280.621/2280.520) (-5.0%)
Cpufreq OPP: 2256 Measured: 2280 (2280.470/2280.419/2280.268) (+1.1%)
Cpufreq OPP: 2208 Measured: 2211 (2211.061/2211.061/2211.014)
Cpufreq OPP: 2016 Measured: 2022 (2022.575/2022.525/2022.426)
```

Back then:

```
Cpufreq OPP: 2256 Measured: 2304 (2305.128/2304.785/2304.654) (+2.1%)
Cpufreq OPP: 2208 Measured: 2233 (2233.722/2233.648/2233.475) (+1.1%)
Cpufreq OPP: 2016 Measured: 2040 (2040.712/2040.506/2040.424) (+1.2%)
```

Back then, even with a lower cpufreq OPP, this cluster was able to clock ~20 MHz higher. With cluster2 it looks similar:

Now:

```
Cpufreq OPP: 2400 Measured: 2298 (2298.176/2298.176/2298.074) (-4.2%)
Cpufreq OPP: 2304 Measured: 2297 (2297.869/2297.818/2297.716)
Cpufreq OPP: 2208 Measured: 2203 (2203.609/2203.327/2203.280)
Cpufreq OPP: 2016 Measured: 2010 (2010.177/2010.128/2010.079)
```

Back then:

```
Cpufreq OPP: 2304 Measured: 2322 (2323.016/2322.989/2322.775)
Cpufreq OPP: 2208 Measured: 2225 (2225.739/2225.739/2225.714)
Cpufreq OPP: 2016 Measured: 2027 (2027.852/2027.730/2027.628)
```
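The percentages in parentheses are the relative deviation of the measured clock from the requested OPP. sbc-bench’s exact reporting rule is not known here. The 1% threshold below is a hypothetical rule chosen because it fits every line shown: all printed values are at least 1.06%, and all omitted ones are at most 0.78%. The function names are illustrative.

```c
#include <stdbool.h>
#include <stdio.h>

/* Deviation of the measured clock from the cpufreq OPP, in percent. */
double opp_deviation_pct(double opp_mhz, double measured_mhz)
{
    return (measured_mhz - opp_mhz) / opp_mhz * 100.0;
}

/* Hypothetical reporting rule: only flag deviations of at least 1 %. */
bool deviation_worth_reporting(double pct)
{
    return pct >= 1.0 || pct <= -1.0;
}

/* Format like "(+1.1%)" into buf; returns snprintf's result. */
int format_deviation(char *buf, size_t len, double pct)
{
    return snprintf(buf, len, "(%+.1f%%)", pct);
}
```

For example, OPP 2400 measured at 2280 MHz gives -5.0%, and OPP 2256 measured at 2280 MHz gives +1.06%, printed as (+1.1%).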

So just enabling a higher OPP doesn’t solve the problem (since there’s a nasty MCU inside RK3588 rejecting our changes), and the higher clockspeeds back then are most probably related to temperature. In July the benchmark started at a board temperature of 31.5°C; this time it was 46.2°C. (My initial assumption that PVTM wouldn’t be related to temperature was wrong: it has a minor influence on the real clockspeeds.)

So testing with higher supply voltages would indeed be worth a try; maybe we can convince the MCU to allow higher clockspeeds then.

BTW: I’m now working on OPP reporting in sbc-bench’s MODE=extensive. With default settings on Rock 5B it looks like this:

```
cluster0-opp-table:
408 MHz 675.0 mV
600 MHz 675.0 mV
816 MHz 675.0 mV
1008 MHz 675.0 mV
1200 MHz 712.5 mV
1416 MHz 762.5 mV
1608 MHz 850.0 mV
1800 MHz 950.0 mV

cluster1-opp-table:
408 MHz 675.0 mV
600 MHz 675.0 mV
816 MHz 675.0 mV
1008 MHz 675.0 mV
1200 MHz 675.0 mV
1416 MHz 725.0 mV
1608 MHz 762.5 mV
1800 MHz 850.0 mV
2016 MHz 925.0 mV
2208 MHz 987.5 mV
2256 MHz 1000.0 mV
2304 MHz 1000.0 mV
2352 MHz 1000.0 mV
2400 MHz 1000.0 mV

cluster2-opp-table:
408 MHz 675.0 mV
600 MHz 675.0 mV
816 MHz 675.0 mV
1008 MHz 675.0 mV
1200 MHz 675.0 mV
1416 MHz 725.0 mV
1608 MHz 762.5 mV
1800 MHz 850.0 mV
2016 MHz 925.0 mV
2208 MHz 987.5 mV
2256 MHz 1000.0 mV
2304 MHz 1000.0 mV
2352 MHz 1000.0 mV
2400 MHz 1000.0 mV

dmc-opp-table:
528 MHz 675.0 mV
1068 MHz 725.0 mV
1560 MHz 800.0 mV
2750 MHz 875.0 mV

gpu-opp-table:
300 MHz 675.0 mV
400 MHz 675.0 mV
500 MHz 675.0 mV
600 MHz 675.0 mV
700 MHz 700.0 mV
800 MHz 750.0 mV
900 MHz 800.0 mV
1000 MHz 850.0 mV

npu-opp-table:
300 MHz 700.0 mV
400 MHz 700.0 mV
500 MHz 700.0 mV
600 MHz 700.0 mV
700 MHz 700.0 mV
800 MHz 750.0 mV
900 MHz 800.0 mV
1000 MHz 850.0 mV
```

(dmc wrong)

Well, this is the memory OPP table as defined, which conflicts with /sys/devices/platform/dmc/devfreq/dmc/available_frequencies: there’s no 2750 MHz entry, and the list ends at 2112 MHz: 528000000 1068000000 1560000000 2112000000
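To spot such mismatches programmatically, the contents of available_frequencies (frequencies in Hz, separated by spaces, ending with a newline) can be converted to MHz. This is a minimal C sketch; the function name is hypothetical.

```c
#include <ctype.h>
#include <errno.h>
#include <stdlib.h>

/*
 * Parse a whitespace-separated list of frequencies in Hz into MHz.
 * Returns the number of entries stored, or -1 on a malformed token
 * or when there are more than max entries.
 */
int parse_freqs_mhz(const char *s, unsigned long *mhz, int max)
{
    int n = 0;
    for (;;) {
        while (isspace((unsigned char)*s))
            s++;                  /* skip separators and the trailing newline */
        if (*s == '\0')
            return n;             /* end of list */
        if (n == max)
            return -1;            /* more entries than the caller can hold */
        char *end;
        errno = 0;
        unsigned long long hz = strtoull(s, &end, 10);
        if (end == s || errno == ERANGE)
            return -1;            /* not a number */
        mhz[n++] = (unsigned long)(hz / 1000000ULL);
        s = end;
    }
}
```

Parsing the string above yields 528, 1068, 1560 and 2112. Comparing that list with the dmc-opp-table entries shows that 2750 MHz is simply not available.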

@willy: is there a way to determine DRAM clockspeeds from ramlat measurements? With every cpufreq governor switched to performance, I then walked through dmc’s available_frequencies:

```
root@rock-5b:/sys/devices/platform/dmc/devfreq/dmc# for i in $(<available_frequencies) ; do echo -e "\n$i\n"; echo $i >min_freq ; echo $i >max_freq ; taskset -c 5 /usr/local/src/ramspeed/ramlat -s -n 200 ; done

528000000

size: 1x32 2x32 1x64 2x64 1xPTR 2xPTR 4xPTR 8xPTR
4k: 1.706 1.706 1.706 1.706 1.706 1.706 1.706 3.234
8k: 1.706 1.706 1.706 1.706 1.706 1.706 1.706 3.325
16k: 1.706 1.706 1.706 1.706 1.706 1.706 1.706 3.325
32k: 1.706 1.706 1.706 1.706 1.706 1.706 1.706 3.329
64k: 1.707 1.707 1.707 1.707 1.707 1.707 1.707 3.328
128k: 5.149 5.146 5.144 5.146 5.144 5.853 7.185 12.92
256k: 5.982 6.163 5.970 6.165 5.965 5.991 7.497 12.90
512k: 8.734 8.199 8.603 8.201 8.626 8.408 9.332 14.92
1024k: 20.14 18.54 18.49 18.54 18.53 18.88 20.95 30.60
2048k: 25.87 20.83 21.94 20.82 21.96 22.34 25.41 38.82
4096k: 99.21 72.74 84.82 72.67 86.27 72.74 78.43 107.8
8192k: 178.6 158.7 173.2 158.9 172.7 153.4 159.3 182.4
16384k: 217.0 203.5 211.8 202.3 211.5 194.6 208.8 217.0

1068000000

size: 1x32 2x32 1x64 2x64 1xPTR 2xPTR 4xPTR 8xPTR
4k: 1.707 1.707 1.707 1.707 1.707 1.707 1.707 3.233
8k: 1.707 1.707 1.707 1.707 1.707 1.707 1.707 3.327
16k: 1.707 1.707 1.707 1.707 1.707 1.707 1.707 3.327
32k: 1.707 1.707 1.707 1.707 1.707 1.707 1.707 3.330
64k: 1.708 1.707 1.708 1.707 1.708 1.708 1.708 3.330
128k: 5.123 5.121 5.120 5.121 5.120 5.815 7.166 12.92
256k: 5.976 6.163 5.972 6.165 5.975 5.994 7.500 12.91
512k: 8.832 8.530 8.778 8.536 8.514 8.859 9.705 15.37
1024k: 18.62 18.56 18.51 18.56 18.68 18.90 20.98 30.61
2048k: 18.92 19.39 18.64 19.39 18.69 19.76 21.65 30.88
4096k: 71.60 52.61 62.12 52.40 63.17 54.66 58.07 77.36
8192k: 131.4 113.6 127.8 112.5 127.5 110.4 113.5 128.4
16384k: 160.9 147.5 157.4 147.3 157.4 142.9 147.7 152.7

1560000000

size: 1x32 2x32 1x64 2x64 1xPTR 2xPTR 4xPTR 8xPTR
4k: 1.707 1.708 1.707 1.707 1.707 1.707 1.708 3.237
8k: 1.707 1.708 1.707 1.708 1.707 1.707 1.708 3.328
16k: 1.707 1.708 1.707 1.708 1.707 1.708 1.708 3.329
32k: 1.708 1.708 1.707 1.708 1.707 1.708 1.708 3.332
64k: 1.709 1.708 1.708 1.708 1.708 1.708 1.709 3.331
128k: 5.152 5.150 5.149 5.150 5.149 5.824 7.197 12.93
256k: 6.011 5.996 6.004 5.996 6.004 6.008 7.518 12.92
512k: 8.634 8.240 8.814 8.265 8.105 8.374 9.551 15.25
1024k: 18.76 18.69 18.38 18.68 18.11 19.03 21.14 30.59
2048k: 18.73 19.26 18.50 19.26 18.55 19.55 21.59 30.85
4096k: 60.18 46.27 54.10 46.18 54.60 47.84 50.54 65.17
8192k: 108.3 92.29 102.9 91.68 103.6 91.60 94.70 105.9
16384k: 135.8 124.9 133.7 125.0 134.6 122.7 124.7 126.9

2112000000

size: 1x32 2x32 1x64 2x64 1xPTR 2xPTR 4xPTR 8xPTR
4k: 1.709 1.709 1.708 1.709 1.708 1.708 1.709 3.240
8k: 1.708 1.709 1.708 1.709 1.708 1.709 1.709 3.330
16k: 1.708 1.709 1.708 1.709 1.708 1.708 1.709 3.330
32k: 1.708 1.709 1.708 1.709 1.708 1.709 1.709 3.334
64k: 1.710 1.709 1.710 1.709 1.710 1.710 1.710 3.334
128k: 5.128 5.127 5.126 5.127 5.126 5.744 7.175 12.94
256k: 5.988 6.170 5.981 6.170 5.982 6.000 7.509 12.92
512k: 8.030 7.508 8.089 7.557 7.882 7.799 8.715 14.56
1024k: 18.44 18.58 18.36 18.57 18.96 18.89 20.95 30.58
2048k: 18.96 19.30 18.75 19.30 18.75 19.62 21.66 31.06
4096k: 54.45 42.41 49.72 42.30 49.29 43.93 46.23 59.35
8192k: 97.40 83.49 94.01 83.06 93.91 83.65 85.51 94.37
16384k: 123.9 115.1 122.0 115.1 122.1 112.8 113.8 115.5
```

Yep, so it corresponds to what I observed and decoded, indicating that using 0xff would allow all PVTM values for the associated OPP.

PVTM indicates the silicon quality, but that can be compensated for with higher voltages. The next step is to create OPPs for all (frequency, voltage) combinations and select them based on PVTM values. That’s what I intend to do on my board to enable 2.4 GHz (and possibly try higher clocks).

Not really. I tried a lot, but the measurements are influenced by the DRAM bus width, the memory controller, the L3 cache’s speed, etc. For example, I hoped that measuring the time it takes to access one line and two adjacent lines would reflect the DRAM frequency, but it does not. I even tried playing with prefetch instructions just in case, but that didn’t give me any interesting result. In the end memchr() remains one of the most effective tests, because it’s supposed to be almost insensitive to RAM latency but highly dependent on bandwidth (frequency × bus width).
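The rule of thumb "bandwidth ≈ frequency × bus width" can be written as a small helper. It is not known here whether the DMC frequency is the memory clock or already the data rate, nor what the effective bus width of Rock 5B’s memory is. Both are parameters the caller must supply, and the values in the usage below are illustrative only.

```c
#include <stdint.h>

/*
 * Theoretical peak DRAM bandwidth in MB/s (10^6 bytes per second):
 * clock (MHz) x transfers per clock (2 for DDR) x bus width in bytes.
 */
uint64_t peak_bandwidth_mbs(uint64_t clock_mhz, unsigned transfers_per_clock,
                            unsigned bus_width_bits)
{
    return clock_mhz * transfers_per_clock * (bus_width_bits / 8u);
}
```

Whatever the real bus width is, it cancels out when comparing DMC steps. The model predicts a 4:1 ratio in peak bandwidth between 528 and 2112 MHz, a difference a bandwidth-bound test such as memchr() should expose more clearly than a latency test.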

I don’t believe that for a single second. That would be a design error on their side, because measuring that voltage etc. is exactly what the PVTM is for, i.e. gauging what the silicon is capable of. No, if there’s an MCU, I guess it’s instead just dampening the curve at the highest frequencies to defeat our measurements without being caught too easily. I would suspect that past a certain multiplier they’re just cutting excess multipliers in half, so that past the highest trusted point (2208 MHz) every 48 MHz only adds 24. But that’s pure guesswork, of course, even though it matches what you’ve measured perfectly.
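The guess above is easy to state as a formula, which makes it testable against further measurements. This is a sketch of that unverified hypothesis only. It does not describe any known MCU behaviour, and the function name is hypothetical.

```c
/*
 * Unverified hypothesis from the post above: past the highest trusted
 * OPP (2208 MHz), every requested 48 MHz only yields 24 MHz of real clock.
 */
unsigned guessed_real_mhz(unsigned requested_mhz)
{
    const unsigned trusted_mhz = 2208;
    if (requested_mhz <= trusted_mhz)
        return requested_mhz;
    return trusted_mhz + (requested_mhz - trusted_mhz) / 2;
}
```

For the 2400 MHz OPP this model predicts 2304 MHz. The measurements above were 2280 MHz (cpu4-cpu5) and 2298 MHz (cpu6-cpu7).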

So it would be good if @jack or @hipboi could ask Rockchip why the CPU clocks are set up the way they are now.

@Stephen Please follow up on this issue.

@tkaiser @willy I increased the CPU voltage to 1.05 V and now the 4 big cores can reach 2.4 GHz: http://sprunge.us/k7rnJj

Here is the device tree overlay: https://gist.github.com/amazingfate/883baffc614f49c8089dafd4152e99f3

Great! I’m going to apply this on my board later and try to measure the consumption/temperature difference it makes.

Also, I think adding some more mV only to the highest (2400 MHz) OPP isn’t enough, since the intermediate steps also need a little more voltage. Contrary to my initial belief, PVTM is sensitive to temperature. (I need to test a ‘cold boot’ in a really hot state, with Rock 5B lying on a radiator or something like that, to see whether I then end up with 2352 MHz or even less as the highest OPP.) And without a good heatsink or a fan, the board might throttle under heavy load, then decrease clockspeeds and probably become unstable due to undervoltage at a frequency somewhere between 2208 and 2400 MHz.

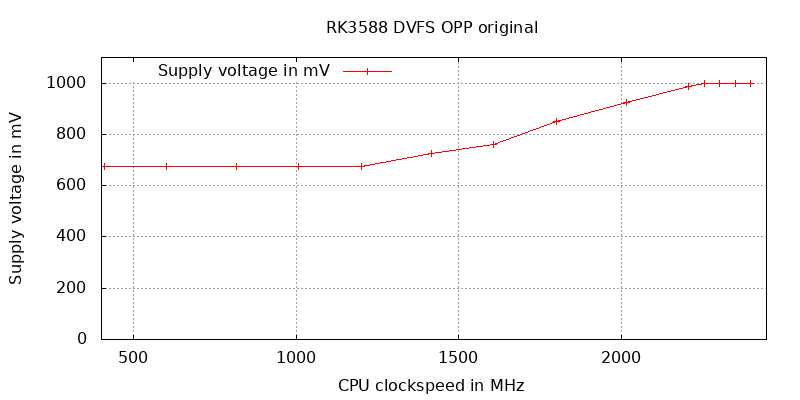

To elaborate on that: this is what Rockchip’s defaults look like:

```
tk@rock-5b:~$ source sbc-bench.sh ; ParseOPPTables | grep -A15 cluster2-opp-table
cluster2-opp-table:
408 MHz 675.0 mV
600 MHz 675.0 mV
816 MHz 675.0 mV
1008 MHz 675.0 mV
1200 MHz 675.0 mV
1416 MHz 725.0 mV
1608 MHz 762.5 mV
1800 MHz 850.0 mV
2016 MHz 925.0 mV
2208 MHz 987.5 mV
2256 MHz 1000.0 mV
2304 MHz 1000.0 mV
2352 MHz 1000.0 mV
2400 MHz 1000.0 mV
```

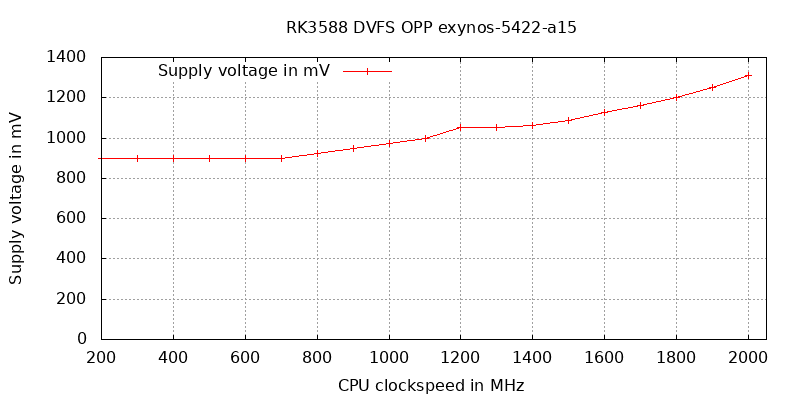

Or, better, as a graph:

We clearly see that the curve goes flat above 2208 MHz, since PVTM does the job of limiting weak silicon to its respective maximum clockspeed while staying at 1000 mV supply voltage.

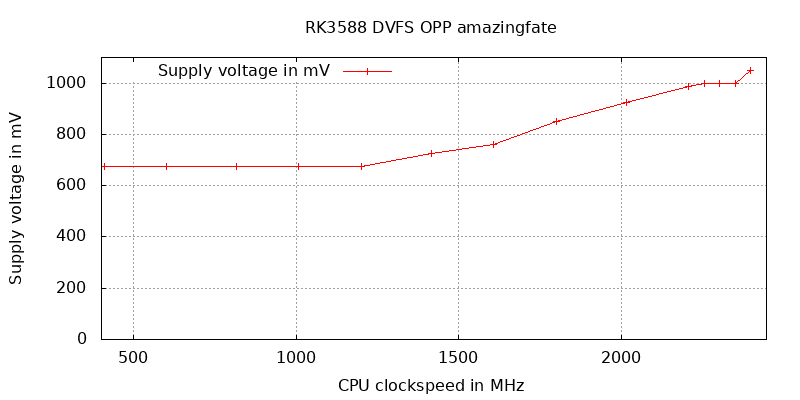

@amazingfate’s adaptation only addresses the highest DVFS OPP, and then it looks like this:

This obviously ignores the intermediate OPPs between 2208 and 2352 MHz, which are currently ‘addressed’ by PVTM: it simply denies higher clockspeeds to weak silicon, which ends up with the same voltage but lower clocks to avoid undervoltage.
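One way to give the intermediate OPPs their own voltages is a linear ramp above 2208 MHz. The proposal posted next in the thread follows exactly this pattern: 1000 mV at 2208 MHz plus 12.5 mV per 48 MHz step, reaching 1050 mV at 2400 MHz. This is a sketch that reproduces it in microvolts, the unit opp-microvolt uses. The function name, and returning 0 for "below the ramp, keep the existing entry", are illustrative choices.

```c
/*
 * Linear voltage ramp for OPPs from 2208 MHz upwards, in microvolts.
 * Frequencies between 48 MHz steps are rounded down to the previous step.
 * Returns 0 below 2208 MHz, meaning "keep the existing table entry".
 */
unsigned ramp_microvolt(unsigned mhz)
{
    const unsigned base_mhz = 2208, base_uv = 1000000;
    const unsigned step_mhz = 48, step_uv = 12500;

    if (mhz < base_mhz)
        return 0;
    return base_uv + (mhz - base_mhz) / step_mhz * step_uv;
}
```

This yields 1012500 µV for 2256 MHz, 1025000 µV for 2304 MHz, 1037500 µV for 2352 MHz and 1050000 µV for 2400 MHz. The last value matches the 1.05 V that made 2.4 GHz reachable above.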

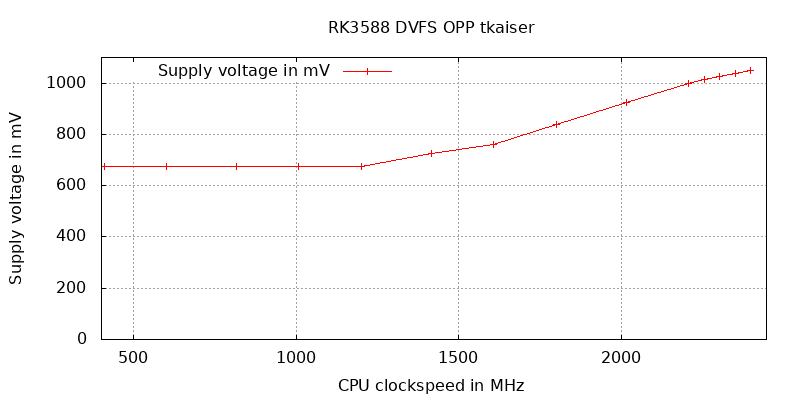

But if we want to overcome PVTM here and use the intermediate clockspeeds, we need to address the clockspeed/voltage ratio. So I would propose a slight increase of every voltage from 2208 MHz upwards (and lowering the 1800 MHz OPP by 10 mV, since I doubt the kink there is intentional):

Or in numbers:

```
tk@rock-5b:~$ ParseOPPTables | grep -A15 cluster2-opp-table
cluster2-opp-table:
408 MHz 675.0 mV
600 MHz 675.0 mV
816 MHz 675.0 mV
1008 MHz 675.0 mV
1200 MHz 675.0 mV
1416 MHz 725.0 mV
1608 MHz 762.5 mV
1800 MHz 840.0 mV
2016 MHz 925.0 mV
2208 MHz 1000.0 mV
2256 MHz 1012.5 mV
2304 MHz 1025.0 mV
2352 MHz 1037.5 mV
2400 MHz 1050.0 mV
```

As a quick comparison, this is what such DVFS curves usually look like:

The A15 cores in Exynos 5422 (ODROID-XU4) with Hardkernel’s 5.4 kernel:

As usual, the highest DVFS OPPs are a bit steeper, and for whatever reason two OPPs have the same voltage (1200 and 1300 MHz). Whether this is an accident or there’s a specific weakness at 1.3 GHz, I don’t know…

```
opp_table0:
200 MHz 900.0 mV
300 MHz 900.0 mV
400 MHz 900.0 mV
500 MHz 900.0 mV
600 MHz 900.0 mV
700 MHz 900.0 mV
800 MHz 925.0 mV
900 MHz 950.0 mV
1000 MHz 975.0 mV
1100 MHz 1000.0 mV
1200 MHz 1050.0 mV
1300 MHz 1050.0 mV
1400 MHz 1062.5 mV
1500 MHz 1087.5 mV
1600 MHz 1125.0 mV
1700 MHz 1162.5 mV
1800 MHz 1200.0 mV
1900 MHz 1250.0 mV
2000 MHz 1312.5 mV
```
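Flat segments like the 1200/1300 MHz pair on the Exynos, or the 1000 mV plateau in Rockchip’s default RK3588 tables, can be counted mechanically. This is a small C sketch with hypothetical names. The floor_uv parameter skips the lowest OPPs that all sit at the minimum voltage (900 mV on the Exynos, 675 mV on RK3588), so those runs are not counted.

```c
#include <stddef.h>

/* One DVFS OPP: frequency in MHz, supply voltage in microvolts. */
struct dvfs_opp {
    unsigned mhz;
    unsigned uv;
};

/*
 * Count adjacent OPP pairs that share the same voltage above floor_uv,
 * i.e. flat steps in the DVFS curve.
 */
size_t count_flat_steps(const struct dvfs_opp *t, size_t n, unsigned floor_uv)
{
    size_t flat = 0;
    for (size_t i = 1; i < n; i++)
        if (t[i].uv == t[i - 1].uv && t[i].uv > floor_uv)
            flat++;
    return flat;
}
```

With the full Exynos table above and a 900 mV floor, only the 1200/1300 MHz pair is counted. Rockchip’s default cluster2 table with a 675 mV floor yields three flat steps, from 2256 to 2400 MHz.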