I changed the CPU supply to 1.5V, and now I can use voltages higher than 1.05V. Here is the sbc-bench result with 1.15V set for the 2.4GHz OPP: http://ix.io/4bL8

I failed to change the CPU supply using a device tree overlay, so I just edited the device tree in the kernel source code and compiled a new dtb package.


@tkaiser I think clocks over 2.4GHz are locked by the SCMI firmware, as they were on rk356x. If you look at the dmesg output you can see a lot of errors saying “set clk failed”. So for the moment the only way to overclock is to raise the microvolt value of the 2.4GHz OPP. If Rockchip releases the ATF source code we may find another way to unlock clocks above 2.4GHz.

Well, frying the SoC at 1500mV (150% of the designed 1000mV supply voltage at ‘full CPU speed’) results in this:

Cpufreq OPP: 2400 Measured: 2530 (2530.882/2530.882/2530.572) (+5.4%)

Cpufreq OPP: 2400 Measured: 2547 (2548.049/2547.923/2547.860) (+6.1%)

That’s close to nothing
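To put the two numbers side by side, here is a minimal sketch that computes the clock gain over the 2400 MHz OPP from the rounded "Measured" values above and compares it with the relative voltage increase. The variable and function names are mine, for illustration only.

```python
# Rounded "Measured" clock speeds (MHz) from the two sbc-bench lines above.
OPP_MHZ = 2400
measured_mhz = [2530, 2547]

def percent_over(value: float, nominal: float) -> float:
    """Return how far value lies above nominal, in percent."""
    return (value / nominal - 1.0) * 100.0

# Clock gain per run, and the supply voltage increase (1500 mV vs. 1000 mV).
clock_gains = [percent_over(m, OPP_MHZ) for m in measured_mhz]
voltage_gain = percent_over(1500, 1000)

for m, g in zip(measured_mhz, clock_gains):
    print(f"{m} MHz: +{g:.2f}% clock for +{voltage_gain:.0f}% supply voltage")
```

The printed percentages may differ from sbc-bench's own figures in the last digit, since sbc-bench works from the unrounded measurements.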

With only 50mV more instead of 500mV I was able to measure this:

That was just a 5% voltage increase and not 50%! At 1500mV consumption must be really ruined while performance only slightly benefits. Your 7-zip MIPS score today is lower than the one you had months ago with original DVFS settings even if now your CPU clockspeeds are 9% higher:

| cpufreq | dmc settings | 7-zip MIPS | openssl | memcpy | memset |
|---|---|---|---|---|---|
| ~2540 MHz | dmc_ondemand (upthreshold: 40) | 15090 | 1448890 | 10160 | 28770 |
| ~2420 MHz | dmc_ondemand (upthreshold: 20) | 16720 | 1387470 | 9710 | 29340 |
| ~2310 MHz | performance | 16290 | 1322410 | 10200 | 28610 |

The reason is simple: adjusting the dmc governor (dmc_ondemand with upthreshold=20, my board in the middle) is a better choice than overvolting/overclocking since it gives you lower idle consumption and better performance at the same time, especially compared to ‘overclocking’, which is horrible from an energy efficiency point of view. The higher the supply voltage, the less efficient the CPU cores.
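One way to read the table is to normalise the 7-zip score by CPU clock. The sketch below does that with the three rows above; "MIPS per MHz" is my own illustrative metric, not something sbc-bench reports.

```python
# Rows from the table above: (approx. CPU clock in MHz, dmc setting, 7-zip MIPS).
rows = [
    (2540, "dmc_ondemand (upthreshold: 40)", 15090),
    (2420, "dmc_ondemand (upthreshold: 20)", 16720),
    (2310, "performance", 16290),
]

def mips_per_mhz(clock_mhz: int, mips: int) -> float:
    """7-zip MIPS delivered per MHz of CPU clock."""
    return mips / clock_mhz

# Map each dmc setting to its normalised score.
efficiency = {dmc: mips_per_mhz(clock, mips) for clock, dmc, mips in rows}

for clock, dmc, mips in rows:
    print(f"~{clock} MHz, {dmc}: {efficiency[dmc]:.2f} MIPS/MHz")
```

The overclocked run with upthreshold=40 gets the least work done per clock cycle, which fits the point that the memory settings matter more for 7-zip than the extra CPU MHz.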

I changed the dmc governor to performance and got a higher result: http://ix.io/4bMe compared to the default dmc_ondemand: http://ix.io/4bLQ, but also a higher temperature. BTW this is with 1.25V set for the 2.4GHz OPP.

Comparing 4 results: two without overclocking (your board and mine at the bottom) and two with overvolting/overclocking:

| cpufreq | dmc settings | 7-zip MIPS | openssl | memcpy | memset | idle | full load |
|---|---|---|---|---|---|---|---|
| ~2640 MHz | performance | 17350 | 1505860 | 9910 | 28750 | +600mW | unknown |
| ~2540 MHz | dmc_ondemand (40) | 15090 | 1448890 | 10160 | 28770 | - | unknown |
| ~2350 MHz | dmc_ondemand (20) | 16300 | 1327430 | 9550 | 29140 | - | - |
| ~2310 MHz | performance | 16290 | 1322410 | 10200 | 28610 | +600mW | ~7000mW |

performance dmc governor has the huge disadvantage of increased idle consumption for no other reason than unfortunate settings.

Overclocking requires overvolting, which ends up with huge consumption increases at full CPU utilization. What I’ve measured above with just 1050 mV instead of 1000 mV was a whopping 15% consumption increase for a laughable 2.5% performance ‘boost’. Have you been able to measure peak consumption when benchmarking? It’s not possible with a simple USB powermeter anyway, at least not with 7-zip, since there is too much fluctuation.
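As a rough sanity check, the common CMOS approximation that dynamic power scales with V² · f gives a figure in the same ballpark as the measurement. This is only a sketch: it ignores leakage and everything on the board that is not the CPU rail, and it assumes the clock gain equals the 2.5% performance gain.

```python
def relative_dynamic_power(v_ratio: float, f_ratio: float) -> float:
    """Relative dynamic power under the P ~ C * V^2 * f approximation."""
    return v_ratio ** 2 * f_ratio

v_ratio = 1050 / 1000   # 1050 mV instead of 1000 mV
f_ratio = 1.025         # assumption: clock gain equals the ~2.5% performance gain

predicted = relative_dynamic_power(v_ratio, f_ratio)
measured = 1.15         # the ~15% consumption increase reported above

# Performance per watt relative to stock settings, using the measured figure.
perf_per_watt = f_ratio / measured

print(f"predicted power increase: +{(predicted - 1) * 100:.1f}%")
print(f"measured power increase:  +{(measured - 1) * 100:.1f}%")
print(f"relative performance per watt: {perf_per_watt:.3f}")
```

Under these assumptions the model predicts roughly +13%, and performance per watt drops by about 11% with the measured value.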

As such IMO key to better overall performance while keeping consumption low is better settings instead of ‘overclocking’.

I have to keep the fan running at full speed for the overclocking, which is very noisy. I don’t have a powermeter so I don’t know the power consumption, but it can be inferred from the temperature: 60°C with the fan running at full speed is too high. But since my board has a low pvtm value, setting 1.05V lets it reach 2.4GHz, which is valuable to me.

A little concern after having played around with DT overlays and overvolting: my board now consumes significantly more than before even without any DT overlay loaded or other DT manipulations.

@willy: have you already done some tests here? Since you’re able to measure maybe it’s a good idea to check consumption prior to any such tests and then compare.

Since I made only reboots all the time I disconnected it now from power for an hour but still same symptom: significantly higher idle consumption. Also the upthreshold=20 trick doesn’t work any more since now DRAM is all the time at 2112 MHz and not 528 MHz as before (having this already taken into account and measured with powersave dmc governor as well).

Maybe I’m just overlooking something, but I thought I’d write this as a little warning so others can measure before/after conducting any overvolting tests.

I think your extra consumption simply comes from the DMC running at full speed (for whatever reason), that’s the same difference of ~600mW you measured last month.

It does indeed happen to fry chips with voltage increases, but not by such small values. You’re definitely safe below the “absolute maximum ratings”, which usually are much higher because they don’t depend on temperature but the process node and technology used that impose hard limits on the voltage across a transistor, even in idle. I don’t know the value here but it might well be 1.5V or so. Regardless I’ve sometimes operated machines above the abs max ratings… But the gains on modern technologies are fairly limited, we’re not dealing with 486s anymore.

With that said, no I haven’t been running frequency tests yet (by pure lack of time, not curiosity). And yes, that’s definitely something for which I’ll watch the wattmeter. I’m even thinking that it could be nice to observe the total energy used by the test and compare it with the test duration. Usually the ratios are totally discouraging
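Comparing total energy per test run could look like the sketch below: integrate logged power samples over time and compare the joules spent. The sample logs are hypothetical (15% more power for a run that finishes 2.5% sooner), not measurements from this thread, and `energy_joules` is my own helper name.

```python
def energy_joules(samples):
    """Integrate (time_s, power_mW) samples with the trapezoidal rule."""
    total_mj = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        total_mj += (p0 + p1) / 2.0 * (t1 - t0)
    return total_mj / 1000.0  # mJ -> J

# Hypothetical wattmeter logs: (seconds since start, milliwatts).
stock = [(0, 7000), (10, 7000), (20, 7000), (30, 7000), (40, 7000)]
overvolted = [(0, 8050), (13, 8050), (26, 8050), (39, 8050)]

e_stock = energy_joules(stock)
e_over = energy_joules(overvolted)

print(f"stock:      {e_stock:.1f} J in {stock[-1][0]} s")
print(f"overvolted: {e_over:.1f} J in {overvolted[-1][0]} s")
print(f"energy ratio: {e_over / e_stock:.3f}")
```

With these made-up numbers the faster run still costs about 12% more energy, which is the kind of discouraging ratio meant above.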

Last week I measured 1280mW in idle, now it’s 1460mW. DMC not involved since switched to powersave before:

root@rock-5b:/tmp# echo powersave >/sys/devices/platform/dmc/devfreq/dmc/governor

root@rock-5b:/tmp# monit-rk3588.sh

CPU0-3 CPU4-5 CPU6-7 DDR DSU GPU NPU

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

408 408 408 528 396 200 200

^C

And as reported the upthreshold behaviour now differs since with 20 DRAM is at 2112 MHz all the time while it was at 528 MHz last week. This then still adds another ~600mW…

Did you maybe change the kernel since the previous measurements?

It was just a change in settings I had forgotten and the TL;DR version is as simple as this:

- consumption difference wrt ASPM with DRAM at lowest clock: 230 mW

- consumption difference wrt ASPM with DRAM at highest clock: 160 mW

Setting /sys/module/pcie_aspm/parameters/policy to either default or performance makes no significant difference. The consumption delta is always compared to powersave (5.10 BSP kernel default).

Long story: I was still on ‘Linux 5.10.69-rockchip-rk3588’ yesterday. Now decided to start over with Armbian_22.08.0-trunk_Rock-5b_focal_legacy_5.10.69.img I built 4 weeks ago:

idle consumption: 1250mW

One apt install linux-image-legacy-rockchip-rk3588 linux-dtb-legacy-rockchip-rk3588 linux-u-boot-rock-5b-legacy and a reboot later I’m at 5.10.72 (Armbian’s version string cosmetics):

idle consumption: 1230mW

Seems neither kernel nor bootloader related. I had a bunch of userland packages also updated but then remembered that silly me recently adjusted relevant settings. Since I started over with a freshly built Ubuntu 20.04 Armbian image (to be able to directly compare with Radxa’s) I had tweaks missing for some time:

/sys/devices/platform/dmc/devfreq/dmc/upthreshold = 25

/sys/module/pcie_aspm/parameters/policy = default

And yesterday I applied these settings again and then the numbers differed.
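For anyone who wants to reapply such tweaks reproducibly before measuring, here is a minimal sketch. It assumes the ASPM policy lives at the usual module parameter location /sys/module/pcie_aspm/parameters/policy; the `root` argument exists only so the function can be tried against a scratch directory, and all names are mine.

```python
from pathlib import Path

# Tunables mentioned above, relative to the filesystem root.
SETTINGS = {
    "sys/devices/platform/dmc/devfreq/dmc/upthreshold": "25",
    "sys/module/pcie_aspm/parameters/policy": "default",
}

def apply_settings(settings, root="/"):
    """Write each value to its sysfs node and return what reads back."""
    readback = {}
    for rel_path, value in settings.items():
        node = Path(root) / rel_path
        node.write_text(value + "\n")
        # On real hardware the ASPM node typically lists all policies and
        # marks the active one in brackets, so this need not equal `value`.
        readback[rel_path] = node.read_text().strip()
    return readback

# Usage (as root on the board): print(apply_settings(SETTINGS))
```

Logging the read-back values next to each power measurement makes it harder to forget which settings were active.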

With ASPM set to powersave (the kernel default) I’m measuring the 3rd time: idle consumption: 1220mW (which hints at 1220-1250mW being a range of expected results variation. For the lower numbers: I’ve three RPi USB-C power bricks lying around and am using most probably now a different one than before).

Now switching to /sys/module/pcie_aspm/parameters/policy = default again I’m measuring three times: 1530mW, 1480mW and 1480mW.

Then retesting with /sys/devices/platform/dmc/devfreq/dmc/governor = powersave to ensure DRAM keeps clocked at 528 MHz: 1470mW, 1460mW and 1470mW.

One more test with ASPM and dmc governor set to powersave: idle consumption: 1250mW.

Well, then switching ASPM between powersave and default makes up for a consumption difference of ~230mW (1470-1240). That’s a lot more than I measured weeks ago. But now I’m at 5.10.72 and back then was most probably on 5.10.66. Also back then there was no dmc governor enabled and as such the DRAM was at 2112 MHz all the time (now I’m comparing the difference at 528 MHz).
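The ~230mW figure can be reproduced by averaging the repeated readings. A minimal sketch, using the two ASPM powersave readings (1220mW and 1250mW) and the three readings with ASPM default and the dmc governor at powersave; which readings to group together is my own choice:

```python
from statistics import mean

# Idle readings in mW, taken from the measurements reported above.
aspm_powersave_mw = [1220, 1250]        # ASPM powersave
aspm_default_mw = [1470, 1460, 1470]    # ASPM default, dmc governor powersave

delta_mw = mean(aspm_default_mw) - mean(aspm_powersave_mw)

# The spread of each group hints at the run-to-run measurement variation.
spread_powersave = max(aspm_powersave_mw) - min(aspm_powersave_mw)
spread_default = max(aspm_default_mw) - min(aspm_default_mw)

print(f"ASPM default costs about {delta_mw:.0f} mW over powersave")
print(f"spread: {spread_powersave} mW (powersave), {spread_default} mW (default)")
```

The difference is several times larger than the spread within either group, so it is unlikely to be measurement noise.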

So retesting with the older image that is at 5.10.69:

DRAM at 528 MHz:

- both ASPM and dmc governor set to powersave: 1250mW

- ASPM default and dmc governor powersave: 1510mW

- ASPM performance and dmc governor powersave: 1470mW

DRAM at 2112 MHz:

- ASPM powersave and dmc governor performance: 1930mW

- ASPM default and dmc governor performance: 2090mW

- both ASPM and dmc governor set to performance: 2100mW
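Putting the six readings into a small grid makes both effects visible at once. This is a sketch based on my reading of the list above (three ASPM policies at each of the two DRAM clocks); the helper names are illustrative.

```python
# Idle consumption in mW, keyed by (DRAM clock in MHz, ASPM policy).
idle_mw = {
    (528, "powersave"): 1250,
    (528, "default"): 1510,
    (528, "performance"): 1470,
    (2112, "powersave"): 1930,
    (2112, "default"): 2090,
    (2112, "performance"): 2100,
}

def aspm_penalty(dram_mhz: int, policy: str) -> int:
    """Extra idle consumption of an ASPM policy over powersave."""
    return idle_mw[(dram_mhz, policy)] - idle_mw[(dram_mhz, "powersave")]

def dram_penalty(policy: str) -> int:
    """Extra idle consumption of DRAM at 2112 MHz over 528 MHz."""
    return idle_mw[(2112, policy)] - idle_mw[(528, policy)]

for dram in (528, 2112):
    for policy in ("default", "performance"):
        print(f"DRAM {dram} MHz, ASPM {policy}: +{aspm_penalty(dram, policy)} mW")

for policy in ("powersave", "default", "performance"):
    print(f"ASPM {policy}: DRAM at 2112 MHz costs +{dram_penalty(policy)} mW")
```

The DRAM clock accounts for roughly 600mW in every row, while the ASPM policy adds a smaller amount on top.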

BTW: when all CPU cores are at 408 MHz the scmi_clk_dsu clock jumps from 0 to 396 MHz (DSU). No idea how to interpret this…

Setting upthreshold = 20 ends up way too often with DRAM at 2112 MHz instead of 528 MHz, so I slightly increased upthreshold in my setup. I still need to get a quality M.2 SSD I can use for further tests, though. Right now every SSD that is not crappy is either in use somewhere else or a few hundred km away.

Cool that you found it! I remember that we’ve noted quite a number of times already that ASPM made a significant difference in power usage. This one is particularly important, maybe in part because the board features several lanes of PCIe 3.0, making it more visible than older boards with fewer, slower lanes.

Yeah, but until now I’ve not connected any PCIe device to any of the M.2 slots. So I really need to get a good SSD to test with since judging by reports from ODROID forum idle consumption once an NVMe SSD has been slapped into the M.2 slot rises to insane levels with RK’s BSP kernel (should be 4.19 over there, unfortunately users only sometimes post the relevant details).

Could be an indication that there are driver/settings issues with NVMe’s APST (Autonomous Power State Transition) besides ASPM.

If I can manage to arrange some time for this this week-end, I could run some tests with ASPM. I’m having M2->PCIE adapters and an M2 WIFI card, that should be sufficient.

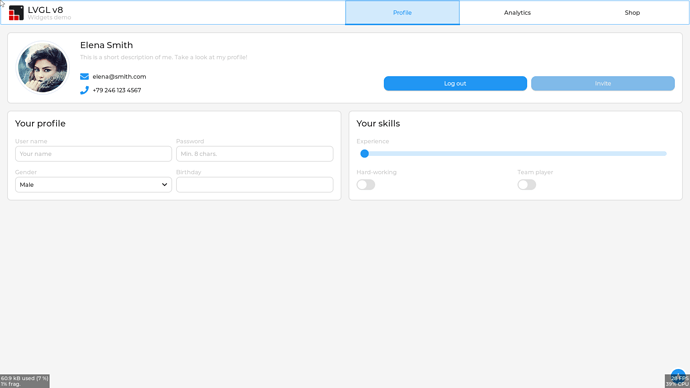

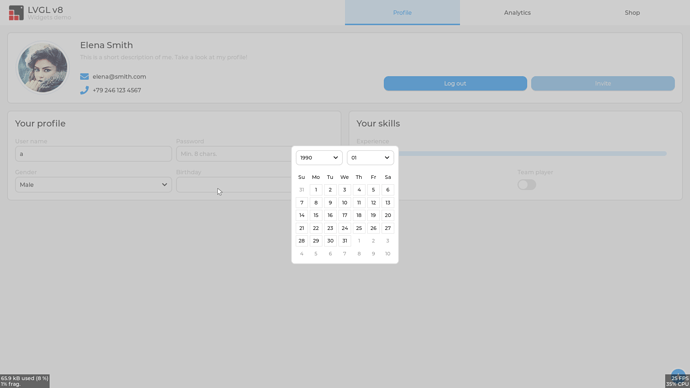

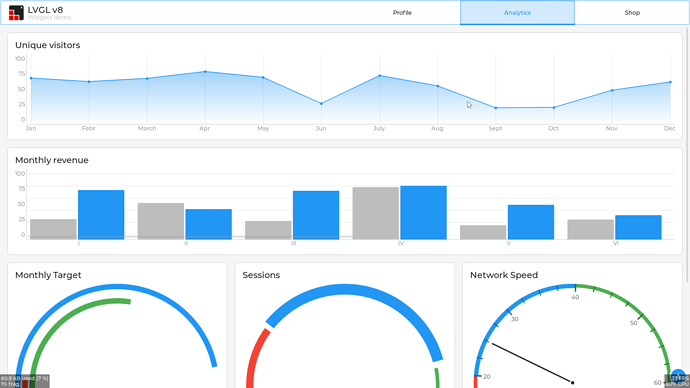

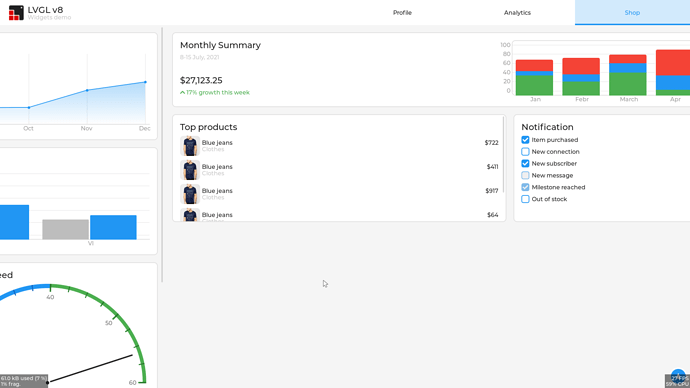

Testing LVGL on Rock 5B.

Dependencies:

- SDL2 with HW accel (build and install my version or the latest sdl2 might work better)

- cmake up to date

Build demo, recipe:

mkdir -p lvgl

cd lvgl

git clone --recursive https://github.com/littlevgl/pc_simulator.git

cd pc_simulator

mkdir -p build

cd build

cmake ..

make -j8

Before you build you should enable, disable or change some settings:

diff --git a/lv_conf.h b/lv_conf.h

index 0b9a6dc..7cf0612 100644

--- a/lv_conf.h

+++ b/lv_conf.h

@@ -49,7 +49,7 @@

#define LV_MEM_CUSTOM 0

#if LV_MEM_CUSTOM == 0

/*Size of the memory available for `lv_mem_alloc()` in bytes (>= 2kB)*/

- #define LV_MEM_SIZE (128 * 1024U) /*[bytes]*/

+ #define LV_MEM_SIZE (896 * 1024U) /*[bytes]*/

/*Set an address for the memory pool instead of allocating it as a normal array. Can be in external SRAM too.*/

#define LV_MEM_ADR 0 /*0: unused*/

@@ -151,7 +151,7 @@

/*Maximum buffer size to allocate for rotation.

*Only used if software rotation is enabled in the display driver.*/

-#define LV_DISP_ROT_MAX_BUF (32*1024)

+#define LV_DISP_ROT_MAX_BUF (64*1024)

/*-------------

* GPU

@@ -184,7 +184,7 @@

#if LV_USE_GPU_SDL

#define LV_GPU_SDL_INCLUDE_PATH <SDL2/SDL.h>

/*Texture cache size, 8MB by default*/

- #define LV_GPU_SDL_LRU_SIZE (1024 * 1024 * 8)

+ #define LV_GPU_SDL_LRU_SIZE (1024 * 1024 * 64)

/*Custom blend mode for mask drawing, disable if you need to link with older SDL2 lib*/

#define LV_GPU_SDL_CUSTOM_BLEND_MODE (SDL_VERSION_ATLEAST(2, 0, 6))

#endif

diff --git a/lv_drivers b/lv_drivers

--- a/lv_drivers

+++ b/lv_drivers

@@ -1 +1 @@

-Subproject commit 1bd4368e71df5cafd68d1ad0a37ce0f92b8f6b88

+Subproject commit 1bd4368e71df5cafd68d1ad0a37ce0f92b8f6b88-dirty

diff --git a/lv_drv_conf.h b/lv_drv_conf.h

index 4f6a4e2..b40db57 100644

--- a/lv_drv_conf.h

+++ b/lv_drv_conf.h

@@ -95,8 +95,8 @@

#endif

#if USE_SDL || USE_SDL_GPU

-# define SDL_HOR_RES 480

-# define SDL_VER_RES 320

+# define SDL_HOR_RES 1920

+# define SDL_VER_RES 1080

/* Scale window by this factor (useful when simulating small screens) */

# define SDL_ZOOM 1

Tested with HDMI 1920x1080 and debug info. There is also a Wayland driver that may be a lot faster, but as I don’t have Wayland I have not tested it.

Screenshots:

Panfrost now almost works well enough that you might want to use it:

=======================================================

glmark2 2021.12

=======================================================

OpenGL Information

GL_VENDOR: Panfrost

GL_RENDERER: Mali-G610 (Panfrost)

GL_VERSION: OpenGL ES 3.1 Mesa 22.3.0-devel (git-7fce4e1bfd)

Surface Config: buf=32 r=8 g=8 b=8 a=8 depth=24 stencil=0

Surface Size: 800x600 windowed

=======================================================

[build] use-vbo=false: FPS: 481 FrameTime: 2.079 ms

[build] use-vbo=true: FPS: 453 FrameTime: 2.208 ms

[texture] texture-filter=nearest: FPS: 444 FrameTime: 2.252 ms

[texture] texture-filter=linear: FPS: 431 FrameTime: 2.320 ms

[texture] texture-filter=mipmap: FPS: 441 FrameTime: 2.268 ms

[shading] shading=gouraud: FPS: 423 FrameTime: 2.364 ms

[shading] shading=blinn-phong-inf: FPS: 8 FrameTime: 125.000 ms

[shading] shading=phong: FPS: 432 FrameTime: 2.315 ms

[shading] shading=cel: FPS: 427 FrameTime: 2.342 ms

[bump] bump-render=high-poly: FPS: 131 FrameTime: 7.634 ms

[bump] bump-render=normals: FPS: 449 FrameTime: 2.227 ms

[bump] bump-render=height: FPS: 8 FrameTime: 125.000 ms

[effect2d] kernel=0,1,0;1,-4,1;0,1,0;: FPS: 453 FrameTime: 2.208 ms

[effect2d] kernel=1,1,1,1,1;1,1,1,1,1;1,1,1,1,1;: FPS: 411 FrameTime: 2.433 ms

[pulsar] light=false:quads=5:texture=false: FPS: 484 FrameTime: 2.066 ms

[desktop] blur-radius=5:effect=blur:passes=1:separable=true:windows=4: FPS: 0 FrameTime: inf ms

[desktop] effect=shadow:windows=4: FPS: 151 FrameTime: 6.623 ms

[buffer] columns=200:interleave=false:update-dispersion=0.9:update-fraction=0.5:update-method=map: FPS: 228 FrameTime: 4.386 ms

[buffer] columns=200:interleave=false:update-dispersion=0.9:update-fraction=0.5:update-method=subdata: FPS: 217 FrameTime: 4.608 ms

[buffer] columns=200:interleave=true:update-dispersion=0.9:update-fraction=0.5:update-method=map: FPS: 174 FrameTime: 5.747 ms

[ideas] speed=duration: FPS: 162 FrameTime: 6.173 ms

[jellyfish] <default>: FPS: 8 FrameTime: 125.000 ms

[terrain] <default>: FPS: 0 FrameTime: inf ms

[shadow] <default>: FPS: 3 FrameTime: 333.333 ms

[refract] <default>: FPS: 0 FrameTime: inf ms

[conditionals] fragment-steps=0:vertex-steps=0: FPS: 402 FrameTime: 2.488 ms

[conditionals] fragment-steps=5:vertex-steps=0: FPS: 8 FrameTime: 125.000 ms

[conditionals] fragment-steps=0:vertex-steps=5: FPS: 447 FrameTime: 2.237 ms

[function] fragment-complexity=low:fragment-steps=5: FPS: 431 FrameTime: 2.320 ms

[function] fragment-complexity=medium:fragment-steps=5: FPS: 420 FrameTime: 2.381 ms

[loop] fragment-loop=false:fragment-steps=5:vertex-steps=5: FPS: 434 FrameTime: 2.304 ms

[loop] fragment-steps=5:fragment-uniform=false:vertex-steps=5: FPS: 436 FrameTime: 2.294 ms

[loop] fragment-steps=5:fragment-uniform=true:vertex-steps=5: FPS: 423 FrameTime: 2.364 ms

=======================================================

glmark2 Score: 285

=======================================================
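To spot the scenes that still fall over, the result lines can be parsed and filtered by FPS. A minimal sketch with five lines copied from the run above; the regular expression is my own guess at the line layout and has only been matched against this output, and the 10 FPS threshold is arbitrary.

```python
import re

# A few result lines copied from the glmark2 run above.
GLMARK_OUTPUT = """\
[build] use-vbo=false: FPS: 481 FrameTime: 2.079 ms
[shading] shading=blinn-phong-inf: FPS: 8 FrameTime: 125.000 ms
[bump] bump-render=high-poly: FPS: 131 FrameTime: 7.634 ms
[terrain] <default>: FPS: 0 FrameTime: inf ms
[shadow] <default>: FPS: 3 FrameTime: 333.333 ms
"""

LINE_RE = re.compile(
    r"^\[(?P<scene>\w+)\] (?P<opts>.*): FPS: (?P<fps>\d+) FrameTime: \S+ ms$"
)

def parse_results(text):
    """Return (scene, options, fps) for every glmark2 result line."""
    results = []
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            results.append((m["scene"], m["opts"], int(m["fps"])))
    return results

def frame_time_ms(fps):
    """Frame time implied by an FPS value (infinite for 0 FPS)."""
    return float("inf") if fps == 0 else 1000.0 / fps

def slow_scenes(results, threshold_fps=10):
    """Scenes rendering below the given FPS threshold."""
    return [r for r in results if r[2] < threshold_fps]

for scene, opts, fps in slow_scenes(parse_results(GLMARK_OUTPUT)):
    print(f"{scene} ({opts}): {fps} FPS, {frame_time_ms(fps):.3f} ms/frame")
```

The FrameTime column is simply 1000 / FPS, so only the FPS value needs to be kept.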

The current code is in the csf branch of https://gitlab.com/panfork/mesa/.

Great work! By the way, is there any score comparison with the Arm blob driver?

There’s not much point in doing that yet… currently I wait for the GPU to power off between each frame so there’s an overhead of a few milliseconds each frame, so benchmarks will always be a lot worse. I think the blob is at least five times faster for things like glmark at the moment.
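A fixed per-frame overhead puts a hard ceiling on FPS no matter how fast the actual rendering is. The sketch below illustrates this with hypothetical numbers (2 ms overhead, 0.4 ms render time). Neither value was measured; the only data point from the run above is that the best scene reached 484 FPS, i.e. about 2.07 ms per frame in total.

```python
def fps_with_overhead(render_ms: float, overhead_ms: float) -> float:
    """FPS when every frame pays a fixed overhead on top of render time."""
    return 1000.0 / (render_ms + overhead_ms)

def fps_ceiling(overhead_ms: float) -> float:
    """Upper bound on FPS as render time approaches zero."""
    return 1000.0 / overhead_ms

# Hypothetical values for illustration only.
render_ms = 0.4
overhead_ms = 2.0

with_wait = fps_with_overhead(render_ms, overhead_ms)
without_wait = fps_with_overhead(render_ms, 0.0)

print(f"with power-off wait:    {with_wait:.0f} FPS")
print(f"without power-off wait: {without_wait:.0f} FPS")
print(f"ceiling at {overhead_ms} ms overhead: {fps_ceiling(overhead_ms):.0f} FPS")
```

For light scenes the overhead dominates completely, which is why a benchmark comparison against the blob says little about the driver itself at this stage.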

In terms of stability, it’s getting a lot better, and just a few minutes ago I fixed a bug with importing shared buffers, so now Weston, Sway and Mutter all work, and even Xwayland with acceleration can work to a degree, which the blob can’t do.

Also recently fixed is support for using the blob for clients when the compositor is using Panfrost, for non-wlroots compositors. This means that you can have (or will have, once I fix a few more issues) all the performance of the blob for Wayland GLES applications, but X11 can still be accelerated!

A few horrendous hacks later, and SuperTuxKart now runs, even with the fancy GLES3 renderer!

Which driver will win the race?

(Answer: They both run badly, because the real bottleneck is Weston’s screen capture. Maybe Sway+wf-recorder would work better, except that the blob doesn’t work with it unless I do some patching.)

Does this work with mainline kernel or do we still need Rockchip BSP?