@hipboi Is this really what you wanted?

ROCK 5B Debug Party Invitation

there are some parameters here /sys/devices/platform/fb000000.gpu/devfreq/fb000000.gpu too.

thank you so much for your very interesting feedback! it should be considered. we should use the good parameters for everyone.

I was planning to monitor this and npu.

Maybe create a very simple monitor (collect relevant info) and draw a chart in png at the final step, that’s the idea.
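A minimal sketch of that idea, assuming each device exposes a `cur_freq` file in its devfreq directory the way the GPU path above does. The helper name `log_freqs_csv` and the CSV layout are made up for illustration, and drawing the chart from the resulting file would be a separate step.

```shell
# Print one CSV row: a Unix timestamp followed by the current frequency
# in MHz of every devfreq directory passed as an argument.
# log_freqs_csv is a hypothetical helper, not part of any existing tool.
log_freqs_csv() (
    row=$(date +%s)
    for dir in "$@"; do
        if [ -r "$dir/cur_freq" ]; then
            hz=$(cat "$dir/cur_freq")
            row="$row,$((hz / 1000000))"
        else
            row="$row,NA"    # device missing or not readable
        fi
    done
    printf '%s\n' "$row"
)

# Possible use (one sample every 5 seconds, appended to a file):
#   while true; do
#       log_freqs_csv /sys/devices/platform/fb000000.gpu/devfreq/fb000000.gpu \
#                     /sys/devices/platform/fdab0000.npu/devfreq/fdab0000.npu >> freqs.csv
#       sleep 5
#   done
```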

@tkaiser, I am following up on your findings. If I come up with something useful, I will push it to GitHub.

i think @icecream95 started to reverse engineer the npu, maybe he can disclose some additional info about his findings.

I haven’t gotten very far with reversing the NPU, currently I’m still focusing on the GPU and its firmware.

(I’ve found that the MCU in the GPU runs at the same speed as the shader cores, so if anyone has a use for a Cortex-M7 clocked at 1 GHz which can access at least 1 GB of RAM through the GPU MMU…)

I think you mean the DMC governor, right? Until we move the PD voltage negotiation into U-Boot, we have to enable DMC to save power, to make sure there is enough power to boot into the kernel.

PD voltage negotiation.

For Radxa (no idea whether they’re following this thread or just have a party in a hidden Discord channel) the results should be obvious, especially when sending out review samples to those clueless YouTube clowns who will share performance numbers at least 10% below what RK3588 is able to deliver.

We did not send any developer edition to any Youtubers, only developers.

Wow that’s insane… before this, the only chip with Cortex-M7 with such frequency I have ever seen is NXP’s i.mx rt1170.

You might get more power savings by CONFIG_CPU_FREQ_DEFAULT_GOV_POWERSAVE=y (spent a lot of time on this half a decade ago when still contributing to Armbian, ofc the distro then needs to switch back to schedutil or ondemand in a later stage e.g. by configuring cpufrequtils or some radxa-tune-hardware service).

Speaking of schedutil vs. ondemand and I/O performance the choice is rather obvious: https://github.com/radxa/kernel/commit/55f540ce97a3d19330abea8a0afc0052ab2644ef#commitcomment-79484235

I/O performance sucks without either performance or ondemand combined with io_is_busy (and to be honest: Radxa’s (lack of) feedback sucks too).

My older script code rotting unmaintained in some Armbian service won’t do it any more (and they won’t change anything about it since not giving a sh*t about low-level optimisations).

Yeah, but once you send out review samples IMHO you should’ve fixed the performance issues. Both.

Currently the only other RK3588(S) vendor affected by trashed memory performance is Firefly. All the others have not discovered/enabled the dmc device-tree node so far.

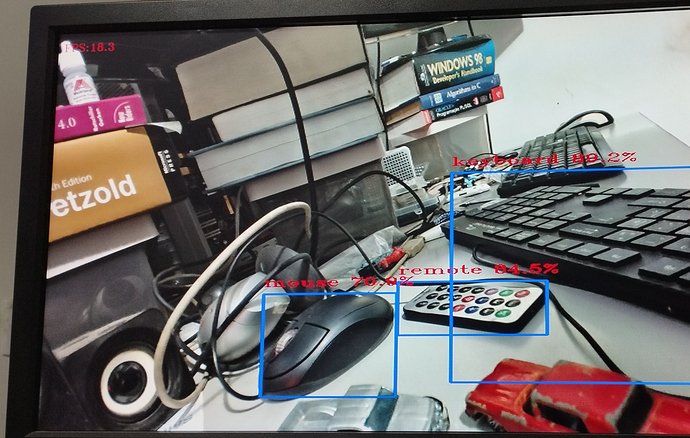

The following experiment was performed to test the effect of the “performance” governor on the GPU and NPU with the latest Radxa kernel settings (ondemand without tkaiser’s tweaks):

the board is idle (no GPU and npu activities):

root@rock5b:/sys# cat ./devices/platform/fdab0000.npu/devfreq/fdab0000.npu/available_frequencies

300000000 400000000 500000000 600000000 700000000 800000000 900000000 1000000000

root@rock5b:/sys# cat ./devices/platform/fdab0000.npu/devfreq/fdab0000.npu/cur_freq

1000000000

root@rock5b:/sys# cat ./devices/platform/fb000000.gpu/devfreq/fb000000.gpu/available_frequencies

1000000000 900000000 800000000 700000000 600000000 500000000 400000000 300000000

root@rock5b:/sys# cat ./devices/platform/fb000000.gpu/devfreq/fb000000.gpu/cur_freq

300000000

cat ./devices/platform/fb000000.gpu/devfreq/fb000000.gpu/governor

simple_ondemand

Running kmscube, glmark2-es-drm and the sdl2-cam (camera + npu +sdl) did not change cur_freq for gpu and npu.

Next:

root@rock5b:/home/rock# cat /sys/devices/platform/fb000000.gpu/devfreq/fb000000.gpu/governor

simple_ondemand

root@rock5b:/home/rock# cat /sys/devices/platform/fb000000.gpu/devfreq/fb000000.gpu/cur_freq

300000000

root@rock5b:/home/rock# echo performance > /sys/devices/platform/fb000000.gpu/devfreq/fb000000.gpu/governor

root@rock5b:/home/rock# cat /sys/devices/platform/fb000000.gpu/devfreq/fb000000.gpu/cur_freq

1000000000

root@rock5b:/home/rock# cat /sys/devices/platform/fdab0000.npu/devfreq/fdab0000.npu/governor

userspace

root@rock5b:/home/rock# echo performance > /sys/devices/platform/fdab0000.npu/devfreq/fdab0000.npu/governor

root@rock5b:/home/rock# cat /sys/devices/platform/fdab0000.npu/devfreq/fdab0000.npu/governor

performance

root@rock5b:/home/rock# cat /sys/devices/platform/fdab0000.npu/devfreq/fdab0000.npu/cur_freq

1000000000

Got a ~10% boost with sdl2-cam demo and i have the feeling that npu became much more responsive.

glmark2-es-drm and testgles2 are capped at 60 fps and kmscube does not report fps. What would be the best way to measure gpu with a possible gain?

I will test with “performance” governor and see what i get.

Applied:

echo 1 > /sys/devices/system/cpu/cpu4/cpufreq/ondemand/io_is_busy

echo 25 > /sys/devices/system/cpu/cpu4/cpufreq/ondemand/up_threshold

echo 10 > /sys/devices/system/cpu/cpu4/cpufreq/ondemand/sampling_down_factor

echo 200000 > /sys/devices/system/cpu/cpu4/cpufreq/ondemand/sampling_rate

echo 1 > /sys/devices/system/cpu/cpu0/cpufreq/ondemand/io_is_busy

echo 25 > /sys/devices/system/cpu/cpu0/cpufreq/ondemand/up_threshold

echo 10 > /sys/devices/system/cpu/cpu0/cpufreq/ondemand/sampling_down_factor

echo 200000 > /sys/devices/system/cpu/cpu0/cpufreq/ondemand/sampling_rate

Got ~20% boost overall.
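The commands above cover cpu0 and cpu4 only. A sketch that writes the same four values to every cpufreq policy that has an ondemand directory could look like this. It assumes the per-policy layout `/sys/devices/system/cpu/cpufreq/policy*/ondemand/` (the same directories the `cpuN/cpufreq` paths point to when tunables are per policy); `apply_ondemand_tweaks` and its optional root argument are names invented here, and it needs to run as root.

```shell
# Write the four ondemand tunables used in this thread to every cpufreq
# policy that has an ondemand directory. The optional first argument is
# the cpufreq sysfs root; it defaults to the usual location.
apply_ondemand_tweaks() (
    root=${1:-/sys/devices/system/cpu/cpufreq}
    for dir in "$root"/policy*/ondemand; do
        [ -d "$dir" ] || continue      # policy is not using ondemand
        echo 1      > "$dir/io_is_busy"
        echo 25     > "$dir/up_threshold"
        echo 10     > "$dir/sampling_down_factor"
        echo 200000 > "$dir/sampling_rate"
        echo "tuned ${dir%/ondemand}"
    done
)

# Possible use:
#   apply_ondemand_tweaks
```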

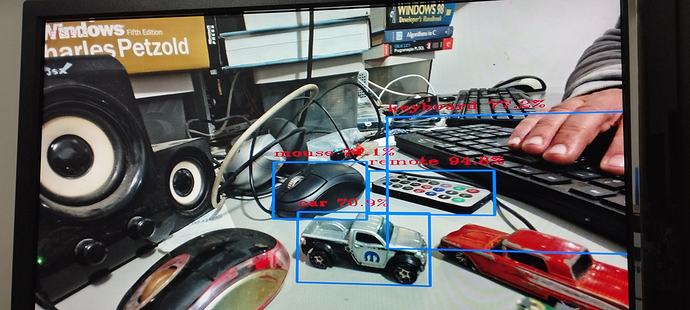

From 15 FPS to 18 FPS. Again, that depends on the object being detected: before I had peaks of 20 fps, now 24 fps. I think the bottleneck is in OpenCV.

performance governor:

cat /sys/devices/system/cpu/cpu?/cpufreq/scaling_governor

performance

performance

performance

performance

performance

performance

performance

performance

24 fps:

Please keep in mind that we’ve 3 cpufreq policies on RK3588: 0, 4 and 6. And please also keep in mind that cpufreq is just one piece of the puzzle if the task is bottlenecked by CPU cores (maybe opencv). The scheduler and maybe also IRQ processing is another piece.

So I would suggest to switch to performance for all those tests [1] and then try to pin the CPU relevant stuff to the A76 cores either by prefixing calls with taskset -c 4-7 or using cgroups.
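One way to build the CPU list for `taskset` without hard-coding it is to read `related_cpus` from the two A76 policies. This is only a sketch: `big_core_list` is a hypothetical helper, and it assumes policy4 and policy6 are the A76 clusters as described above.

```shell
# Print the CPUs belonging to cpufreq policy4 and policy6 as a
# comma-separated list suitable for "taskset -c".
# The optional argument is the cpufreq sysfs root.
big_core_list() (
    root=${1:-/sys/devices/system/cpu/cpufreq}
    cat "$root/policy4/related_cpus" "$root/policy6/related_cpus" |
        tr -s ' \n' ',' | sed 's/,$//'
)

# Possible use (./your-demo is a placeholder for the program under test):
#   taskset -c "$(big_core_list)" ./your-demo
```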

It would also be interesting to see what /proc/interrupts looks like before and after such tests, since if there’s an awful lot of IRQs and those remain on cpu0, this is another area for optimisation.

[1] Not just cpufreq governor but all of them. IMO it makes more sense to optimize for performance now and for powersavings later once max performance is known:

/sys/devices/platform/dmc/devfreq/dmc/governor

/sys/devices/platform/fb000000.gpu/devfreq/fb000000.gpu/governor

/sys/devices/platform/fdab0000.npu/devfreq/fdab0000.npu/governor

/sys/devices/system/cpu/cpufreq/policy0/scaling_governor

/sys/devices/system/cpu/cpufreq/policy4/scaling_governor

/sys/devices/system/cpu/cpufreq/policy6/scaling_governor

BTW: There’s also debugfs to check frequencies:

root@rock-5b:~# cat /sys/kernel/debug/clk/clk_summary | grep scmi_clk_

scmi_clk_npu 0 3 0 200000000 0 0 50000

scmi_clk_gpu 0 2 0 200000000 0 0 50000

scmi_clk_ddr 0 0 0 528000000 0 0 50000

scmi_clk_cpub23 0 0 0 408000000 0 0 50000

scmi_clk_cpub01 0 0 0 408000000 0 0 50000

scmi_clk_dsu 0 0 0 0 0 0 50000

scmi_clk_cpul 0 0 0 408000000 0 0 50000

Now switch DRAM and CPU cores to performance:

root@rock-5b:~# echo performance >/sys/devices/platform/dmc/devfreq/dmc/governor

root@rock-5b:~# echo performance >/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor

root@rock-5b:~# echo performance >/sys/devices/system/cpu/cpu4/cpufreq/scaling_governor

root@rock-5b:~# echo performance >/sys/devices/system/cpu/cpu6/cpufreq/scaling_governor

And confirm:

root@rock-5b:~# cat /sys/kernel/debug/clk/clk_summary | grep scmi_clk_

scmi_clk_npu 0 3 0 200000000 0 0 50000

scmi_clk_gpu 0 2 0 200000000 0 0 50000

scmi_clk_ddr 0 0 0 2112000000 0 0 50000

scmi_clk_cpub23 0 0 0 2400000000 0 0 50000

scmi_clk_cpub01 0 0 0 2400000000 0 0 50000

scmi_clk_dsu 0 0 0 0 0 0 50000

scmi_clk_cpul 0 0 0 1800000000 0 0 50000

For whatever reasons these clocks match the following cpufreq policies:

- 0-3 --> cpul
- 4-5 --> cpub01
- 6-7 --> cpub23
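For test runs it can be handy to flip all six governor files from footnote [1] in one go. A minimal sketch, where `set_all_governors` and its optional sysfs-root argument are invented for illustration. It skips files that do not exist or are not writable (for example when the dmc node is missing) and does not check whether the requested governor is actually offered by each device.

```shell
# Write the given governor name to the DMC, GPU, NPU and the three
# cpufreq policy governor files. Second argument: sysfs root (default /sys).
set_all_governors() (
    gov=$1
    root=${2:-/sys}
    for f in \
        devices/platform/dmc/devfreq/dmc/governor \
        devices/platform/fb000000.gpu/devfreq/fb000000.gpu/governor \
        devices/platform/fdab0000.npu/devfreq/fdab0000.npu/governor \
        devices/system/cpu/cpufreq/policy0/scaling_governor \
        devices/system/cpu/cpufreq/policy4/scaling_governor \
        devices/system/cpu/cpufreq/policy6/scaling_governor
    do
        if [ -w "$root/$f" ]; then
            echo "$gov" > "$root/$f"
        else
            echo "skipped $f" >&2     # missing or not writable
        fi
    done
)

# Possible use (as root):
#   set_all_governors performance
```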

For q&d monitoring while running experiments:

#!/bin/bash

renice 19 $BASHPID >/dev/null 2>&1

i=0

printf "%7s %7s %7s %7s %7s %7s %7s\n" $(sed 's/cpul/cpua/' </sys/kernel/debug/clk/clk_summary | sort | awk -F" " '/scmi_clk_/ {print $1}' | sed -e 's/scmi_clk_//' -e 's/000000//' -e 's/cpub01/cpu4-5/' -e 's/cpub23/cpu6-7/' -e 's/cpua/cpu0-3/' | tr "\n" " " | tr '[:lower:]' '[:upper:]')

while true ; do

printf "%7s %7s %7s %7s %7s %7s %7s\n" $(sed 's/cpul/cpua/' </sys/kernel/debug/clk/clk_summary | sort | awk -F" " '/scmi_clk_/ {print $5}' | sed -e 's/000000//' | tr "\n" " ")

sleep 5

((i++))

if [ $i -eq 24 ]; then

i=0

printf "\n%7s %7s %7s %7s %7s %7s %7s\n" $(sed 's/cpul/cpua/' </sys/kernel/debug/clk/clk_summary | sort | awk -F" " '/scmi_clk_/ {print $1}' | sed -e 's/scmi_clk_//' -e 's/000000//' -e 's/cpub01/cpu4-5/' -e 's/cpub23/cpu6-7/' -e 's/cpua/cpu0-3/' | tr "\n" " " | tr '[:lower:]' '[:upper:]')

fi

done

Looks like this then:

root@rock-5b:/home/tk# check-rk3588-clocks

CPU0-3 CPU4-5 CPU6-7 DDR DSU GPU NPU

1800 600 1200 528 0 200 200

600 816 1200 528 396 200 200

816 1008 408 528 0 200 200

600 816 1416 1068 594 200 200

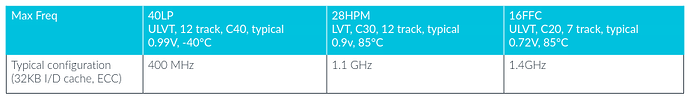

^C

From the Cortex-M7 datasheet:

If it can do a gigahertz even at 28nm, then at 8nm it shouldn’t even be a challenge.

I’ve also benchmarked the command-stream interpreter (which is separate from the MCU), and when using the instructions for loading and storing 64 bytes at a time (similar to AArch32 LDM/STM, but adjusted to work with the 96 available 32-bit registers) it can memcpy at about 310 MB/s. Probably the MCU is faster, but it uses a separate address space so mapping memory is harder.

Well, situation with RK3588 and its much more capable memory controller seems to differ

Using sbc-bench’s -g switch to do consumption measurements with this device: Power monitoring on socket 2 of powerbox-1 (Netio 4KF, FW v3.2.0, XML API v2.4, 233.43V @ 49.98Hz).

We’re talking about ~1740 mW when clocking the LPDDR4 in idle with 528 MHz vs. ~2420 mW when at 2112 MHz. A whopping 680 mW difference at least on my board with 16GB RAM.

All CPU cores and dmc governor set to powersave (only the mW value at the bottom counts since averaged from a few measurements before):

System health while idling for 4 minutes:

Time big.LITTLE load %cpu %sys %usr %nice %io %irq Temp mW

11:57:45: 408/ 408MHz 0.89 7% 0% 6% 0% 0% 0% 37.9°C 20

11:58:15: 408/ 408MHz 0.54 0% 0% 0% 0% 0% 0% 37.9°C 1740

11:58:45: 408/ 408MHz 0.33 0% 0% 0% 0% 0% 0% 37.0°C 1740

11:59:15: 408/ 408MHz 0.20 0% 0% 0% 0% 0% 0% 37.0°C 1750

11:59:45: 408/ 408MHz 0.12 1% 0% 0% 0% 0% 0% 37.0°C 1740

12:00:15: 408/ 408MHz 0.14 1% 0% 0% 0% 0% 0% 36.1°C 1750

12:00:46: 408/ 408MHz 0.08 0% 0% 0% 0% 0% 0% 37.0°C 1740

12:01:16: 408/ 408MHz 0.05 0% 0% 0% 0% 0% 0% 37.0°C 1740

Now only dmc governor set to performance:

System health while idling for 4 minutes:

Time big.LITTLE load %cpu %sys %usr %nice %io %irq Temp mW

12:07:21: 408/ 408MHz 1.02 12% 0% 11% 0% 0% 0% 40.7°C 20

12:07:51: 408/ 408MHz 0.62 0% 0% 0% 0% 0% 0% 39.8°C 2550

12:08:21: 408/ 408MHz 0.37 0% 0% 0% 0% 0% 0% 39.8°C 2480

12:08:51: 408/ 408MHz 0.29 0% 0% 0% 0% 0% 0% 39.8°C 2460

12:09:21: 408/ 408MHz 0.17 0% 0% 0% 0% 0% 0% 39.8°C 2450

12:09:51: 408/ 408MHz 0.10 0% 0% 0% 0% 0% 0% 39.8°C 2440

12:10:22: 408/ 408MHz 0.06 0% 0% 0% 0% 0% 0% 39.8°C 2430

12:10:52: 408/ 408MHz 0.18 0% 0% 0% 0% 0% 0% 39.8°C 2420

Quick check with dmc_ondemand results in numbers slightly above powersave:

System health while idling for 4 minutes:

Time big.LITTLE load %cpu %sys %usr %nice %io %irq Temp mW

12:17:08: 408/ 408MHz 1.03 15% 0% 14% 0% 0% 0% 38.8°C 10

12:17:38: 408/ 408MHz 0.62 0% 0% 0% 0% 0% 0% 37.9°C 1760

12:18:08: 408/ 408MHz 0.38 0% 0% 0% 0% 0% 0% 37.9°C 1800

12:18:38: 408/ 408MHz 0.23 0% 0% 0% 0% 0% 0% 37.9°C 1770

12:19:08: 408/ 408MHz 0.20 0% 0% 0% 0% 0% 0% 37.9°C 1760

12:19:39: 408/ 408MHz 0.12 0% 0% 0% 0% 0% 0% 37.0°C 1760

12:20:09: 408/ 408MHz 0.07 0% 0% 0% 0% 0% 0% 37.0°C 1740

12:20:39: 408/ 408MHz 0.04 0% 0% 0% 0% 0% 0% 37.0°C 1740

Edit: corrected values above from 700mW difference to 680mW. And tried to confirm with another PSU (this time 15W RPi USB-C power brick, before some 24W USB PD charger):

- powersave / 528 MHz: 35.2°C, idle consumption: 1280mW
- performance / 2112 MHz: 37.9°C, idle consumption: 1910mW

A 630mW difference this time (the measurements include all losses by PSU and cable between PSU and board and those PSUs might differ in low load situations).
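The arithmetic behind these figures, as a small sketch. `idle_delta` is a made-up helper, the inputs are the idle readings quoted above, and the percentage is truncated by integer division.

```shell
# Print the absolute and relative increase between two idle readings in mW.
# $1: reading with DRAM at 528 MHz, $2: reading with DRAM at 2112 MHz.
idle_delta() {
    echo "$(( $2 - $1 )) mW (+$(( ($2 - $1) * 100 / $1 ))%)"
}

idle_delta 1740 2420   # 24W USB PD charger      -> 680 mW (+39%)
idle_delta 1280 1910   # 15W RPi USB-C power brick -> 630 mW (+49%)
```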

The only thing I had not changed was the dmc governor.

No visible improvement after changing it (maybe 0.5 fps spikes):

Previous:

root@rock5b:/home/rock# cat /sys/kernel/debug/clk/clk_summary | grep scmi_clk_

scmi_clk_npu 0 3 0 200000000 0 0 50000

scmi_clk_gpu 0 2 0 200000000 0 0 50000

scmi_clk_ddr 0 0 0 528000000 0 0 50000

scmi_clk_cpub23 0 0 0 408000000 0 0 50000

scmi_clk_cpub01 0 0 0 408000000 0 0 50000

scmi_clk_dsu 0 0 0 0 0 0 50000

scmi_clk_cpul 0 0 0 1416000000 0 0 50000

performance:

echo performance >/sys/devices/platform/dmc/devfreq/dmc/governor

echo performance >/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor

echo performance >/sys/devices/system/cpu/cpu4/cpufreq/scaling_governor

echo performance >/sys/devices/system/cpu/cpu6/cpufreq/scaling_governor

checking again:

root@rock5b:/home/rock# cat /sys/kernel/debug/clk/clk_summary | grep scmi_clk_

scmi_clk_npu 0 3 0 200000000 0 0 50000

scmi_clk_gpu 0 2 0 200000000 0 0 50000

scmi_clk_ddr 0 0 0 2112000000 0 0 50000

scmi_clk_cpub23 0 0 0 2256000000 0 0 50000

scmi_clk_cpub01 0 0 0 2256000000 0 0 50000

scmi_clk_dsu 0 0 0 0 0 0 50000

scmi_clk_cpul 0 0 0 1800000000 0 0 50000

and monitoring while running the demo:

root@rock5b:/home/rock# ./mon.sh

CPU0-3 CPU4-5 CPU6-7 DDR DSU GPU NPU

1800 2256 2256 2112 0 200 200

1800 2256 2256 2112 0 200 200

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

CPU0-3 CPU4-5 CPU6-7 DDR DSU GPU NPU

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

CPU0-3 CPU4-5 CPU6-7 DDR DSU GPU NPU

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

CPU0-3 CPU4-5 CPU6-7 DDR DSU GPU NPU

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 300 1000

1800 2256 2256 2112 0 200 1000

Update: forgot gpu and npu (1 fps improvement)

root@rock5b:/home/rock# ./mon.sh

CPU0-3 CPU4-5 CPU6-7 DDR DSU GPU NPU

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

1800 2256 2256 2112 0 1000 1000

So adjusting the cpufreq settings brought the biggest gain. Still interested in SMP/IRQ affinity… do both interrupts and tasks end up on big cores? Can you please post /proc/interrupts contents after such a test run and check with htop/atop where the tasks run and whether there are CPU bottlenecks present?
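To get a quick feel for where IRQs land, the per-CPU columns of /proc/interrupts can be summed up. A rough sketch (`irq_totals` is a hypothetical helper; it only counts rows that carry a value for every CPU, so summary rows such as `Err:` are ignored on multi-core systems):

```shell
# Sum the per-CPU interrupt counters and print one "CPUn total" line per CPU.
# The optional argument is the file to read (default /proc/interrupts).
irq_totals() {
    awk 'NR == 1 { n = NF; for (i = 1; i <= n; i++) name[i] = $i; next }
         NF > n  { for (i = 1; i <= n; i++)
                       if ($(i + 1) ~ /^[0-9]+$/) sum[i] += $(i + 1) }
         END     { for (i = 1; i <= n; i++) printf "%s %d\n", name[i], sum[i] }' \
        "${1:-/proc/interrupts}"
}

# Possible use: save the totals before and after a test run and compare.
#   irq_totals > before.txt; ./your-demo; irq_totals > after.txt
#   diff before.txt after.txt
```

Here `./your-demo` is a placeholder for whatever workload is being tested.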

This may be a naive question, but what kernel are you using to have access to the dmc governor settings? I pulled the latest one from yesterday from the radxa GitHub repo and am still not seeing the entry under /sys. Did you change any config? I would have liked to test here and take measurements as well. The debug/clk entries say I’m at 2112 MHz for the DDR, and idling around 1.6W (measured at the USB plug).

check:

https://github.com/radxa/kernel/commit/4ce9a743b253c0c344686085213de1c4059b9d59

CONFIG_ARM_ROCKCHIP_DMC_DEVFREQ=y

Thanks! So I’m having the right option:

$ zgrep DMC_DEVFREQ /proc/config.gz

CONFIG_ARM_ROCKCHIP_DMC_DEVFREQ=y

I checked the DTB and found the matching dmc node:

$ dtc -I dtb -O dts < /boot/dtbs/5.10.66-21-rockchip-gbbe5dbfb385d/rockchip/rk3588-rock-5b.dtb

dmc {

compatible = "rockchip,rk3588-dmc";

interrupts = <0x00 0x49 0x04>;

interrupt-names = "complete";

devfreq-events = <0x37>;

clocks = <0x0e 0x04>;

clock-names = "dmc_clk";

operating-points-v2 = <0x38>;

upthreshold = <0x28>;

downdifferential = <0x14>;

system-status-level = <0x01 0x04 0x08 0x08 0x02 0x01 0x10 0x04 0x10000 0x04 0x1000 0x08 0x4000 0x08 0x2000 0x08 0xc00 0x08>;

auto-freq-en = <0x01>;

status = "okay";

center-supply = <0x39>;

mem-supply = <0x3a>;

phandle = <0x1d8>;

};

One possibility could be that I’m running on rock5b-v1.1.dtb instead, as it doesn’t have this entry, but I’m seeing regulator-name “vcc3v3_pcie2x1l2” which isn’t in that one, so I’m still digging.

Edit: here’s what I’m seeing there:

$ ll /sys/devices/platform/dmc

ls: cannot access '/sys/devices/platform/dmc': No such file or directory

$ sudo find /sys -name '*dmc*'

/sys/bus/platform/drivers/rockchip-dmc

/sys/firmware/devicetree/base/dmc

/sys/firmware/devicetree/base/__symbols__/dmc

/sys/firmware/devicetree/base/__symbols__/dmc_opp_table

/sys/firmware/devicetree/base/dmc-opp-table

$ ll /sys/bus/platform/drivers/rockchip-dmc

total 0

drwxr-xr-x 2 root root 0 Sep 4 17:58 .

drwxr-xr-x 180 root root 0 Sep 4 17:58 ..

--w------- 1 root root 4096 Sep 4 18:10 bind

--w------- 1 root root 4096 Sep 4 18:10 uevent

--w------- 1 root root 4096 Sep 4 18:10 unbind

But I do see the opp:

$ ll /sys/firmware/devicetree/base/dmc-opp-table

total 0

drwxr-xr-x 6 root root 0 Sep 4 17:58 .

drwxr-xr-x 373 root root 0 Sep 4 17:58 ..

-r--r--r-- 1 root root 20 Sep 4 18:12 compatible

-r--r--r-- 1 root root 14 Sep 4 18:12 name

-r--r--r-- 1 root root 8 Sep 4 18:12 nvmem-cell-names

-r--r--r-- 1 root root 4 Sep 4 18:12 nvmem-cells

drwxr-xr-x 2 root root 0 Sep 4 17:58 opp-1068000000

drwxr-xr-x 2 root root 0 Sep 4 17:58 opp-1560000000

drwxr-xr-x 2 root root 0 Sep 4 17:58 opp-2750000000

drwxr-xr-x 2 root root 0 Sep 4 17:58 opp-528000000

-r--r--r-- 1 root root 4 Sep 4 18:12 phandle

-r--r--r-- 1 root root 48 Sep 4 18:12 rockchip,leakage-voltage-sel

-r--r--r-- 1 root root 4 Sep 4 18:12 rockchip,low-temp

-r--r--r-- 1 root root 4 Sep 4 18:12 rockchip,low-temp-min-volt

-r--r--r-- 1 root root 4 Sep 4 18:12 rockchip,temp-hysteresis
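The driver directory listed further up contains only `bind`, `uevent` and `unbind`. When a platform driver is bound to a device, sysfs also shows a symlink named after that device inside the driver directory, so the listing suggests the rockchip-dmc driver is registered but did not bind to the dmc node. A small sketch to check this (`bound_devices` is a hypothetical helper name); if it prints nothing, the kernel log (`dmesg | grep -i dmc`) would probably be the next place to look.

```shell
# List the devices currently bound to a platform driver.
# The optional argument is the driver directory in sysfs.
bound_devices() (
    drv=${1:-/sys/bus/platform/drivers/rockchip-dmc}
    for entry in "$drv"/*; do
        case ${entry##*/} in
            module) continue ;;    # link to the owning module, not a device
        esac
        # bound devices show up as symlinks; bind/unbind/uevent are plain files
        [ -L "$entry" ] && basename "$entry"
    done
    true
)

# Possible use:
#   bound_devices
```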