I have installed a new 64 GB eMMC and will rebuild everything. If that is what happened (and it looks like it), I will update here.

Building kernel natively … Pass OK

Ambient Temp… 12 °C

Hm, completely forgot about this one.

root@rock-5b:/mnt/md127# cat /sys/module/pcie_aspm/parameters/policy

[default] performance powersave powersupersave
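A minimal shell sketch for checking and changing the policy shown above. The kernel marks the active ASPM policy with square brackets; the helper name `aspm_active_policy` is hypothetical. Switching assumes root and a kernel built with CONFIG_PCIEASPM, and a runtime change only lasts until reboot.

```shell
# Hypothetical helper: print the active ASPM policy, i.e. the entry the
# kernel wraps in [brackets], from the policy text given on stdin.
aspm_active_policy() {
    sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}

POLICY_FILE=/sys/module/pcie_aspm/parameters/policy

# Only read the real file if this kernel exposes it.
if [ -r "$POLICY_FILE" ]; then
    echo "active ASPM policy: $(aspm_active_policy < "$POLICY_FILE")"
fi

# To switch at runtime (as root, lost on reboot):
#   echo performance > /sys/module/pcie_aspm/parameters/policy
# To make it stick, pcie_aspm.policy=performance on the kernel
# command line should have the same effect from boot.
```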

Not that it helped in this case, but maybe it will solve another problem of mine.

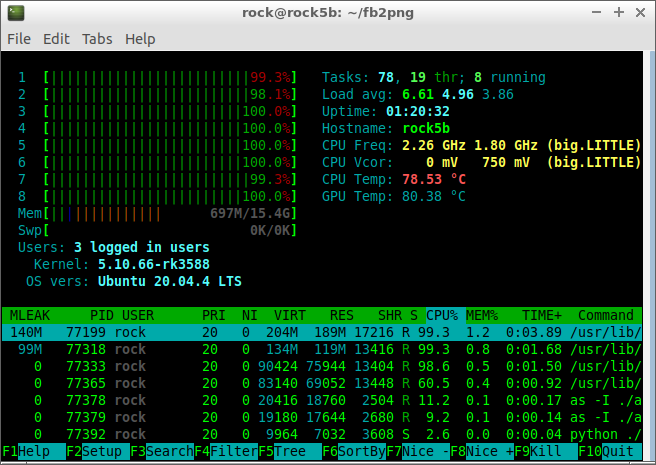

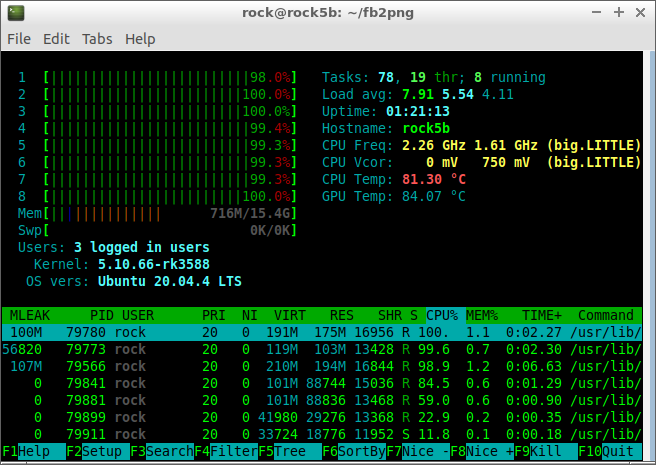

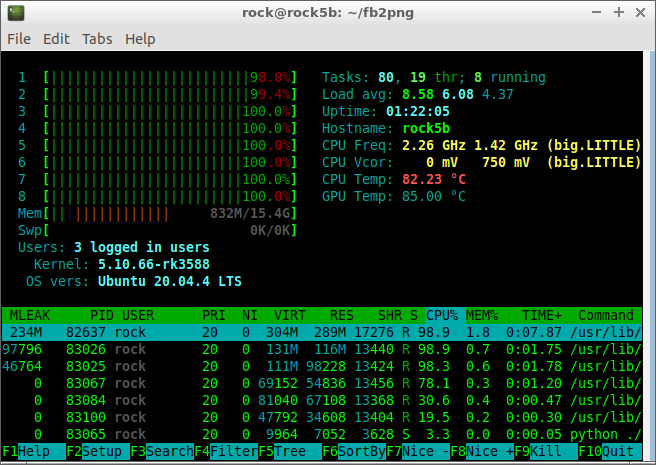

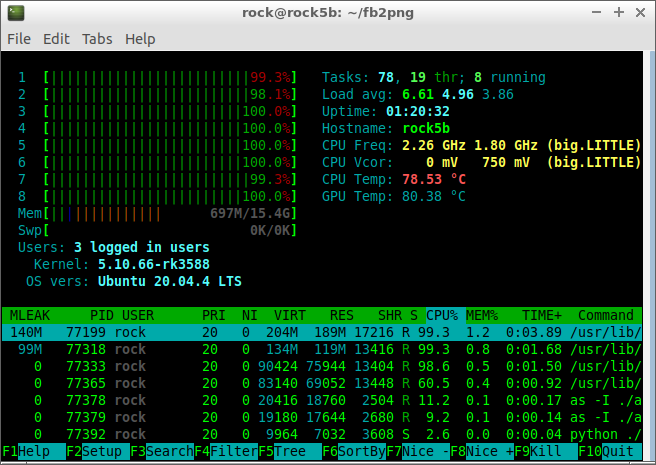

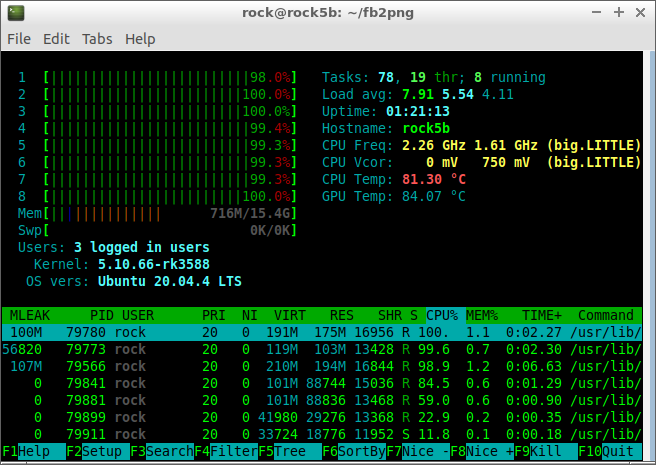

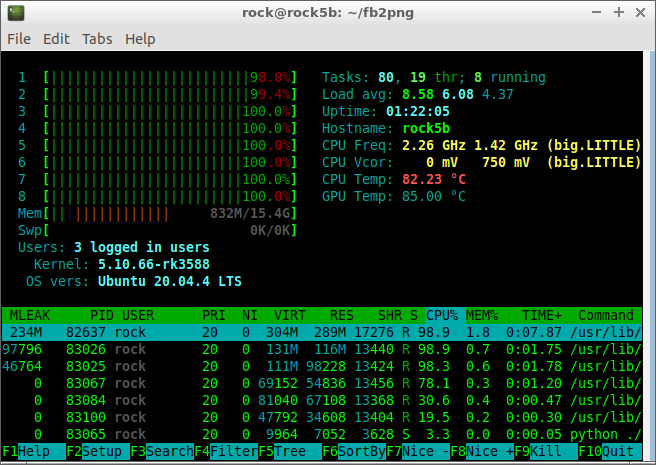

Sooo, you are not gonna cool the memory modules or the power module, or at least use a fan? You just put a small passive heatsink on a 12 W CPU and get 85 °C on the CPU/GPU sensors. HMMMM, totally not overheating.

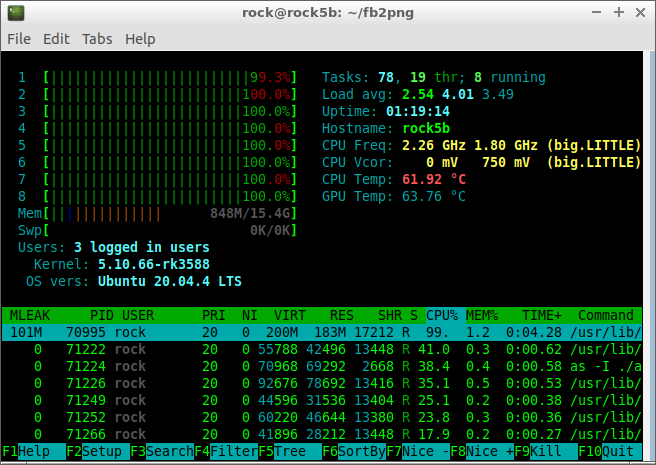

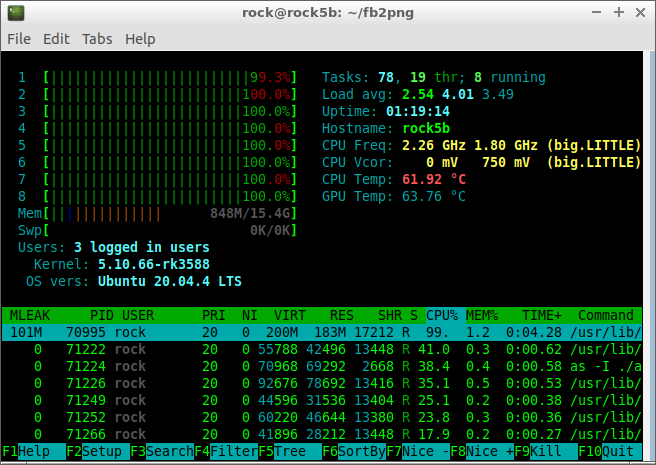

For example, this is my setup for building the kernel, and I still get 61 °C on it.

root@rock-5b:/mnt/md127# cat /sys/class/thermal/thermal_zone*/temp

65615 68384 68384 66538 62846 61000 62846
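The values above are in millidegrees Celsius (65615 means 65.6 °C). A small shell sketch to convert them and label each reading with its zone type; both helper names are hypothetical.

```shell
# Hypothetical helper: convert thermal_zone "temp" values (millidegrees
# Celsius, space- or newline-separated on stdin) to °C, one decimal each.
mdeg_to_c() {
    awk '{ for (i = 1; i <= NF; i++) printf "%.1f\n", $i / 1000 }'
}

# Hypothetical helper: print "<zone type>: <temp> °C" for every readable zone.
show_zone_temps() {
    for z in /sys/class/thermal/thermal_zone*; do
        [ -r "$z/temp" ] || continue
        t=$(cat "$z/temp" 2>/dev/null) || continue
        type=$(cat "$z/type" 2>/dev/null)
        # Fall back to the directory name if the zone has no "type" file.
        printf '%s: %s °C\n' "${type:-$(basename "$z")}" "$(echo "$t" | mdeg_to_c)"
    done
}
```

On the board, `show_zone_temps` prints one line per zone; which zones exist and what they are called depends on the kernel and device tree.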

I measured 2.35 Gbps even with iperf3 pinned to a little core, so I would propose repeating the test, again with the PC as the server, but this time running iperf3 -R -c 192.168.1.38 on the Rock 5B. This way retransmits are reported. If there are plenty of them, I would check or replace the cable (TX and RX use different pairs).
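A minimal sketch of the suggested reverse-mode run. It assumes cpu0 is a little core (on RK3588, cpu0 to cpu3 form the Cortex-A55 cluster) and that 192.168.1.38 is the PC acting as server, as in the text. The helpers `run_reverse_test` and `iperf_retransmits` are hypothetical; the latter relies on iperf3's plain-text summary, where the Retr count sits just before the trailing word "sender".

```shell
# Hypothetical helper: read iperf3 plain-text output on stdin and print the
# Retr column of the "sender" summary line(s).
iperf_retransmits() {
    awk '$NF == "sender" { print $(NF - 1) }'
}

# Hypothetical helper: pin the iperf3 client to cpu0 (assumed little core)
# and use -R so the server (the PC) sends and the Rock 5B receives.
run_reverse_test() {
    taskset -c 0 iperf3 -R -c "${1:-192.168.1.38}"
}
```

For example, `run_reverse_test | iperf_retransmits` on the Rock 5B. A count in the thousands would point at the cable or the link partner rather than the board.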

Removing the switch would also be an idea. Just install avahi-autoipd and avahi-daemon beforehand and access the other host not via an IP address but by its name (if your PC’s hostname is foobar, it can be reached as foobar.local).
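A sketch of the direct-cable setup, assuming Debian/Ubuntu on both hosts. avahi-autoipd is meant to give each host a 169.254.x.x link-local address when no DHCP server is present; resolving `.local` names from ordinary tools such as iperf3 also assumes the nss-mdns resolver (Debian package libnss-mdns) is installed. The helper `mdns_name` is hypothetical.

```shell
# One-time setup on both hosts (package names as given in the post):
#   sudo apt install avahi-daemon avahi-autoipd

# Hypothetical helper: turn a hostname into its mDNS (.local) name.
mdns_name() {
    # Drop any domain suffix (foobar.lan -> foobar), then append .local
    printf '%s.local\n' "${1%%.*}"
}

# Example, not run here: on the Rock 5B, with the PC named foobar
#   iperf3 -R -c "$(mdns_name foobar)"
```

If name lookup fails, `avahi-resolve-host-name foobar.local` (from avahi-utils) shows whether avahi itself can see the other host.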

I did not expect it to heat up like that, but it was a one-time test to make sure the eMMC would survive. I am glad it did.

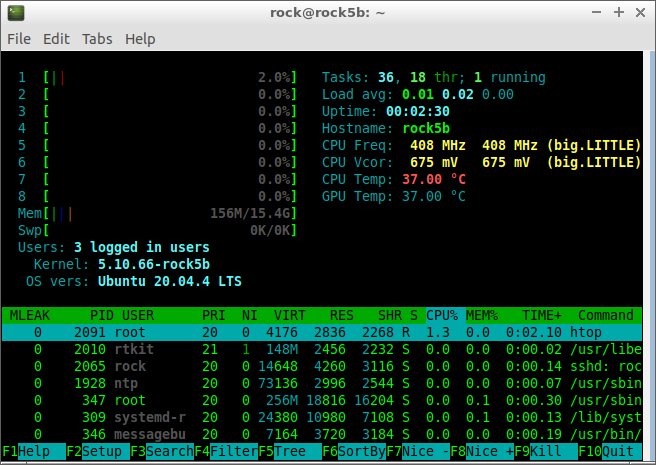

Ok, I think VCore is fixed.

cat /sys/class/regulator/regulator.??/name |grep vdd_cpu

vdd_cpu_lit_s0

vdd_cpu_big0_s0

vdd_cpu_big1_s0

root@rock5b:/home/rock# cat /sys/devices/platform/fd880000.i2c/i2c-0/0-0042/regulator/regulator.30/name

vdd_cpu_big0_s0

root@rock5b:/home/rock# cat /sys/devices/platform/fd880000.i2c/i2c-0/0-0043/regulator/regulator.31/name

vdd_cpu_big1_s0

cat /sys/kernel/debug/regulator/vdd_cpu_lit_s0/voltage

675000
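The 675000 above reads as microvolts, i.e. 0.675 V. A small shell sketch that lists every `vdd_cpu*` rail with its voltage via the sysfs `microvolts` attribute instead of debugfs; it globs `regulator.*` because `regulator.??` only matches two-digit indices. Both helper names are hypothetical, and not every regulator exposes `microvolts`.

```shell
# Hypothetical helper: convert microvolts (unit of the sysfs "microvolts"
# attribute) on stdin to volts with three decimals.
uv_to_v() {
    awk '{ printf "%.3f V\n", $1 / 1000000 }'
}

# Hypothetical helper: list every vdd_cpu* regulator with its voltage.
list_cpu_rails() {
    for r in /sys/class/regulator/regulator.*; do
        [ -r "$r/name" ] || continue
        name=$(cat "$r/name")
        case "$name" in
            vdd_cpu*) ;;
            *) continue ;;
        esac
        # "microvolts" only exists for regulators that report a voltage.
        if uv=$(cat "$r/microvolts" 2>/dev/null); then
            printf '%s: %s\n' "$name" "$(echo "$uv" | uv_to_v)"
        else
            printf '%s: (no microvolts attribute)\n' "$name"
        fi
    done
}
```

On the board, `list_cpu_rails` should print the little, big0 and big1 rails with their current setpoints.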

Welp, switched from Windows to Ubuntu. 14k retransmissions surely explain why the speed is so low. I guess the problem is with my setup.

Not really possible, since I don’t have any 2.5G NIC with me. That’s why I was asking for results from someone else.

Yes. So to be clear your PC has a 10GbE NIC and your switch is Nbase-T capable?

2.35 Gbit/sec in both directions even on a little core.
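Why 2.35 Gbit/s is roughly the ceiling, as a back-of-the-envelope estimate assuming a standard 1500-byte MTU and TCP timestamps enabled (the Linux default): each frame carries 1448 bytes of TCP payload but occupies 1538 byte times on the wire once preamble/SFD, Ethernet header, FCS and inter-frame gap are counted.

$$
\text{goodput} \approx 2.5\,\text{Gbit/s} \times \frac{1500 - 20_{\text{IP}} - 20_{\text{TCP}} - 12_{\text{TS}}}{8_{\text{preamble}} + 14_{\text{Eth}} + 1500 + 4_{\text{FCS}} + 12_{\text{IFG}}} = 2.5 \times \frac{1448}{1538} \approx 2.354\,\text{Gbit/s}
$$

So an iperf3 result of 2.35 Gbit/s is essentially what a saturated 2.5GbE link can deliver with this MTU.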

BTW: Realtek RTL8156B USB3 dongles work pretty well. With macOS even the RTL8156 (without the B) does, but numerous people report problems with it on Linux (no idea about Windows; I have almost no use for this OS).

But as usual: ‘buy cheap, buy twice’.

Yes, that’s correct. I guess the transceiver is to blame here, since I get the same picture even if I force it to 1G PC to PC. A MikroTik with an S+RJ10 is not the best choice for 2.5G Ethernet.

This is great news. It means it’s getting closer for those of us who pre-ordered months and months ago. Looking forward to the new chip and retiring my RK3399s.

More Ethernet basics (but I’ll leave that up to @willy to explain).

Anyway: it’s not a Rock 5B / RTL8125BG issue.

BTW: the amount of feedback from Radxa is overwhelming. But maybe all the important stuff happens in some closed/hidden Discord channel and they (together with those great Armbian guys babbling in their armbian-devel Discord crap channel that is not logged anywhere) simply laugh about everything that happens here ‘in the open’?

Yes, it’s not.

BTW, shouldn’t there be a link to this closed channel somewhere?

Are you hinting at something special, or can we expect that the Rock 5B’s M.2 implementation supports ‘bifurcation’ including clocks, to the point where the aforementioned PCIe splitters or this ODROID H2 net card work flawlessly?

Currently we have reserved two PCIe clocks on the M.2 M key slot of the ROCK 5B, which means splitting the PCIe x4 into two PCIe x2 is possible without an additional PCIe switch.

It needs pipewire-pulse. You may try this: https://pipewire-debian.github.io/pipewire-debian/

Ooh, I wonder if the Optane H10 (which uses x2x2 bifurcation) would work…

Why ask the Radxa team to support an obsolete Intel technology that only works on Microsoft Windows?

It is neither obsolete nor intel-proprietary. You’re thinking of Intel’s “Optane Memory” consumer-focused software product, which is just a fairly mediocre implementation of a tiered cache for an SSD.

The Optane H10 drive is just a 16/32GB PCIe 3.0 x2 Optane SSD and a 512/1024/2048GB QLC NAND SSD on a single card. It doesn’t have to be used with Intel’s shitty software, you can put them in whatever system (as long as it supports x2x2 bifurcation on the M.2 slot) and use the two drives as independent storage volumes.

They work very well as a combined ZIL SLOG (Optane) and L2ARC (NAND) drive in a 2x10Gbps ZFS storage server/NAS - Optane is nearly unmatched when it comes to ZIL SLOG performance, only RAM-based drives beat it - but of course Intel never bothered to market it that way.
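A hedged sketch of that SLOG/L2ARC layout, assuming the M.2 slot does x2x2 bifurcation so the H10 shows up as two separate NVMe devices. The helper `h10_zpool_cmds`, the pool name `tank` and the device paths are hypothetical; the helper only prints the commands so they can be reviewed before anything touches the pool.

```shell
# Hypothetical helper: print (not run) the zpool commands that attach an
# H10's two halves to an existing pool: the Optane part as SLOG ("log")
# and the QLC NAND part as L2ARC ("cache").
h10_zpool_cmds() {
    pool=$1 optane=$2 nand=$3
    if [ -z "$pool" ] || [ -z "$optane" ] || [ -z "$nand" ]; then
        echo "usage: h10_zpool_cmds POOL OPTANE_DEV NAND_DEV" >&2
        return 1
    fi
    printf 'zpool add %s log %s\n' "$pool" "$optane"
    printf 'zpool add %s cache %s\n' "$pool" "$nand"
}
```

For example, `h10_zpool_cmds tank /dev/nvme0n1 /dev/nvme1n1`, then pipe to `sh` as root once the output looks right. Stable `/dev/disk/by-id/` paths are safer than `nvmeXn1` names, which can change between boots, and `zpool add -n` previews the resulting layout without modifying the pool.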

Off-topic:

Intel barely even bothered to market Optane at all - it’s not an inherently bad technology, it has many major advantages over NAND flash that do actually make it worth the money, but the only thing they really bothered to market was “Optane Memory”, which isn’t even really a thing - it’s just Intel’s Rapid Storage Technology with an Optane cache drive and a brand name - and is atrocious. But that’s what people think of when you say Optane. Stupid.

Then they went all-in on Optane DCPMM (Optane chips on a DDR4 DIMM, and yes, it’s fast enough to almost keep up with DDR4), seeing it as a chance to get customers hooked on something that AMD couldn’t provide - but it’s an incredibly niche technology that’s not very useful outside of a few very specific use cases, and their decision to focus on it is what led to Micron quitting the 3D XPoint (generic term for Optane) joint venture.

And now they’ve dropped 3D XPoint entirely just before PCIe 5.0 and CXL 2.0 would’ve given it a new lease on life. Another genuinely innovative, highly promising, and potentially revolutionary technology driven into the dirt by Intel’s obsession with finding ways to lock customers into their platforms, rather than just producing a product good enough that nobody wants to go with anyone else.

Anyway yeah that’s wildly off-topic, but the tl;dr is that “Optane Memory” is just shitty software, it is not representative of Optane as a whole, and an SBC with a 32/512 H10 drive in it could be good for quite a few things.

While using the Rock 5 as ZFS storage may be an interesting idea (which I’m gonna test when I get the necessary parts), don’t forget that you don’t have ECC memory, which kinda defeats the point of ZFS (unless you are going for compression & dedup). And without the O_DIRECT patch (3.0), NVMe performance is reaaally bad.

Nope, this is a long debunked myth originating from FreeNAS/TrueNAS forums: https://jrs-s.net/2015/02/03/will-zfs-and-non-ecc-ram-kill-your-data/

Quoting one of the ZFS designers, Matthew Ahrens: