Update from my side: decoding works with ffplay. I tested the following files:

bbb_sunflower_1080p_30fps_normal.mp4

jellyfish-20-mbps-hd-hevc-10bit.mkv

jellyfish-120-mbps-4k-uhd-h264.mkv

jellyfish-20-mbps-hd-hevc.mkv

jellyfish-20-mbps-hd-h264.mkv

Oops, I just realized ffplay is using software decoding:

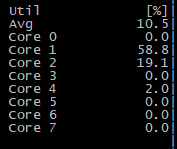

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

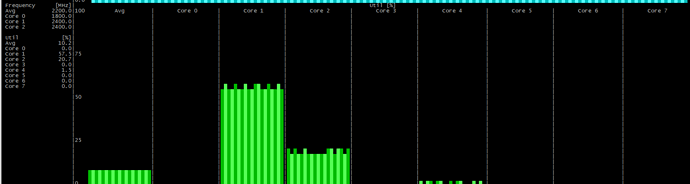

5823 rock 20 0 1861860 200304 63700 S 195.0 1.2 0:43.55 ffplay

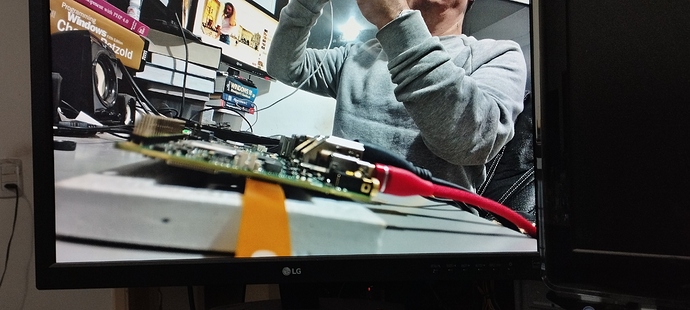

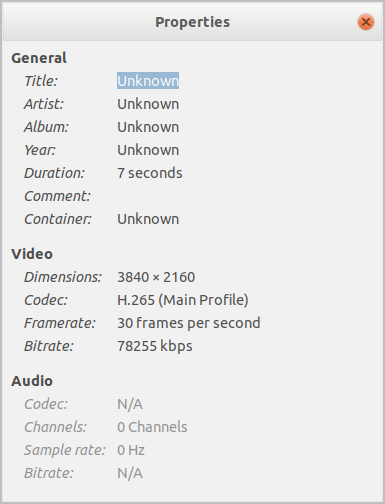

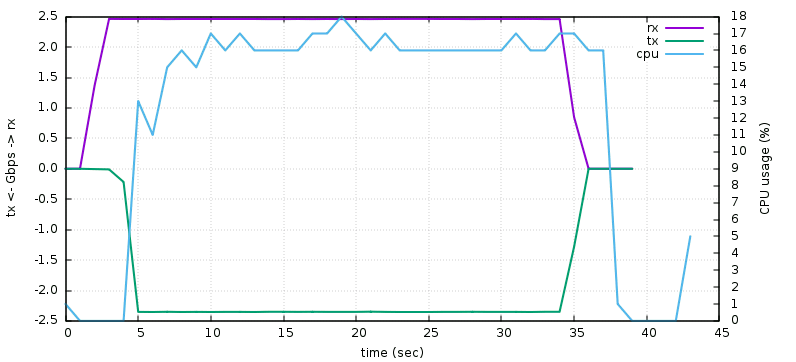
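One way to check whether hardware decoding is available is to ask FFmpeg which rkmpp decoders the build provides and then force one with ffplay's `-vcodec` option. The following is only a sketch: it assumes the decoders follow FFmpeg's usual `<codec>_rkmpp` naming (`h264_rkmpp`, `hevc_rkmpp`), and the helper function names are made up for illustration. Whether ffplay can actually display the frames the hardware decoder produces depends on how this particular build was patched, so treat it as something to try rather than a guaranteed fix.

```shell
#!/bin/sh
# Hypothetical helpers; decoder names assume FFmpeg's <codec>_rkmpp convention.

# Map a codec name to the (assumed) rkmpp decoder name.
rkmpp_decoder_for() {
    case "$1" in
        h264) echo "h264_rkmpp" ;;
        hevc|h265) echo "hevc_rkmpp" ;;
        *) echo "unsupported codec: $1" >&2; return 1 ;;
    esac
}

# List the rkmpp decoders this FFmpeg build really provides.
list_rkmpp_decoders() {
    ffmpeg -hide_banner -decoders 2>/dev/null | grep rkmpp
}

# Play a file while forcing the hardware decoder via ffplay's -vcodec option.
play_with_rkmpp() {
    codec="$1"
    file="$2"
    decoder="$(rkmpp_decoder_for "$codec")" || return 1
    ffplay -vcodec "$decoder" -i "$file"
}
```

For example, `play_with_rkmpp hevc jellyfish-20-mbps-hd-hevc.mkv` and then watching the %CPU column in `top` should show whether the load drops compared to the 195% seen above.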

Encoding with GStreamer also works, but H.264 encoded from a camera stream (3840x2160) plays back at only 5 fps on my old Intel box.

H264:

gst-launch-1.0 v4l2src device=/dev/video11 io-mode=dmabuf ! 'video/x-raw,format=NV12,width=3840,height=2160,framerate=30/1' ! mpph264enc ! filesink location=test_3840x2160_30fps_h264.mp4

H265:

gst-launch-1.0 v4l2src device=/dev/video11 io-mode=dmabuf ! 'video/x-raw,format=NV12,width=3840,height=2160,framerate=30/1' ! mpph265enc ! filesink location=test_3840x2160_30fps_h265.mkv
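Note that both pipelines send the encoder output straight to `filesink`, so the files contain a raw elementary stream regardless of the `.mp4` / `.mkv` extension. A possible variant that adds a parser and a muxer is sketched below. It assumes the standard GStreamer elements `h264parse`, `h265parse`, `mp4mux` and `matroskamux` are installed on the board; `build_capture_pipeline` is a hypothetical helper that only prints the command, and I have not verified this on the Rock 5B.

```shell
#!/bin/sh
# Hypothetical helper: print a gst-launch-1.0 command that encodes the camera
# stream and muxes it into a real container.
# Arguments: codec (h264|h265), output file name.
build_capture_pipeline() {
    codec="$1"
    out="$2"
    case "$codec" in
        h264) chain="mpph264enc ! h264parse ! mp4mux" ;;
        h265) chain="mpph265enc ! h265parse ! matroskamux" ;;
        *) echo "unsupported codec: $codec" >&2; return 1 ;;
    esac
    caps="video/x-raw,format=NV12,width=3840,height=2160,framerate=30/1"
    # -e makes gst-launch send EOS on Ctrl-C so the muxer can finalize the file.
    echo "gst-launch-1.0 -e v4l2src device=/dev/video11 io-mode=dmabuf ! '$caps' ! $chain ! filesink location=$out"
}
```

To run it rather than just print it: `eval "$(build_capture_pipeline h264 test_3840x2160_30fps_h264.mp4)"`. A properly muxed file carries timestamps and a duration, which may help players on other machines.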

For some reason, my (ancient) Intel box plays it at 3 fps at 1080p. So maybe my Intel box just cannot handle the 4K H.264/H.265 files properly.

Playing the H.265 file on the Rock 5B at 1080p works just fine:

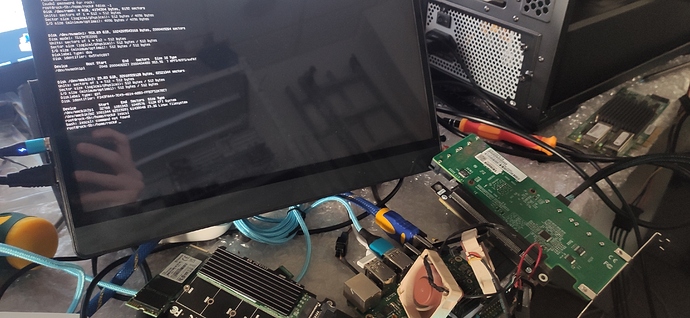

ffplay -i test_3840x2160_30fps_h265.mkv

ffplay version git-2022-05-25-73d7bc2 Copyright (c) 2003-2021 the FFmpeg developers

built with gcc 9 (Ubuntu 9.4.0-1ubuntu1~20.04.1)

configuration: --prefix=/usr --disable-libopenh264 --disable-vaapi --disable-vdpau --disable-decoder=h264_v4l2m2m --disable-decoder=vp8_v4l2m2m --disable-decoder=mpeg2_v4l2m2m --disable-decoder=mpeg4_v4l2m2m --disable-libxvid --disable-libx264 --disable-libx265 --enable-librga --enable-rkmpp --enable-nonfree --enable-gpl --enable-version3 --enable-libmp3lame --enable-libpulse --enable-libv4l2 --enable-libdrm --enable-libxml2 --enable-librtmp --enable-libfreetype --enable-openssl --enable-opengl --enable-libopus --enable-libvorbis --enable-shared --enable-decoder='aac,ac3,flac' --extra-cflags=-I/usr/src/linux-headers-5.10.66-rk3588/include

libavutil 57. 7.100 / 57. 7.100

libavcodec 59. 12.100 / 59. 12.100

libavformat 59. 8.100 / 59. 8.100

libavdevice 59. 0.101 / 59. 0.101

libavfilter 8. 16.100 / 8. 16.100

libswscale 6. 1.100 / 6. 1.100

libswresample 4. 0.100 / 4. 0.100

libpostproc 56. 0.100 / 56. 0.100

arm_release_ver of this libmali is 'g6p0-01eac0', rk_so_ver is '5'.

arm_release_ver of this libmali is 'g6p0-01eac0', rk_so_ver is '5'.

[hevc @ 0x7f94000c10] Stream #0: not enough frames to estimate rate; consider increasing probesize

Input #0, hevc, from 'test_3840x2160_30fps_h265.mkv':

Duration: N/A, bitrate: N/A

Stream #0:0: Video: hevc (Main), yuv420p(tv), 3840x2160, 30 fps, 30 tbr, 1200k tbn

nan M-V: nan fd= 9 aq= 0KB vq= 0KB sq= 0B f=0/0