@geerlingguy Which USB PD power supply did you use? Another user reported the same issue in the WeChat group: with the 65W PD power supply from Lenovo the O6 powers off properly, but with the 140W Legion PD power supply a power-off ends in a reboot.

Orion O6 Debug Party Invitation

I was originally testing with an Apple 61W USB-C PD supply, but have switched to testing with an Anker Nano II 65W GaN supply (which I haven’t yet tested with a boot/shutdown loop… I will try that later).

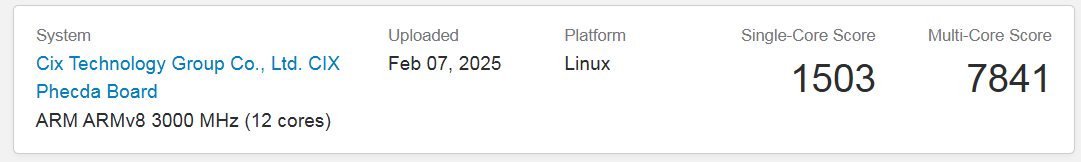

It looks like CIX pushed the cpu freq up to 3.0 GHz on their EVBs. This brings quite a performance improvement. https://browser.geekbench.com/v6/cpu/10375547

It’s 2.9 GHz (Geekbench is notoriously bad at reporting correct numbers), and this might be the CP8180 rather than the CD8180 SBC version we get on the O6.

What’s the difference?

Between CD8180 and CP8180? All I know is that different variants exist, and I suspect the CP8180 gets higher clocks (at the price of much higher peak / fully-loaded consumption).

“more flexible on the voltage/frequency” implies that the CD8180 found in O6 has more potential than CP8180.

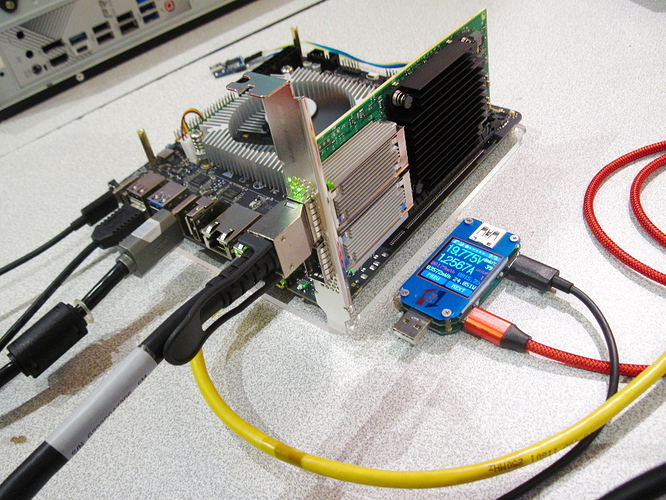

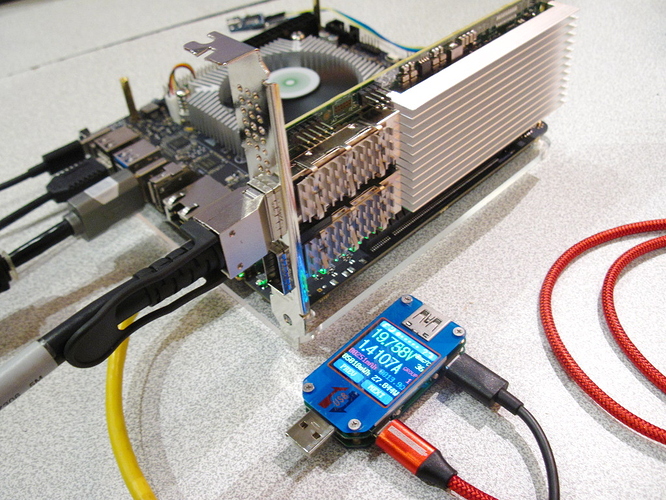

I could finally gather all the results of the quick 100 Gbps tests I ran with HAProxy this week. I first plugged in a Mellanox ConnectX-5 dual-100G NIC. It was properly recognized and negotiated a x8 link. The problem is that the card is a bit old now and limited to PCIe Gen3, so the tests were severely limited, and I replaced it. Here’s a photo and the output of lspci for the device:

0001:c1:00.0 Ethernet controller [0200]: Mellanox Technologies MT27800 Family [ConnectX-5] [15b3:1017]

Subsystem: Mellanox Technologies Mellanox ConnectX-5 MCX516A-CCAT [15b3:0007]

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 136

Region 0: Memory at 1800000000 (64-bit, prefetchable) [size=32M]

Expansion ROM at 60300000 [disabled] [size=1M]

Capabilities: [60] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 512 bytes, PhantFunc 0, Latency L0s unlimited, L1 unlimited

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr+ NonFatalErr- FatalErr- UnsupReq+ AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM not supported

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x8 (downgraded)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range ABCD, TimeoutDis+ NROPrPrP- LTR-

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt- EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- 10BitTagReq- OBFF Disabled,

AtomicOpsCtl: ReqEn+

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [48] Vital Product Data

Product Name: CX516A - ConnectX-5 QSFP28

Read-only fields:

[PN] Part number: MCX516A-CCAT

[EC] Engineering changes: A8

[V2] Vendor specific: MCX516A-CCAT

[SN] Serial number: MT1913K00843

[V3] Vendor specific: 7a98d2353b50e911800098039bcc0f74

[VA] Vendor specific: MLX:MODL=CX516A:MN=MLNX:CSKU=V2:UUID=V3:PCI=V0

[V0] Vendor specific: PCIeGen3 x16

[RV] Reserved: checksum good, 2 byte(s) reserved

End

Capabilities: [9c] MSI-X: Enable+ Count=64 Masked-

Vector table: BAR=0 offset=00002000

PBA: BAR=0 offset=00003000

Capabilities: [c0] Vendor Specific Information: Len=18 <?>

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=375mA PME(D0-,D1-,D2-,D3hot-,D3cold+)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [100 v1] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 04, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [150 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 1

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [1c0 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [230 v1] Access Control Services

ACSCap: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-

ACSCtl: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-

Kernel driver in use: mlx5_core

Kernel modules: mlx5_core

Next I replaced it with an Intel E810 (dual 100G as well):

It consumes more than the Mellanox at idle: the power jumped by 3 watts (24.8 to 27.8). This time it negotiates PCIe Gen4, so the theoretical bus limit is 126 Gbps (16 GT/s * 128/130 * 8 lanes). Here’s the lspci output:

0001:c1:00.0 Ethernet controller [0200]: Intel Corporation Ethernet Controller E810-C for QSFP [8086:1592] (rev 02)

Subsystem: Intel Corporation Ethernet Network Adapter E810-C-Q2 [8086:0002]

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 136

Region 0: Memory at 1800000000 (64-bit, prefetchable) [size=32M]

Region 3: Memory at 1806000000 (64-bit, prefetchable) [size=64K]

Expansion ROM at 60300000 [virtual] [disabled] [size=1M]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI+ D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot-,D3cold-)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=1 PME-

Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+

Address: 0000000000000000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [70] MSI-X: Enable+ Count=1024 Masked-

Vector table: BAR=3 offset=00000000

PBA: BAR=3 offset=00008000

Capabilities: [a0] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 512 bytes, PhantFunc 0, Latency L0s <512ns, L1 <64us

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop- FLReset-

MaxPayload 128 bytes, MaxReadReq 512 bytes

DevSta: CorrErr+ NonFatalErr- FatalErr- UnsupReq+ AuxPwr+ TransPend-

LnkCap: Port #0, Speed 16GT/s, Width x16, ASPM not supported

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 16GT/s, Width x8 (downgraded)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range AB, TimeoutDis+ NROPrPrP- LTR-

10BitTagComp+ 10BitTagReq- OBFF Not Supported, ExtFmt+ EETLPPrefix+, MaxEETLPPrefixes 1

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- 10BitTagReq- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCap2: Supported Link Speeds: 2.5-16GT/s, Crosslink- Retimer+ 2Retimers+ DRS-

LnkCtl2: Target Link Speed: 16GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [e0] Vital Product Data

Product Name: Intel(R) Ethernet Network Adapter E810-CQDA2

Read-only fields:

[V1] Vendor specific: Intel(R) Ethernet Network Adapter E810-CQDA2

[PN] Part number: K91258-006

[SN] Serial number: B49691B3CA78

[V2] Vendor specific: 0621

[RV] Reserved: checksum good, 1 byte(s) reserved

End

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [148 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 1

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [150 v1] Device Serial Number b4-96-91-ff-ff-b3-ca-78

Capabilities: [160 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration- 10BitTagReq- Interrupt Message Number: 000

IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy- 10BitTagReq-

IOVSta: Migration-

Initial VFs: 128, Total VFs: 128, Number of VFs: 0, Function Dependency Link: 00

VF offset: 256, stride: 1, Device ID: 1889

Supported Page Size: 00000553, System Page Size: 00000001

Region 0: Memory at 0000001804000000 (64-bit, prefetchable)

Region 3: Memory at 0000001806020000 (64-bit, prefetchable)

VF Migration: offset: 00000000, BIR: 0

Capabilities: [1a0 v1] Transaction Processing Hints

Device specific mode supported

No steering table available

Capabilities: [1b0 v1] Access Control Services

ACSCap: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-

ACSCtl: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-

Capabilities: [1d0 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Capabilities: [200 v1] Data Link Feature <?>

Capabilities: [210 v1] Physical Layer 16.0 GT/s <?>

Capabilities: [250 v1] Lane Margining at the Receiver <?>

Kernel driver in use: ice

Kernel modules: ice
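For anyone wanting to redo the bus-limit arithmetic for the two cards above, here is a minimal sketch. It only applies the 128b/130b line encoding to the negotiated speed and width shown in the two `LnkSta` lines; the function name is mine, and it deliberately ignores TLP headers, DLLPs and descriptor traffic.

```python
def pcie_link_gbps(gt_per_s, lanes):
    """Raw PCIe Gen3+ link rate in Gbit/s after 128b/130b encoding.

    Packet (TLP) overhead is NOT included, so real throughput is lower.
    """
    encoding = 128 / 130  # line encoding used by PCIe Gen3 and later
    return gt_per_s * encoding * lanes


# Values taken from the two LnkSta lines: 8GT/s x8 and 16GT/s x8.
print(f"Gen3 x8: {pcie_link_gbps(8, 8):.1f} Gbps")
print(f"Gen4 x8: {pcie_link_gbps(16, 8):.1f} Gbps")
```

This gives about 63 Gbps for the ConnectX-5 at Gen3 x8 and about 126 Gbps for the E810 at Gen4 x8, which is why the first card could not get anywhere near 100G.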

The tuning was not trivial at all: I tried many combinations of queue counts, core bindings, etc. In the end what worked best was:

- when mostly sending traffic (i.e. data coming from the cache):
  - using only the 4 biggest cores for the NIC
  - using the 4 medium cores for haproxy
- when forwarding traffic (rx then tx):
  - using all a720 cores for IRQs
  - using all a720 cores for haproxy as well

The thing is, the E810 has always been quite hard to tune CPU-wise: its Rx path is super expensive and can quickly end up in polling mode with a few spinning ksoftirqd threads. So in case of high Rx traffic, it’s better to spread the load over all 8 cores even if it means competing with userland, because that’s still the best way to avoid triggering polling mode.
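Binding NIC queues to cores usually comes down to writing a hex CPU mask into `/proc/irq/<n>/smp_affinity`. Below is a small sketch of how such masks can be computed. The CPU numbers in the example are placeholders I made up for illustration, not the actual big/medium core numbering of the O6, so check your own topology before reusing them.

```python
def cpu_mask(cpus):
    """Return the hex bitmask string for a list of CPU numbers.

    This is the format accepted by /proc/irq/<n>/smp_affinity on systems
    with at most 32 CPUs (larger systems use comma-separated 32-bit groups).
    """
    mask = 0
    for cpu in cpus:
        if cpu < 0:
            raise ValueError(f"invalid CPU number: {cpu}")
        mask |= 1 << cpu  # set one bit per CPU
    return format(mask, "x")


# Hypothetical split: 4 cores for NIC interrupts, 4 others for haproxy.
NIC_CPUS = [8, 9, 10, 11]      # assumption, not the real O6 numbering
HAPROXY_CPUS = [4, 5, 6, 7]    # assumption, not the real O6 numbering

print("NIC IRQ mask:", cpu_mask(NIC_CPUS))
print("haproxy mask:", cpu_mask(HAPROXY_CPUS))
```

The resulting string would then be written (as root) to the `smp_affinity` file of each NIC interrupt, while haproxy gets pinned to the other set through its own CPU binding settings.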

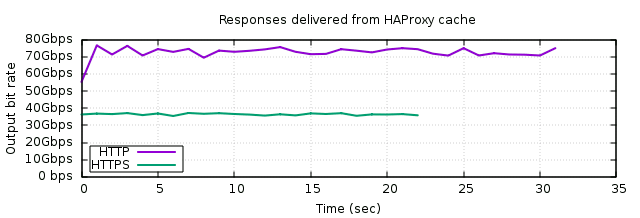

By doing this, I could reach:

- 73-78 Gbps of HTTP responses retrieved from the cache.

- 37 Gbps of HTTPS responses retrieved from the cache

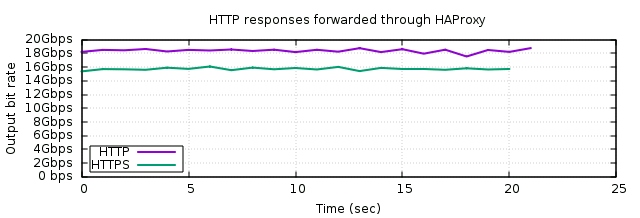

- 18.2 Gbps of forwarded HTTP traffic

- 15.5 Gbps for forwarded HTTPS traffic

For the cache (4 cores haproxy, 4 cores NIC):

The CPU is not full (~15-20% idle in HTTP), which indicates saturation on the PCIe side. It’s not surprising: we’re dealing with 6.5 Mpps, and E810 descriptors are large, so that requires quite a bit of extra PCIe bandwidth and a higher number of transactions. Also the MaxPayload is only 128 bytes, which is super small for large transfers like this. All of this represents significant overhead, and passing 80G at the network level for 126G on the PCIe side seems reasonable to me. Here’s a graph of one of these short tests:

Most of the CPU usage is in memory copies from userland to the NIC:

Overhead Shared Object Symbol

9.47% [kernel] [k] __arch_copy_from_user

8.40% [ice] [k] ice_clean_rx_irq

8.24% [ice] [k] ice_process_skb_fields

8.09% [kernel] [k] dcache_clean_poc

5.58% [kernel] [k] cpuidle_enter_state

3.84% libc.so.6 [.] 0x00000000000a1f08

3.30% [kernel] [k] _raw_spin_unlock_irqrestore

2.63% libc.so.6 [.] 0x00000000000a1f14

2.25% [ice] [k] ice_napi_poll

1.29% [kernel] [k] skb_release_data

1.27% libc.so.6 [.] 0x00000000000a1f10

1.15% [kernel] [k] __irqentry_text_start

1.14% [ice] [k] ice_start_xmit

1.05% [kernel] [k] ip_finish_output2
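To put rough numbers on the PCIe saturation argument for the cache test, here is a back-of-the-envelope sketch. The 24 bytes of per-TLP overhead is my own round assumption (framing, a 4-DW header and LCRC); it does not account for descriptor fetches, completions or flow-control traffic, so the real efficiency is lower than what it prints.

```python
def avg_packet_bytes(gbps, mpps):
    """Average packet size implied by a bit rate and a packet rate."""
    return gbps * 1e9 / 8 / (mpps * 1e6)


def tlp_efficiency(max_payload, overhead=24):
    """Fraction of PCIe bytes that carry payload for full-sized TLPs.

    overhead is an ASSUMED per-TLP cost in bytes, not a measured value.
    """
    return max_payload / (max_payload + overhead)


link_gbps = 16 * 128 / 130 * 8  # Gen4 x8, as negotiated above

print(f"avg packet: {avg_packet_bytes(78, 6.5):.0f} bytes")
for payload in (128, 256, 512):
    eff = tlp_efficiency(payload)
    print(f"MaxPayload {payload}: {eff:.0%} -> {link_gbps * eff:.0f} Gbps")
```

With 128-byte payloads, payload efficiency alone already drops the usable figure to a bit over 100 Gbps under this assumption, before counting descriptors and the other direction’s traffic, so ~80G on the wire does not look out of place.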

Now for forwarded traffic

As mentioned above, Rx costs a lot of CPU, so the bandwidth is much lower, even when we sum in+out.

This time the CPU is almost entirely used (2-4% total idle over 8 cores). There are still a few dead times due to the competition between userland and driver which can cause rings to fill up and stop receiving, but if you increase rings, the performance decreases (less cache efficiency). Here’s what we’re seeing:

Here’s an example of CPU usage during the HTTP transfer:

top - 16:45:16 up 29 min, 0 user, load average: 11.65, 9.06, 6.59

Tasks: 347 total, 5 running, 340 sleeping, 2 stopped, 0 zombie

%Cpu0 : 10.7 us, 63.1 sy, 0.0 ni, 4.9 id, 0.0 wa, 1.9 hi, 19.4 si, 0.0 st

%Cpu1 : 0.0 us, 2.9 sy, 0.0 ni, 96.1 id, 0.0 wa, 0.0 hi, 1.0 si, 0.0 st

%Cpu2 : 0.0 us, 0.0 sy, 0.0 ni,100.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu3 : 0.0 us, 1.0 sy, 0.0 ni, 99.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu4 : 0.0 us, 0.0 sy, 0.0 ni,100.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu5 : 0.0 us, 1.9 sy, 0.0 ni, 1.0 id, 0.0 wa, 0.0 hi, 97.1 si, 0.0 st

%Cpu6 : 1.0 us, 5.8 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 93.2 si, 0.0 st

%Cpu7 : 6.7 us, 51.0 sy, 0.0 ni, 11.5 id, 0.0 wa, 1.0 hi, 29.8 si, 0.0 st

%Cpu8 : 1.9 us, 20.4 sy, 0.0 ni, 5.8 id, 0.0 wa, 1.0 hi, 70.9 si, 0.0 st

%Cpu9 : 0.0 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi,100.0 si, 0.0 st

%Cpu10 : 1.0 us, 1.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 98.1 si, 0.0 st

%Cpu11 : 1.0 us, 55.3 sy, 0.0 ni, 5.8 id, 0.0 wa, 2.9 hi, 35.0 si, 0.0 st

MiB Mem : 15222.3 total, 12697.0 free, 1469.3 used, 1291.2 buff/cache

MiB Swap: 0.0 total, 0.0 free, 0.0 used. 13753.1 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

31668 willy 20 0 675156 85028 13800 S 211.5 0.5 4:36.38 haproxy

60 root 20 0 0 0 0 R 99.0 0.0 2:42.46 ksoftirqd/9

65 root 20 0 0 0 0 R 97.1 0.0 2:39.53 ksoftirqd/10

40 root 20 0 0 0 0 R 96.2 0.0 0:36.26 ksoftirqd/5

45 root 20 0 0 0 0 R 90.4 0.0 1:17.32 ksoftirqd/6

55 root 20 0 0 0 0 S 56.7 0.0 1:00.98 ksoftirqd/8

50 root 20 0 0 0 0 S 5.8 0.0 0:52.94 ksoftirqd/7

The driver is indeed suffering and the load varies quickly between 0 and 100% for each queue, which is also reflected in the unstable %si (softirq, mostly rx) vs %sy (system, mostly tx) at the top.
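If you want to watch the softirq share per core without staring at top, a sketch along these lines works on two snapshots of `/proc/stat`. The function names are mine; the field order (user, nice, system, idle, iowait, irq, softirq, steal) is the standard one for the per-CPU lines.

```python
def parse_proc_stat(text):
    """Map 'cpuN' -> first 8 counters of its /proc/stat line."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        # Keep per-CPU lines only; skip the aggregate 'cpu' line and the rest.
        if not parts or not parts[0].startswith("cpu") or parts[0] == "cpu":
            continue
        counters[parts[0]] = [int(v) for v in parts[1:9]]
    return counters


def softirq_percent(before, after):
    """Percentage of time each CPU spent in softirq between two snapshots."""
    old, new = parse_proc_stat(before), parse_proc_stat(after)
    result = {}
    for cpu, values in new.items():
        if cpu not in old:
            continue
        delta = [n - o for n, o in zip(values, old[cpu])]
        total = sum(delta)
        # Index 6 is the softirq counter.
        result[cpu] = 100.0 * delta[6] / total if total else 0.0
    return result
```

Typical use would be to read `/proc/stat` once, sleep for a second, read it again and pass both strings to `softirq_percent()`; cores stuck near 100% are the ones where ksoftirqd is spinning.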

As expected, perf top now shows more CPU on the Rx path (more than 50% for the first 4 functions used on the Rx path):

Overhead Shared Object Symbol

27.28% [kernel] [k] __arch_copy_to_user

9.97% [ice] [k] ice_process_skb_fields

9.18% [ice] [k] ice_clean_rx_irq

4.87% [kernel] [k] dcache_clean_poc

3.98% [kernel] [k] __arch_copy_from_user

2.22% [kernel] [k] _raw_spin_unlock_irqrestore

1.92% [kernel] [k] __irqentry_text_start

1.23% [ice] [k] ice_start_xmit

1.19% [kernel] [k] el0_svc_common.constprop.0

1.03% haproxy [.] h1_fastfwd

0.99% [kernel] [k] arch_local_irq_restore

0.88% [kernel] [k] __skb_datagram_iter

0.81% [ice] [k] ice_napi_poll

That’s the best I could get, and it’s very good, I’d even say excellent for a machine of this size and power usage.

Speaking of power usage, the total consumption reached up to 37W max during large transfers. The NIC doesn’t consume much more when working (a few extra watts); I think roughly 2/3 of the 10 extra watts went to the CPU and 1/3 to the NIC. By the way, the consumption was about the same with SSL, even though the network traffic is lower and the CPU is used more.

I might retest once we get good support for mainline and we can also make the CPU cores run at their target speed. But overall I’m pretty impressed by what this little device can achieve for now. It looks like CIX is taking the notion of CPU performance seriously, and contrary to many in the past, is not focusing on outdated inexpensive cores. I can easily imagine server variants of this CPU with 16 big cores at 2.8-3.0G doing marvels in the low-consumption area and self-hosting (NAS etc).

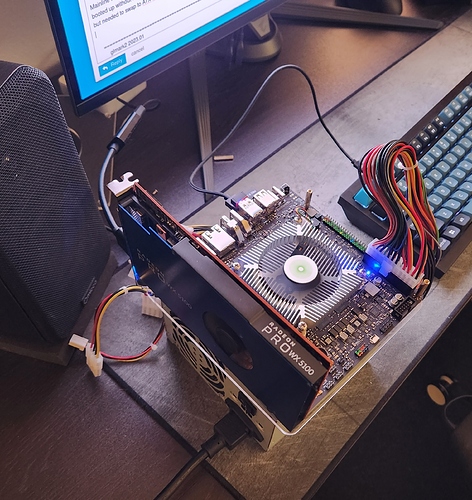

Mainline Fedora 41 with an AMD WX 5100 (Low Power Workstation Polaris 10 card) booted up without issue and shows the UEFI. Seems pretty stable. It booted on USB-C power, but I needed to swap to an ATX supply to complete the benchmarks.

Linux fedora 6.12.11-200.fc41.aarch64 #1 SMP PREEMPT_DYNAMIC Fri Jan 24 05:21:03 UTC 2025 aarch64 GNU/Linux

=======================================================

glmark2 2023.01

=======================================================

OpenGL Information

GL_VENDOR: AMD

GL_RENDERER: AMD Radeon Pro WX 5100 Graphics (radeonsi, polaris10, LLVM 19.1.7, DRM 3.59, 6.12.11-200.fc41.aarch64)

GL_VERSION: 4.6 (Compatibility Profile) Mesa 24.3.4

Surface Config: buf=32 r=8 g=8 b=8 a=8 depth=24 stencil=0 samples=0

Surface Size: 800x600 windowed

=======================================================

[build] use-vbo=false: FPS: 3028 FrameTime: 0.330 ms

[build] use-vbo=true: FPS: 3954 FrameTime: 0.253 ms

[texture] texture-filter=nearest: FPS: 3787 FrameTime: 0.264 ms

[texture] texture-filter=linear: FPS: 3708 FrameTime: 0.270 ms

[texture] texture-filter=mipmap: FPS: 3436 FrameTime: 0.291 ms

[shading] shading=gouraud: FPS: 3199 FrameTime: 0.313 ms

[shading] shading=blinn-phong-inf: FPS: 3540 FrameTime: 0.283 ms

[shading] shading=phong: FPS: 3876 FrameTime: 0.258 ms

[shading] shading=cel: FPS: 3634 FrameTime: 0.275 ms

[bump] bump-render=high-poly: FPS: 3977 FrameTime: 0.252 ms

[bump] bump-render=normals: FPS: 3519 FrameTime: 0.284 ms

[bump] bump-render=height: FPS: 4011 FrameTime: 0.249 ms

[effect2d] kernel=0,1,0;1,-4,1;0,1,0;: FPS: 3744 FrameTime: 0.267 ms

[effect2d] kernel=1,1,1,1,1;1,1,1,1,1;1,1,1,1,1;: FPS: 3312 FrameTime: 0.302 ms

[pulsar] light=false:quads=5:texture=false: FPS: 3814 FrameTime: 0.262 ms

[desktop] blur-radius=5:effect=blur:passes=1:separable=true:windows=4: FPS: 2688 FrameTime: 0.372 ms

[desktop] effect=shadow:windows=4: FPS: 2912 FrameTime: 0.343 ms

[buffer] columns=200:interleave=false:update-dispersion=0.9:update-fraction=0.5:update-method=map: FPS: 1007 FrameTime: 0.994 ms

[buffer] columns=200:interleave=false:update-dispersion=0.9:update-fraction=0.5:update-method=subdata: FPS: 1766 FrameTime: 0.566 ms

[buffer] columns=200:interleave=true:update-dispersion=0.9:update-fraction=0.5:update-method=map: FPS: 1081 FrameTime: 0.926 ms

[ideas] speed=duration: FPS: 1551 FrameTime: 0.645 ms

[jellyfish] <default>: FPS: 3700 FrameTime: 0.270 ms

[terrain] <default>: FPS: 693 FrameTime: 1.444 ms

[shadow] <default>: FPS: 3604 FrameTime: 0.277 ms

[refract] <default>: FPS: 1633 FrameTime: 0.612 ms

[conditionals] fragment-steps=0:vertex-steps=0: FPS: 3753 FrameTime: 0.266 ms

[conditionals] fragment-steps=5:vertex-steps=0: FPS: 3590 FrameTime: 0.279 ms

[conditionals] fragment-steps=0:vertex-steps=5: FPS: 3125 FrameTime: 0.320 ms

[function] fragment-complexity=low:fragment-steps=5: FPS: 4039 FrameTime: 0.248 ms

[function] fragment-complexity=medium:fragment-steps=5: FPS: 3248 FrameTime: 0.308 ms

[loop] fragment-loop=false:fragment-steps=5:vertex-steps=5: FPS: 3091 FrameTime: 0.324 ms

[loop] fragment-steps=5:fragment-uniform=false:vertex-steps=5: FPS: 3275 FrameTime: 0.305 ms

[loop] fragment-steps=5:fragment-uniform=true:vertex-steps=5: FPS: 4083 FrameTime: 0.245 ms

=======================================================

glmark2 Score: 3131

=======================================================

=======================================================

vkmark 2017.08

=======================================================

Vendor ID: 0x1002

Device ID: 0x67C7

Device Name: AMD Radeon Pro WX 5100 Graphics (RADV POLARIS10)

Driver Version: 100675588

Device UUID: 826fa47eb82102297ca50e9690d2b3c0

=======================================================

[vertex] device-local=true: FPS: 12413 FrameTime: 0.081 ms

[vertex] device-local=false: FPS: 5290 FrameTime: 0.189 ms

[texture] anisotropy=0: FPS: 11155 FrameTime: 0.090 ms

[texture] anisotropy=16: FPS: 11092 FrameTime: 0.090 ms

[shading] shading=gouraud: FPS: 10706 FrameTime: 0.093 ms

[shading] shading=blinn-phong-inf: FPS: 10385 FrameTime: 0.096 ms

[shading] shading=phong: FPS: 10085 FrameTime: 0.099 ms

[shading] shading=cel: FPS: 10058 FrameTime: 0.099 ms

[effect2d] kernel=edge: FPS: 9622 FrameTime: 0.104 ms

[effect2d] kernel=blur: FPS: 4667 FrameTime: 0.214 ms

[desktop] <default>: FPS: 8118 FrameTime: 0.123 ms

[cube] <default>: FPS: 11941 FrameTime: 0.084 ms

[clear] <default>: FPS: 10372 FrameTime: 0.096 ms

=======================================================

vkmark Score: 9684

=======================================================

Oh nice! I tried to boot a mainline 6.12 on it but failed. As usual in the Arm world, it could be due to any missing option. I’ll download an fc41 image and try again.

So actually I was partially wrong. Your post made me want to try again. I had only tested with the provided DTB since I initially thought it was mandatory. But if a default Fedora works, it does so without the DTB. So I tried again, removing the DTB and removing acpi=off efi=noruntime, and guess what? My generic kernel that I normally use for the rock5 boots straight out of the box with PCIe support:

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent

permitted by applicable law.

root@orion-o6:~# uname -a

Linux orion-o6 6.12.6-rk-1 #2 SMP Fri Dec 20 10:48:07 CET 2024 aarch64 GNU/Linux

root@orion-o6:~# cat /proc/cmdline

BOOT_IMAGE=/image-6.12.6-rk-1 loglevel=0 console=ttyAMA2,115200 earlycon=pl011,0x040d0000 arm-smmu-v3.disable_bypass=0 root=/dev/nvme0n1p2 rootwait rw

root@orion-o6:~# lspci

00:00.0 PCI bridge: Cadence Design Systems, Inc. Device 0100

01:00.0 Ethernet controller: Realtek Semiconductor Co., Ltd. Device 8126 (rev 01)

30:00.0 PCI bridge: Cadence Design Systems, Inc. Device 0100

31:00.0 Ethernet controller: Realtek Semiconductor Co., Ltd. Device 8126 (rev 01)

90:00.0 PCI bridge: Cadence Design Systems, Inc. Device 0100

91:00.0 Non-Volatile memory controller: MAXIO Technology (Hangzhou) Ltd. NVMe SSD Controller MAP1202 (rev 01)

This is extremely promising!

There are still important missing points:

- all cores run at 1800 MHz (no cpufreq is recognized, though I could be missing some modules)

- I have not seen boot messages. I suspect the earlycon is at fault but I could be wrong. However the console is OK once reaching userland.

- the fan doesn’t spin. Most likely I have not enabled the suitable hwmon driver. Will check.

Edit: fixed the reported CPU frequency, I was wrong, I used the patched “mhz” utility to measure the FPU speed.

Can someone provide dumps from Debian or Fedora 6.12 kernel?

dmesg, lspci -vvvnn, ACPI tables

Also logs from running BSA ACS in EFI shell?

- BSA ACS ACPI

- BSA ACS DT (I never used this one)

Run as Bsa.efi and also as Bsa.efi -v 1 for verbose output. Board will require hardware reboot after each run.

Is CONFIG_ACPI_CPPC_CPUFREQ enabled?

Try earlycon=pl011,mmio32,0x40d0000 for serial console(UART2).

EDIT: loglevel=0 ?
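Both hints above (the `mmio32` earlycon form and the stray `loglevel=0`) are easy to miss when hand-editing grub.cfg, so here is a small sketch that checks a command line for exactly these two things. The checks only mirror the suggestions made in this thread; they are not general rules, and the function names are made up.

```python
def parse_cmdline(cmdline):
    """Split a kernel command line into {key: value or None}.

    Quoted values containing spaces are not handled in this sketch.
    """
    params = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params[key] = value if sep else None
    return params


def console_warnings(cmdline):
    """Return warnings for the two console pitfalls discussed here."""
    params = parse_cmdline(cmdline)
    warnings = []
    if params.get("loglevel") == "0":
        warnings.append("loglevel=0 hides kernel messages on the console")
    earlycon = params.get("earlycon")
    if earlycon and earlycon.startswith("pl011,"):
        if "mmio32" not in earlycon.split(","):
            warnings.append("earlycon=pl011 without mmio32 (try pl011,mmio32,<addr>)")
    return warnings
```

Feeding it the contents of `/proc/cmdline` from the boot shown earlier should flag both items, while the corrected command line should come back clean.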

Hi Naoki!

Yes, but it’s in module:

$ grep CPPC .config

CONFIG_ACPI_CPPC_CPUFREQ=m

CONFIG_ACPI_CPPC_CPUFREQ_FIE=y

CONFIG_ACPI_CPPC_LIB=y

I had tried to load it and it reported “no such device” or something like this, so I didn’t insist. But I could rebuild the kernel with it set to “y” just in case it matters. I remember keeping it as a module for the Altra board, which is a bit capricious and occasionally switches to 1 GHz until either a reboot or a reload of the module.
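When comparing kernel configs for this, it helps to see at a glance whether each CPPC option is built in, a module, or off. A minimal sketch, assuming the usual `.config` syntax (`CONFIG_X=y`, `CONFIG_X=m`, `# CONFIG_X is not set`); the helper name is mine.

```python
def config_state(config_text, option):
    """Return 'y', 'm', another literal value, 'n' (not set) or 'absent'."""
    for line in config_text.splitlines():
        line = line.strip()
        if line == f"# {option} is not set":
            return "n"
        if line.startswith(option + "="):
            return line.split("=", 1)[1]
    return "absent"


# The three lines from the grep output above.
sample = """CONFIG_ACPI_CPPC_CPUFREQ=m
CONFIG_ACPI_CPPC_CPUFREQ_FIE=y
CONFIG_ACPI_CPPC_LIB=y
"""

for opt in ("CONFIG_ACPI_CPPC_CPUFREQ", "CONFIG_ACPI_CPPC_LIB"):
    print(opt, "->", config_state(sample, opt))
```

Running the same helper over two `.config` files and comparing the results is a quick way to spot which options differ between a working and a non-working build.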

OK will try that, thank you! I suspected something around this without knowing what to enter, even though I did see that the UART was indeed pl011.

Regarding loglevel=0, I was about to say “no I don’t have this”, but looking at the cmdline, I’m indeed seeing it on my alt kernel now! I don’t know how I managed to get that one by hacking on the grub.cfg, but it certainly does not help. Ah, it comes from my attempt to boot the Fedora kernel, where it’s present. I’ll drop it. Good catch, thank you!


@RadxaNaoki I can confirm that the console is now OK, but CPPC… still nothing, even once built in (“y”) and updated to 6.12.13. There isn’t even a message about it during boot, so I suspect it’s not enumerated in the ACPI tables. I looked a bit for it, but I’m not familiar with ACPI tables so I’m not sure what to look for.

Please try vanilla defconfig.

$ uname -a

Linux orion-o6 6.12.13 #1 SMP PREEMPT Thu Feb 13 00:53:55 UTC 2025 aarch64 aarch64 aarch64 GNU/Linux

$ lsmod | grep cppc

cppc_cpufreq 12288 0

$ ls /sys/devices/system/cpu/cpu0/acpi_cppc/

feedback_ctrs lowest_freq nominal_freq wraparound_time

guaranteed_perf lowest_nonlinear_perf nominal_perf

highest_perf lowest_perf reference_perf

$ cat /sys/devices/system/cpu/cpufreq/policy0/scaling_{min,max}_freq

800000

2500000

Please check /sys/firmware/acpi/tables/SSDT2.
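The same cpufreq check can be scripted if you want to compare several kernels quickly. A minimal sketch: it reads the two sysfs files shown above (values are in kHz) and converts them to MHz. The function name and the idea of passing the policy directory as a parameter are mine.

```python
from pathlib import Path


def read_policy_limits(policy_dir):
    """Return scaling min/max frequency in MHz for one cpufreq policy.

    policy_dir is e.g. /sys/devices/system/cpu/cpufreq/policy0.
    Raises FileNotFoundError when no cpufreq driver is bound.
    """
    policy = Path(policy_dir)
    limits = {}
    for name in ("scaling_min_freq", "scaling_max_freq"):
        khz = int((policy / name).read_text().strip())  # sysfs reports kHz
        limits[name] = khz / 1000
    return limits
```

On the defconfig kernel shown above, calling it on `policy0` would be expected to return 800 and 2500 MHz; a missing directory is a quick sign that cppc_cpufreq did not bind.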

It seems that Windows 24H2 has some issues in supporting different PE architectures. You can try disabling all the little cores (core 0-3) to see if it works.

SSDT2 is properly there. I’ll then try defconfig, thank you for the test!

OK now I can confirm that cppc_cpufreq in defconfig works properly, that’s perfect, thanks! I’ll diff the configs to figure what makes the difference, but for now it looks like mainline is working fine for me.

Here’s from Debian (Linux debian 6.12.9+bpo-arm64 #1 SMP Debian 6.12.9-1~bpo12+1 (2025-01-19) aarch64 GNU/Linux)

Can’t get bsa.efi to log to file. I run Bsa.efi -v 1 -f bsa.out, a bunch of tests look to pass and then the screen goes corrupt, but nothing in the file on reboot. I’ll try again at some point

For BSA ACS it is better to connect a serial console and log its output rather than trying to log to a file.

ACS leaves system in a state where reboot is required.