Can you please point to the kernel and initial build/instructions?

Orion O6 Debug Party Invitation

@hipboi is the debug party revision the final revision as far as you know, or is it one with a known HW bug that is minor enough for the board to still be usable for debugging?

Hello, I’m a developer who builds AMR robots using the Radxa X4. My recent project published on Hackster featured the Radxa X4, and I’m interested in publishing a robotics project featuring the Orion O6: https://www.hackster.io/mxlfrbt/build-a-radxa-x4-ros2-car-robot-using-intel-robotics-sdk-2e1778

You said that UEFI+ACPI will come at some point in future as you went UEFI+DT for now.

I hope that a dump of the ACPI tables and the results of the BSA/SBSA/PC-BSA ACS will be available when the UEFI+ACPI code drop happens.

I used the ACS tests while working on the SBSA Reference Platform in QEMU/EDK2/TF-A, and they help catch hardware/firmware issues. Some hardware issues cannot be easily fixed; some can be hidden by firmware.

I’m planning to buy the 64GB one if reviews convince me to spend money on a board.

Picking up the suggestion here I want to ask about CD8180’s PCIe ‘bifurcation’ capabilities.

If I understand correctly, it’s not only about how the SoC can ‘spread’ its PCIe lanes but also about board layout (with RK3588 the four Gen3 lanes could be used in a 4 x x1 config, but the clocks must also be considered, and as such with Rock 5B only a 2 x x2 config for the M.2 slot is possible, right?)

So speaking about the PCIe x8 and the M.2 x4 slot: is it possible to use them in other configs, e.g. the PCIe slot in a 4 x x2 and the M.2 slot in a 4 x x1 or 2 x x2 setup?

The TRM will be released by Q2 2025. The Cix team is short of engineering resources at the moment since ACPI on Arm is not yet mature.

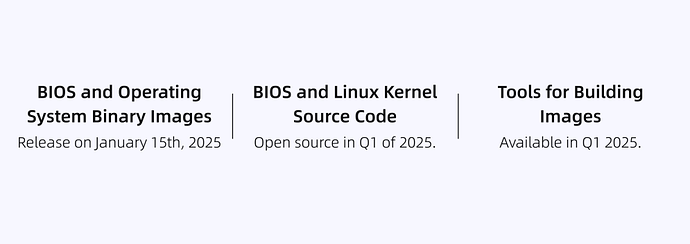

The Beta 1 image release will be UEFI + DT and will be available on January 15th. The Beta 2 image release will be UEFI + ACPI and is expected before February.

I hope that a dump of the ACPI tables and the results of the BSA/SBSA/PC-BSA ACS will be available when the UEFI+ACPI code drop happens.

The EDK2 source code will be available, so you can just edit it.

IIRC bifurcation is not possible on this platform.

More benchmarks are slowly popping up https://browser.geekbench.com/v6/cpu/9535176

“Model: Cix Technology Group Co., Ltd. CIX AI PC”

Single-Core Score: 1209

Multi-Core Score: 6052

Too bad Geekbench doesn’t report which kernel was used etc.

Looks like a downstream kernel then

Let’s see how it is in the end

@hipboi explained CIX kernel roadmap over here.

And by looking at the available scores (and the metadata using the ’.gb6 trick’) we can expect that the scores will improve (most likely a lot): https://browser.geekbench.com/search?q=cix

Unfortunately Geekbench 6 is crap for ‘measuring’ multi-threaded performance and by not measuring memory bandwidth and latency we always have exactly no idea why the scores are as they are. In SoC bring-up stage often something goes wrong with DRAM initialization and then benchmark scores will drop by a large margin.

Yep. The file compression test at least shows MB/s and what we can see is that in the MT test it delivers twice the performance of Rock 5B+, which is quite decent. Also such tools are never able to measure the performance on big cores only (or by excluding the little cores cluster which is not there for performance purposes). This would significantly help and even stabilize measurements: it’s even possible that some tests are not finishing at the same time on all cores and affect the overall measurement!
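Restricting a benchmark to the big cores can be approximated from user space on Linux with CPU affinity. This is a minimal sketch, not something Geekbench offers: `pin_to`, `busy_loop` and the `BIG_CORES` ids are hypothetical names and values chosen for illustration, and the real core numbering has to be checked on the board first.

```python
import os
import time

def pin_to(cpus):
    """Restrict the current process to `cpus` (Linux only).

    Returns the set of CPUs the process is allowed to run on afterwards.
    """
    allowed = os.sched_getaffinity(0)
    target = set(cpus) & allowed
    if not target:
        # None of the requested CPUs exist here: leave the affinity untouched.
        return allowed
    os.sched_setaffinity(0, target)
    return os.sched_getaffinity(0)

def busy_loop(n=200_000):
    """Tiny stand-in workload; returns the elapsed time in seconds."""
    start = time.perf_counter()
    total = 0
    for i in range(n):
        total += i * i
    return time.perf_counter() - start

# Hypothetical big-core ids; verify them against the real core layout.
BIG_CORES = {0, 5, 6, 7, 8, 9, 10, 11}
used = pin_to(BIG_CORES)
print(f"running on CPUs {sorted(used)}: {busy_loop():.4f}s")
```

Child processes inherit the affinity mask, so a benchmark launched from such a wrapper would stay on the same cores.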

Long-time (20y+) Linux user/sys admin/network admin here who would love to hack on general Debian support as well as test various NICs with this board, and who isn’t afraid to use an SPI flasher and recompile some kernels. I would also try to get DPDK/VPP up and running and do some benchmarks.

BredOS developer here. We develop an Arch Linux-based OS for many SBCs, and we think BredOS supporting the Orion O6 would be good since there’s already a thread called “Archlinux for the Orion O6”.

I mainly work on development related to the Linux kernel and DRM. If I had an O6, I might be able to help with some display-related upstream work.

The day has come ;D

Looking forward to seeing the code while waiting for benchmarks and reviews.

Here is the output from running sbc-bench: https://0x0.st/8opg.bin

EDIT: it was run on official Debian 12

Thank you Naoki. I’m very pleased to see that it looks pretty good out of the box, with measured CPU frequencies matching the advertised OPP, and excellent RAM timings for tests from the A720 cores! The CPU cores ordering is very strange however, I don’t know if it’s caused by the cores declaration in the DTB or any such thing:

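| CPU | cluster | policy | min speed (MHz) | max speed (MHz) | core type |
|-----|---------|--------|-----------------|-----------------|-----------|
| 0 | 0 | 0 | 800 | 2500 | Cortex-A720 / r0p1 |
| 1 | 0 | 1 | 800 | 1800 | Cortex-A520 / r0p1 |
| 2 | 0 | 1 | 800 | 1800 | Cortex-A520 / r0p1 |
| 3 | 0 | 1 | 800 | 1800 | Cortex-A520 / r0p1 |
| 4 | 0 | 1 | 800 | 1800 | Cortex-A520 / r0p1 |
| 5 | 0 | 5 | 800 | 2300 | Cortex-A720 / r0p1 |
| 6 | 0 | 5 | 800 | 2300 | Cortex-A720 / r0p1 |
| 7 | 0 | 7 | 800 | 2200 | Cortex-A720 / r0p1 |
| 8 | 0 | 7 | 800 | 2200 | Cortex-A720 / r0p1 |
| 9 | 0 | 9 | 800 | 2400 | Cortex-A720 / r0p1 |
| 10 | 0 | 9 | 800 | 2400 | Cortex-A720 / r0p1 |
| 11 | 0 | 9 | 800 | 2400 | Cortex-A720 / r0p1 |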

=> 1 big core at 2.5 GHz, 4 small cores at 1.8 GHz, 2 big cores at 2.3 GHz, 2 big cores at 2.2 GHz, and 3 big cores at 2.4 GHz. That’s 5 different clusters, compared to the expected 3 clusters of 4 identical cores each.
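The count of five groups can be reproduced mechanically from the sbc-bench rows. A minimal sketch, assuming each row is reduced to (CPU, cpufreq policy, max speed in MHz, core type) as listed above; `group_by_policy` is a hypothetical helper and not part of sbc-bench:

```python
from itertools import groupby

# (cpu, cpufreq policy, max speed in MHz, core type), copied from the listing above
ROWS = [
    (0, 0, 2500, "Cortex-A720"),
    (1, 1, 1800, "Cortex-A520"),
    (2, 1, 1800, "Cortex-A520"),
    (3, 1, 1800, "Cortex-A520"),
    (4, 1, 1800, "Cortex-A520"),
    (5, 5, 2300, "Cortex-A720"),
    (6, 5, 2300, "Cortex-A720"),
    (7, 7, 2200, "Cortex-A720"),
    (8, 7, 2200, "Cortex-A720"),
    (9, 9, 2400, "Cortex-A720"),
    (10, 9, 2400, "Cortex-A720"),
    (11, 9, 2400, "Cortex-A720"),
]

def group_by_policy(rows):
    """Group consecutive CPUs that share the same cpufreq policy."""
    groups = []
    for policy, members in groupby(rows, key=lambda r: r[1]):
        members = list(members)
        groups.append({
            "policy": policy,
            "cpus": [m[0] for m in members],
            "max_mhz": members[0][2],
            "core": members[0][3],
        })
    return groups

for g in group_by_policy(ROWS):
    print(f"policy {g['policy']}: {len(g['cpus'])} x {g['core']} @ {g['max_mhz']} MHz")
```

Grouping by policy rather than by core type is a deliberate choice here: the four frequency groups of A720 cores only show up when the cpufreq policy is taken into account.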

Regardless, despite the many different frequencies, these frequencies are reasonably good already (which explains the performance gains you measured on the kernel build).

In fact, I suspect that for the little cores (A520), where the DRAM timings are particularly bad, that’s exactly the same issue as on the rock5 where the DMC doesn’t leave powersave mode when only little cores are running, and very likely that the timings will be better if you change the DMC to performance mode. But for tests involving all cores at once, it should not change anything, since the big cores will turn the DMC to high performance mode anyway. On the rock5, running this significantly improves the little cores performance for me:

echo performance > /sys/devices/platform/dmc/devfreq/dmc/governor

I suspect you’ll see the same here. But again it will only affect workloads running exclusively on small cores, so nothing very important for your general tests.
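Whether the O6 exposes its memory controller under the same path as the rock5 is not known from this thread, so it may help to list what devfreq devices exist before writing anything. A minimal sketch, assuming the standard Linux `/sys/class/devfreq` layout; `devfreq_governors` and `set_governor` are hypothetical helpers, and the device name `dmc` is only taken from the rock5 command above:

```python
from pathlib import Path

def devfreq_governors(base="/sys/class/devfreq"):
    """Return {device name: current governor} for every devfreq device under `base`."""
    result = {}
    root = Path(base)
    if not root.is_dir():
        return result
    for dev in sorted(root.iterdir()):
        gov = dev / "governor"
        if gov.is_file():
            result[dev.name] = gov.read_text().strip()
    return result

def set_governor(device, governor, base="/sys/class/devfreq"):
    """Write `governor` to a devfreq device; needs root on a real system."""
    (Path(base) / device / "governor").write_text(governor + "\n")

# List whatever the running kernel exposes (prints nothing if there are no devfreq devices).
for name, gov in devfreq_governors().items():
    print(f"{name}: {gov}")
```

If a memory-controller device shows up in that list, `set_governor(name, "performance")` would be the equivalent of the `echo` command, provided the driver offers that governor.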