Hi,

I followed the instructions below to increase NVMe speed:

https://wiki.radxa.com/Rockpi4/install/NVME#Step_4_Enable_PCIe_Gen2_mode_to_get_max_speed

https://wiki.radxa.com/Rockpi4/hardware/devtree_overlays

https://wiki.radxa.com/Rockpi4/dev/common-interface-with-kernel-5.10

But in the end, the changes have no effect at all. I am using Ubuntu Server, and the system runs from NVMe (no SD card or eMMC).
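To rule out that the Gen2 setting simply isn't being applied, the negotiated link speed can be checked directly instead of inferring it from a benchmark. Below is a minimal sketch that reads the standard Linux sysfs attributes `current_link_speed`, `current_link_width` and `max_link_speed` for each NVMe controller (the same information appears in the `LnkSta` line of `sudo lspci -vv`). It assumes `/sys/class/nvme/nvmeN/device` points at the controller's PCI function, and that the speed string looks like `5.0 GT/s PCIe` or `2.5 GT/s` (the exact format varies between kernel versions). The bandwidth figures are theoretical per-direction limits after line encoding, not expected benchmark results.

```python
import glob
import os
from typing import Optional

# Nominal PCIe transfer rates (GT/s) mapped to their generation number.
GENERATIONS = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4}


def parse_gt_per_s(text: str) -> Optional[float]:
    """Parse a sysfs link-speed string such as '5.0 GT/s PCIe' or '2.5 GT/s'."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return None  # e.g. 'Unknown speed' when the link is down


def link_bandwidth_mb_s(gt_per_s: float, lanes: int) -> float:
    """Theoretical one-direction data rate in MB/s, after line encoding."""
    if gt_per_s <= 5.0:
        # Gen1/Gen2 use 8b/10b encoding: 10 transferred bits per data byte.
        per_lane = gt_per_s * 1e9 / 10 / 1e6
    else:
        # Gen3 and later use 128b/130b encoding.
        per_lane = gt_per_s * 1e9 * 128 / 130 / 8 / 1e6
    return per_lane * lanes


def read_attr(dev_dir: str, name: str) -> str:
    with open(os.path.join(dev_dir, name)) as f:
        return f.read().strip()


def report() -> None:
    # Assumption: /sys/class/nvme/nvmeN/device links to the PCI function.
    devices = sorted(glob.glob("/sys/class/nvme/nvme*/device"))
    if not devices:
        print("No NVMe controllers found in sysfs")
    for dev in devices:
        try:
            cur = read_attr(dev, "current_link_speed")
            width = read_attr(dev, "current_link_width")
            max_speed = read_attr(dev, "max_link_speed")
        except OSError as err:
            print(f"{dev}: cannot read link attributes ({err})")
            continue
        gt = parse_gt_per_s(cur)
        lanes = int(width) if width.isdigit() else 0
        gen = GENERATIONS.get(gt) if gt is not None else None
        label = f"Gen{gen}" if gen else "unknown generation"
        print(f"{dev}: {cur} x{width} (drive max {max_speed}), {label}")
        if gt is not None and lanes:
            print(f"  theoretical limit ~{link_bandwidth_mb_s(gt, lanes):.0f} MB/s")


if __name__ == "__main__":
    report()
```

If `current_link_speed` still reports 2.5 GT/s after the reboot, the overlay most likely was not loaded; if it reports 5.0 GT/s, the link is running at Gen2 and the unchanged benchmark points to a bottleneck elsewhere. Note that `max_link_speed` here is the drive's capability, which can be higher than what the board's root port supports.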

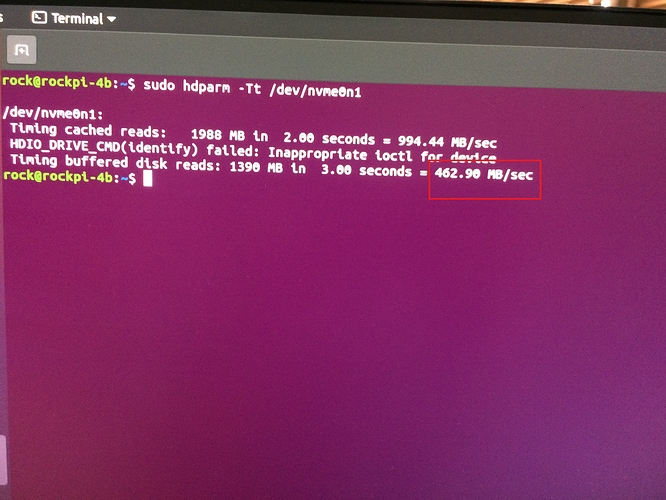

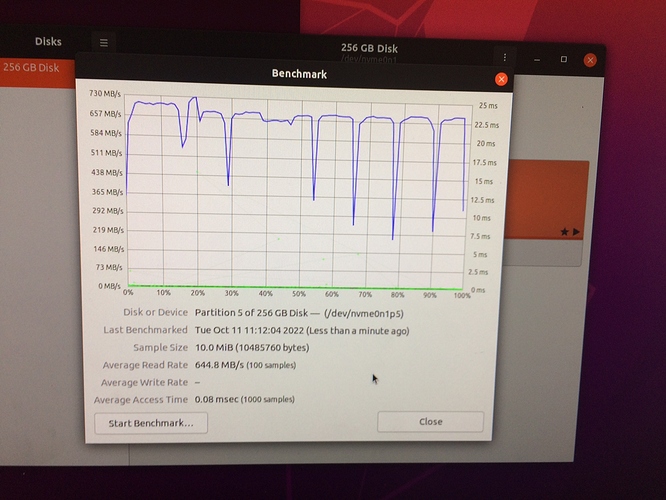

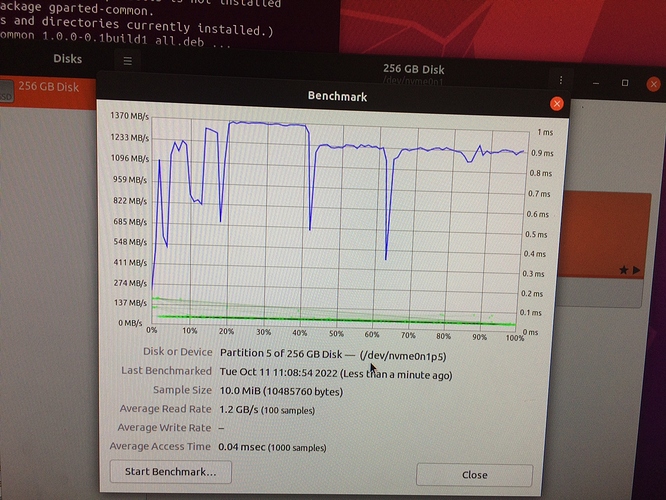

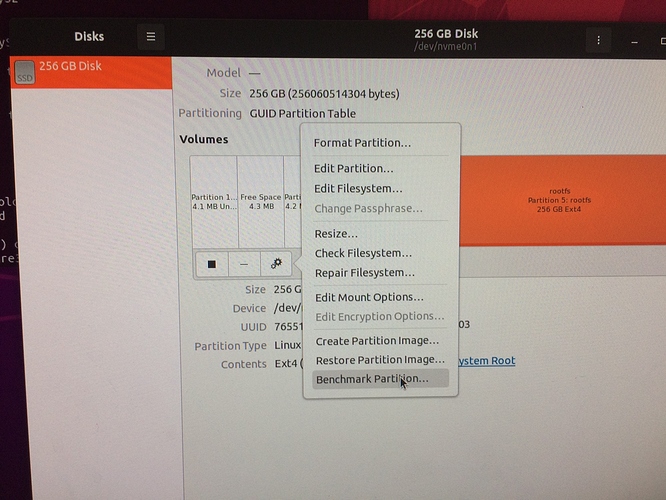

Please see the screenshots below. As you can see, the benchmark results before and after the changes are the same.

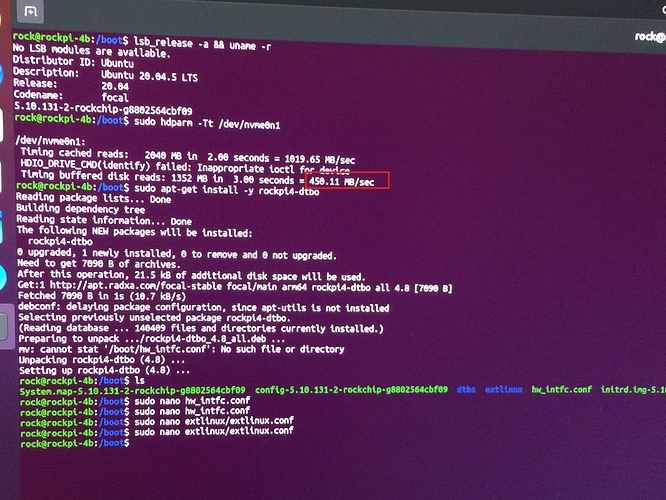

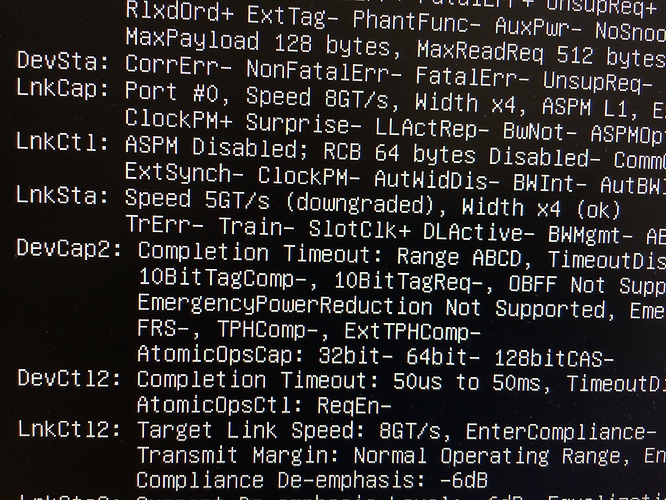

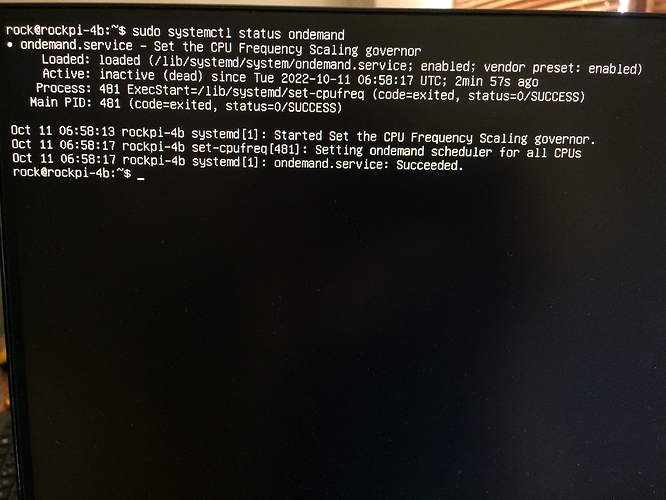

Initial benchmark in PCIe Gen1 mode and the steps to switch to Gen2

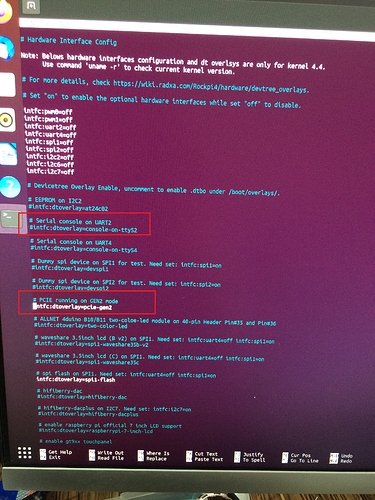

Modification of /boot/hw_intfc.conf

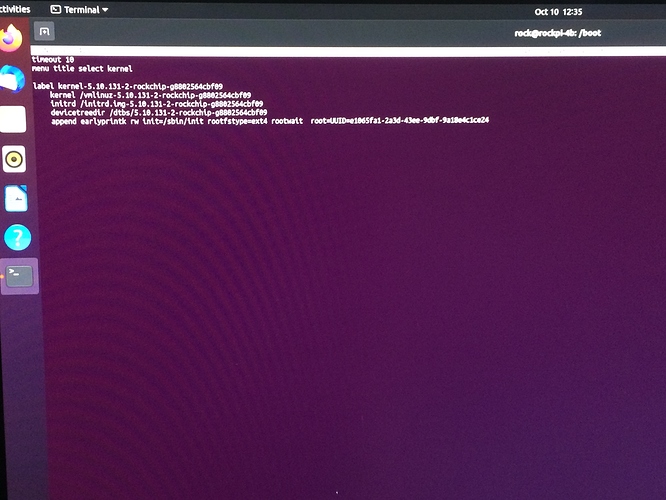

Modification of /boot/extlinux/extlinux.conf

Benchmark after changes & reboot

I also tested this on Debian a couple of months ago and, as far as I remember, it had no effect there either.

Is this normal?