Hello,

Before anything else, I apologize for any grammar errors; English is not my first language. I’m also no expert, so please forgive any technical mistakes on my part. Any suggestions are more than welcome.

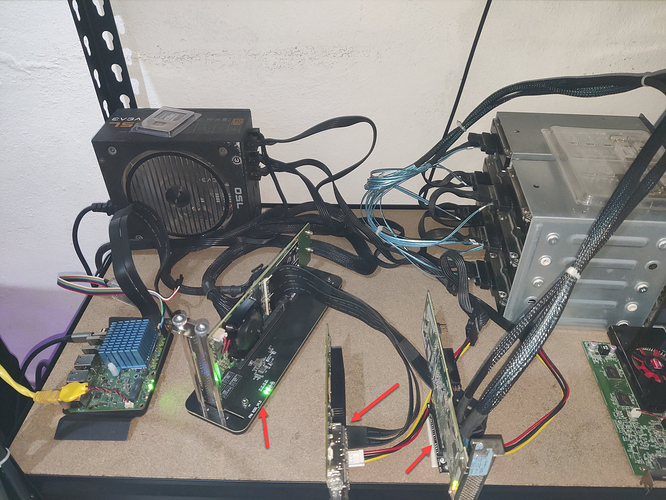

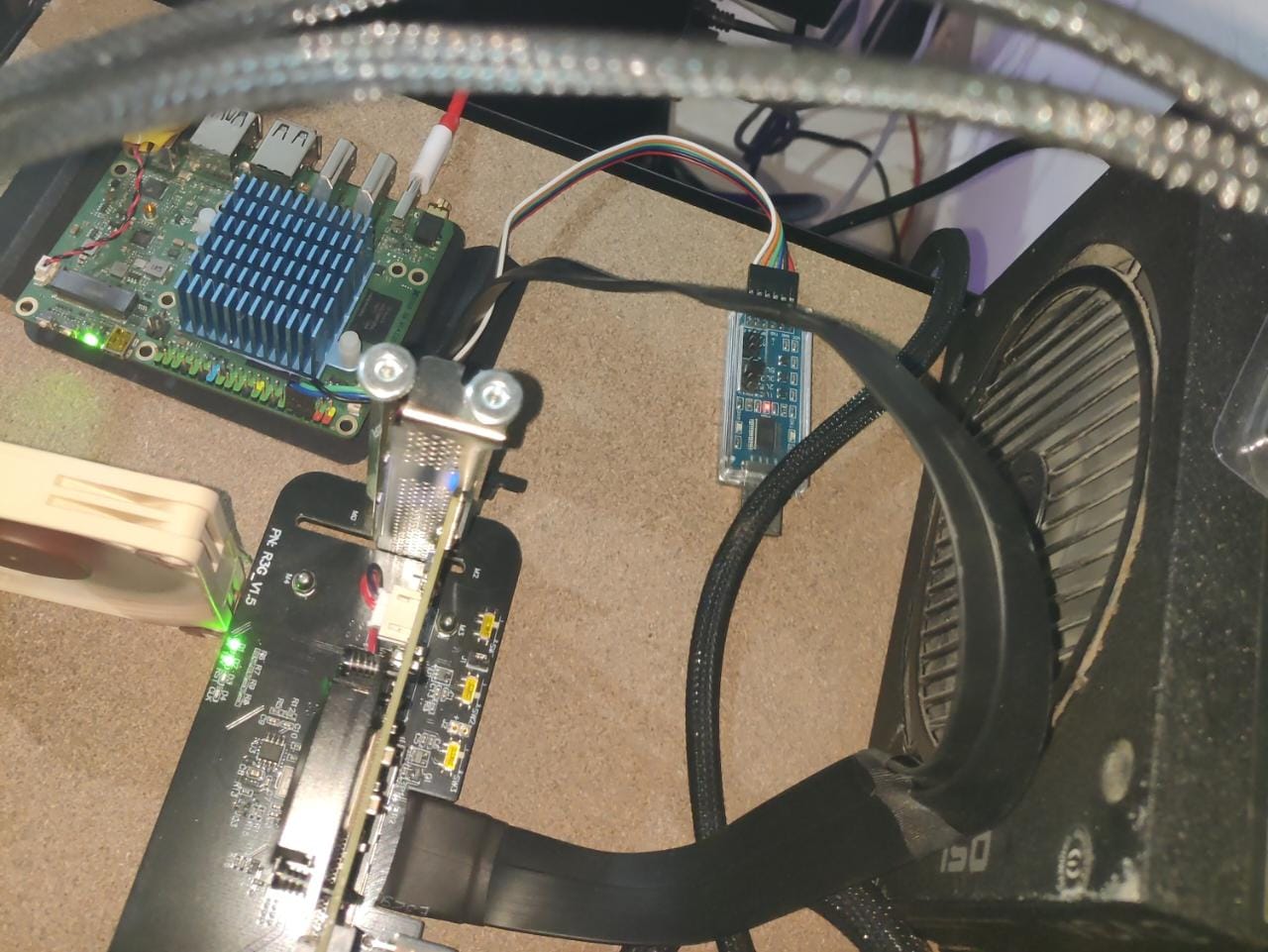

I’ve been waiting for months to test the Rock 5b as a NAS, and I finally got all the hardware. My current hardware is the following:

- A PLX8747-based card I got from AliExpress

- A couple of Optane P1600X drives

- 2x M.2 to PCIe x4 adapters

- An M.2 to PCIe x16 adapter with an ATX PSU 24-pin connector

- A Mellanox ConnectX-3 InfiniBand card

- A LSI SAS2008 card

- 6x 2TB SAS hard drives

I had most of these things lying around (except the PLX and Mellanox cards) and decided to give it a try and see what the hardware is capable of.

The PLX switch worked right out of the box. I even tested it with 4 NVMe drives and all of them were recognized right away, so things were looking good.

The problems started when I tried connecting a SAS card. I first tried an HP H240, which somehow got recognized as an Ethernet adapter. I found the right driver for it in the kernel, but the device was being reported with the wrong vendor and device IDs, and no matter what I did I couldn’t get the driver to load. After a bunch of troubleshooting I gave up on that one.

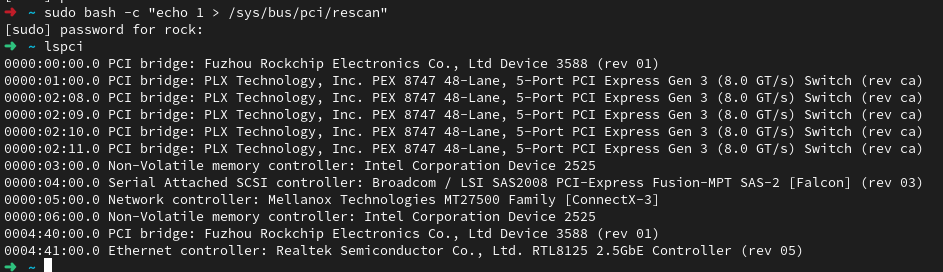
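For anyone who wants to double-check which vendor and device IDs the kernel actually sees for a card, here is a minimal sketch that reads them from sysfs. It assumes a Linux system with the usual `/sys/bus/pci/devices` layout. The helper name `list_pci_ids` is made up for this example, and the output should match what `lspci -nn` reports.

```python
from pathlib import Path


def list_pci_ids(sysfs_root="/sys/bus/pci/devices"):
    """Return {pci_address: (vendor_id, device_id, class_code)} as seen by the kernel."""
    root = Path(sysfs_root)
    if not root.is_dir():
        # Not a Linux system, or sysfs is not mounted: nothing to report.
        return {}
    result = {}
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip()
            device = (dev / "device").read_text().strip()
            pci_class = (dev / "class").read_text().strip()
        except OSError:
            # Skip entries that cannot be read.
            continue
        result[dev.name] = (vendor, device, pci_class)
    return result


if __name__ == "__main__":
    for addr, (vendor, device, pci_class) in list_pci_ids().items():
        print(f"{addr} vendor={vendor} device={device} class={pci_class}")
```

If the IDs printed here do not match the ones the driver expects, the driver will not bind to the device, which would fit the symptom described above.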

Then I tried the LSI one. At first it didn’t even show up when running lspci. It turns out that, apparently, the firmware on that card takes longer to initialize than the Rock 5b takes to boot, so all I had to do was rescan the PCI bus after some time and:

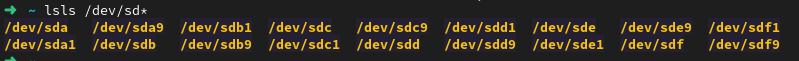
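The rescan described above can be sketched as follows. It uses the standard Linux sysfs interface: writing `1` to `/sys/bus/pci/rescan` asks the kernel to re-enumerate the bus. The function name `rescan_pci` and the 30-second default delay are assumptions for illustration; the right delay depends on how long the card's firmware takes to come up. Writing to that file requires root.

```python
import time


def rescan_pci(delay_s=30.0, rescan_path="/sys/bus/pci/rescan"):
    """Wait delay_s seconds, then ask the kernel to rescan the PCI bus."""
    # Give slow card firmware time to finish initializing (delay is a guess).
    time.sleep(delay_s)
    # Writing "1" to this sysfs file triggers a rescan of all PCI buses.
    with open(rescan_path, "w") as f:
        f.write("1")
```

Calling `rescan_pci()` as root from a script that runs at boot would avoid doing the rescan by hand each time.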

After recompiling the kernel with the correct drivers, all disks were up and running:

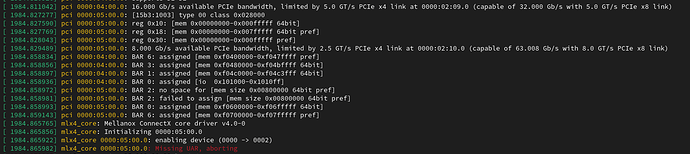

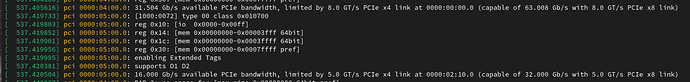
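Before rebuilding, it can help to check whether the needed driver options are already enabled in the kernel config. The sketch below parses the text of a `.config` file and reports each option as built-in (`y`), module (`m`), or not enabled (`n`). The option names in the usage note are an assumption: the post does not name the drivers, but in mainline kernels `CONFIG_SCSI_MPT3SAS` covers SAS2008-based cards and the `CONFIG_MLX4_*` options cover ConnectX-3. The helper name `check_kernel_options` is made up for this example.

```python
import re


def check_kernel_options(config_text, options):
    """Map each option name to 'y', 'm', or 'n' (not set or absent)."""
    status = {name: "n" for name in options}
    for line in config_text.splitlines():
        # Enabled options look like CONFIG_FOO=y or CONFIG_FOO=m;
        # disabled ones appear as "# CONFIG_FOO is not set" and are skipped.
        match = re.match(r"^(CONFIG_\w+)=([ym])$", line.strip())
        if match and match.group(1) in status:
            status[match.group(1)] = match.group(2)
    return status
```

For example, `check_kernel_options(open(".config").read(), ["CONFIG_SCSI_MPT3SAS", "CONFIG_MLX4_CORE", "CONFIG_MLX4_EN", "CONFIG_MLX4_INFINIBAND"])` run from the kernel source tree shows which of those still need to be turned on.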

So far things were looking good. Then I tried the Mellanox card and, to my surprise, it just worked (I only had to add the kernel drivers and add some PCIe clock delay on the M.2 to x16 adapter). But then I messed up: my desktop didn’t want to recognize the other Mellanox card, so I had to update the firmware of the cards. Now my desktop detects it, but when trying to load the drivers on the Rock 5b I get a BAR error. I believe it can probably be fixed by looking into the dtb files, but honestly I have no idea how to do so. And dumb me didn’t make a backup of the original firmware…

(pci 05:00.0)

I also tried an HP NC523 adapter, but it behaved the same as the H240 SAS card.

The saddest part of all this is that even if I got the hardware to work, it would apparently be really difficult to run ZFS on this kernel. I tried, but had no luck with that either.

Although I haven’t gotten it to work yet, I’m really surprised by what the hardware seems capable of. Sure, the software side is still lacking, but hey, this has only been out for a short time, so I’m sure it will improve, and I’m really excited about that.

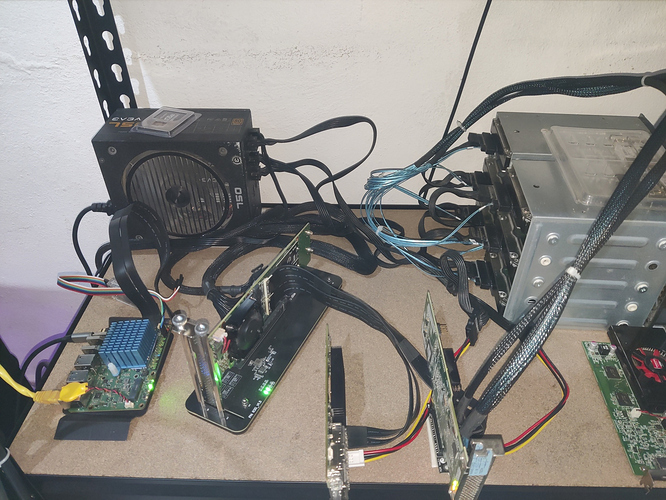

Lastly, here is a photo of my current setup. Sorry it’s messy; I’m just testing for now. Maybe later I’ll build a nice enclosure for it.