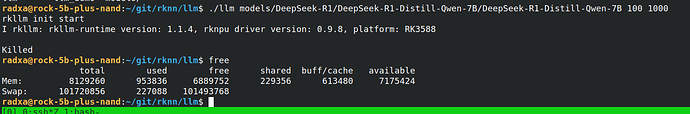

Has anyone successfully run the medium-sized DeepSeek-R1 models? I know these boards probably aren't meant to handle a really large language model, but out of curiosity I wanted to check how it performs. I couldn't even get the 7B model running (only 1.5B works). I constantly get this error:

I assume the model is too large to fit into the chip's memory. On a normal CPU, swap lets the model run anyway, but apparently an NPU cannot run a model at all if it doesn't fit in memory. I understand that even if it were possible it would be too slow, but out of curiosity I'd like to know whether running it from scratch on the NPU is feasible. Any subtle hints would be appreciated as well.
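One way to sanity-check the "too large to fit" assumption is a back-of-the-envelope estimate: the weights alone take roughly parameter count × bytes per parameter. The sketch below is only an approximation under stated assumptions. It uses the nominal sizes (1.5B, 7B) rather than exact parameter counts, and a few common precisions. It also ignores the KV cache, activations and runtime overhead, so real usage will be higher. Compare the numbers with the memory actually available to the NPU on your board.

```python
# Rough estimate of model *weight* memory only.
# Assumptions: nominal parameter counts, no KV cache, activations
# or runtime overhead included, so real usage is higher.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Approximate size of the model weights in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    # Nominal sizes of the two models mentioned above (assumed, not exact).
    for name, params in [("1.5B", 1.5e9), ("7B", 7e9)]:
        for dtype in ("fp16", "int8", "int4"):
            size = weight_memory_gib(params, dtype)
            print(f"{name} {dtype}: {size:.1f} GiB")
```

Even at 4-bit precision, the 7B weights come out at more than four times the size of the 1.5B weights, so a model that just fits at 1.5B can easily overflow at 7B.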