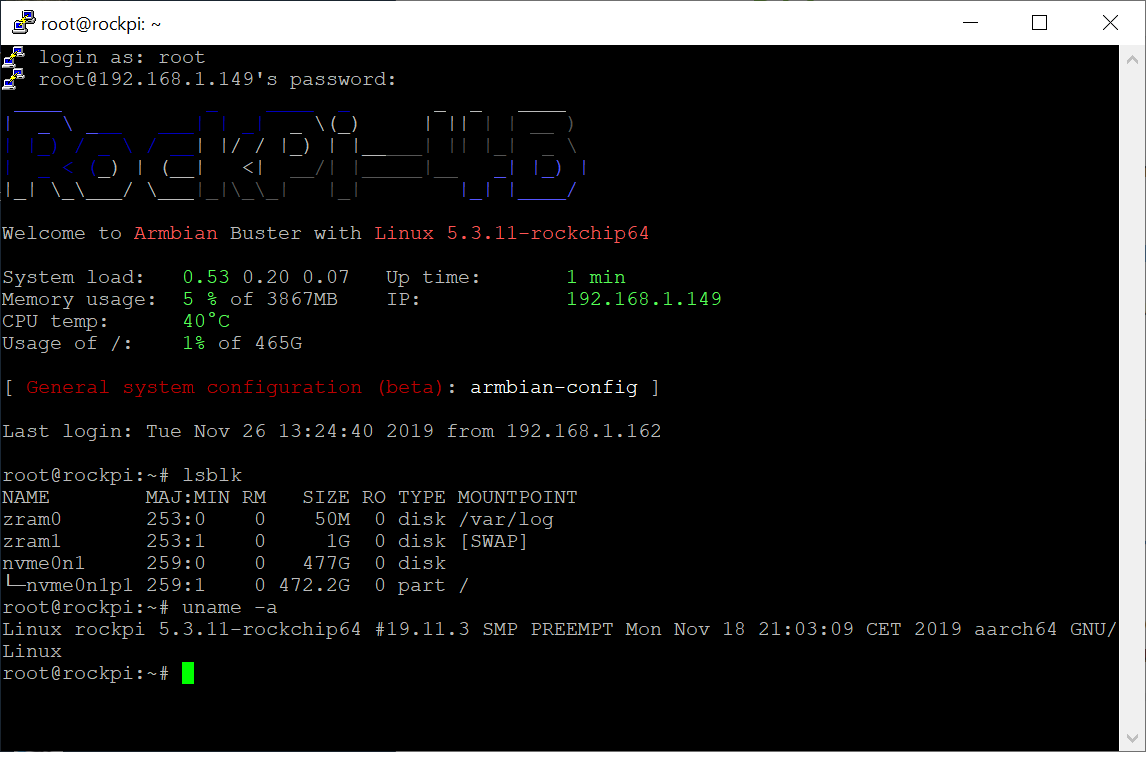

XPG GAMMIX 512GB S5 works and boots from SPI without eMMC or SD card

Armbian_19.11.3_Rockpi-4b_buster_current_5.3.11.img

Official Radxa 4b-u-boot

Tested on Rock Pi 4A v1.4

Kernel 5.x and NVMe

Out of the box, or with step 5?

Sorry for my bad English, what do you mean by “out of the box or with step 5”?

What is “out of the box”?

“Out of the box” means something that is in its default state, i.e., untouched or unchanged.

He is asking whether you changed /boot/armbianEnv.txt as specified in step 5 of Darp's guide, or whether it worked without changing anything.

Armbian_19.11.3_Rockpi-4b_buster_current_5.3.11.img: I downloaded it from the Armbian site and did not modify it.

- flash it to the SD card, then from the SD card flash the same …img to the NVMe

- boot from the SD card (pins 23 and 25 need to be connected); when “Starting kernel …” appears, remove the SD card, and it carries on from the NVMe

- add “fdtfile=rockchip/rk3399-rock-pi-4.dtb” to armbianEnv.txt

- flash the SPI with a programmer connected directly to Rock Pi pins 19, 21, 23, 24

or with the Radxa Linaro SD card, xxxx rockpi4b_upgrade_bootloader.sh

or with rkdeveloptool

All of them work.

Sorry, the image is stock Armbian, not my own build.

For step 5, I only added “fdtfile=rockchip/rk3399-rock-pi-4.dtb” to armbianEnv.txt.
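For anyone repeating the step 5 change, here is a minimal sketch of adding the fdtfile line only when it is not already there. The helper name `ensure_env_line` is made up for illustration and is not an Armbian tool; it simply appends `KEY=VALUE` to a file if no line for that key exists.

```shell
#!/bin/sh
# ensure_env_line FILE KEY VALUE
# Hypothetical helper: append KEY=VALUE to FILE unless a KEY= line exists.
ensure_env_line() {
    env_file=$1
    env_key=$2
    env_value=$3
    if grep -q "^${env_key}=" "$env_file"; then
        # Leave an existing setting alone and just report it.
        echo "${env_key} already set: $(grep "^${env_key}=" "$env_file")"
    else
        # Make sure the file ends with a newline before appending.
        if [ -n "$(tail -c1 "$env_file")" ]; then
            echo >> "$env_file"
        fi
        printf '%s=%s\n' "$env_key" "$env_value" >> "$env_file"
        echo "added ${env_key}=${env_value}"
    fi
}

# Example (as root, after backing up the file):
# ensure_env_line /boot/armbianEnv.txt fdtfile rockchip/rk3399-rock-pi-4.dtb
```

Running it a second time changes nothing, so it should be safe to repeat. A reboot is needed before the new device tree is used.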

Thanks for the info.

Again, why do you need to bypass SPI (shorting pins 23 and 25) to be able to boot from the SD card? It seems odd that it's not possible to boot directly from SPI into the SD card.

So, I finally got it to boot from the NVMe with the latest Armbian buster server image (changed as in step 5).

Issues:

- It takes ages to boot (someone already reported this here, I guess)

- when I run “hdparm -t --direct”:

- I get the error “HDIO_DRIVE_CMD (identify) failed: Inappropriate ioctl for device”

- the reported speed is too slow (around 190 MB/s; I remember getting something around 500 MB/s on both Radxa and Armbian stretch)

Might there be an issue with the driver in the 5.3 kernel?

@darp, will step 5 also be integrated into the build for the rockpi4b at some point?

Any reason for this not to be in place?

Do you know where the armbianEnv.txt is created?

If the SPI is empty, both Radxa and Armbian boot with no need to connect the pins; otherwise only the official image boots from the SD card.

With this PR merged into master, there is no need for step 5 anymore, as long as you build an image from master yourself.

This will take some time to propagate to downloadable images though.

So then only the boot speed and NVMe speed issues need to be solved (I hope) to make it somewhat stable.

As for NVMe speed…

Did you use the gen2 overlay, which is needed to enable the officially unsupported Gen2 link speed?

You need to add the following line to /boot/armbianEnv.txt, or adjust the existing one.

You can do it from within the booted OS:

overlays=pcie-gen2
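A rough sketch of the "add or adjust" part, in case an overlays line already exists. It assumes the overlays value is a space-separated list, and the helper name `add_overlay` is hypothetical. It appends the overlay name to an existing `overlays=` line, or creates the line if there is none.

```shell
#!/bin/sh
# add_overlay FILE NAME
# Hypothetical helper: make sure NAME is listed on the overlays= line of
# FILE (assumed space-separated), creating the line if it is missing.
add_overlay() {
    ov_file=$1
    ov_name=$2
    ov_tmp=$(mktemp) || return 1
    awk -v name="$ov_name" '
        /^overlays=/ {
            seen = 1
            # Everything after "overlays=" (9 characters) is the list.
            n = split(substr($0, 10), items, " ")
            present = 0
            for (i = 1; i <= n; i++) if (items[i] == name) present = 1
            if (!present) $0 = (n > 0) ? $0 " " name : "overlays=" name
        }
        { print }
        END { if (!seen) print "overlays=" name }
    ' "$ov_file" > "$ov_tmp" && cat "$ov_tmp" > "$ov_file"
    rm -f "$ov_tmp"
}

# Example (as root, after backing up the file):
# add_overlay /boot/armbianEnv.txt pcie-gen2
```

The file is rewritten in place with `cat` rather than `mv` so that its ownership and permissions stay as they were. The overlay only takes effect after a reboot.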

For boot speed, I have a hacky patch from Ezequiel Garcia lying around in my userpatches folder which makes boot fast again. I will try to merge it soon.
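To see whether the gen2 overlay actually took effect after a reboot, the negotiated link speed can be read from sysfs. This is only a sketch: the helper name `show_pcie_link` is made up, and the default path assumes the drive shows up as nvme0. A Gen1 link reports 2.5 GT/s and a Gen2 link 5 GT/s (the exact wording of the value differs between kernel versions).

```shell
#!/bin/sh
# show_pcie_link [DEVICE_DIR]
# Hypothetical helper: print negotiated and maximum PCIe link speed and
# width from the PCI device's sysfs directory. The default assumes the
# NVMe controller is nvme0.
show_pcie_link() {
    pci_dev=${1:-/sys/class/nvme/nvme0/device}
    for attr in current_link_speed max_link_speed \
                current_link_width max_link_width; do
        if [ -r "$pci_dev/$attr" ]; then
            printf '%s: %s\n' "$attr" "$(cat "$pci_dev/$attr")"
        else
            printf '%s: not available\n' "$attr"
        fi
    done
}

# Example:
# show_pcie_link
```

If current_link_speed still shows 2.5 GT/s with the overlay configured, the overlay was probably not applied by the image being booted.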

Hi @piter75

I tried to use the gen2 overlay configuration, but it had no effect …

Only then I realised that the branch you mentioned above has disappeared (so I imagine it's not merged).

In previous kernels I had around 400-500 MB/s for the supported Gen1 and 700-900 MB/s for Gen2 (Radxa stretch).

For context: I’m trying to achieve a somewhat stable system on top of a supported official image, as it will be located remotely from me. So I would rather have the supported Gen1, but at the decent 400-500 MB/s speed (the main purpose of the upgrade from the current Raspberry Pi 3).

About the boot speed: cool. I’ll wait for that patch to be merged (following here).

It's been merged. I found it in the pull requests.

As for the slower speed, I can't say; @piter75 or another knowledgeable person can tell.

Well, I am not an expert on NVMe with the Rock Pi 4, as I simply have neither a PCIe extension board nor a standalone NVMe drive.

I merely helped to include the tweaks that @darp described into Armbian.

I think I must acquire myself another Rock Pi 4 with PCIe extension board solely for NVMe tests in Armbian

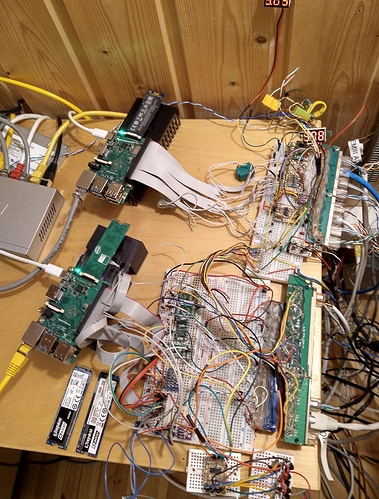

Is that… a router?

No, one of them is for home automation (1-wire, measurement, control, solar system over-production control, etc.); the other is for testing / in reserve.

I did modify my U-Boot slightly (see “Fixing U-boot to support boot from NVMEs”) to support some of the SSDs that I have. With this change the boot is pretty fast: under 30 seconds to see the login prompt.

As for the speed… here is one run that seems to be typical, using the following command:

fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=test --bs=4k --iodepth=64 --size=4G --readwrite=randrw --rwmixread=75

DataRam

-----------

test: (g=0): rw=randrw, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=64

fio-3.1

Starting 1 process

test: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [m(1)][100.0%][r=178MiB/s,w=58.8MiB/s][r=45.6k,w=15.1k IOPS][eta 00m:00s]

test: (groupid=0, jobs=1): err= 0: pid=3478: Sat Nov 16 07:05:02 2019

read: IOPS=45.2k, BW=176MiB/s (185MB/s)(3070MiB/17403msec)

bw ( KiB/s): min=165576, max=184704, per=100.00%, avg=180934.65, stdev=3336.51, samples=34

iops : min=41396, max=46176, avg=45233.62, stdev=833.94, samples=34

write: IOPS=15.1k, BW=58.0MiB/s (61.8MB/s)(1026MiB/17403msec)

bw ( KiB/s): min=55032, max=62108, per=100.00%, avg=60473.24, stdev=1275.30, samples=34

iops : min=13758, max=15527, avg=15118.35, stdev=318.85, samples=34

cpu : usr=24.37%, sys=69.26%, ctx=16876, majf=0, minf=8

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.1%, >=64=0.0%

issued rwt: total=785920,262656,0, short=0,0,0, dropped=0,0,0

latency : target=0, window=0, percentile=100.00%, depth=64

Run status group 0 (all jobs):

READ: bw=176MiB/s (185MB/s), 176MiB/s-176MiB/s (185MB/s-185MB/s), io=3070MiB (3219MB), run=17403-17403msec

WRITE: bw=58.0MiB/s (61.8MB/s), 58.0MiB/s-58.0MiB/s (61.8MB/s-61.8MB/s), io=1026MiB (1076MB), run=17403-17403msec

Disk stats (read/write):

nvme0n1: ios=778690/260197, merge=0/0, ticks=565565/252813, in_queue=12484, util=99.35%
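As a sanity check on the numbers above: with a fixed 4k block size, bandwidth is just IOPS times block size. A small sketch (the helper name `iops_to_mib` is made up) that converts the IOPS figures from this run into MiB/s:

```shell
#!/bin/sh
# iops_to_mib IOPS BLOCK_BYTES
# Hypothetical helper: bandwidth in MiB/s implied by an IOPS figure at a
# fixed block size (1 MiB = 1048576 bytes).
iops_to_mib() {
    awk -v iops="$1" -v bs="$2" \
        'BEGIN { printf "%.1f\n", iops * bs / 1048576 }'
}

iops_to_mib 45200 4096   # read IOPS from the run above, prints 176.6
iops_to_mib 15100 4096   # write IOPS from the run above, prints 59.0
```

The read figure agrees with the 176MiB/s that fio reports. The write figure comes out at about 59 MiB/s, which lines up with the 61.8MB/s shown in parentheses.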

Hi

So I’m now running Armbian 19.11.7 buster with kernel 5.4.8.

I used to get NVMe transfer speeds of around 400-500 MB/s with Radxa stretch on Linux 4.4 (and 700ish on PCIe Gen2).

In Armbian the transfer rate seems to be around half:

raleonardo@rockpi:~$ sudo hdparm -t --direct /dev/nvme0n1

/dev/nvme0n1:

HDIO_DRIVE_CMD(identify) failed: Inappropriate ioctl for device

Timing O_DIRECT disk reads: 590 MB in 3.01 seconds = 196.11 MB/sec

with overlays=pcie-gen2:

raleonardo@rockpi:~$ sudo hdparm -t --direct /dev/nvme0n1

/dev/nvme0n1:

HDIO_DRIVE_CMD(identify) failed: Inappropriate ioctl for device

Timing O_DIRECT disk reads: 1140 MB in 3.00 seconds = 379.61 MB/sec
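To put the two results in perspective, here is a small arithmetic sketch. It assumes an x4 link (the lane count is my assumption, check it on your board) and uses the 8b/10b encoding of PCIe Gen1 and Gen2, where 10 bits on the wire carry 8 bits of data. The helper names are made up for illustration.

```shell
#!/bin/sh
# pcie_ceiling_mb GT_PER_S LANES
# Hypothetical helper: theoretical ceiling in MB/s for PCIe Gen1/Gen2,
# i.e. line rate * lanes * 8/10 (8b/10b encoding) / 8 bits per byte.
pcie_ceiling_mb() {
    awk -v gt="$1" -v lanes="$2" \
        'BEGIN { printf "%d\n", gt * 1000 * lanes * 8 / 10 / 8 }'
}

# ratio A B: A divided by B, to two decimals.
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

pcie_ceiling_mb 2.5 4    # Gen1, assuming x4: prints 1000
pcie_ceiling_mb 5 4      # Gen2, assuming x4: prints 2000
ratio 379.61 196.11      # Gen2 run over Gen1 run: prints 1.94
```

So the overlay nearly doubles the throughput, as a Gen1 to Gen2 switch would, but both runs stay well below the theoretical ceilings (which real transfers never fully reach because of protocol overhead).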

I’m using an HP EX900 250GB NVMe.

Do you have similar issues?