I have a Rock Pi 4 (4GB) with the M.2 extension board (the new one).

But connecting a Samsung EVO860 256GB or a WD Blue 500GB (M.2 2280) does not show any block device.

Issue with SSD

Dunno about Armbian. As long as those are NVMe and not SATA M.2, just ask in the Armbian forum.

To be honest I’m slightly confused as to why SATA M.2 drives don’t work. I’m sure it’s just a matter of a kernel module, as it doesn’t make much sense, but hey, it’s got to be NVMe.

Yes, those are NVME.

Ok, I will check first with the image taken from here. Then eventually I will report that to Armbian.

@hijax_pl Is your wd ssd the SN500? I have the same drive right now (nvm, not the same drive, see below) but my board is still shipping. I also have the radxa’s ubuntu server image flashed to a microsd card ready, and I can report back as soon as I get my hands on my unit.

@tonyunreal, exactly, it is a WD Blue 3D NAND SSD 500 GB M.2 (WDS500G2B0B).

It works with my laptop (Win10) when connected via a USB3 adapter.

You can try lspci if pciutils is installed, and dmesg | grep pcie.

If it’s not showing up as a block device, it’s likely not there, but dmesg might give you some clues.

I really don’t know what is enabled in the Armbian image, so try the Debian one from Radxa after an apt-get update && apt-get upgrade.
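A minimal sketch of that check, assuming pciutils is installed. The helper name `has_nvme_controller` is made up for illustration; it only looks for the PCI class name that lspci prints for NVMe devices.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit 0) if lspci-style text on stdin
# lists an NVMe controller. "Non-Volatile memory controller" is the
# PCI class name lspci prints for NVMe devices.
has_nvme_controller() {
    grep -qi 'Non-Volatile memory controller'
}

# Usage on the board:
#   lspci | has_nvme_controller && echo "NVMe controller found" \
#       || echo "no NVMe controller on the PCIe bus"
#   dmesg | grep -i pcie
```

If the helper reports nothing, the kernel never saw the drive on the PCIe bus, so no nvme block device can appear either.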

@hijax_pl I don’t think we have the same drive. I googled the model number of yours (WDS500G2B0B) and found the Amazon page for it, it says SATA III instead of NVME:

My drive is the WDS500G1B0C and “NVME SSD” is written on the disk and on Amazon:

According to the FAQ, SATA drives are not supported by the Rock Pi 4B.

It has to be an NVMe-protocol M.2 SSD, not the earlier SATA-protocol kind. Like I say, dunno why, but it has to be NVMe.

@anon77784915 Thank you very much for this comprehensive picture. Now it is clear I was fooled by a clerk. But more importantly, thanks to you I have learned how to distinguish those modules visually.

I will use the WD SSD I already bought as an external drive, and look for a proper NVMe module (most web shops show the wrong picture, as a B-key is present while the description clearly states “NVMe SSD”).

Having said that, could anybody verify this link?

https://www.wd.com/products/internal-ssd/wd-blue-sn500-nvme-ssd.html#WDS250G1B0C

NVMe as stated, but with a B-key?

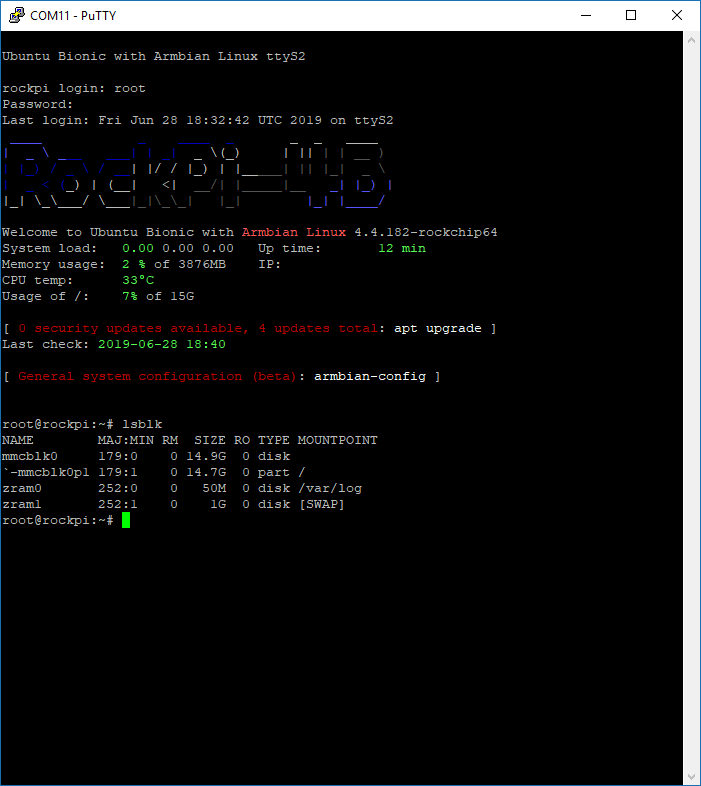

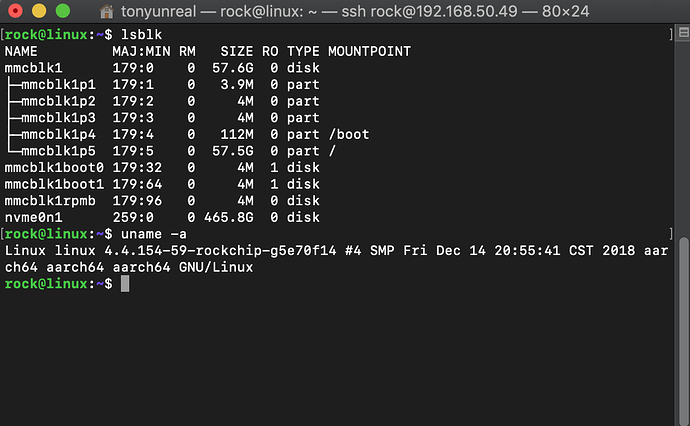

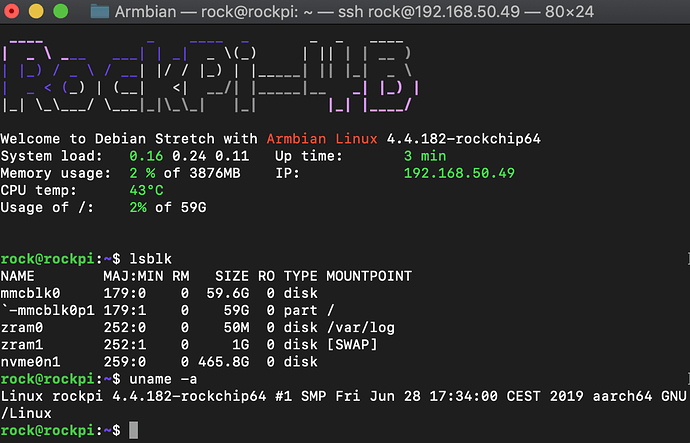

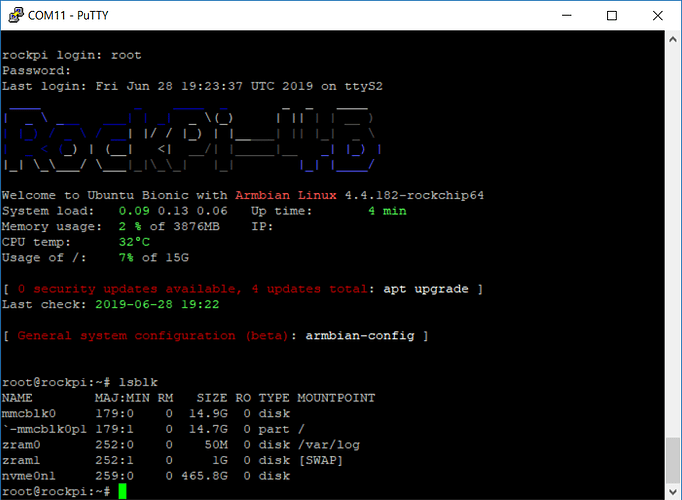

I got my board today. My WDS500G1B0C is recognized under both radxa Ubuntu and Armbian, both images are the 64-bit 4.4 kernel ones. See below for screenshots.

(took me some time with the Armbian image because of this booting bug.)

Well, I am even more confused now, but it’s great that you got that working.

Obviously it has nothing to do with M-only keys, which was confusing the hell out of me: why is my B+M 4-port SATA adapter working?

I think it gets really confusing, as there is legacy SATA M.2, NVMe SATA & just NVMe.

But how to tell the difference between the first two? I am completely confused.

Check this, as I have purchased one for the hell of it.

https://www.mymemory.co.uk/integral-120gb-m-2-2280-pcie-nvme-ssd-drive.html

It’s a PCIe NVMe SATA M.2.

Ain’t got a clue, as usual…

Remember to edit /boot/hw_intfc.conf and remove the hash from #intfc:dtoverlay=pcie-gen2

It makes a huge difference in performance after a reboot.
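The same edit can be scripted. This is only a sketch: the function name `enable_pcie_gen2` is made up, and it assumes the line appears in the file exactly as quoted above, with a single leading hash.

```shell
#!/bin/sh
# Hypothetical helper: uncomment the pcie-gen2 overlay line in a
# hw_intfc.conf-style file. $1 is the path to the config file.
enable_pcie_gen2() {
    conf="$1"
    # Strip the leading '#' (and any spaces after it) from the overlay
    # line only; the original file is kept as "$conf.bak".
    sed -i.bak 's/^#[[:space:]]*\(intfc:dtoverlay=pcie-gen2\)/\1/' "$conf"
}

# Usage on the Radxa images (as root), followed by a reboot:
#   enable_pcie_gen2 /boot/hw_intfc.conf
```

Lines that are already uncommented, and every other line in the file, are left untouched.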

Then sudo apt-get install iozone3

iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2

Then share the results with us

Depending on the board revision (v1.3/v1.4) you may have to buy a $1 flash chip and get the soldering iron out.

https://wiki.radxa.com/Rockpi4/Linux_system_runs_on_M.2_NVME_SSD

Hi,

My Armbian does not have a /boot/hw_intfc.conf file.

lspci

00:00.0 PCI bridge: Fuzhou Rockchip Electronics Co., Ltd Device 0100

01:00.0 Non-Volatile memory controller: Sandisk Corp Device 5003 (rev 01)

And the test results

| kB | reclen | write | rewrite | read | reread | random read | random write |
|---|---|---|---|---|---|---|---|
| 102400 | 4 | 70378 | 95856 | 69563 | 70056 | 32566 | 72206 |
| 102400 | 16 | 136427 | 178523 | 169004 | 169452 | 112963 | 177023 |
| 102400 | 512 | 333038 | 343379 | 318082 | 318938 | 309229 | 343023 |
| 102400 | 1024 | 354563 | 362899 | 336900 | 337804 | 327674 | 366237 |
| 102400 | 16384 | 365499 | 371415 | 360670 | 361154 | 360321 | 371168 |

That’s a problem then, as you are probably at gen 1.0 speed. How does Armbian do the overlays? I expect it’s in there.

The Integral 120GB M.2 2280 PCIe NVMe works; not the fastest, but for £25 it’s OK.

It is set to gen 2.0.

Command line used: iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2

Output is in kBytes/sec

Time Resolution = 0.000001 seconds.

Processor cache size set to 1024 kBytes.

Processor cache line size set to 32 bytes.

File stride size set to 17 * record size.

| kB | reclen | write | rewrite | read | reread | random read | random write |
|---|---|---|---|---|---|---|---|
| 102400 | 4 | 32351 | 39073 | 56177 | 52657 | 28777 | 40784 |
| 102400 | 16 | 102608 | 131789 | 156747 | 140973 | 47277 | 115072 |
| 102400 | 512 | 424581 | 450976 | 376851 | 379936 | 364507 | 427022 |
| 102400 | 1024 | 438553 | 432823 | 407448 | 411767 | 405919 | 437925 |
| 102400 | 16384 | 426185 | 432095 | 621528 | 632342 | 630287 | 440617 |

@stuartiannaylor, I pasted the wrong data, i.e. taken without sudo rights.

Here is the correct printout:

$ sudo lspci -vv | grep -E 'PCI bridge|LnkCap'

00:00.0 PCI bridge: Fuzhou Rockchip Electronics Co., Ltd Device 0100 (prog-if 00 [Normal decode])

LnkCap: Port #0, Speed 2.5GT/s, Width x4, ASPM L1, Exit Latency L0s <256ns, L1 <8us

LnkCap: Port #0, Speed 8GT/s, Width x2, ASPM L1, Exit Latency L0s <256ns, L1 <8us
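To read those lines: the GT/s figure maps directly to a PCIe generation (2.5GT/s is gen 1, 5GT/s is gen 2, 8GT/s is gen 3). Note that LnkCap is what a port is capable of, while the LnkSta line in the same lspci -vv output shows what was actually negotiated. A small sketch of the mapping follows; the function names are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: pull the "Speed ..." field out of one
# LnkCap/LnkSta line read from stdin.
link_speed() {
    sed -n 's/.*Speed \([0-9.]*GT\/s\).*/\1/p'
}

# Hypothetical helper: map a PCIe link speed string, as printed by
# lspci, to its PCIe generation number.
pcie_gen_from_speed() {
    case "$1" in
        2.5GT/s) echo 1 ;;
        5GT/s)   echo 2 ;;
        8GT/s)   echo 3 ;;
        16GT/s)  echo 4 ;;
        *)       echo unknown ;;
    esac
}

# Usage on the board:
#   sudo lspci -vv | grep LnkSta | while read -r line; do
#       pcie_gen_from_speed "$(printf '%s\n' "$line" | link_speed)"
#   done
```

For the printout above, the bridge line (2.5GT/s) maps to gen 1 and the SSD line (8GT/s) maps to gen 3.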

It’s a bit of a mixed bag, your WD 250GB, if you don’t mind me saying.

It’s the small numbers that you need to look at, as 99% of the time the block size will be 4k.

In effect it will probably be faster than the Integral, even if the Integral does boast the biggest numbers.

The Integral is far from brilliant, but at £25 it is quite a good match for the price of a RockPi4; it’s approx £35 after you purchase the M.2 extender, which I think is well worth it.
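To put the 4k rows in more familiar terms, the iozone figures (kBytes/sec) can be turned into rough IOPS by dividing by the record size. A minimal sketch, with a made-up function name:

```shell
#!/bin/sh
# Hypothetical helper: convert throughput in kB/s at a given record
# size (in kB) into whole operations per second.
iops_from_kbps() {
    kbps="$1"
    reclen_kb="$2"
    echo $(( kbps / reclen_kb ))
}

# 4k sequential write, WD vs Integral, from the iozone runs above:
iops_from_kbps 70378 4
iops_from_kbps 32351 4
```

That works out to roughly 17,594 write operations per second for the WD against roughly 8,087 for the Integral at 4k, even though the Integral wins at the large record sizes.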

You can try this for a more ‘server’-style test, as in reality nothing really works on just a single file or thread.

cat > google-compute.sh <<'EOL'
#!/bin/sh
# Run with: sh google-compute.sh
# Change this variable to the file path of the device you want to test.
# The value below is only a placeholder; edit it before running.
# WARNING: pointing it at a raw device (e.g. /dev/nvme0n1) overwrites its contents.
block_dev=/path/to/test-file

# install dependencies
sudo apt-get -y update
sudo apt-get install -y fio

# full write pass
sudo fio --name=writefile --size=10G --filesize=10G \
  --filename="$block_dev" --bs=1M --nrfiles=1 \
  --direct=1 --sync=0 --randrepeat=0 --rw=write --refill_buffers --end_fsync=1 \
  --iodepth=200 --ioengine=libaio

# rand read
sudo fio --time_based --name=benchmark --size=10G --runtime=30 \
  --filename="$block_dev" --ioengine=libaio --randrepeat=0 \
  --iodepth=128 --direct=1 --invalidate=1 --verify=0 --verify_fatal=0 \
  --numjobs=4 --rw=randread --blocksize=4k --group_reporting

# rand write
sudo fio --time_based --name=benchmark --size=10G --runtime=30 \
  --filename="$block_dev" --ioengine=libaio --randrepeat=0 \
  --iodepth=128 --direct=1 --invalidate=1 --verify=0 --verify_fatal=0 \
  --numjobs=4 --rw=randwrite --blocksize=4k --group_reporting
EOL

chmod a+x google-compute.sh

Just copy and paste and it will create the script for you; then edit the file path in it.

I am not really sure whether numjobs on the RockPi4 should be 2 or 6, i.e. the number of CPUs.

I am going to hazard a guess at 6, and maybe this would be a reasonable overall test:

cat > rockpi4-nvme.sh <<'EOL'
#!/bin/sh
# Run with: sh rockpi4-nvme.sh
# Change this variable to the file path of the device you want to test.
# The value below is only a placeholder; edit it before running.
# WARNING: pointing it at a raw device (e.g. /dev/nvme0n1) overwrites its contents.
block_dev=/path/to/test-file

# install dependencies
sudo apt-get -y update
sudo apt-get install -y fio

# rand write
sudo fio --name=fio_test_file --direct=1 --rw=randwrite --bs=4k --size=1G --numjobs=6 --runtime=60 --filename="$block_dev" --group_reporting

# rand read
sudo fio --name=fio_test_file --direct=1 --rw=randread --bs=4k --size=1G --numjobs=6 --runtime=60 --filename="$block_dev" --group_reporting
EOL

chmod a+x rockpi4-nvme.sh

Anyway, the 2nd script gives me this, and it’s probably its 2nd command (the random read) that sort of simulates boot.

rock@rockpi4:~$ sh rockpi4-nvme.sh

fio_test_file: (g=0): rw=randwrite, bs=4K-4K/4K-4K/4K-4K, ioengine=psync, iodepth=1

...

fio-2.16

Starting 6 processes

Jobs: 6 (f=6): [w(6)] [100.0% done] [0KB/28228KB/0KB /s] [0/7057/0 iops] [eta 00m:00s]

fio_test_file: (groupid=0, jobs=6): err= 0: pid=27300: Thu Jul 11 00:09:28 2019

write: io=1670.4MB, bw=28505KB/s, iops=7126, runt= 60004msec

clat (usec): min=81, max=68859, avg=831.29, stdev=1778.60

lat (usec): min=82, max=68861, avg=832.63, stdev=1778.77

clat percentiles (usec):

| 1.00th=[ 96], 5.00th=[ 105], 10.00th=[ 108], 20.00th=[ 122],

| 30.00th=[ 151], 40.00th=[ 764], 50.00th=[ 788], 60.00th=[ 820],

| 70.00th=[ 900], 80.00th=[ 972], 90.00th=[ 1144], 95.00th=[ 1432],

| 99.00th=[ 8256], 99.50th=[15936], 99.90th=[23936], 99.95th=[29312],

| 99.99th=[40192]

lat (usec) : 100=2.26%, 250=34.26%, 500=0.35%, 750=2.10%, 1000=42.78%

lat (msec) : 2=15.50%, 4=1.44%, 10=0.47%, 20=0.68%, 50=0.17%

lat (msec) : 100=0.01%

cpu : usr=1.74%, sys=9.68%, ctx=901635, majf=0, minf=115

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued : total=r=0/w=427600/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

WRITE: io=1670.4MB, aggrb=28504KB/s, minb=28504KB/s, maxb=28504KB/s, mint=60004msec, maxt=60004msec

Disk stats (read/write):

nvme0n1: ios=0/426674, merge=0/0, ticks=0/31624, in_queue=31264, util=52.24%

fio_test_file: (g=0): rw=randread, bs=4K-4K/4K-4K/4K-4K, ioengine=psync, iodepth=1

...

fio-2.16

Starting 6 processes

Jobs: 3 (f=3): [r(2),_(3),r(1)] [100.0% done] [111.4MB/0KB/0KB /s] [28.6K/0/0 iops] [eta 00m:00s]

fio_test_file: (groupid=0, jobs=6): err= 0: pid=27398: Thu Jul 11 00:10:14 2019

read : io=6144.0MB, bw=140824KB/s, iops=35206, runt= 44676msec

clat (usec): min=6, max=14780, avg=160.37, stdev=53.59

lat (usec): min=6, max=14783, avg=161.03, stdev=53.66

clat percentiles (usec):

| 1.00th=[ 114], 5.00th=[ 120], 10.00th=[ 124], 20.00th=[ 133],

| 30.00th=[ 139], 40.00th=[ 143], 50.00th=[ 151], 60.00th=[ 159],

| 70.00th=[ 171], 80.00th=[ 183], 90.00th=[ 207], 95.00th=[ 231],

| 99.00th=[ 298], 99.50th=[ 330], 99.90th=[ 422], 99.95th=[ 470],

| 99.99th=[ 732]

lat (usec) : 10=0.13%, 20=0.07%, 50=0.04%, 100=0.01%, 250=96.66%

lat (usec) : 500=3.07%, 750=0.03%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%

cpu : usr=6.33%, sys=19.27%, ctx=1583266, majf=0, minf=104

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued : total=r=1572864/w=0/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: io=6144.0MB, aggrb=140824KB/s, minb=140824KB/s, maxb=140824KB/s, mint=44676msec, maxt=44676msec

Disk stats (read/write):

nvme0n1: ios=1568715/4, merge=0/0, ticks=178224/0, in_queue=177908, util=99.78%