I am running the latest Radxa Debian release (b39) with all updates applied. My Rock 5B runs Docker, plus a few extra sysctl tweaks:

```
# QUIC/UDP tweaks: raise the maximum socket buffer sizes for Go
net.core.rmem_max=2500000
net.core.wmem_max=2500000

# Uncomment the next line to enable packet forwarding for IPv4
net.ipv4.ip_forward=1

# Uncomment the next line to enable packet forwarding for IPv6
# Enabling this option disables Stateless Address Autoconfiguration
# based on Router Advertisements for this host
net.ipv6.conf.all.forwarding=1

# Enabling IPv6 forwarding disables SLAAC; accept_ra=2 re-enables it
net.ipv6.conf.all.accept_ra=2

# Use BBR
net.core.default_qdisc=fq
net.ipv4.tcp_congestion_control=bbr

# Reuse TIME-WAIT sockets for new outgoing connections when safe from a protocol POV
net.ipv4.tcp_tw_reuse=1

# Enable TCP Fast Open for both client and server to reduce latency
net.ipv4.tcp_fastopen=3

# Reduce swap usage
vm.swappiness=2

# Allow unprivileged processes (e.g. in Docker containers) to bind to ports 53 and above
net.ipv4.ip_unprivileged_port_start=53
```
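To confirm which of these settings the running kernel actually picked up, here is a rough Python sketch (a helper of my own, not part of the setup itself). It assumes the usual `/proc/sys` layout, where the dots in a sysctl key map to path separators; `EXPECTED` just mirrors the list above, and `sysctl_path`, `mismatches` and `read_live` are hypothetical names.

```python
from __future__ import annotations

from pathlib import Path

# Mirrors the sysctl tweaks listed above.
EXPECTED = {
    "net.core.rmem_max": "2500000",
    "net.core.wmem_max": "2500000",
    "net.ipv4.ip_forward": "1",
    "net.ipv6.conf.all.forwarding": "1",
    "net.ipv6.conf.all.accept_ra": "2",
    "net.core.default_qdisc": "fq",
    "net.ipv4.tcp_congestion_control": "bbr",
    "net.ipv4.tcp_tw_reuse": "1",
    "net.ipv4.tcp_fastopen": "3",
    "vm.swappiness": "2",
    "net.ipv4.ip_unprivileged_port_start": "53",
}


def sysctl_path(key: str, root: Path = Path("/proc/sys")) -> Path:
    """Map a dotted sysctl key to its file under /proc/sys.

    Assumption: keys containing dotted interface names (e.g. VLANs) are
    not handled.
    """
    return root.joinpath(*key.split("."))


def mismatches(expected: dict[str, str], read) -> dict[str, str | None]:
    """Return {key: actual} for every key whose live value differs.

    `read` takes a key and returns its value, or None if it is missing.
    """
    out: dict[str, str | None] = {}
    for key, want in expected.items():
        got = read(key)
        if got != want:
            out[key] = got
    return out


def read_live(key: str) -> str | None:
    try:
        # Values end with a newline; collapse any internal whitespace too.
        return " ".join(sysctl_path(key).read_text().split())
    except OSError:
        return None


if __name__ == "__main__":
    for key, got in mismatches(EXPECTED, read_live).items():
        print(f"{key}: expected {EXPECTED[key]!r}, got {got!r}")
```

No output means every value matches. As far as I recall the NIC issue predates these tweaks, so this only rules them out.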

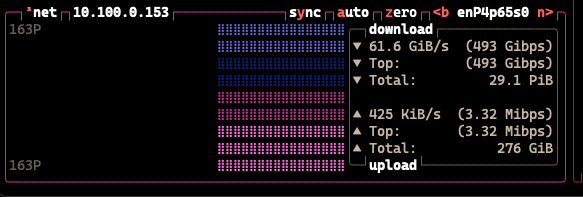

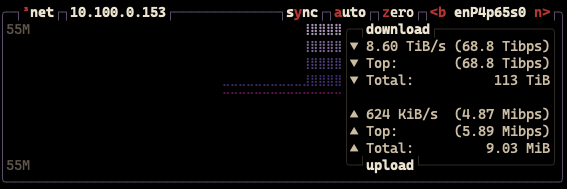

I can't use vnstat or otherwise monitor the NIC meaningfully, because live throughput is reported in the range of hundreds of Gbps up to Tbps(!). I have tried `vnstat -l`, `bmon`, `btop` and `glances`, and they all show the same impossible speeds. Reinstalling the OS image (to NVMe) did not help, and as far as I recall the issue was present before I added any sysctl settings or installed Docker.
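Because all of these tools presumably derive their rates from the kernel's per-interface byte counters, one way to tell a tool problem from a driver problem is to sample those counters directly. A minimal Python sketch, assuming the driver exposes the standard `/sys/class/net/<iface>/statistics/rx_bytes` and `tx_bytes` files and that the counters are 64-bit. The default interface name `enP4p65s0` is a hypothetical placeholder; pass the real one from `ip link`.

```python
from __future__ import annotations

import sys
import time
from pathlib import Path

COUNTERS = ("rx_bytes", "tx_bytes")


def rate_mbps(before: int, after: int, seconds: float) -> float | None:
    """Average rate in Mbit/s between two byte-counter samples.

    Returns None if the counter decreased. Assuming 64-bit counters, a
    genuine wrap at 2.5 Gbit/s would take centuries, so a decrease points
    to a reset or a driver bug rather than real traffic.
    """
    if after < before:
        return None
    return (after - before) * 8 / seconds / 1e6


def read_counter(iface: str, name: str) -> int:
    # Standard per-interface statistics exported through sysfs.
    return int((Path("/sys/class/net") / iface / "statistics" / name).read_text())


def main() -> None:
    # Hypothetical default name; pass the real interface as the first argument.
    iface = sys.argv[1] if len(sys.argv) > 1 else "enP4p65s0"
    prev = {n: read_counter(iface, n) for n in COUNTERS}
    for _ in range(10):
        time.sleep(1.0)
        cur = {n: read_counter(iface, n) for n in COUNTERS}
        for n in COUNTERS:
            rate = rate_mbps(prev[n], cur[n], 1.0)
            shown = "DECREASED" if rate is None else f"{rate:.2f} Mbit/s"
            print(f"{n}: raw {cur[n]:>20}  {shown}")
        prev = cur


if __name__ == "__main__":
    main()
```

If the raw counters printed here also jump by terabytes per second, or go backwards, that would suggest the problem lies in the NIC driver's statistics rather than in the monitoring tools.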

Does anyone have any ideas on what’s causing this and how to fix it?