Here are my install notes.

Best option for YOLOv8 object detection?

The build hangs at:

$ make install_raspi

....

make[3]: Entering directory '/tmp/opencv/opencv-4.10.0/build'

make[3]: Leaving directory '/tmp/opencv/opencv-4.10.0/build'

make[3]: Entering directory '/tmp/opencv/opencv-4.10.0/build'

[ 96%] Building CXX object modules/optflow/CMakeFiles/opencv_perf_optflow.dir/perf/opencl/perf_optflow_dualTVL1.cpp.o

[ 96%] Building CXX object modules/video/CMakeFiles/opencv_perf_video.dir/perf/opencl/perf_dis_optflow.cpp.o

It takes a while to build. How long have you waited? If you have waited more than 1 hour then it may be due to running out of memory. How much RAM does your SBC have?

One way to get around a small amount of RAM is to build in single-thread mode by editing this line to $(MAKE) -j 1. I used to do that on a Raspberry Pi 4B, but the build took around 2 hours to complete.

Can you share the command with the camera video device number? I’m trying examples/stream.

Got approx. 40 FPS with 2.2.0 rknn yolov8n:

go run bytetrack.go -a :8080 -s 3 -v 11 -c 1280x720@60 -x person -m ../data/yolov8_2.3.0.rknn -t v8

I’m trying a few things in go-rknnlite; if you have any input, please let me know.

- DeepSORT and ByteTrack serve the same purpose; which one gives better performance? I used DeepSORT with ReID in rknn_threaded but got only 9 FPS.
- Also, if you have ByteTrack for rknn_threaded, please share it.
- I’m trying to preserve a person's ID: when a person enters the camera frame, create and save an ID, then assign the same ID to the same person if they leave the frame and come back. Is there a library that solves this?

If you modify this line to 60 FPS and then stream a 60 FPS 720p video, you can get 60 FPS playback.

You can convert one of the supplied videos to 60 FPS using:

cd example/data/

ffmpeg -i palace.mp4 -r 60 -vf "fps=60" -c:v libx264 -preset fast -crf 23 -c:a copy palace_60fps.mp4

Then run with:

go run bytetrack.go -v ../data/palace_60fps.mp4 -m ../data/yolov8s-640-640-rk3588.rknn -t v8 -a :8080 -s 6

Since video playback can run at 60 FPS and you're only getting 40 FPS with both go-rknnlite and the C++ code, the problem is most likely with your camera, so you should focus on debugging that.

However, what is your use case where 60 FPS is required versus the standard 30 FPS?
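One way to check whether the camera itself is the limit is to timestamp frames as they arrive and compute the rate, independently of inference. The following is a minimal sketch in Go; `MeasureFPS` and `syntheticStamps` are hypothetical helper names, and the timestamps are simulated here rather than read from a real capture device.

```go
package main

import (
	"fmt"
	"time"
)

// MeasureFPS returns the average frame rate implied by a series of frame
// arrival timestamps. It returns 0 when fewer than two timestamps are given.
func MeasureFPS(stamps []time.Time) float64 {
	if len(stamps) < 2 {
		return 0
	}
	elapsed := stamps[len(stamps)-1].Sub(stamps[0]).Seconds()
	if elapsed <= 0 {
		return 0
	}
	// N timestamps span N-1 frame intervals.
	return float64(len(stamps)-1) / elapsed
}

// syntheticStamps builds n timestamps spaced intervalMs milliseconds apart.
// It stands in for real capture times (an assumption for this sketch).
func syntheticStamps(n, intervalMs int) []time.Time {
	start := time.Now()
	stamps := make([]time.Time, 0, n)
	for i := 0; i < n; i++ {
		offset := time.Duration(i*intervalMs) * time.Millisecond
		stamps = append(stamps, start.Add(offset))
	}
	return stamps
}

func main() {
	// One frame every 25 ms corresponds to a source delivering 40 FPS.
	fmt.Printf("measured %.1f FPS\n", MeasureFPS(syntheticStamps(41, 25)))
}
```

In a real test you would append `time.Now()` each time a frame is read from the camera, with inference and rendering disabled, and compare the result against the requested 60 FPS.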

I haven’t done any work with ReID; however, you may be able to use ResNet, which has some re-identification models.

I’m working only with a camera, not video playback.

The imx415 now supports 60 FPS by default; I checked with gst.

With go-rknnlite I see a maximum of 40 FPS with the stream test below:

go run bytetrack.go -a :8080 -s 3 -v 11 -c 1280x720@60 -x person -m ../data/yolov8_2.3.0.rknn -t v8

I did change FPS = 60 in bytetrack.go, but nothing changed; I still got 40 FPS.
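As a rough illustration of how re-identification can keep the same ID when a person leaves and returns, here is a minimal sketch in Go. It assumes some ReID model (not shown) produces an embedding vector per detected person; the `Gallery` type, the cosine-similarity matching, and the 0.9 threshold are all assumptions made for this example, not part of go-rknnlite or ByteTrack.

```go
package main

import (
	"fmt"
	"math"
)

// Gallery keeps one reference embedding per known person ID.
type Gallery struct {
	threshold float64 // minimum cosine similarity to count as the same person
	nextID    int
	entries   map[int][]float64
}

// NewGallery creates an empty gallery; IDs start at 1.
func NewGallery(threshold float64) *Gallery {
	return &Gallery{threshold: threshold, nextID: 1, entries: make(map[int][]float64)}
}

// cosine returns the cosine similarity of two equal-length vectors.
func cosine(a, b []float64) float64 {
	if len(a) != len(b) {
		return 0
	}
	var dot, na, nb float64
	for i := range a {
		dot += a[i] * b[i]
		na += a[i] * a[i]
		nb += b[i] * b[i]
	}
	if na == 0 || nb == 0 {
		return 0
	}
	return dot / (math.Sqrt(na) * math.Sqrt(nb))
}

// Identify returns the ID of the most similar stored person if the similarity
// reaches the threshold; otherwise it stores the embedding under a new ID.
func (g *Gallery) Identify(emb []float64) int {
	bestID, bestSim := 0, g.threshold
	for id, ref := range g.entries {
		if sim := cosine(emb, ref); sim >= bestSim {
			bestID, bestSim = id, sim
		}
	}
	if bestID != 0 {
		return bestID
	}
	id := g.nextID
	g.nextID++
	g.entries[id] = append([]float64(nil), emb...) // store a copy
	return id
}

func main() {
	g := NewGallery(0.9)
	first := g.Identify([]float64{1, 0, 0})           // new person
	second := g.Identify([]float64{0, 1, 0})          // a different person
	returned := g.Identify([]float64{0.98, 0.05, 0})  // close to the first embedding
	fmt.Println(first, second, returned)
}
```

Real embeddings have hundreds of dimensions and drift with pose and lighting, so a practical system would likely keep several embeddings per person and tune the threshold on its own footage.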

Can you explain whether FP16 is supported in rknn? I have converted my model (INT8 quantization) and the detection results have become worse. Tested in the yolov5 cpp multithread project.

Yes, FP16 is supported. It's much slower than using INT8 though.
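To see why an INT8 model can detect worse than a floating-point one, it helps to look at the rounding error introduced by quantization. The sketch below uses generic symmetric INT8 quantization in Go; it is an illustration only and is not claimed to be the scheme the RKNN toolkit applies. The `Quantizer` type and the value range of 6.35 are assumptions chosen for the example.

```go
package main

import (
	"fmt"
	"math"
)

// Quantizer maps floats in [-maxAbs, maxAbs] onto int8 values in [-127, 127].
type Quantizer struct {
	Scale float64 // size of one int8 step in float units
}

// NewQuantizer derives the step size from the largest expected magnitude.
func NewQuantizer(maxAbs float64) Quantizer {
	if maxAbs <= 0 {
		maxAbs = 1 // avoid a zero scale
	}
	return Quantizer{Scale: maxAbs / 127.0}
}

// Quantize rounds x to the nearest step and clamps it to the int8 range used.
func (q Quantizer) Quantize(x float64) int8 {
	v := math.Round(x / q.Scale)
	v = math.Max(-127, math.Min(127, v))
	return int8(v)
}

// Dequantize converts an int8 value back to its approximate float value.
func (q Quantizer) Dequantize(v int8) float64 {
	return float64(v) * q.Scale
}

func main() {
	q := NewQuantizer(6.35) // step size of about 0.05
	for _, x := range []float64{0.512, 0.024, 3.14159} {
		v := q.Quantize(x)
		restored := q.Dequantize(v)
		fmt.Printf("x=%.5f int8=%d restored=%.5f error=%.5f\n",
			x, v, restored, math.Abs(x-restored))
	}
}
```

Within the covered range the error per value is at most half a step, but small values lose a large fraction of their precision, and those errors accumulate across layers. That is the usual reason to compare INT8 against FP16 on your own data before settling on one.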

How can I run FP16 in yolov5 cpp multithread? Or does this project not support it? I want to compare the speed and accuracy of detection.

The multithreaded code doesn’t, but the rknn_model_zoo example code supports it.

Okay,

Thanks! I’ll check it

Is there any equivalent C++ code? @3djelly, did you observe that in some cases the same ID is created for a person if he comes back again? Isn’t that an issue?

That’s an interesting subject. I am back to the NPU and 60 FPS.

This is exactly what I need. Same person back again, same ID.

Did you use Go or C++?

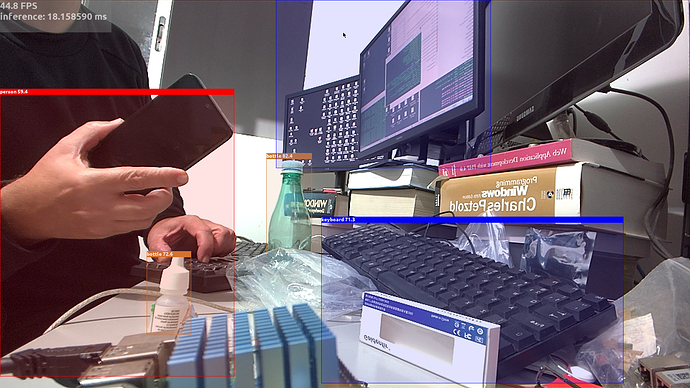

Here is a screenshot without a thread pool and without OpenCV.

I’m almost sure I could get 16 ms inference with the previous kernel…

While trying to rewrite the thread pool in C, or to use someone else's pool, to take advantage of parallelism, I noticed that having the renderer (SDL2) outside the main thread drops the rate below 40 FPS.

Maybe the main thread has priority. I need to learn a few more things…

@avaf I can see that sometimes IDs change EVEN ON THE SAME FRAME. It looks like ByteTrack changes the ID based on changes in how the person walks. Isn’t that the wrong behavior?

Does no OpenCV mean SDL2?

I don’t have a strong AI background, but I think it’s wrong.

Yes. Rendering is expensive; maybe you can get hardware acceleration in OpenCV (have you tried something like that?).

My focus now is to get something near 50 FPS and then move on to test ByteTrack and the other nice things done with Go, but in C…